Google celebrated its 20th anniversary and outlined the next chapter of Search, which will include information relevant to your interests, more visual content and more.

Activity cards and collections

Many searches are part of longer sessions that span multiple days, with people coming back to Search to find the latest updates on a topic or to explore the range of content available. For example, you might be planning a trip and searching for information about a destination over the course of a month.

Google says that the updated Search will help you resume tasks where you left off, keep track of ideas and content that you found useful, and get relevant suggestions of things to explore next.

A new activity card will help you pick up where you left off in Search. When you revisit a query related to a task you started in the past, Google will show you a card with relevant pages you've already visited and previous queries you've made on this topic. This helps you retrace your steps when you can't remember which sites had the useful information you found earlier.

Google says that this card won't appear for every search, and you'll have full control over it: you can remove results from your history, pause the card, or choose not to see it at all. The new activity card will be available in Search later this year.

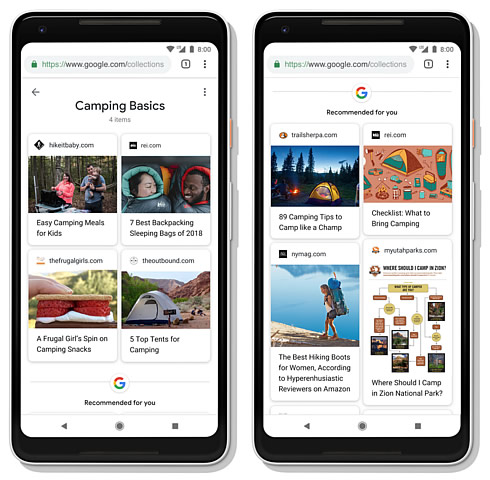

Another way to navigate long search journeys more easily is to add content to Collections. Collections in Search help you keep track of content you've visited, such as a website, article or image, and quickly get back to it later.

Now, with an improved Collections experience, you can add content from an activity card directly to Collections. This makes it even easier to keep track of and organize the content you want to revisit.

Google has also added content suggestions to help you explore topics further, based on the other content you've saved and things you've searched for. The company will start rolling out this new Collections experience later this fall.

In addition, the search giant is introducing a new way of dynamically organizing search results that helps you determine what information to explore next.

Rather than presenting information within a set of predetermined categories, Google can intelligently show the subtopics that are most relevant to what you're searching for and make it easy to explore information from the web, all with a single search.

So if you're searching for pugs, for example, you'll now see tabs for the most common and relevant subtopics, like breed characteristics and names, right at the top. But if you search for something else, even a different kind of dog like Yorkshire Terriers, you'll see options like grooming tips and breed history.

Google says a key aspect of this feature is that it stays fresh and learns over time. As new information is published to the web, these tabs are updated to reflect what's most relevant to the topic.

To enable all of these updates, Search has to understand interests and how they progress over time. So Google has taken the existing Knowledge Graph, which understands connections between people, places, things and facts about them, and added a new layer called the Topic Layer. It is engineered to deeply understand a topic space and how interests can develop over time as familiarity and expertise grow.

The Topic Layer is built by analyzing all the content that exists on the web for a given topic and identifying hundreds or thousands of subtopics. For these subtopics, Google can identify the most relevant articles and videos: the ones that have proven to be evergreen and continually useful, as well as fresh content on the topic. Google then looks at patterns to understand how these subtopics relate to each other, so it can more intelligently surface the type of content you might want to explore next.

Discover new information without search queries

Last year Google introduced the Google feed to surface relevant content to you, even when you're not searching. Today, the company is launching an update to this experience, including a new name, a new look and a new set of features.

The Google feed is now called "Discover".

New topic headers explain why you're seeing a particular card in Discover, and whenever a topic catches your eye, you can dive deeper to explore more on that topic.

Next to each topic name is a Discover icon, which you'll also start to see in Search for an ever-growing set of topics. You can tap "Follow" to start seeing more about that topic in your experience.

In addition to this new look, you'll also see new types of content in Discover. You'll find more videos and fresh visual content, as well as evergreen content: articles and videos that aren't new to the web but are new to you.

For example, when you're planning your next trip, Discover might show an article with the best places to eat or sights to see. Suddenly, a travel article published three months ago is timely for you. This can also be useful as you're taking up a new hobby or going deeper on a long-time interest. Using the Topic Layer in the Knowledge Graph, Discover can predict your level of expertise on a topic and help you further develop those interests.

More context and control

Because Discover is all about you and your interests, there are now even more ways to customize what you see.

Tap the control icon on a card to indicate that you want more or less content on that card's topic. You'll continue to see content from a range of sources on any given topic.

When it comes to news, Discover uses the same technology as Full Coverage in Google News to bring you a variety of perspectives on the latest news.

With this redesign, Discover will also be more useful to people who speak multiple languages. You might prefer recipes in Spanish and sports news in English, and you'll see content in your preferred language for each interest.

Google is starting with support for English and Spanish in the U.S. and will expand to more languages and countries soon.

Discover is coming to google.com on all mobile browsers.

Making visual content more useful in Search

Google is introducing a range of new features that use AI to make your search experience more visual and to make the Google Images experience even more powerful.

Earlier this year Google worked with the AMP Project to announce AMP stories, an open source library that makes it easy for anyone to create a story on the open web. Publishers are experimenting with this format, giving people a more visual way to get information from Search and News. To help people discover these visual stories, Google will also begin showing this content in Google Images and Discover.

Now Google is beginning to use AI to intelligently construct AMP stories and surface this content in Search. Google is starting today with stories about notable people, like celebrities and athletes, providing a glimpse into facts and important moments from their lives in a rich, visual format. This format lets you tap through to the articles for more information and provides a new way to discover content from the web.

Using computer vision, Google is now able to deeply understand the content of a video and help you quickly find the most useful information in a new experience called featured videos.

Imagine you're planning a hiking trip to Zion National Park, and you want to check out videos of what to expect and ideas for sites to visit. Since you've never been there, you might not know which specific landmarks to look for when mapping out your trek.

With featured videos, Google takes its deep understanding of the topic space (in this case, the most important landmarks in the park) and shows the most relevant videos for those subtopics. For Zion National Park, you might see a video for each attraction, like Angels Landing or the Narrows.

Google also announced several new features for Google Images.

Over the last year, Google overhauled the Google Images algorithm to rank results that have both great images and great content on the page. For starters, the authority of a web page is now a more important signal in the ranking. If you're doing a search for DIY shelving, the site behind the image is now more likely to be a site related to DIY projects. Google also prioritizes fresher content.

Also, it wasn't long ago that if you visited an image's web page, it might be hard to find the specific image you were looking for when you got there. Google now prioritizes sites where the image is central to the page, and higher up on the page. So if you're looking to buy a specific pair of shoes, a product page dedicated to that pair of shoes will be prioritized above, say, a category page showing a range of shoe styles.

Starting this week, Google will also show more context around images, including captions that show you the title of the webpage where each image is published. Google will also suggest related search terms at the top of the page for more guidance.

Explore within an image using AI with Lens in Google Images

Google launched Google Lens last year to help you do more with what you see. People are already using it in their camera and on their photos to find items in an outfit they like, learn more about landmarks, or identify that cute dog in the park. In the coming weeks, Google will bring Lens to Google Images to help you explore and learn more about visual content you find during your searches.

Lens' AI technology analyzes images and detects objects of interest within them. If you select one of these objects, Lens will show you relevant images, many of which link to product pages so you can continue your search or buy the item you're interested in. On a mobile device, Lens will also let you "draw" with your finger on any part of an image, even if it's not preselected by Lens, to trigger related results and dive even deeper into what's in your image.
