Imagination Technologies announced a new generation of PowerVR GPUs that raises the bar on graphics and compute in cost-sensitive devices. The company also released a new category of IP: the PowerVR 2NX hardware neural network accelerator.

PowerVR Series9XE and Series9XM GPUs

The new PowerVR GPUs bring capable graphics even to tightly constrained budgets, giving SoC developers a flexible GPU family with the right level of performance to help products stand out at any price point.

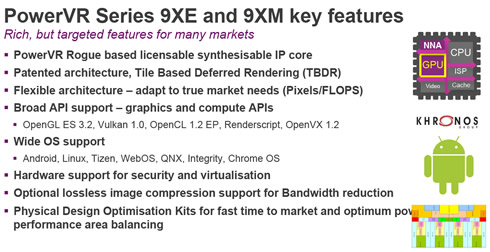

Imagination says that the Series9XM GPUs, with increased compute density (GFLOPS/mm2), represent "the industry's best graphics cores" for compute and gaming on devices such as gaming set-top boxes, mid-range smartphones and tablets, and automotive infotainment systems, with the ability to scale to 4K and beyond.

Both new families also benefit from improvements in the memory subsystem that reduce bandwidth by as much as 25% compared with previous generations. Other new features common to the 9XE and 9XM families include a new MMU, which allows a greater address range, and standard support for 10-bit YUV across the range without increasing area.

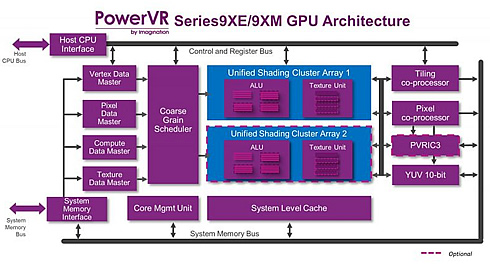

Imagination is initially rolling out several IP cores in each family, offering multiple ALU and texture performance balance points, coupled to single and multi-cluster designs.

Highlights:

Performance/mm2

- 9XE GPUs provide improved gaming performance while maintaining the same fillrate density as the previous generation

- Imagination claims that the 9XM GPUs use several new and enhanced architectural elements to achieve up to 70% better performance density than the competition, and up to 50% better than the previous 8XEP generation

- Bandwidth savings of up to 25% over the previous generation GPUs through architectural enhancements including parameter compression and tile grouping

- Memory system improvements: 36-bit addressing for improved system integration, improved burst sizes for efficient memory accesses, and enhanced compression capabilities

- Extremely low power consumption with Imagination's Tile Based Deferred Rendering (TBDR) technology

- Support for hardware virtualization and Imagination's OmniShield multi-domain security

- Support for Khronos graphics APIs including OpenGL ES 3.2 and Vulkan 1.0, and for advanced compute and vision APIs such as RenderScript, OpenVX 1.1 and OpenCL 1.2 EP

- Optional support for PVRIC3, the latest PowerVR lossless image compression technology for optimal system integration efficiency.
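The 36-bit addressing mentioned in the list above is easy to quantify: each extra address bit doubles the reachable space, so 36 bits cover 64 GiB compared with 4 GiB for 32-bit addressing. The short Python sketch below just performs that arithmetic; it assumes byte-addressable memory, which the announcement does not state explicitly.

```python
GIB = 2 ** 30  # bytes in one gibibyte

def addressable_bytes(address_bits: int) -> int:
    """Bytes reachable with the given address width, assuming byte addressing."""
    return 2 ** address_bits

for bits in (32, 36):
    print(f"{bits}-bit addressing: {addressable_bytes(bits) // GIB} GiB")
```

The four extra bits multiply the address space by 2⁴ = 16.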

Availability

Series9XE GPU IP cores are available for licensing now in configurations including (PPC = pixels per clock):

- 1 PPC with 16 FP32 FLOPS/clock (GE9000)

- 2 PPC with 16 FP32 FLOPS/clock (GE9100)

- 4 PPC with 32 FP32 FLOPS/clock (GE9210)

- 8 PPC with 64 FP32 FLOPS/clock (GE9420)

Series9XM GPU IP cores are available for licensing now in configurations including:

- 4 PPC with 64 FP32 FLOPS/clock (GM9220)

- 4 PPC with 128 FP32 FLOPS/clock (GM9240)

- 8 PPC with 128 FP32 FLOPS/clock (GM9240)
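The FLOPS/clock figures above translate into peak FP32 throughput once a clock frequency is fixed: peak GFLOPS = FLOPS/clock × clock in GHz. The announcement gives no GPU clock speeds, so the 800 MHz used below is a purely hypothetical value chosen for illustration; the core names and per-clock figures are copied from most of the configurations listed above. The result is a theoretical peak that ignores utilisation and memory limits.

```python
# (core, pixels per clock, FP32 FLOPS per clock), as listed above
CONFIGS = [
    ("GE9000", 1, 16),
    ("GE9100", 2, 16),
    ("GE9210", 4, 32),
    ("GE9420", 8, 64),
    ("GM9220", 4, 64),
    ("GM9240", 4, 128),
]

HYPOTHETICAL_CLOCK_MHZ = 800  # assumption for illustration, not from the announcement

def peak_gflops(flops_per_clock: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 GFLOPS: FLOPS per clock multiplied by clock rate."""
    return flops_per_clock * clock_mhz / 1000

for name, ppc, flops in CONFIGS:
    gflops = peak_gflops(flops, HYPOTHETICAL_CLOCK_MHZ)
    print(f"{name}: {ppc} PPC, {gflops:.1f} peak FP32 GFLOPS")
```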

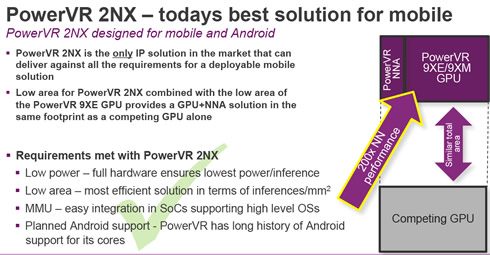

PowerVR 2NX NNA for neural net acceleration

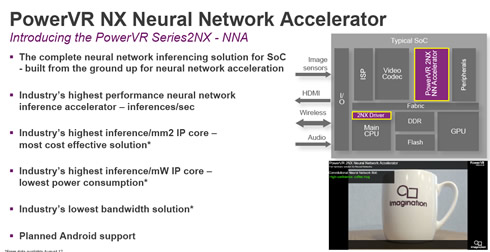

PowerVR is also adding to its history with an entirely new category of IP: the PowerVR 2NX hardware neural network accelerator. The 2NX Neural Network Accelerator (NNA) is a full hardware solution built from the ground up to support many neural net models and architectures, as well as machine learning frameworks such as TensorFlow (from Google) and Caffe, with high performance and low power consumption.

Neural networks are, of course, becoming ever more prevalent and are found in a wide variety of markets. They tailor your social media feed to match your interests, enhance your photos to make your pictures look better, and power the feature detection and eye tracking in AR/VR headsets.

To do their work, neural networks first need to be trained, which is usually done 'offline' on powerful server hardware. Running the trained network to recognise patterns or objects in new data is known as inference, and this is typically done in real time.

Of course, the bigger the network, the greater its computational needs, which demands new levels of performance, especially in mobile use cases. While neural network inference can run on a CPU, it is typically deployed on GPUs to take advantage of their highly parallel design, which can process neural nets orders of magnitude faster. However, to reach the next level of performance within strict power budgets, dedicated hardware for accelerating neural network inference is required.

Early desktop processors didn't even have a maths co-processor for accelerating the floating-point calculations on which applications such as games depend so heavily, but since the 1980s one has been a standard part of the CPU. In the 1990s, CPUs gained their own onboard memory caches to boost performance, and later still, GPUs were integrated alongside them. This was followed in the 2010s by image signal processors (ISPs) and hardware codec support for smooth video playback. Now it's the turn of neural networks to get their own optimised silicon.

In many cases, inference could run on powerful hardware in the cloud, but there are several reasons to move it to edge devices. Where a fast response is required, running neural networks over the network simply isn't practical because of latency. Running on-device also eliminates the security issues that can arise from sending data off the device. And because cellular connectivity, whether 3G, 4G or 5G, is not always available, dedicated local hardware is more reliable, as well as offering greater performance and, crucially, much lower power consumption.

So what makes the 2NX hardware different from other neural network solutions, such as DSPs and GPUs?

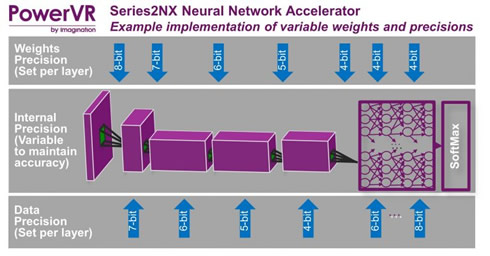

According to Imagination, the first factor is the 2NX's ultra-low power consumption, which draws on the company's expertise in designing for mobile platforms. The second is flexible bit-depth support, available, crucially, on a per-layer basis. A neural network is commonly trained at full 32-bit precision, but running inference at that precision would require a very large amount of bandwidth and consume a lot of power, which would be prohibitive within mobile power envelopes; even if the performance were there, battery life would take a huge hit.

To deal with this, the 2NX allows the bit depth to be varied for both weights and data, making it possible to maintain high inference accuracy while drastically reducing bandwidth requirements, and with them power consumption. Imagination claims that its new hardware is the only solution on the market to support bit depths from 16-bit (for use cases that mandate it, such as automotive) down to 4-bit, and everything in between.
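Why bit depth matters so much for bandwidth comes down to simple arithmetic: the bytes fetched for a layer's weights scale linearly with the bits stored per weight. The Python sketch below assumes a hypothetical layer with one million weights (not a figure from Imagination) and ignores activations, caching and any compression.

```python
NUM_WEIGHTS = 1_000_000  # hypothetical layer size, for illustration only

def weight_bytes(num_weights: int, bits_per_weight: int) -> int:
    """Bytes needed to store (and fetch) the weights at a given bit depth."""
    return (num_weights * bits_per_weight + 7) // 8  # round up to whole bytes

baseline = weight_bytes(NUM_WEIGHTS, 32)
for bits in (32, 16, 8, 4):
    size = weight_bytes(NUM_WEIGHTS, bits)
    print(f"{bits:>2}-bit: {size / 1e6:.2f} MB ({size / baseline:.1%} of 32-bit)")
```

Dropping from 32-bit to 8-bit cuts the weight traffic to a quarter, and going to 4-bit halves it again.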

Unlike other solutions, the 2NX does not apply reduced bit depth as a blunt, brute-force setting across the whole network. Instead, the bit depth can be varied on a per-layer basis for both weights and data, so developers can fully optimise the performance of their networks.
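To make per-layer bit depth concrete, the sketch below applies generic symmetric linear quantization to two layers, each with its own bit depth, and reports the reconstruction error. This is a textbook technique used here for illustration only; Imagination has not published how the 2NX quantizes weights or data, and the layer names, random weights and bit-depth assignments are all hypothetical.

```python
import random

def quantize(values, bits):
    """Symmetric linear quantization to signed integers of the given bit depth.

    Returns the integer codes and the scale needed to dequantize them.
    """
    if bits < 2:
        raise ValueError("need at least 2 bits for signed symmetric quantization")
    qmax = 2 ** (bits - 1) - 1  # e.g. 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]

def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

random.seed(0)
# Hypothetical layers, each assigned its own bit depth
layers = {
    "conv1": ([random.gauss(0, 0.1) for _ in range(1000)], 8),
    "conv2": ([random.gauss(0, 0.1) for _ in range(1000)], 4),
}

for name, (weights, bits) in layers.items():
    codes, scale = quantize(weights, bits)
    error = mean_abs_error(weights, dequantize(codes, scale))
    print(f"{name}: {bits}-bit, mean abs error {error:.5f}")
```

Assigning a lower bit depth only to layers that tolerate the extra error is what per-layer control makes possible; a single network-wide setting would have to suit the most sensitive layer.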

In practice, Imagination claims that the 2NX requires as little as 25% of the bandwidth of competing solutions. For use cases where it is appropriate, moving from 8-bit to 4-bit precision lets the 2NX consume 69% of the power it needs at 8-bit, with less than a 1% drop in accuracy.

In terms of raw performance, Imagination says a single core of its initial PowerVR 2NX IP, running at a conservative 800MHz, offers up to 2048 MACs/cycle (multiply-accumulate operations per cycle, the industry-standard performance indicator), which equates to over 3.2 trillion operations per second. The 2NX is highly scalable, and much higher performance can be achieved with multiple cores if required.
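The 3.2 trillion figure follows from the MAC rate: each multiply-accumulate is conventionally counted as two operations (a multiply and an add), so 2048 MACs/cycle at 800 MHz gives 2048 × 800 × 10⁶ × 2 ≈ 3.28 × 10¹² operations per second. The Python sketch below reproduces that arithmetic; the two-operations-per-MAC convention is the usual industry one and is assumed here rather than stated in the announcement.

```python
def tops(macs_per_cycle: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak tera-operations per second, counting each MAC as a multiply plus an add."""
    return macs_per_cycle * clock_hz * ops_per_mac / 1e12

peak = tops(2048, 800e6)
print(f"{peak:.3f} TOPS")  # 3.277 TOPS
```

Scaling to multiple cores multiplies this peak by the core count, although real throughput also depends on memory bandwidth and how well a network maps onto the hardware.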

Imagination says the 2NX delivers this performance in a very small area, giving it the highest inference/mm2 in the industry. In fact, according to the company, a PowerVR GPU and NNA used together in a typical SoC take up less silicon area than a competitor's GPU on its own. A GPU is not required to use the 2NX, however, and a CPU is needed only to run the drivers.

The 2NX IP is also available with an optional memory management unit (MMU), enabling it to be used with Android or other complex modern operating systems without additional silicon or complex software integration.

At launch, the 2NX supports the major neural network frameworks, including Caffe and TensorFlow; support for others will be investigated on an ongoing basis.

Availability

The PowerVR 2NX NNA is available for licensing now.