Xilinx Unveils Versal SoC, Ultra-fast AI Accelerator Cards

Xilinx CEO Victor Peng today unveiled Versal, the first adaptive compute acceleration platform (ACAP), along with the powerful Alveo data center and AI accelerator cards.

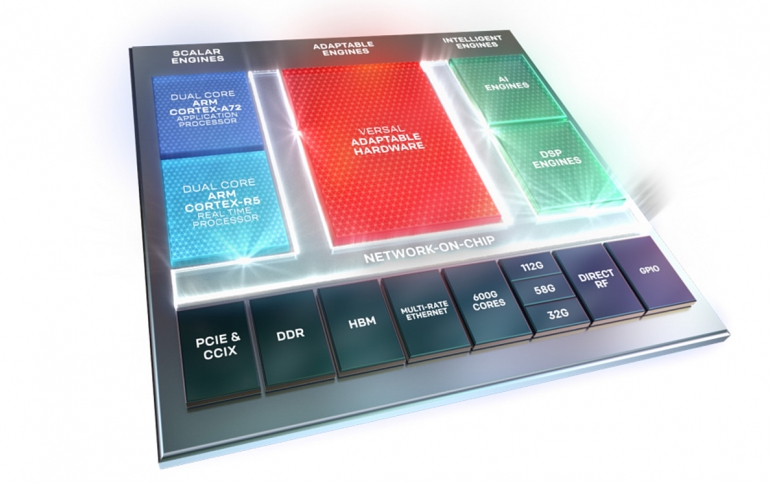

Previously known as Everest architecture, Versal ACAPs combine Scalar Processing Engines, Adaptable Hardware Engines, and Intelligent Engines with memory and interfacing technologies to deliver powerful heterogeneous acceleration for any application. But most importantly, the Versal ACAP's hardware and software can be programmed and optimized by software developers, data scientists, and hardware developers alike, enabled by a host of tools, software, libraries, IP, middleware, and frameworks that enable industry-standard design flows.

Built on TSMC's 7-nanometer FinFET process technology, the Versal portfolio is the first platform to combine software programmability with domain-specific hardware acceleration and the adaptability. The portfolio includes six series of devices architected to deliver scalability and AI inference capabilities for a host of applications across different markets, from cloud to networking to wireless communications to edge computing and endpoints.

Versal shrinks the size of a central FPGA block to make room for more ARM, DSP, inference and I/O blocks. Xilinx positioned Versal as the start of a broad new family of standard products. They aim to outperform CPUs and GPUs on a wide range of data center, telecom, automotive and edge applications and increasingly support programming in high-level languages such as C and Python.

The portfolio includes the Versal Prime series, Premium series and HBM series, which are designed to deliver industry-leading performance, connectivity, bandwidth, and integration for the most demanding applications. It also includes the AI Core series, AI Edge series, and AI RF series, which feature the AI Engine. The AI Engine is a new hardware block designed to address the emerging need for low-latency AI inference for a wide variety of applications and also supports advanced DSP implementations for applications like wireless and radar. It is tightly coupled with the Versal Adaptable Hardware Engines to enable whole application acceleration, meaning that both the hardware and software can be tuned.

The Versal AI Core series delivers the portfolio's highest compute and lowest latency, enabling breakthrough AI inference throughput and performance. The series is optimized for cloud, networking, and autonomous technology, offering the highest range of AI and workload acceleration. The Versal AI Core series has five devices, offering 128 to 400 AI Engines. The series includes dual-core Arm Cortex-A72 application processors, dual-core Arm Cortex-R5 real-time processors, 256KB of on-chip memory with ECC, more than 1,900 DSP engines optimized for high-precision floating point with low latency. It also incorporates more than 1.9 million system logic cells combined with more than 130Mb of UltraRAM, up to 34Mb of block RAM, and 28Mb of distributed RAM and 32Mb of new Accelerator RAM blocks, which can be directly accessed from any engine and is unique to the Versal AI series' - all to support custom memory hierarchies. The series also includes PCIe Gen4 8-lane and 16-lane, and CCIX host interfaces, power-optimized 32G SerDes, up to 4 integrated DDR4 memory controllers, up to 4 multi-rate Ethernet MACs, 650 high-performance I/Os for MIPI D-PHY, NAND, storage-class memory interfacing and LVDS, plus 78 multiplexed I/Os to connect external components and more than 40 HD I/Os for 3.3V interfacing. All of this is interconnected by a network-on-chip (NoC) with up to 28 master/slave ports, delivering multi-terabit per-second bandwidth at low latency combined with power efficiency and native software programmability. The full product table is now available.

The Versal Prime series is designed for broad applicability across multiple markets and is optimized for connectivity and in-line acceleration of a diverse set of workloads. This mid-range series is made up of nine devices, each including dual-core Arm Cortex-A72 application processors, dual-core Arm Cortex-R5 real-time processors, 256KB of on-chip memory with ECC, more than 4,000 DSP engines optimized for high-precision floating point with low latency. It also incorporates more than 2 million system logic cells combined with more than 200Mb of UltraRAM, greater than 90Mb of block RAM, and 30Mb of distributed RAM to support custom memory hierarchies. The series also includes PCIe Gen4 8-lane and 16-lane, and CCIX host interfaces, power-optimized 32 gigabits-per-second SerDes and mainstream 58 gigabits-per-second PAM4 SerDes, up to 6 integrated DDR4 memory controllers, up to 4 multi-rate Ethernet MACs, 700 high-performance I/Os for MIPI D-PHY, NAND, and storage-class memory interfaces and LVDS, plus 78 multiplexed I/Os to connect external components, and greater than 40 HD I/O for 3.3V interfacing. All of this is interconnected by a network-on-chip (NoC) with up to 28 master/slave ports, delivering multi-terabits per-second bandwidth at low latency combined with power efficiency and native software programmability.

Xilinx released a handful of benchmarks based on simulations showing initial 7nm chips can beat 16-12nm CPUs and GPUs.

The chips span 5-150W in power consumption. They will support 0.7, 0.78 and 0.88V. Prices will align with traditional Xilinx FPGAs, although they are likely to get more competitive at the low end and for hot use cases such as self-driving cars.

In a networking simulation, a Prime series delivered 150 million packets/second on an open virtual switch application versus 8.68 million for a traditional CPU. The AI Core version delivered 43x the inference performance on convolutional neural nets of a CPU and 2x of a GPU in high batch sizes.

At sub-two millisecond latencies targeting edge apps, AI Core beat a GPU by 8x in performance simulations and 4x in throughput at 75W, Xilinx claimed. The AI parts are optimized to run a mix of inference and other applications, not just deep learning.

The Versal portfolio is enabled by a development environment with a software stack including drivers, middleware, libraries and software framework support. More details on the software programming tools will be made available next year.

The Versal Prime series and Versal AI Core series will be generally available in the second half of 2019.

Data Center and AI Accelerator Cards

Xilinx also today launched Alveo, a portfolio of powerful accelerator cards designed to increase performance in industry-standard servers across cloud and on-premise data centers.

The Alveo U200 and Alveo U250 are powered by the Xilinx UltraScale+ FPGA and are available now for production orders.

For machine learning, the Alveo U250 increases real-time inference throughput by 20X versus high-end CPUs, and more than 4X for sub-two-millisecond low-latency applications versus fixed-function accelerators like high-end GPUs, according to Xilinx. Moreover, Alveo accelerator cards reduce latency by 3X versus GPUs, providing a significant advantage when running real-time inference applications. And some applications like database search can be radically accelerated to deliver more than 90X, versus CPUs.

Xilinx Alveo U200 and U250 accelerator cards are available today starting at $8,995 (USD) and can be purchased today.