AMD Announces Radeon Instinct GPU Accelerators for Deep Learning

AMD is taking the wraps off its latest combined hardware and software initiative for the server market. Radeon Instinct is aimed directly at the deep learning/machine learning/neural networking market.

Deep learning has the potential to be a very profitable market for a GPU manufacturer such as AMD, and as a result the company has put together a plan for the next year to break into that market. That plan is the Radeon Instinct initiative, a combination of hardware (Instinct) and an optimized software stack to serve the deep learning market. Rival NVIDIA is already heavily invested in the deep learning market, and it has paid off for the company as its datacenter revenues have exploded.

The Instinct cards succeed AMD's current FirePro S series cards. They are passively cooled cards geared for large-scale server installations, offered across a range of power and performance options.

"Radeon Instinct is set to dramatically advance the pace of machine intelligence through an approach built on high-performance GPU accelerators, and free, open-source software in MIOpen and ROCm," said AMD President and CEO, Dr. Lisa Su. "With the combination of our high-performance compute and graphics capabilities and the strength of our multi-generational roadmap, we are the only company with the GPU and x86 silicon expertise to address the broad needs of the datacenter and help advance the proliferation of machine intelligence."

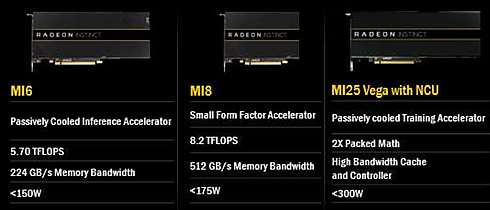

AMD is announcing 3 cards today, all 3 of which tap different AMD GPUs and are named after their expected performance levels.

The Radeon Instinct MI6 is a Polaris 10 card. At 5.7 TFLOPS (FP16 or FP32) it will draw under 150W.

AMD will be keeping their 2015 Fiji GPU around for the second card, the Instinct MI8. It offers higher throughput and increased memory bandwidth compared to the Instinct MI6, for a small increase in power consumption, with the drawback of Fiji's 4GB VRAM limitation.

The MI6 and MI8 will be going up against NVIDIA's P4 and P40 accelerators.

The Instinct family also includes the powerful MI25, which is based on AMD's forthcoming Vega GPU family. The passively cooled card is rated for sub-300W operation, and AMD's performance projections make it clear that the company is targeting 25 TFLOPS FP16 (12.5 TFLOPS FP32) performance.

According to AMD, Vega supports packed math formats for FP16 operations.
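The idea behind a packed FP16 format can be illustrated in a few lines of Python. This is only a sketch of the storage layout, with two half-precision values sharing one 32-bit word. It says nothing about how Vega's hardware actually implements packed math, and the function names are hypothetical.

```python
import struct
from typing import Tuple


def pack_fp16_pair(a: float, b: float) -> int:
    """Pack two half-precision floats into one 32-bit word (little-endian)."""
    raw = struct.pack("<2e", a, b)  # two IEEE 754 binary16 values, 4 bytes total
    return struct.unpack("<I", raw)[0]


def unpack_fp16_pair(word: int) -> Tuple[float, float]:
    """Recover the two half-precision floats stored in a 32-bit word."""
    raw = struct.pack("<I", word)
    return struct.unpack("<2e", raw)


word = pack_fp16_pair(1.5, -2.0)
print(hex(word), unpack_fp16_pair(word))

# Assumption: one FP32 lane processes two packed FP16 values per operation,
# which is consistent with the quoted 12.5 TFLOPS FP32 / 25 TFLOPS FP16 targets.
fp32_tflops = 12.5
fp16_tflops = 2 * fp32_tflops
```

Because two FP16 operands fit where one FP32 operand would, a design that operates on both halves at once can in principle double its FP16 rate, which matches the 2:1 ratio in AMD's MI25 targets.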

As AMD's sole training card, the MI25 will be going up against NVIDIA's flagship accelerator, the Tesla P100.

Supermicro announced a rack-mounted system that can support three of the new AMD cards. Inventec announced two systems that support up to four or 16 of the MI25 Vega boards on one rack node, with PCI Express slots that can also hold FPGAs or solid-state flash drives. Inventec also showed a rack that could contain 120 of the Vega cards for 3 petaflops of GPU computing.
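The 3-petaflop figure for the 120-card rack lines up with the MI25's 25 TFLOPS target, assuming it refers to peak FP16 throughput (the article does not say which precision). A quick check, with hypothetical names:

```python
CARDS_PER_RACK = 120       # Inventec rack, per the article
MI25_FP16_TFLOPS = 25.0    # AMD's stated FP16 target for the MI25


def rack_petaflops(cards: int, tflops_per_card: float) -> float:
    """Aggregate peak throughput of a rack, in petaflops (1 PFLOPS = 1000 TFLOPS)."""
    return cards * tflops_per_card / 1000.0


print(rack_petaflops(CARDS_PER_RACK, MI25_FP16_TFLOPS))
```

This is a theoretical peak. Sustained throughput on real workloads would be lower.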

In April, NVIDIA started shipping its own design for a four-way cluster of its high-end GPUs, linked by its proprietary NVLink interconnect, which offers higher throughput and lower latency than PCIe. Its CUDA language for GPU computing has also been widely used for years.

Last month, Intel detailed plans to use its Xeon and Xeon Phi processors as well as acquired processors from Nervana and Movidius to cover a range of inference and training jobs.

AMD also plans to support open interconnects such as CCIX and Gen-Z to link to FPGA accelerators and storage-class memories, respectively. Meanwhile, the company supports PCIe and its single-root I/O virtualization (SR-IOV) standard.

Along with the new hardware offerings, AMD announced MIOpen, a free, open-source library for GPU accelerators intended to enable high-performance machine intelligence implementations, and new, optimized deep learning frameworks on AMD's ROCm software to build the foundation of the next evolution of machine intelligence workloads.

Built on top of MIOpen will be updated versions of the major deep learning frameworks, including Caffe, Torch 7, and TensorFlow.

Finally, along with creating a full hardware and software ecosystem for the Instinct product family, AMD is also going beyond individual cards and out to servers.

The basis for this effort is AMD's upcoming Naples platform, the server platform based on Zen. Besides offering a potentially massive performance increase over AMD's Bulldozer server platform, Naples lets AMD flex their muscle in heterogeneous applications, tapping into their previous experience with HSA. This is a bit more forward looking - Naples doesn't have an official launch date yet.

Naples is expected to offer at least 64 PCIe lanes per CPU - enough lanes to give up to 4 Instinct cards a full, dedicated PCIe x16 link to the host CPU and the other cards. For installations focusing on GPU-heavy workloads, this gives AMD a distinct advantage over Intel since it means they can drive 4 Instinct cards off of a single CPU, making it a cheaper option than Xeon configurations.
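The lane arithmetic behind that claim is simple enough to sketch. The 64-lane figure comes from the article. The 40-lane comparison is an assumption added here for illustration, based on contemporary Xeon E5 parts, and it ignores lanes reserved for storage or networking.

```python
LANES_PER_X16_LINK = 16


def full_x16_cards(cpu_lanes: int, lanes_per_card: int = LANES_PER_X16_LINK) -> int:
    """Number of cards that can each get a dedicated full-width link from one CPU."""
    return cpu_lanes // lanes_per_card


naples_cards = full_x16_cards(64)   # "at least 64 PCIe lanes per CPU"
other_cards = full_x16_cards(40)    # assumed 40-lane CPU, not from the article

print(naples_cards, other_cards)
```

Under these assumptions a single Naples CPU hosts four x16 cards where a 40-lane CPU hosts two, so the latter would need a second socket or a PCIe switch to match.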

The Radeon Instinct products are not set to ship until H1 of 2017.