AMD Takes on Intel With Epyc Server SoCs For the Data Center

AMD today officially launched the EPYC 7000 series of high-performance datacenter processors. Epyc is a multi-chip SoC built on the same 'Zen' architecture as AMD's Ryzen PC processors, and offers up to 32 cores.

AMD says that, with up to 32 high-performance "Zen" cores and what it calls an unparalleled feature set, its record-setting EPYC design delivers greater performance than the competition across a full range of integer, floating-point, memory-bandwidth, and I/O benchmarks and workloads.

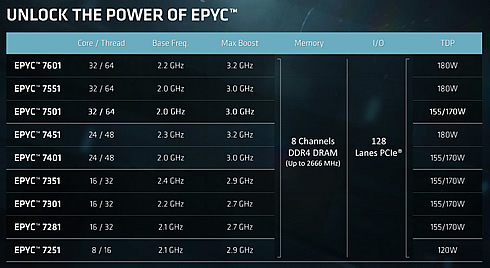

AMD announced nine Epyc processors, using its Zen x86 core to deliver 23-70% more performance than Intel's 14-nm Broadwell-based Xeons across a range of benchmarks. All support eight channels of DDR4-2666 memory and 128 PCI Express Gen 3 lanes, compared with four memory channels and 40 PCIe lanes on a typical Broadwell Xeon.
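The memory-channel gap is easier to see as theoretical peak bandwidth. The sketch below is a back-of-the-envelope calculation, assuming a 64-bit (8-byte) data bus per DDR4 channel and treating DDR4-2666 as 2,666 million transfers per second. The four-channel line is a hypothetical part run at the same memory speed, not a specific Xeon SKU, and `peak_bandwidth_gbs` is a hypothetical helper. Sustained bandwidth in real systems is lower than these peaks.

```python
# Theoretical peak DRAM bandwidth = channels x transfer rate x bytes per transfer.
# Assumes a 64-bit (8-byte) data bus per DDR4 channel, ignoring ECC bits,
# and treats DDR4-2666 as 2,666 million transfers per second (MT/s).

BYTES_PER_TRANSFER = 8  # 64-bit DDR4 data bus per channel


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Hypothetical helper: theoretical peak bandwidth in GB/s (10**9 bytes/s)."""
    bytes_per_s = channels * mega_transfers_per_s * 1_000_000 * BYTES_PER_TRANSFER
    return bytes_per_s / 1e9


epyc = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=2666)
# Hypothetical four-channel part at the same DDR4-2666 speed, for comparison only.
four_channel = peak_bandwidth_gbs(channels=4, mega_transfers_per_s=2666)

print(f"8 channels: {epyc:.1f} GB/s")
print(f"4 channels: {four_channel:.1f} GB/s")
```

At equal memory speed, doubling the channel count doubles the theoretical peak, which is the point of AMD's eight-channel design.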

The full EPYC stack will contain twelve processors, three of them for single-socket environments, with the remaining parts becoming available at the end of July.

The new chips span the current Xeon price range of roughly $400 to $4,000, with AMD aiming to give users more performance at existing price points. They arrive just as Intel is about to roll out its Skylake-based Xeon Scalable processors, built on a 14-nm process.

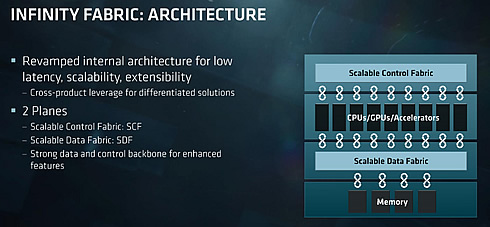

On the package are four silicon dies, each containing the same 8-core silicon used in AMD's Ryzen processors. Each die has two core complexes (CCXs) of four cores each and supports two memory channels, for a maximum of 32 cores and eight memory channels per EPYC processor.

The dies are connected by AMD's newest interconnect, Infinity Fabric, which plays a key role not only in die-to-die communication but also in processor-to-processor communication, and is also used within AMD's new Vega graphics. AMD designed Infinity Fabric to be modular and scalable so that it can support large GPUs and CPUs, and says that within a single package the fabric is overprovisioned to minimize problems with software that is not NUMA-aware.
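The package totals follow directly from the per-die figures. This minimal sketch just multiplies them out; the `EpycPackage` class is a hypothetical illustration, and the two threads per core reflects the 64-thread count AMD quotes for the 32-core part.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EpycPackage:
    """Hypothetical model of the EPYC package layout described above."""
    dies: int = 4               # silicon dies per package
    ccx_per_die: int = 2        # core complexes (CCXs) per die
    cores_per_ccx: int = 4      # cores per CCX
    channels_per_die: int = 2   # DDR4 memory channels per die
    threads_per_core: int = 2   # SMT: two hardware threads per core

    @property
    def cores(self) -> int:
        return self.dies * self.ccx_per_die * self.cores_per_ccx

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def memory_channels(self) -> int:
        return self.dies * self.channels_per_die


pkg = EpycPackage()
print(pkg.cores, pkg.threads, pkg.memory_channels)  # 32 64 8
```

Because each die owns two of the eight channels, a core reaching memory attached to another die has to cross Infinity Fabric, which is why the fabric's overprovisioning matters for software that is not NUMA-aware.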

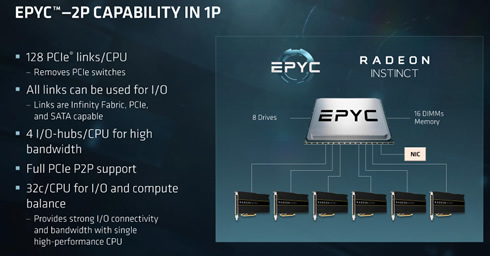

With eight memory channels and support for two DIMMs per channel, AMD quotes a maximum of 2TB of memory per socket, or 4TB in a dual-processor system. Each CPU provides 128 PCIe 3.0 lanes, enough for six GPUs at full x16 bandwidth or up to 32 NVMe drives for storage. There are also four I/O hubs per processor for additional storage support.
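The capacity and lane figures can be checked with simple arithmetic. This sketch assumes that AMD's 2TB figure implies 128GB DIMMs (2TB spread across 16 slots) and uses the common x16 link per GPU and x4 link per NVMe drive; those widths are assumptions, not AMD-stated configurations.

```python
# Per-socket memory capacity and PCIe lane budget from the figures above.
CHANNELS = 8
DIMMS_PER_CHANNEL = 2
DIMM_GB = 128       # assumption: DIMM size implied by AMD's 2 TB figure
PCIE_LANES = 128
GPU_LINK = 16       # assumption: full-bandwidth x16 link per GPU
NVME_LINK = 4       # assumption: x4 link per NVMe drive

dimm_slots = CHANNELS * DIMMS_PER_CHANNEL
per_socket_tb = dimm_slots * DIMM_GB / 1024   # 1 TB = 1024 GB here
per_system_tb = 2 * per_socket_tb             # dual-processor system

gpu_lanes = 6 * GPU_LINK                      # six full-bandwidth GPUs
nvme_drives = PCIE_LANES // NVME_LINK         # all lanes given to NVMe

print(dimm_slots, per_socket_tb, per_system_tb)         # 16 2.0 4.0
print(gpu_lanes, PCIE_LANES - gpu_lanes, nvme_drives)   # 96 32 32
```

Six x16 GPUs consume 96 of the 128 lanes, leaving 32 lanes for networking or storage on the same socket.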

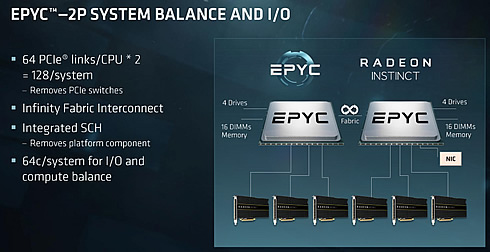

In a dual-socket arrangement, each CPU dedicates 64 of its PCIe lanes, running in Infinity Fabric mode, to the link between the two processors. The system as a whole therefore still has 128 PCIe lanes available for devices, but total memory support doubles.
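The lane accounting for one and two sockets can be written out as a small function. `usable_pcie_lanes` is a hypothetical helper that encodes only the figures given above: 128 lanes per CPU, 64 of which go to the socket-to-socket link in a two-socket system, and eight memory channels per CPU.

```python
def usable_pcie_lanes(sockets: int, lanes_per_cpu: int = 128, fabric_lanes: int = 64) -> int:
    """Hypothetical helper: PCIe lanes left for devices after inter-socket links."""
    if sockets == 1:
        return lanes_per_cpu
    if sockets == 2:
        # Each CPU gives 64 of its lanes to the Infinity Fabric socket link.
        return sockets * (lanes_per_cpu - fabric_lanes)
    raise ValueError("Epyc 7000 systems have one or two sockets")


for n in (1, 2):
    # sockets, device lanes, total memory channels (8 per CPU)
    print(n, usable_pcie_lanes(n), n * 8)
```

The device lane count stays at 128 either way, while memory channels (and thus capacity) double with the second socket.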

All CPUs will have 128 PCIe 3.0 lanes, have access to all 64MB of L3 cache, and support DDR4-2666. Every processor supports the full feature set; the only differentiators are core count, frequency, and power.

All Epyc processors run two threads per core, and they range from eight-core chips rated at 120 W and 2.1 GHz to 32-core chips rated at 180 W and 2.2 GHz. AMD says that, given the hefty complement of memory and I/O, a single-socket Epyc server may be adequate for some users who previously needed two-socket Xeon servers.

The EPYC 7601 has 32 cores and 64 threads, a base frequency of 2.2 GHz, an all-core boost of 2.7 GHz, and a maximum boost frequency of 3.2 GHz. Depending on how software is distributed across the cores, the chip should run at the maximum boost frequency when fewer than 12 cores are in use.
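To make the three frequency figures concrete, here is a deliberately simplified model of the 7601's clock behavior. It is a toy built on assumptions, not AMD's boost algorithm: it treats the roughly 12-core threshold as a hard cutoff and ignores temperature, power, and workload mix, all of which govern real clocks. `expected_clock_ghz` and its `headroom` flag are hypothetical names.

```python
# Toy model of EPYC 7601 clocks; NOT AMD's actual boost algorithm.
# Real clocks also depend on temperature, power draw and workload mix.

BASE_GHZ = 2.2
ALL_CORE_BOOST_GHZ = 2.7
MAX_BOOST_GHZ = 3.2
MAX_BOOST_CORE_LIMIT = 12  # assumed hard cutoff; the text gives roughly 12 cores
TOTAL_CORES = 32


def expected_clock_ghz(active_cores: int, headroom: bool = True) -> float:
    """Hypothetical clock (GHz) for a given number of busy cores."""
    if not 1 <= active_cores <= TOTAL_CORES:
        raise ValueError("active_cores must be between 1 and 32")
    if not headroom:
        return BASE_GHZ  # no thermal or power headroom: assume base clock
    if active_cores < MAX_BOOST_CORE_LIMIT:
        return MAX_BOOST_GHZ
    return ALL_CORE_BOOST_GHZ


for cores in (4, 11, 12, 32):
    print(cores, expected_clock_ghz(cores))
```

The model captures only the ordering of the quoted figures: lightly threaded work can reach 3.2 GHz, fully loaded chips sit near the 2.7 GHz all-core boost, and 2.2 GHz is the guaranteed floor.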

Epyc and Vega chips both use AMD's Infinity Fabric, the same link that connects the dies within an Epyc module. Infinity Fabric rivals the proprietary Omni-Path and NVLink interconnects used by Intel and Nvidia, respectively.

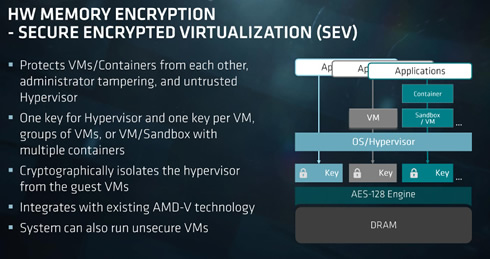

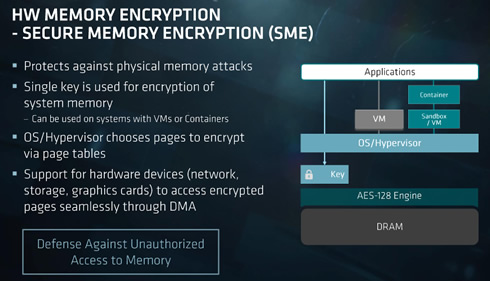

Separately, AMD described Epyc's approach to security. Every processor includes a dedicated hardware security processor for secure boot, and each memory controller has a hardware encryption engine that AMD says costs less than 2% in performance.

Epyc also lets users encrypt virtual machines without recompiling applications, a feature AMD calls Secure Encrypted Virtualization (SEV). Intel offers a similar capability through instruction-set extensions (Software Guard Extensions, or SGX) that require applications to be recompiled.

AMD showed as many as two dozen systems using Epyc 7000.

Baidu commissioned four vendors to make single-socket Epyc servers for its data centers.

Later this year, Microsoft's cloud computing group is expected to start deploying dual-socket servers with separate versions using Epyc and Xeon Skylake processors.

AMD showed 30 systems using Epyc at an event in Austin. They included Hewlett Packard Enterprise's Cloudline CL3150, a single-socket storage server for which HPE claims records in integer, floating-point, and I/O performance. Supermicro claims that it hit performance records with a dual-socket Epyc server.

Inventec showed a machine-learning system with an Epyc host and up to four Radeon Instinct MI25 GPU accelerators based on AMD's Vega core, delivering up to 100 Tflops in total. Such systems target GPU computing jobs such as neural-network training, a market that rival Nvidia has dominated to date.

Asus, Dell, Gigabyte, Lenovo, Sugon, Tyan, and Wistron also showed Epyc-based systems.

Major operating system and hypervisor developers support Epyc, including Canonical, Microsoft, Red Hat, SUSE, and VMware. Chip makers Mellanox, Samsung, and Xilinx said that they will support Epyc.

Radeon Instinct GPU server accelerators

At the event, AMD also launched the Radeon Instinct MI25, MI8, and MI6 GPU server accelerators, along with the ROCm 1.6 software platform, to improve performance and efficiency for deep learning and artificial intelligence (AI) workloads.

The Radeon Instinct MI25 is based on AMD's new Vega architecture, built on a 14nm FinFET process, and is aimed at large-scale machine intelligence and deep learning applications. The MI8 uses the high-performance, low-power Fiji GPU architecture and, AMD said, is designed for machine learning and a range of HPC applications. The MI6 is based on the Polaris architecture and is also aimed at HPC, machine learning, and edge intelligence applications. AMD will start shipping Radeon Instinct products to partners in the third quarter of 2017.