Microsoft to Showcase Advanced Spatial Computing Experiences at UIST 2019

Microsoft researchers continue to develop technologies that expand and enhance the world of spatial computing.

This week, they’re presenting their latest work in New Orleans at the ACM Symposium on User Interface Software and Technology (UIST) 2019.

The researchers have developed a method that lets people safely navigate a given real-world route, such as a daily walk to work, while seeing themselves stroll through a different VR world, such as a city of their choosing.

They have also developed a technology that leverages newly available eye tracking so that visual details in VR can be changed in real time without users detecting those changes.

Finally, researchers have invented a new haptic feedback controller that uniquely emulates the human sense of touch by meshing centuries-old and cutting-edge technologies.

DreamWalker

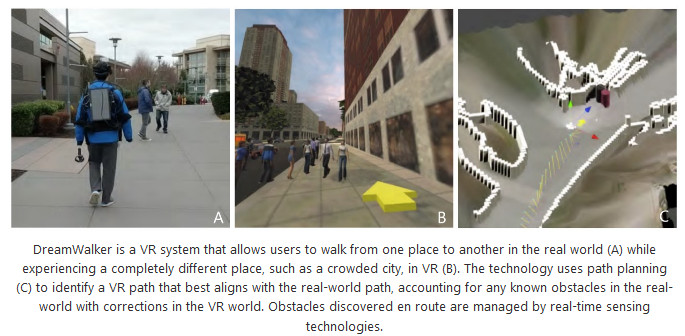

In “DreamWalker: Substituting Real-World Walking Experiences with a Virtual Reality,” Stanford University PhD student Jackie Yang, a Microsoft Research intern at the time of the work, and Microsoft researchers Eyal Ofek, Andy Wilson, and Christian Holz, who is now an ETH Zürich professor, have created a VR system that allows you to walk from one place to another in the real world while experiencing a completely different place in VR. The system adapts to a chosen route, making it possible to transform a walk to the grocery store or to a bus stop, say, into a walk through Times Square in VR.

A precursor project, VRoamer, enabled playing a VR game inside an uncontrolled indoor environment. DreamWalker takes the technology a step further: it reconciles the virtual city tour with the actual path the user will travel in the real world, which may differ, while handling the challenges of an uncontrolled outdoor environment. To accomplish this, the system first plans users’ paths in the virtual world and then, once users have embarked on their journeys, relies on real-time environment detection, walking redirection, and virtual-world updates.

Before the walk begins, path planning finds a path in the virtual world that minimizes differences from the real-world path. It also identifies any corrections needed in the virtual world so that the user doesn’t collide with known objects in the real world. Differences between the real path and the chosen virtual tour are corrected by gradual redirection while users walk and by introducing virtual scenarios, such as roadblocks, that force users to correct their own paths.
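To make the planning and redirection steps concrete, here is a minimal sketch. It assumes routes are 2D polylines in meters, scores each candidate virtual tour by its mean point-wise distance from the real route (after resampling both by arc length and aligning their start points), and models redirection as nudging a virtual-world rotation offset toward its target by a capped amount per update. The cost metric, the function names, and the `max_step` cap are illustrative assumptions, not DreamWalker’s published method.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in meters, local frame (assumption)


def resample(path: Sequence[Point], n: int = 50) -> List[Point]:
    """Resample a polyline into n points spaced evenly by arc length."""
    cum = [0.0]  # cumulative arc length at each vertex
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total, out, j = cum[-1], [], 0
    for i in range(n):
        target = total * i / (n - 1)
        # Advance to the segment that contains the target distance.
        while j < len(path) - 2 and cum[j + 1] < target:
            j += 1
        seg = cum[j + 1] - cum[j]
        t = 0.0 if seg == 0 else (target - cum[j]) / seg
        (x0, y0), (x1, y1) = path[j], path[j + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def path_cost(real: Sequence[Point], virtual: Sequence[Point], n: int = 50) -> float:
    """Hypothetical cost: mean distance between routes after aligning start points."""
    r, v = resample(real, n), resample(virtual, n)
    (rx0, ry0), (vx0, vy0) = r[0], v[0]
    return sum(
        math.hypot((rx - rx0) - (vx - vx0), (ry - ry0) - (vy - vy0))
        for (rx, ry), (vx, vy) in zip(r, v)
    ) / n


def best_virtual_route(real, candidates):
    """Pick the candidate virtual tour that deviates least from the real route."""
    return min(candidates, key=lambda c: path_cost(real, c))


def redirect_step(offset: float, target_offset: float, max_step: float) -> float:
    """Move the virtual-world rotation offset (radians) toward its target,
    by at most max_step per update so the correction stays gradual."""
    diff = target_offset - offset
    err = math.atan2(math.sin(diff), math.cos(diff))  # wrap into [-pi, pi]
    return offset + max(-max_step, min(max_step, err))
```

Capping each step keeps the rotation small enough per frame that, in principle, a walker would not notice it; choosing that cap is a perceptual question the sketch does not answer.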

During the walk, DreamWalker monitors users’ environments with a combination of sensing technologies to detect obstacles discovered along the way: inside-out tracking via a relative position trace provided by Windows Mixed Reality, a dual-band GPS sensor, and two RGB-depth cameras.
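The article does not say how the relative position trace and GPS are combined. One common way to merge a smooth but drifting relative trace with noisy absolute fixes is a complementary-style blend, sketched below under the assumptions that GPS fixes have already been converted to the same local metric frame and that `gps_weight` is a hypothetical tuning value.

```python
from dataclasses import dataclass


@dataclass
class FusedPosition:
    """Position estimate in a local metric frame (x east, y north; assumption)."""
    x: float = 0.0
    y: float = 0.0
    gps_weight: float = 0.02  # hypothetical blend factor applied per GPS fix

    def apply_odometry(self, dx: float, dy: float) -> None:
        # Relative motion from inside-out tracking: smooth, but drifts over time.
        self.x += dx
        self.y += dy

    def apply_gps(self, gx: float, gy: float) -> None:
        # Absolute but noisy GPS fix (already in the local frame):
        # pull the estimate a small step toward it to cancel accumulated drift.
        self.x += self.gps_weight * (gx - self.x)
        self.y += self.gps_weight * (gy - self.y)
```

A small weight trusts the tracker over short spans and lets GPS correct drift slowly; a production system would more likely use a Kalman filter that weighs each source by its estimated noise.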

Discovered obstacles that may move or appear in users’ paths are managed by introducing moving virtual obstacles, or characters, such as pedestrians walking near users, to steer them away from potential danger. Other options for controlling users’ paths include pets and dynamic events such as vehicles being parked and moving carts, limited only by the imagination of the experience creator. Real-time environment detection guides the creation of this additional virtual content, and care is taken to generate these virtual obstacles outside users’ field of view so that objects don’t unnaturally “pop up” in the virtual world. Thanks to recent advances in eye tracking, the system can do so with the help of users’ gaze. Enter Mise-Unseen.
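The spawning rule can be sketched as a field-of-view test: a candidate spawn point is acceptable only if its bearing from the user lies outside the horizontal view cone plus a safety margin. The 110-degree field of view, the 10-degree margin, and the function names are illustrative assumptions; head direction stands in for gaze here.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def is_outside_view(user: Point, view_dir_deg: float, point: Point,
                    fov_deg: float = 110.0, margin_deg: float = 10.0) -> bool:
    """True if `point` lies outside the user's horizontal view cone (plus margin)."""
    bearing = math.degrees(math.atan2(point[1] - user[1], point[0] - user[0]))
    # Signed smallest angle between the bearing and the view direction.
    diff = (bearing - view_dir_deg + 180.0) % 360.0 - 180.0
    return abs(diff) > fov_deg / 2 + margin_deg


def pick_spawn_point(user: Point, view_dir_deg: float,
                     candidates: Sequence[Point]) -> Optional[Point]:
    """Return the first candidate the user cannot currently see, or None."""
    for p in candidates:
        if is_outside_view(user, view_dir_deg, p):
            return p
    return None
```

With eye tracking, `view_dir_deg` could be replaced by the gaze direction and the cone narrowed, which is where the gaze-based approach below comes in.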

Mise-Unseen: Taking advantage of users’ gaze and attention to enhance the VR experience

In “Mise-Unseen: Using Eye-Tracking to Hide Virtual Reality Scene Changes in Plain Sight,” University of Potsdam PhD student Sebastian Marwecki, a Microsoft Research intern at the time of the work, and researchers Andy Wilson, Eyal Ofek, Mar Gonzalez Franco, and Christian Holz introduce technology that allows covert changes to be made to a VR environment while a user’s gaze and attention are fixed on something else. They call this technology Mise-Unseen in homage to the French term mise en scène, which is used in film and theater to describe the scenery and staging of what an audience sees. In film and theater, the field of view is controlled by a director, so the audience sees what the director wants them to see. The audience cannot see the camera or lighting rigs, for instance, and that is by design.

In VR, however, users control their own field of view, so some changes to the scene must be made discreetly, in their peripheral vision. Mise-Unseen uses eye tracking to identify where users are focusing their attention and then employs a perception model to recommend where changes to the environment can be made without users noticing them. For example, a painting in a virtual art gallery can be changed to better reflect users’ tastes while they’re looking elsewhere in the room. “This [technology] is all based on the idea that gaze and attention are linked; wherever you are gazing goes your attention, and that means you are not paying attention to other parts,” says Senior Researcher Mar Gonzalez Franco.
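As a rough illustration of the gaze-and-attention idea, the sketch below allows an object to change only when it sits far from the current gaze direction and has not been fixated recently. This simple angular rule is a stand-in, not the perception model from the paper; the thresholds and the `GazeGate` name are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec = Tuple[float, float, float]  # direction from the eye (assumption)


def angle_deg(a: Vec, b: Vec) -> float:
    """Angle between two 3D direction vectors, in degrees."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / (na * nb)))))


@dataclass
class GazeGate:
    min_angle_deg: float = 30.0  # hypothetical: how far from gaze a change must be
    cooldown_s: float = 1.0      # hypothetical: time since the object was last fixated
    last_seen: Dict[str, float] = field(default_factory=dict)

    def update(self, t: float, gaze: Vec, objects: Dict[str, Vec],
               fixation_deg: float = 5.0) -> None:
        # Record objects close to the gaze ray as "seen" at time t.
        for name, direction in objects.items():
            if angle_deg(gaze, direction) <= fixation_deg:
                self.last_seen[name] = t

    def can_change(self, t: float, gaze: Vec, name: str, direction: Vec) -> bool:
        # Safe to change only if far from gaze now and not looked at recently.
        far = angle_deg(gaze, direction) >= self.min_angle_deg
        recent = t - self.last_seen.get(name, -math.inf) < self.cooldown_s
        return far and not recent
```

In the gallery example, the painting would be swapped only once `can_change` returns true, that is, while the visitor’s gaze is elsewhere.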

Another application is the ability to assess user understanding. While playing a game, a person might need to solve a puzzle to obtain a code that allows them to move forward. However, there is a chance that the person could guess the code without having solved, or even having looked at, the puzzle. With this technology, if a player hasn’t looked at certain areas of the puzzle before entering the code, the code and puzzle could be visually changed in real time so that the player needs to go back and attempt to solve the puzzle.
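The puzzle check can be sketched as a dwell-time test: sum how long the player’s gaze rested on each labeled region, and if any region the puzzle requires falls below a minimum dwell, change the code and puzzle. The region labels, the fixation log format, and the 0.3-second threshold are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def dwell_times(fixations: Iterable[Tuple[str, float]]) -> Dict[str, float]:
    """Total gaze time per region from (region, duration_s) fixation records."""
    totals: Dict[str, float] = defaultdict(float)
    for region, duration in fixations:
        totals[region] += duration
    return dict(totals)


def should_change_puzzle(fixations: Iterable[Tuple[str, float]],
                         required: Set[str], min_dwell_s: float = 0.3) -> bool:
    """True if the player never looked long enough at some required region,
    suggesting the code was guessed rather than solved."""
    totals = dwell_times(fixations)
    return any(totals.get(r, 0.0) < min_dwell_s for r in required)
```

For example, a player who studied `clue_a` but never looked at `clue_b` before typing the code would trigger a change.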

Other applications of Mise-Unseen include support for passive haptics, individualized content (like the painting example above), and adaptive difficulty. Another exciting aspect of this technology is its ability to reduce the field of view in VR in a way that alleviates motion sickness for those who experience it. Lastly, the technology can conserve valuable computing resources when rendering visual objects: knowing where a user is looking allows the system to allocate its resources more effectively.
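Allocating rendering resources by gaze is often done by choosing a level of detail from an object’s angular distance (eccentricity) from the gaze direction, a technique commonly called foveated rendering. The sketch below assumes three hypothetical eccentricity bands and a triangle budget that shrinks fourfold per level; neither value comes from the article.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def eccentricity_deg(gaze: Vec, direction: Vec) -> float:
    """Angle between the gaze direction and the direction to an object."""
    dot = sum(x * y for x, y in zip(gaze, direction))
    norms = math.sqrt(sum(x * x for x in gaze)) * math.sqrt(sum(x * x for x in direction))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norms))))


def level_of_detail(ecc_deg: float, bands: Sequence[float] = (5.0, 20.0, 40.0)) -> int:
    """0 = full detail near the fovea; higher levels further into the periphery."""
    for lod, limit in enumerate(bands):
        if ecc_deg <= limit:
            return lod
    return len(bands)


def triangle_budget(base: int, lod: int) -> int:
    """Hypothetical budget: each level gets a quarter of the previous one."""
    return base // (4 ** lod)
```

Objects far from the gaze point then cost a fraction of the full budget, freeing resources for what the user is actually looking at.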

CapstanCrunch: A palm-grounded haptic controller that, much like judo, enlists user-supplied force

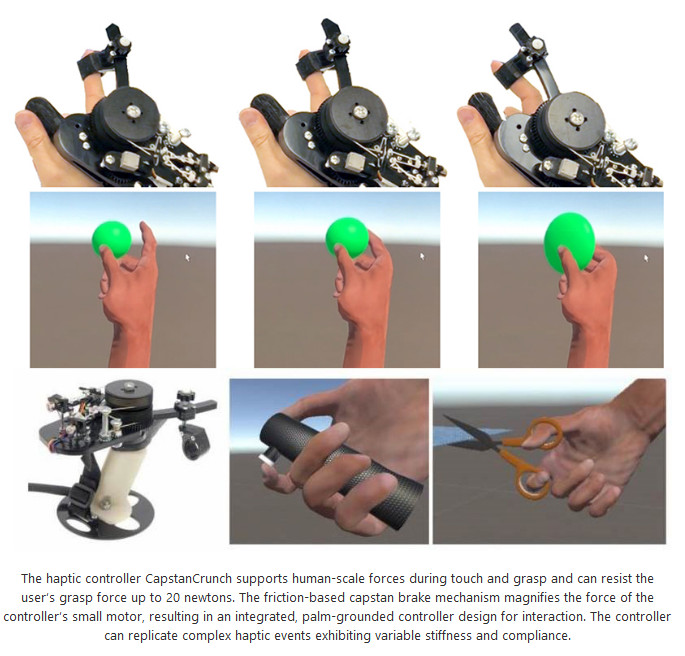

Imagine grasping for a virtual ball. You reach out to grab the ball in the VR system, but how do you know you’ve got it if you can’t feel it against your hand? That’s where haptic controllers come into play: they provide the sensation associated with touching an object in real life when you come into contact with it in a virtual world. A major challenge for such controllers, though, has been producing realistic sensations that hold up against the strong forces applied by the human hand while keeping the handheld devices small and light.

In their paper “CapstanCrunch: A Haptic VR Controller with User-supplied Force Feedback,” researchers Mike Sinclair, Eyal Ofek, Mar Gonzalez Franco, and Christian Holz introduce a new haptic controller called CapstanCrunch. While CapstanCrunch may appear outwardly similar to past prototypes such as the CLAW, the magic of the new technology lies inside the controller: a linear and directional brake that can sustain human-scale forces and is light, energy efficient, and robust.

A key component of the brake—and the inspiration behind the controller’s name—is the capstan, a centuries-old mechanical device originally used to control ropes on sailing ships. “It’s an old technology that people used to tie boats, but we use it in a different way that right now enables us to multiply the force of a small internal motor,” says Eyal Ofek, a Senior Researcher.

Past efforts to design haptic controllers used large motors to resist the strong forces applied by the human hand, yet these motors are costly, heavy, and energy-hungry, and they can break when too much force is applied, making such devices too expensive and too fragile for consumer scenarios. The capstan magnifies an input force by a factor of around 40, which allows the use of small, cheap, and energy-efficient motors while insulating them from external forces.
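The force multiplication of a capstan is usually described by the capstan (Euler–Eytelwein) equation, $T_{\text{load}} = T_{\text{hold}}\, e^{\mu\theta}$, where $\mu$ is the rope-drum friction coefficient and $\theta$ the wrap angle in radians. The sketch below evaluates that relation; the friction coefficient of 0.3 and the hold force are hypothetical values chosen only to show what a gain of about 40 implies, since the article gives neither.

```python
import math


def capstan_gain(mu: float, wrap_rad: float) -> float:
    """Ratio of load-side to hold-side tension: e^(mu * theta)."""
    return math.exp(mu * wrap_rad)


def turns_for_gain(gain: float, mu: float) -> float:
    """Number of full wraps needed to reach a given gain at friction mu."""
    return math.log(gain) / mu / (2 * math.pi)


def max_resisted_force(hold_force_n: float, gain: float) -> float:
    """Largest pull (N) the brake can resist for a given small holding force."""
    return hold_force_n * gain
```

Under these assumptions, `turns_for_gain(40.0, 0.3)` comes out to roughly two wraps, and a motor supplying 0.5 N of holding tension could resist about 20 N from the hand, which is the appeal of pairing a small motor with a capstan.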

CapstanCrunch leverages this technology to give fine-grained haptic feedback. Similar to an important tenet of the martial art judo, the CapstanCrunch mechanism feeds off users’ own force and friction to maximize efficiency and minimize the effort needed from the motor to provide feedback. In addition to consuming little energy, CapstanCrunch is low cost, robust, safe, quiet, and fast compared with its predecessors.

Check out the work

If you’re attending UIST 2019, Mise-Unseen and CapstanCrunch will both be presented on Tuesday, Oct. 22, at 2 p.m. Eastern time. DreamWalker will be presented at 2 p.m. the next day.