Arm's Project Trillium Offers Scalable, Machine Learning Compute Platform

Arm today announced Project Trillium, a suite of Arm IP including new scalable processors that will deliver machine learning (ML) and neural network (NN) functionality.

The current technologies are focused on the mobile market and will enable a new class of ML-equipped devices with advanced compute capabilities, including object detection.

"The rapid acceleration of artificial intelligence into edge devices is placing increased requirements for innovation to address compute while maintaining a power efficient footprint. To meet this demand, Arm is announcing its new ML platform, Project Trillium," said Rene Haas, president, IP Products Group, Arm. "New devices will require the high-performance ML and AI capabilities these new processors deliver. Combined with the high degree of flexibility and scalability that our platform provides, our partners can push the boundaries of what will be possible across a broad range of devices."

While the initial launch focuses on mobile processors, future Arm ML products will be able to move up or down the performance curve: from sensors and smart speakers to mobile, home entertainment, and beyond.

Project Trillium completes the Arm Heterogeneous ML compute platform with the Arm ML processor, the second-generation Arm Object Detection (OD) processor and open-source Arm NN software.

Arm's new ML and object detection processors provide a substantial efficiency uplift over standalone CPUs, GPUs and accelerators, and they also outperform the traditional programmable logic of DSPs.

The Arm ML processor delivers more than 4.6 trillion operations per second (TOPs), with a further 2x-4x uplift in effective throughput in real-world use through intelligent data management. It also performs well in thermally constrained and cost-constrained environments, with an efficiency of over three trillion operations per second per watt (TOPs/W). This means devices using the Arm ML processor will be able to perform ML independently of the cloud. That is vital for products such as dive masks, and also important for any device, such as an autonomous vehicle, that cannot rely on a stable internet connection.
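As a rough back-of-envelope check on these figures, power is throughput divided by efficiency. The sketch below assumes the processor runs at exactly 4.6 TOPs and exactly 3 TOPs/W, and it reads the 2x-4x claim as a simple multiplier on the 4.6 TOPs figure; both are our own interpretations. Because the quoted numbers are lower bounds ("more than", "over"), the results are indicative only.

```python
# Figures quoted for the Arm ML processor (both stated as lower bounds in the text).
PEAK_TOPS = 4.6          # trillion operations per second
TOPS_PER_WATT = 3.0      # trillion operations per second per watt
UPLIFT_RANGE = (2, 4)    # claimed effective-throughput multiplier in real-world use


def implied_power_watts(tops: float, tops_per_watt: float) -> float:
    """Power drawn at a given throughput if the efficiency holds: P = TOPs / (TOPs/W)."""
    return tops / tops_per_watt


def effective_tops(peak: float, uplift: float) -> float:
    """Assumption: treat the 2x-4x claim as a multiplier on the peak figure."""
    return peak * uplift


power = implied_power_watts(PEAK_TOPS, TOPS_PER_WATT)
low, high = (effective_tops(PEAK_TOPS, u) for u in UPLIFT_RANGE)
print(f"~{power:.2f} W at peak; effective {low:.1f}-{high:.1f} TOPs-equivalent")
```

Under these assumptions the processor would draw roughly 1.5 W at its quoted peak, which is consistent with the claim that it suits thermally constrained mobile devices.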

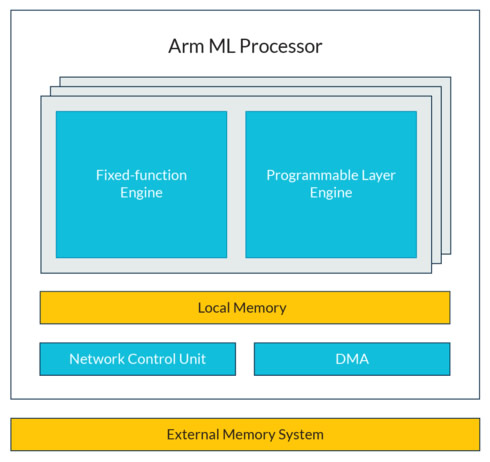

The Arm Machine Learning processor targets mobile and adjacent markets. Additional programmable layer engines support the execution of non-convolution layers and the implementation of selected primitives and operators, and leave room for future generations of algorithms. A network control unit manages the overall execution and traversal of the network, and a DMA engine moves data in and out of main memory. Onboard memory provides central storage for weights and feature maps, reducing traffic to external memory and, therefore, power consumption.
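To make the division of labour concrete, here is a toy model, not Arm's design, of the roles described above: a control loop walks the network in order, sends convolution layers to a hypothetical convolution engine and everything else to a programmable layer engine, and counts the bytes a DMA would move to and from external memory. The engine names, the single `sram_bytes` capacity and the spill rule are all simplifying assumptions; the point is only to show why keeping feature maps on chip cuts external traffic.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Layer:
    name: str
    kind: str          # "conv" for convolution; anything else is treated as non-convolution
    weight_bytes: int
    output_bytes: int  # size of this layer's output feature map


def schedule(layers: List[Layer], input_bytes: int, sram_bytes: int) -> Tuple[list, int]:
    """Walk the network in order (the control-unit role), pick an engine per layer,
    and count bytes moved to or from external memory (the DMA role) in this toy model."""
    plan = []
    external = input_bytes                      # input tensor is read from DRAM once
    for i, layer in enumerate(layers):
        engine = "conv_engine" if layer.kind == "conv" else "programmable_layer_engine"
        external += layer.weight_bytes          # weights fetched once per layer
        if i == len(layers) - 1:
            external += layer.output_bytes      # final result written back to DRAM
        elif layer.output_bytes > sram_bytes:
            external += 2 * layer.output_bytes  # does not fit on chip: written out, read back
        plan.append((layer.name, engine))
    return plan, external


net = [Layer("conv1", "conv", 1000, 4000),
       Layer("pool1", "pool", 0, 1000),
       Layer("softmax", "softmax", 0, 10)]
plan, small_sram = schedule(net, input_bytes=3000, sram_bytes=2000)
_, large_sram = schedule(net, input_bytes=3000, sram_bytes=10000)
print(plan)
print(small_sram, large_sram)
```

With the smaller on-chip buffer, the first feature map spills to external memory and the modelled traffic roughly triples; with enough onboard memory, only the input, weights and final output cross the external bus.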

The Arm OD processor has been designed to identify people and other objects efficiently, with a virtually unlimited number of objects per frame. The second generation of the Arm Object Detection processor has been designed for use in a new generation of smart cameras and other vision-based devices.

With a fixed-function computer vision pipeline, the Object Detection processor continuously scans every frame and provides a list of detected objects, along with their location within the scene.

The processor's people model can detect not only whole human forms but also faces, heads and shoulders, and can even determine the direction each person is facing. It can detect objects ranging in size from 50x60 pixels to full screen. In addition, detailed metadata allows even more information to be extracted from each frame.
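A host application consuming this output might represent each detection as a small record. The sketch below is purely illustrative: the field names, the `Part` labels and the `facing_deg` convention (0 meaning facing the camera) are hypothetical, it reads "50x60" as width x height, and it assumes a Full HD frame for the upper size bound. It keeps only face detections that fall within the quoted size range and face roughly toward the camera.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Part(Enum):                 # hypothetical labels for the people model's outputs
    WHOLE_BODY = "whole_body"
    FACE = "face"
    HEAD_AND_SHOULDERS = "head_and_shoulders"


@dataclass
class Detection:                  # hypothetical record, not an Arm data format
    part: Part
    x: int                        # top-left corner of the bounding box, pixels
    y: int
    width: int
    height: int
    facing_deg: float             # assumed convention: 0 = facing the camera


MIN_W, MIN_H = 50, 60             # smallest quoted object size, read as width x height
FRAME_W, FRAME_H = 1920, 1080     # assume a Full HD frame as the "full screen" bound


def in_detectable_range(d: Detection) -> bool:
    """True if the box lies within the quoted 50x60 px to full-screen size range."""
    return MIN_W <= d.width <= FRAME_W and MIN_H <= d.height <= FRAME_H


def faces_toward_camera(dets: List[Detection], max_angle: float = 30.0) -> List[Detection]:
    """Keep in-range face detections facing within max_angle degrees of the camera."""
    return [d for d in dets
            if d.part is Part.FACE
            and in_detectable_range(d)
            and abs(d.facing_deg) <= max_angle]
```

Filtering on the host like this is one way an application could use the per-object metadata without re-processing the whole frame.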

It supports real-time detection with Full HD processing at 60 frames per second and up to 80x the performance of a traditional DSP. It can be combined with CPUs, GPUs or the Arm Machine Learning processor for additional local processing, reducing the overall compute requirement.
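The real-time figure translates into a concrete workload. Assuming Full HD means 1920x1080, the arithmetic below gives the pixel rate the detector must sustain and the time available per frame at 60 frames per second.

```python
WIDTH, HEIGHT, FPS = 1920, 1080, 60   # Full HD at 60 frames per second


def pixel_rate(width: int, height: int, fps: int) -> int:
    """Pixels the pipeline must process each second."""
    return width * height * fps


def frame_budget_ms(fps: int) -> float:
    """Time available to finish one frame before the next arrives."""
    return 1000.0 / fps


print(pixel_rate(WIDTH, HEIGHT, FPS))       # 124416000 pixels per second
print(round(frame_budget_ms(FPS), 2))       # 16.67 ms per frame
```

Scanning every frame continuously therefore means handling about 124 million pixels per second, with roughly 16.7 ms per frame.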

In combination, the Arm ML and OD processors perform even better, delivering a high-performance, power-efficient people detection and recognition solution. This means that users will be able to enjoy high-resolution, real-time, detailed face recognition on their smart devices delivered in a battery-friendly way.
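One way to see why the combination saves compute is a two-stage pipeline: a detector proposes face boxes, and a recogniser runs only on those crops rather than on the whole frame. The sketch below uses stand-in callables for both stages (they are placeholders, not Arm APIs) and reports what fraction of the frame's pixels the second stage actually processes. The 112x112 crop size in the usage is an arbitrary assumption.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]   # (x, y, width, height) in pixels


def two_stage(frame_size: Tuple[int, int],
              detect: Callable[[], List[Box]],
              recognise: Callable[[Box], str]) -> Tuple[List[str], float]:
    """Stage 1 (detector) proposes boxes; stage 2 (recogniser) runs only on those crops.
    Returns the labels and the fraction of frame pixels stage 2 processed.
    Simplification: overlapping boxes are counted twice."""
    boxes = detect()
    labels = [recognise(b) for b in boxes]
    frame_px = frame_size[0] * frame_size[1]
    crop_px = sum(w * h for _, _, w, h in boxes)
    return labels, crop_px / frame_px


# Usage with placeholder stages: three 112x112 face boxes in a Full HD frame.
faces = [(100, 200, 112, 112), (600, 220, 112, 112), (1400, 180, 112, 112)]
labels, fraction = two_stage((1920, 1080), lambda: faces, lambda box: "person")
print(labels, f"{fraction:.2%}")
```

In this example the recogniser touches under 2% of the frame's pixels, which illustrates how offloading detection can reduce the work left for the ML processor.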

Arm NN software, when used alongside the Arm Compute Library and CMSIS-NN, is optimized for NNs and bridges the gap between NN frameworks (such as TensorFlow, Caffe and Android NN) and the full range of Arm Cortex CPUs, Arm Mali GPUs and ML processors.

Arm NN translates models from existing neural network frameworks, allowing them to run efficiently, without modification, across Arm Cortex CPUs and Arm Mali GPUs. The software includes support for the Arm machine learning processor; for Cortex-A CPUs and Mali GPUs via the Compute Library; and for Cortex-M CPUs via CMSIS-NN.
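The target-to-library mapping in the previous paragraph can be summarised as a routing table. The sketch below is a hypothetical illustration, not the Arm NN API: the target keys, the `select_backend` helper and the preference order (ML processor first, then GPU, then CPUs) are our own assumptions. It simply picks the most preferred target present on a device and reports which software layer the text says serves it.

```python
from typing import Iterable, Set, Tuple

# Hypothetical routing table (illustrative names only, not the Arm NN API):
# which software layer serves each class of Arm target, per the text.
ROUTES = {
    "ml_processor": "Arm NN ML processor support",
    "mali": "Arm Compute Library",
    "cortex-a": "Arm Compute Library",
    "cortex-m": "CMSIS-NN",
}

# Assumed preference order: dedicated ML hardware first, then GPU, then CPUs.
DEFAULT_PREFERENCE = ("ml_processor", "mali", "cortex-a", "cortex-m")


def select_backend(available: Set[str],
                   preference: Iterable[str] = DEFAULT_PREFERENCE) -> Tuple[str, str]:
    """Return (target, library) for the most preferred target present on the device."""
    for target in preference:
        if target in available:
            return target, ROUTES[target]
    raise ValueError(f"no supported target in {sorted(available)}")


print(select_backend({"cortex-a", "mali"}))   # a phone SoC without an ML processor
print(select_backend({"cortex-m"}))           # a microcontroller-class device
```

Keeping the mapping in one table mirrors the idea that the same model runs unmodified while only the execution backend changes per device.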

The new suite of Arm ML IP will be available for early preview in April of this year, with general availability in mid-2018.

In two weeks, at Mobile World Congress 2018, Arm will showcase early implementations of the Arm Object Detection processor in products such as IP security cameras and smart cameras.