AMD Says New Epyc Server Chip Is Faster Than Intel’s Pricier Offering

Advanced Micro Devices Inc. (AMD) promoted its new 2nd Gen EPYC server processor and platform as outperforming more expensive parts from rival Intel, and said that it had landed Google and Twitter as customers.

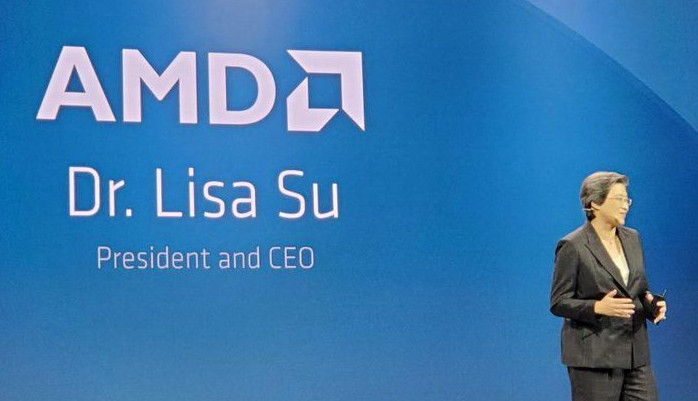

AMD’s Epyc chip will have as many as 64 processing cores and will be made using a 7-nanometer TSMC manufacturing process, Chief Executive Officer Lisa Su said Wednesday at an event in San Francisco. The increased performance and efficiency will cut data-center owners’ costs in half, she said.

AMD uses 7nm chip-making technology from its contract manufacturer, TSMC, which gives the chips better performance while consuming less power. Intel, which makes chips in its own factories instead of relying on contractors, is behind schedule delivering chips made with its own newer manufacturing process. It plans to release them next year.

“We are just getting started in the server market,” Su said. “What you can expect from us is consistency. It is not just about one generation. It is about building a multi-generational road map.”

Analysts said AMD’s Epyc 7002 series, code-named Rome, will likely help the company meet its goal of winning at least 10% of classic server sockets by next June, up from a low- to mid-single-digit share today. To date, most of the gains AMD has made with its new Zen-based processors have been in desktops and notebooks.

The 7002 Series integrates up to 64 dual-threaded Zen 2 cores running at base frequencies of up to 3.2 GHz, with up to 256 MB of L3 cache. Each processor’s package contains a 14nm I/O hub supporting eight DDR4-3200 DRAM channels and 128 PCIe Gen 4 lanes.
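A quick back-of-envelope check on those I/O figures, offered as a sketch: it assumes standard 64-bit DDR4 channels and PCIe Gen 4's 16 GT/s per-lane signaling with 128b/130b encoding, and it yields theoretical peaks only (about 204.8 GB/s of DRAM bandwidth and about 252 GB/s per direction of PCIe bandwidth per socket). Sustained real-world bandwidth will be lower.

```python
# Back-of-envelope peak bandwidth for one 2nd Gen EPYC socket, using the
# figures quoted in the article: 8 DDR4-3200 channels and 128 PCIe Gen 4 lanes.
# These are theoretical peaks; sustained bandwidth is lower in practice.

DDR4_TRANSFERS_PER_SEC = 3200e6   # DDR4-3200: 3,200 million transfers/s
BYTES_PER_TRANSFER = 8            # assumption: each DDR4 channel is 64 bits wide
MEMORY_CHANNELS = 8

PCIE4_GT_PER_SEC = 16e9           # assumption: PCIe Gen 4 signals at 16 GT/s per lane
PCIE_ENCODING = 128 / 130         # 128b/130b line-encoding efficiency
PCIE_LANES = 128


def peak_memory_bandwidth_gbs() -> float:
    """Peak DRAM bandwidth in GB/s (10**9 bytes/s) summed over all channels."""
    return DDR4_TRANSFERS_PER_SEC * BYTES_PER_TRANSFER * MEMORY_CHANNELS / 1e9


def peak_pcie_bandwidth_gbs(lanes: int = PCIE_LANES) -> float:
    """Peak PCIe Gen 4 bandwidth in GB/s, per direction, for `lanes` lanes."""
    bits_per_sec = PCIE4_GT_PER_SEC * PCIE_ENCODING * lanes
    return bits_per_sec / 8 / 1e9


if __name__ == "__main__":
    print(f"DRAM: {peak_memory_bandwidth_gbs():.1f} GB/s")
    print(f"PCIe: {peak_pcie_bandwidth_gbs():.1f} GB/s per direction")
```

Passing a smaller lane count, such as `peak_pcie_bandwidth_gbs(16)`, gives the figure for a single x16 slot (roughly 31.5 GB/s per direction).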

Intel’s latest Cascade Lake processors offer fewer cores and DRAM channels, and fewer PCIe lanes, which run only at Gen 3 speeds. As a result, AMD claimed the 7002 delivers 80 new performance records, up to twice the performance, and more than twice the performance per dollar of Cascade Lake.

Google has already started using the new Epyc processors in its data centers and will deploy more in the server farms that support its cloud services. Google’s announcement means that all of the large U.S. cloud companies and some of their major Chinese counterparts are AMD customers.

AMD has done a great job with EPYC’s chip architecture, single-core IPC, and multi-core scalability, leveraging its massive L3 caches and its hybrid, multi-die architecture linked by 2nd Gen Infinity Fabric.

Thanks to its system-in-package approach, AMD packs nearly 1,000 mm² of silicon and 32 billion transistors into one device. The 7002 uses an upgraded Infinity Fabric running at up to 18 GT/s to link the dies. The new design flattens the memory hierarchy and lowers overall latency compared with AMD’s first-generation Epyc, code-named Naples.
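The article does not break the package down die by die, so the sketch below rests on assumptions: up to eight 7nm compute dies around the I/O die, each holding two four-core complexes that share 16 MB of L3 apiece. Under those assumptions the arithmetic reproduces the top-end figures of 64 cores, 128 threads and 256 MB of L3. The class name `RomePackage` is hypothetical, chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RomePackage:
    """Simplified model of a multi-die 2nd Gen EPYC package (assumed layout)."""
    ccds: int = 8               # assumption: up to 8 compute dies per package
    ccx_per_ccd: int = 2        # assumption: 2 core complexes per compute die
    cores_per_ccx: int = 4      # assumption: 4 cores per core complex
    l3_mb_per_ccx: int = 16     # assumption: 16 MB L3 shared within each complex
    threads_per_core: int = 2   # "dual-threaded" cores, per the article

    @property
    def cores(self) -> int:
        return self.ccds * self.ccx_per_ccd * self.cores_per_ccx

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core

    @property
    def l3_mb(self) -> int:
        # Aggregate L3 across the package; each core sees its own complex's slice.
        return self.ccds * self.ccx_per_ccd * self.l3_mb_per_ccx


if __name__ == "__main__":
    full = RomePackage()
    print(full.cores, full.threads, full.l3_mb)
```

One design point this makes visible: the 256 MB is an aggregate. Under this layout each core reads its own 16 MB slice directly, and data held in another complex's slice is reached over the fabric.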

The company posted a comparison of its product with an Intel Xeon processor. According to its calculations, the top-of-the-line new Epyc, which is listed at less than $7,000, outperforms a Xeon 8280M listed at $13,012.
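The article quotes list prices but not the benchmark ratio behind this comparison, so the sketch below treats relative performance as an input. Performance per dollar scales as relative performance multiplied by the inverse price ratio. Using the 7742's $6,950 list price against $13,012 for the Xeon 8280M, even equal performance would give roughly 1.87 times the performance per dollar. The ratios in the loop are illustrative, not measured results, and `perf_per_dollar_advantage` is a hypothetical helper name.

```python
def perf_per_dollar_advantage(relative_perf: float, price_a: float, price_b: float) -> float:
    """How many times more performance per dollar part A delivers than part B.

    relative_perf: performance of A divided by performance of B on some benchmark.
    price_a, price_b: list prices in dollars.
    """
    if relative_perf <= 0 or price_a <= 0 or price_b <= 0:
        raise ValueError("performance ratio and prices must be positive")
    # (perf_a / price_a) / (perf_b / price_b) == relative_perf * price_b / price_a
    return relative_perf * (price_b / price_a)


EPYC_7742_PRICE = 6_950     # 1Ku list price from AMD's processor stack table
XEON_8280M_PRICE = 13_012   # list price quoted in AMD's comparison

if __name__ == "__main__":
    for rel in (1.0, 1.5, 2.0):  # illustrative performance ratios, not benchmark data
        adv = perf_per_dollar_advantage(rel, EPYC_7742_PRICE, XEON_8280M_PRICE)
        print(f"{rel:.1f}x performance -> {adv:.2f}x performance per dollar")
```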

With the 2nd Gen EPYC platform, AMD addressed most of its first-generation shortcomings, such as single-thread performance (+15%) and core scaling, and added new RAS features (uncorrectable DRAM error entry) and security capabilities (Secure Memory Encryption, and Secure Encrypted Virtualization with 509 keys), in addition to substantial multi-core performance gains.

2nd Gen AMD EPYC Processor Stack

| Model # | Cores | Threads | Base Freq (GHz) | Max Boost Freq (GHz) | Default TDP (W) | L3 Cache (MB) | 1Ku Pricing |
|---|---|---|---|---|---|---|---|
| 7742 | 64 | 128 | 2.25 | 3.40 | 225 | 256 | $6,950 |
| 7702 | 64 | 128 | 2.00 | 3.35 | 200 | 256 | $6,450 |
| 7702P | 64 | 128 | 2.00 | 3.35 | 200 | 256 | $4,425 |
| 7642 | 48 | 96 | 2.30 | 3.30 | 225 | 256 | $4,775 |
| 7552 | 48 | 96 | 2.20 | 3.30 | 200 | 192 | $4,025 |
| 7542 | 32 | 64 | 2.90 | 3.40 | 225 | 128 | $3,400 |
| 7502 | 32 | 64 | 2.50 | 3.35 | 180 | 128 | $2,600 |
| 7502P | 32 | 64 | 2.50 | 3.35 | 180 | 128 | $2,300 |
| 7452 | 32 | 64 | 2.35 | 3.35 | 155 | 128 | $2,025 |
| 7402 | 24 | 48 | 2.80 | 3.35 | 180 | 128 | $1,783 |
| 7402P | 24 | 48 | 2.80 | 3.35 | 180 | 128 | $1,250 |
| 7352 | 24 | 48 | 2.30 | 3.20 | 155 | 128 | $1,350 |
| 7302 | 16 | 32 | 3.00 | 3.30 | 155 | 128 | $978 |
| 7302P | 16 | 32 | 3.00 | 3.30 | 155 | 128 | $825 |
| 7282 | 16 | 32 | 2.80 | 3.20 | 120 | 64 | $650 |
| 7272 | 12 | 24 | 2.90 | 3.20 | 120 | 64 | $625 |
| 7262 | 8 | 16 | 3.20 | 3.40 | 155 | 128 | $575 |
| 7252 | 8 | 16 | 3.10 | 3.20 | 120 | 64 | $475 |
| 7232P | 8 | 16 | 3.10 | 3.20 | 120 | 32 | $450 |
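The stack's pricing can be sliced a couple of ways with a few lines of code. This sketch copies model, core count and 1Ku list price from the table and computes list price per core, plus the discount each "P" part (AMD's single-socket-only SKUs) carries relative to its same-numbered dual-socket sibling. The function names are hypothetical, and list prices say nothing about street pricing.

```python
# (model, cores, 1Ku list price in USD), copied from the 2nd Gen EPYC stack table
STACK = [
    ("7742", 64, 6950), ("7702", 64, 6450), ("7702P", 64, 4425),
    ("7642", 48, 4775), ("7552", 48, 4025), ("7542", 32, 3400),
    ("7502", 32, 2600), ("7502P", 32, 2300), ("7452", 32, 2025),
    ("7402", 24, 1783), ("7402P", 24, 1250), ("7352", 24, 1350),
    ("7302", 16, 978), ("7302P", 16, 825), ("7282", 16, 650),
    ("7272", 12, 625), ("7262", 8, 575), ("7252", 8, 475),
    ("7232P", 8, 450),
]


def price_per_core(rows):
    """Map each model to its 1Ku list price divided by its core count."""
    return {model: price / cores for model, cores, price in rows}


def single_socket_discounts(rows):
    """For each 'P' model whose dual-socket sibling (same number, no 'P') is
    also listed, return the fractional price discount versus that sibling."""
    prices = {model: price for model, _, price in rows}
    discounts = {}
    for model, price in prices.items():
        sibling = model[:-1]
        if model.endswith("P") and sibling in prices:
            discounts[model] = 1 - price / prices[sibling]
    return discounts


if __name__ == "__main__":
    per_core = price_per_core(STACK)
    for model, dollars in sorted(per_core.items(), key=lambda kv: kv[1]):
        print(f"{model:>6}: ${dollars:,.2f} per core")
    for model, frac in single_socket_discounts(STACK).items():
        print(f"{model} lists {frac:.1%} below {model[:-1]}")
```

The 7232P is skipped by `single_socket_discounts` because the table lists no 7232 to compare it with.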

AMD looks strong in Hadoop RT analytics (AMD says world record), Java throughput (AMD says 83% better), fluid dynamics (AMD says 2X better), and virtualization (AMD says up to 50% lower TCO; Twitter said 25%). Overall, AMD says it has achieved 80 world performance records. Intel will likely retain advantages in low-latency ML inference workloads that take advantage of Intel's DL Boost instructions, and it will also look very good in in-memory database workloads that use Optane DC persistent memory.

Nearly all of the benchmarks cited at AMD's event were published not by AMD but by OEMs and ODMs such as HPE (HPE says "37 world records"), Gigabyte, Lenovo (Lenovo says "16 world records"), and Supermicro. Twitter said it improved its TCO by 25% by moving to EPYC.

AMD's cloud service providers (CSPs), OEMs, ODMs, end customers, ISVs, and IHVs made a strong showing of support for the new EPYC platform at the event: CSPs (Azure, GCP), OEMs (Cray, Dell Technologies, Hewlett Packard Enterprise, Lenovo, Cisco, H3C) with "2X the platforms", end customers (Google, Twitter, U.S. Air Force), ISVs (VMware, Canonical, Red Hat, SUSE), ODMs (Gigabyte, Quanta, Supermicro), and IHVs (Broadcom, Micron, Xilinx, Samsung). Ecosystem supporters are important, especially for a company as out-resourced as AMD.

AMD didn't share many details about future server chips, but it did say that it has already completed the design of its next-generation Zen 3 x86 core, to be made in TSMC’s N7+ process for a server chip called Milan that is scheduled to ship next year. It is now designing a Zen 4 core for a follow-on server processor called Genoa.