Google Enhances Translation, Your Old Photos And Goes All In on Cloud Machine Learning

Google today announced enhancements to its translation service, a new PhotoScan app for smartphones, and cloud machine learning enhancements aimed at everyone from job applicants to corporate customers.

Better translation

In 10 years, Google Translate has gone from supporting just a few languages to 103, connecting strangers, reaching across language barriers. At the start, the company pioneered large-scale statistical machine translation, which uses statistical models to translate text. Today, Google introduced the next step in making Google Translate even better: Neural Machine Translation.

Neural Machine Translation has been generating exciting research results for a few years, and in September Google's researchers announced the company's version of this technique. At a high level, the Neural system translates whole sentences at a time, rather than just piece by piece. It uses this broader context to help it figure out the most relevant translation, which it then rearranges and adjusts to be more like a human speaking with proper grammar. Since it’s easier to understand each sentence, translated paragraphs and articles are a lot smoother and easier to read. And this is all possible because of an end-to-end learning system built on Neural Machine Translation, which basically means that the system learns over time to create better, more natural translations.

Today Google is putting Neural Machine Translation into action with a total of eight languages, each translated to and from English: French, German, Spanish, Portuguese, Chinese, Japanese, Korean and Turkish.

Google's goal is to eventually roll Neural Machine Translation out to all 103 languages and surfaces where you can access Google Translate.

And there’s more coming today too -- Google Cloud Platform, Google's public cloud service, offers Machine Learning APIs that make it easy for anyone to use Google's machine learning technology. Today, Google Cloud Platform is also making the system behind Neural Machine Translation available for all businesses through Google Cloud Translation API.

Now your photos look better than ever

We all have those old albums and boxes of photos, but we don’t take the time to digitize them because it’s just too hard to get it right.

So Google is introducing PhotoScan, a new, standalone app from Google Photos that easily scans just about any photo, free, from anywhere. It is available today for Android and iOS.

PhotoScan gets you great-looking digital copies in seconds: it detects edges, straightens the image, rotates it to the correct orientation, and removes glare. Scanned photos can be saved in one tap to Google Photos, where they can be organized, searched, shared, and safely backed up at high quality, for free.

Google is also rolling out three easy ways today to get great-looking photos in Google Photos: a new and improved auto enhance, new looks, and advanced editing tools.

12 new looks make edits based on the individual photo and its brightness, darkness, warmth, or saturation, before applying the style. All looks use machine intelligence to complement the content of your photo, and choosing one is just a matter of taste.

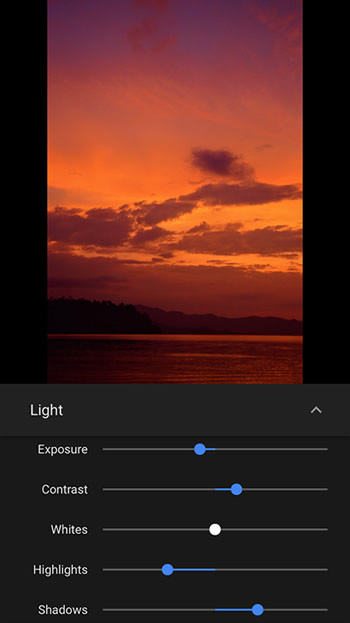

Third, Google's editing controls for Light and Color allow you to fine tune your photos, including highlights, shadows, and warmth. Deep Blue is particularly good for images of sea and sky where the color blue is the focal point.

The Google Photos app with the new photo editor will begin rolling out today across Android, iOS and the web.

Artificial intelligence group for Google Cloud

Google also today announced the formation of an artificial intelligence group for Google Cloud, the tech company's latest gambit to increase its market share in the lucrative cloud computing business.

Diane Greene, who leads Google's cloud business, announced the team at an event at the company's facilities in San Francisco. The group will be led by Fei-Fei Li, an artificial intelligence professor at Stanford University, and researcher Jia Li.

Google is also announcing a set of enhancements to its existing suite of cloud machine learning capabilities. The first is a new Jobs API aimed at helping match job applicants with the right openings. In addition, the company is slashing the prices on its Cloud Vision API.

On top of that, Google will offer GPUs in its cloud both through the company's managed services and its infrastructure-as-a-service product. Companies that want to roll their own machine learning systems and algorithms will be able to take advantage of the new hardware.

The new Google Cloud Jobs API gives companies a tool to help them match the best candidates to the right jobs, based on the skills that each person can bring to bear. The API is designed to work with a company's job search system. Users plug in their skills, experience, and location, then the API takes that information to match them with jobs it thinks would suit them.

Right now, the API is in public alpha for customers in the United States and Canada, with FedEx, CareerBuilder, and Dice all giving it a shot. Google hasn't provided a timeline for when it would be more broadly available.

Google is slashing the prices on one of its most popular machine-learning-based cloud services. The Google Cloud Vision API now costs about 80 percent less for companies to implement. That means it's easier for them to build apps that can recognize the content of images without needing to build the machine learning algorithms behind that functionality.

On top of that, the Cloud Natural Language API, which is used to parse sentences written by humans, is now generally available. That launch comes with additional features, like the ability to determine the sentiment of text on a sentence-by-sentence basis, rather than generating one sentiment score for the content of an entire document.
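To see why sentence-level sentiment can matter, here is a minimal toy sketch in Python. It does not call the Cloud Natural Language API and does not reflect how Google's service works internally; the word lists, the `score` function, and the sample review are all hypothetical, chosen only to show how a single document-wide score can hide mixed opinions that per-sentence scores reveal.

```python
import re

# Hypothetical toy lexicon (an assumption for illustration only);
# a real service would rely on a trained model, not word lists.
POSITIVE = {"great", "love", "fast"}
NEGATIVE = {"terrible", "slow", "broken"}


def score(text):
    """Count positive words minus negative words as a crude sentiment score."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def sentence_scores(document):
    """Split on sentence-ending punctuation and score each sentence on its own."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    return [(s, score(s)) for s in sentences]


review = "The camera is great. The battery is terrible."

# One score for the whole document: the praise and the complaint cancel out.
document_score = score(review)

# One score per sentence: the praise and the complaint stay visible.
per_sentence = sentence_scores(review)

print(document_score)
for sentence, value in per_sentence:
    print(value, sentence)
```

In this toy case the document-wide score is neutral, while the per-sentence scores show one positive and one negative statement, which is the kind of detail a single score per document would lose.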

Google is also bringing GPUs to its cloud infrastructure and managed machine learning offerings next year.

Users will be able to add GPUs to their infrastructure instances, for custom machine learning and high-performance computing tasks. It will be similar to compute instances that Microsoft and Amazon have introduced this year to give companies access to GPUs in the cloud.

In addition, Google Cloud Machine Learning will start taking advantage of the new hardware. The system, which lets users set up their own custom machine learning algorithms while managing the underlying infrastructure, will automatically tap into GPUs when the system believes that it's appropriate.

Google will be using AMD's Radeon-based FirePro S9300 x2 Server GPUs. That's a marked difference from Microsoft and Amazon, which are both using Nvidia GPUs.