HPE Sees Accelerators As a First Step Towards Making Quantum Computing a Reality

There is a lot of discussion about when we will see a quantum computer that can deliver reliable and repeatable results. Given the many complex problems we need to solve, HPE believes we can explore and advance quantum computing while also finding alternative ways to solve those problems by reimagining current computing architectures.

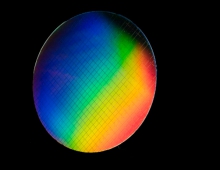

The need to quickly process massive amounts of data has led scientists and researchers to the concept of quantum computing. The goal is to eventually apply quantum principles to complex computing problems.

Quantum computing takes advantage of two key quantum-mechanical properties to generate this potential. The first is superposition, in which a bit can exist in a combination of states at the same time. A classical bit is always in exactly one state, 0 or 1. A quantum bit (qubit) can be in a superposition of 0 and 1, described by two complex amplitudes, and a register of n qubits is described by 2^n amplitudes at once, although measuring it still yields a single n-bit result. The second property is entanglement, which links qubits so that the state of one cannot be described independently of the others. When these two properties are harnessed together, they make it possible to run far more complex calculations than traditional computing models can handle today.
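To make superposition and entanglement concrete, here is a minimal classical simulation in Python. It assumes the standard state-vector model: an n-qubit register is a list of 2^n complex amplitudes, and gates are operations on that list. The helper names (`zero_state`, `apply_hadamard`, `apply_cnot`) are our own and do not come from any quantum library. A Hadamard gate puts the first qubit into superposition, and a CNOT gate then entangles it with the second.

```python
import math
from typing import List

# Minimal state-vector simulator (illustrative): an n-qubit state is a list of 2**n
# complex amplitudes. Convention chosen here: qubit 0 is the most significant bit.
State = List[complex]


def zero_state(n: int) -> State:
    """Return |00...0> for n qubits."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    return state


def apply_hadamard(state: State, n: int, target: int) -> State:
    """Hadamard gate on `target`: maps |0> to an equal superposition of |0> and |1>."""
    h = 1 / math.sqrt(2)
    mask = 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if (i & mask) == 0:  # visit each (target=0, target=1) pair exactly once
            a, b = state[i], state[i | mask]
            new[i] = h * (a + b)
            new[i | mask] = h * (a - b)
    return new


def apply_cnot(state: State, n: int, control: int, target: int) -> State:
    """CNOT gate: flip `target` in every basis state where `control` is 1."""
    cmask = 1 << (n - 1 - control)
    tmask = 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & cmask:
            new[i ^ tmask] = state[i]
    return new


n = 2
psi = zero_state(n)
psi = apply_hadamard(psi, n, 0)     # superposition: (|00> + |10>) / sqrt(2)
psi = apply_cnot(psi, n, 0, 1)      # entanglement:  (|00> + |11>) / sqrt(2)
probs = [abs(a) ** 2 for a in psi]  # measurement probabilities
for idx, p in enumerate(probs):
    print(f"|{idx:0{n}b}>: {p:.3f}")
```

Running it prints a probability of 0.500 for |00> and |11> and 0.000 for |01> and |10>. Each qubit on its own is equally likely to read 0 or 1, yet the two readings always agree, which is the correlation entanglement provides.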

Quantum computing will have a revolutionary impact on our understanding of quantum systems and will be well suited to intrinsically quantum problems. For example, it can help solve physics problems in which quantum mechanics and the interactions between materials or their properties are important. Because nature is quantum mechanical at the atomic level, a quantum computer can simulate it directly and could therefore help us find new materials or identify new chemical compounds for drug discovery. It holds the promise of solving, in seconds, some problems that would take a conventional computer billions of years.
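One reason simulating nature on a classical computer is so expensive is that the description of a quantum state grows exponentially. As a rough sketch, assuming a dense state-vector simulation that stores every amplitude as a double-precision complex number (16 bytes), the memory alone doubles with every added qubit. The function names here are illustrative.

```python
# Rough illustration: memory for a dense n-qubit state vector, assuming one
# double-precision complex amplitude (16 bytes) per basis state.
BYTES_PER_AMPLITUDE = 16  # 8-byte real part + 8-byte imaginary part


def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to hold all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


def human(nbytes: int) -> str:
    """Format a byte count with binary units (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]
    value = float(nbytes)
    for unit in units[:-1]:
        if value < 1024:
            return f"{value:.0f} {unit}"
        value /= 1024
    return f"{value:.0f} {units[-1]}"


for n in (10, 20, 30, 40, 50):
    print(f"{n} qubits -> {human(state_vector_bytes(n))}")
```

The output climbs from 16 KiB at 10 qubits to 16 PiB at 50 qubits. Practical simulators use compression and approximation to do better in many cases, so treat this as a worst-case illustration of the trend rather than a hard limit.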

With all the promise it holds, there are hurdles to overcome before quantum computers reach the point of practical application. Quantum computers must attain enough qubits to run complex algorithms at speed, and they must overcome operating errors caused by noise and decoherence. All of this must happen at very low temperatures, close to absolute zero (about -273 °C). These systems therefore require very special cooling units that consume a tremendous amount of energy. Finally, researchers must also find a way to efficiently translate data from classical bits to qubits.

Mark Potter, Senior Vice President and CTO, Hewlett Packard Enterprise & Director, Hewlett Packard Labs, believes that the industry could use classical computing devices to deliver real-world results around big data and deep learning much sooner than quantum computers can. These devices are called "accelerators" because we expect computers to "offload" certain tasks to them as necessary. But they are not what you think of as an accelerator today.

As an example, let's look at the algorithms underpinning deep learning, which is built on neural networks. Any deep learning system fundamentally performs massive amounts of arithmetic, most of it in the form of matrix multiplications. Using conventional methods, this process burns a lot of energy and takes a very long time. While quantum computing approaches this challenge through the two core principles of quantum mechanics, we are also looking at how the biosciences can inform computing architectures to solve it.
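The arithmetic burden is easy to see in a single fully connected layer, which computes y = Wx. Here is a minimal Python sketch, with made-up weights, that performs the multiplication the way a conventional processor would, one multiply-accumulate (MAC) at a time, and counts the steps.

```python
from typing import List, Tuple

# Illustrative sketch: one fully connected layer computes y = W x as a loop of
# multiply-accumulate steps. Weights and inputs are arbitrary example values.


def matvec_with_count(W: List[List[float]], x: List[float]) -> Tuple[List[float], int]:
    """Return W @ x together with the number of multiply-accumulates performed."""
    macs = 0
    y = []
    for row in W:
        acc = 0.0
        for w, xi in zip(row, x):
            acc += w * xi  # one multiply and one add
            macs += 1
        y.append(acc)
    return y, macs


W = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
x = [1.0, 0.5, -1.0]
y, macs = matvec_with_count(W, x)
print(y, macs)  # [-1.0, 0.5] 6
```

Even this toy 2 × 3 layer needs 6 multiply-accumulates. A layer with 4,096 inputs and 4,096 outputs needs 4,096 × 4,096 = 16,777,216 of them for every input vector, and a deep network stacks many such layers.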

Taking a cue from how the brain works, HPE has developed the Dot Product Engine, which could have an impact on big data and deep learning in just a few years. The Dot Product Engine uses memristors, circuit devices that behave somewhat like synapses in the brain, to perform matrix-vector multiplication in the analog domain at room temperature. Input values are applied as voltages and matrix values are stored as memristor conductances, so by Ohm's law and Kirchhoff's current law the current collected on each output wire is one dot product of the result. This means the answer appears almost instantly, in a single step, instead of after tens of thousands of program steps. This promises to cut the time and energy required to obtain an answer by multiple orders of magnitude. HPE's models show we can reach hundreds of trillions of calculations per watt. "It is kind of strange to think we are really talking about an analog computer," Potter says.
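The physics behind the single-step multiplication can be emulated in a few lines of Python. This is an idealized model written for illustration, not HPE's design: it assumes perfect devices, no wire resistance or noise, and non-negative matrix entries stored directly as conductances. Each crosspoint contributes a current equal to voltage times conductance, and the currents on each column wire add up.

```python
from typing import List

# Idealized memristor crossbar (illustrative assumptions: ideal devices, no wire
# resistance, no noise, non-negative weights stored directly as conductances).


def crossbar_currents(G: List[List[float]], V: List[float]) -> List[float]:
    """Column currents I_j = sum_i V_i * G[i][j].

    Each crosspoint passes V_i * G[i][j] amperes (Ohm's law), and currents that share
    a column wire add up (Kirchhoff's current law). Hardware does this all at once;
    the loops below only emulate that physics in software.
    """
    n_cols = len(G[0])
    currents = [0.0] * n_cols
    for i, v in enumerate(V):
        for j in range(n_cols):
            currents[j] += v * G[i][j]
    return currents


# Conductances in siemens: rows are input lines, columns are output lines.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]
V = [0.2, 0.1, 0.3]  # input voltages in volts
I = crossbar_currents(G, V)
print([f"{c * 1e6:.2f} uA" for c in I])  # ['2.00 uA', '2.60 uA']
```

In hardware the two column currents appear simultaneously; the nested loop exists only because software has to emulate that parallel physics step by step. Real analog crossbars also need digital-to-analog and analog-to-digital conversion and a scheme for negative weights, which this sketch leaves out.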

Another promising accelerator is the Hewlett Packard Labs team's work to replace electrons completely and compute with photons instead. In an optical system, circuits process information using photons (light) instead of electrical charges. Consider NP-hard problems: for these, no known algorithm finds an exact solution in time that grows only polynomially with the size of the input, so the computational resources required explode as the problem grows. A classic example is the traveling salesman problem, in which a salesman must find the shortest route that visits each of many cities. A brute-force approach on a conventional computer checks each possible route in turn and can't guarantee the best answer until it has checked every combination. With optical computing, the problem is mapped onto the physical system: light runs through all the nodes at once, and every node works at the same time to find the most efficient connection path, which corresponds to the lowest energy state of the whole system.
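Here is the brute-force strategy just described, as a short Python sketch with made-up city coordinates. It fixes the starting city, tries every ordering of the remaining cities, and keeps the shortest closed tour.

```python
import itertools
import math
from typing import List, Sequence, Tuple

# Brute-force traveling salesman (illustrative). Fixing city 0 as the start, it checks
# (n - 1)! orderings; each distinct tour is visited twice, once in each direction.
Point = Tuple[float, float]


def tour_length(cities: Sequence[Point], order: Sequence[int]) -> float:
    """Length of a closed tour visiting cities in `order` and returning to the start."""
    return sum(
        math.dist(cities[order[k]], cities[order[(k + 1) % len(order)]])
        for k in range(len(order))
    )


def brute_force_tsp(cities: Sequence[Point]) -> Tuple[List[int], float]:
    """Try every tour that starts at city 0 and return the shortest one."""
    best_order: List[int] = []
    best_len = math.inf
    for perm in itertools.permutations(range(1, len(cities))):
        order = [0, *perm]
        length = tour_length(cities, order)
        if length < best_len:
            best_order, best_len = order, length
    return best_order, best_len


cities = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]  # corners of a unit square
order, length = brute_force_tsp(cities)
print(order, length)
```

For the four corners of a unit square it returns the perimeter tour [0, 1, 2, 3] with length 4.0. The catch is the count: n cities have (n - 1)!/2 distinct tours, which is 12 for 5 cities, 181,440 for 10 and about 6 × 10^16 for 20, so exhaustive search stops being practical very quickly.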

"While we don't know quite when it will be possible, quantum computers will likely be capable of solving some of these types of NP-hard problems. At the same time, our research and development of a Coherent Ising Machine, an optical system that operates at room temperature, could be available in as little as 3 to 5 years. This system has an important advantage when you consider that 75 percent of data in the future will be generated at the edge. It is very easy to imagine a super-efficient photonic accelerator or the Dot Product Engine on the edge running alongside other systems to turn data into action and insights right where the data is created," Potter added.
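A Coherent Ising Machine searches for the lowest-energy state of an Ising model: spins s_i that are each -1 or +1, couplings J_ij between pairs of spins, and energy H(s) = -Σ J_ij s_i s_j (sign conventions vary between sources). Problems such as max-cut map onto this form directly; others, including the traveling salesman problem, need more elaborate encodings. The Python sketch below only illustrates the objective the machine minimizes. It finds the minimum by classical exhaustive search over all 2^n spin configurations, which is exactly the exponential work an Ising machine aims to avoid, and the function names are our own.

```python
import itertools
from typing import Dict, List, Tuple

# Ising model sketch: spins in {-1, +1}, couplings J[(i, j)], energy
# H(s) = -sum_{(i, j)} J_ij * s_i * s_j. Here the minimum is found by brute force.
Couplings = Dict[Tuple[int, int], float]


def ising_energy(spins: Tuple[int, ...], J: Couplings) -> float:
    """Energy of one spin configuration under couplings J."""
    return -sum(w * spins[i] * spins[j] for (i, j), w in J.items())


def ground_states(n: int, J: Couplings) -> Tuple[float, List[Tuple[int, ...]]]:
    """Enumerate all 2**n configurations; return the minimum energy and every state reaching it."""
    best = float("inf")
    winners: List[Tuple[int, ...]] = []
    for spins in itertools.product((-1, 1), repeat=n):
        e = ising_energy(spins, J)
        if e < best:
            best, winners = e, [spins]
        elif e == best:
            winners.append(spins)
    return best, winners


# Four spins on a ring with antiferromagnetic couplings (J = -1): neighbours prefer
# opposite signs, which is the Ising form of max-cut on the ring.
J = {(0, 1): -1.0, (1, 2): -1.0, (2, 3): -1.0, (3, 0): -1.0}
energy, states = ground_states(4, J)
print(energy, states)
```

On this four-spin ring the two alternating configurations tie for the ground-state energy of -4.0, matching the two ways to cut every edge of the ring.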

Potter believes that the best use of quantum computers in the future will involve a hybrid approach, in which a classical computer uses a quantum computer to accelerate deeply quantum problems. To make this hybrid approach possible, we will need to integrate quantum computers with the rest of our IT systems, bridging the gap between two kinds of computers that speak completely different languages. Many people are working on this challenge, and HPE has developed an architecture called Memory-Driven Computing that is ready to support these accelerators now. Memory-Driven Computing puts a nearly limitless pool of memory at the core of the system. This allows compute devices to be combined in virtually unlimited ways, with every component able to communicate with all the others at the speed of memory, with sub-microsecond latency. Importantly, this architecture is open and will support any accelerator, or any device for that matter, developed by our team or by others.
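The hybrid, memory-centric pattern can be sketched in a few lines of Python. Everything here is hypothetical and illustrative: `Host`, `offload` and the kernel functions are invented names, not an HPE or Memory-Driven Computing API, and a Python dict stands in for the shared memory pool. The point is the shape of the design: devices read inputs from and write results to one shared pool, and the host sends a task to an accelerator when one is registered, otherwise running it on the CPU.

```python
from typing import Any, Callable, Dict

# Hypothetical sketch: names are invented for illustration, not an HPE API.
Pool = Dict[str, Any]            # stand-in for one memory pool shared by all devices
Kernel = Callable[[Pool], None]  # a kernel reads inputs from and writes results to the pool


def cpu_matvec(pool: Pool) -> None:
    """General-purpose fallback on the classical host: y = W x."""
    pool["y"] = [sum(w * v for w, v in zip(row, pool["x"])) for row in pool["W"]]


def accelerator_matvec(pool: Pool) -> None:
    """Placeholder for a specialized device such as a dot-product accelerator, emulated in software."""
    W, x = pool["W"], pool["x"]
    pool["y"] = [sum(w * v for w, v in zip(row, x)) for row in W]


class Host:
    """Classical host that offloads a task to a registered accelerator when one exists."""

    def __init__(self) -> None:
        self.pool: Pool = {}
        self.cpu_kernels: Dict[str, Kernel] = {}
        self.accelerators: Dict[str, Kernel] = {}

    def offload(self, task: str) -> str:
        # Prefer an accelerator for this task; otherwise fall back to the CPU kernel.
        kernel = self.accelerators.get(task) or self.cpu_kernels[task]
        kernel(self.pool)  # no copying: the kernel works directly on the shared pool
        return kernel.__name__


host = Host()
host.cpu_kernels["matvec"] = cpu_matvec
host.pool.update(W=[[1.0, 2.0], [3.0, 4.0]], x=[1.0, 1.0])
print(host.offload("matvec"), host.pool["y"])  # cpu_matvec [3.0, 7.0]

host.accelerators["matvec"] = accelerator_matvec
print(host.offload("matvec"), host.pool["y"])  # accelerator_matvec [3.0, 7.0]
```

Because results land in the shared pool rather than in a device's private memory, swapping the CPU kernel for an accelerator does not change how the rest of the program finds its data, which is the plug-in role the architecture is meant to play for new accelerators.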

HPE plans to invest in research around accelerators such as the Dot Product Engine and optical computing, as well as in Memory-Driven Computing, to provide faster, more effective and practical ways to solve tomorrow's data challenges. The company is also working openly with industry partners to create an entirely new fabric to connect all this data.