Microsoft Brings Windows Server OS to ARM Chips

Microsoft is planning to use chips based on ARM Holdings' architecture in the machines that run its cloud services, potentially imperiling Intel's longtime dominance in the market for data-center processors.

Microsoft, in collaboration with Qualcomm and Cavium, has developed a version of its Windows operating system for servers using ARM processors. The servers run on Qualcomm's 10-nanometer Centriq 2400, an ARM-based chip designed for cloud servers.

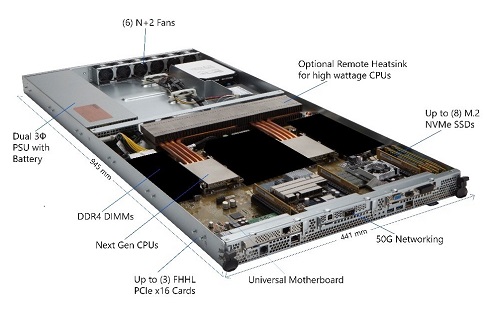

To enable a variety of cloud workloads to run on the Microsoft Azure cloud platform, Qualcomm Datacenter Technologies (QDT) today submitted a server specification built on its 10-nanometer Qualcomm Centriq 2400 platform to the Open Compute Project (OCP). The Qualcomm Centriq 2400 Open Compute Motherboard server specification is based on the latest version of Microsoft's Project Olympus. QDT also conducted the first public demonstration of Windows Server, developed for Microsoft's internal use, running on the Qualcomm Centriq 2400 processor.

The Qualcomm Centriq 2400 Open Compute Motherboard pairs QDT's recently announced 10nm, 48-core server processor with advanced interfaces for memory, network, and peripherals, enabling the OCP community to access and design ARM-based servers for the most common cloud compute workloads. The board fits into a standard 1U server system and can be paired with compute accelerators, multi-host NICs, and leading-edge storage technologies such as NVMe to optimize performance for specific workloads.

Microsoft is now testing these chips for tasks like search, storage, machine learning and big data, said Jason Zander, vice president of Microsoft's Azure cloud division.

"It's not deployed into production yet, but that is the next logical step," Zander said. "This is a significant commitment on behalf of Microsoft. We wouldn't even bring something to a conference if we didn't think this was a committed project and something that's part of our road map."

The news comes from the Open Compute Project Summit held in Santa Clara, California. Microsoft is announcing new partners, including AMD and Nvidia, and new components for its server design, first unveiled last year, as it moves closer to putting the machines into its own data centers later this year.

The server design, called Project Olympus, and Microsoft's work with ARM-based processors reflect the software maker's push to use hardware innovations to cut costs, boost flexibility and stay competitive with Amazon.com and Google, which also provide computing power, software and storage via the internet.

While Intel is among the companies making components to work with the Project Olympus design, ARM-chip makers such as Qualcomm and Cavium are also in the running.

One of those planned components is an add-in box made by Microsoft and Nvidia that plugs into the server to enable powerful processing for artificial intelligence tasks. The device, called the HGX-1 hyperscale GPU accelerator, is powered by eight NVIDIA Tesla P100 GPUs and features a new switching design based on NVIDIA NVLink interconnect technology and the PCIe standard, enabling a CPU to dynamically connect to any number of GPUs. The system lets Microsoft and other cloud providers more easily and cheaply host AI applications on their cloud networks.

"AI is a new computing model that requires a new architecture," said Jen-Hsun Huang, founder and chief executive officer of NVIDIA. "The HGX-1 hyperscale GPU accelerator will do for AI cloud computing what the ATX standard did to make PCs pervasive today. It will enable cloud-service providers to easily adopt NVIDIA GPUs to meet surging demand for AI computing."

While the AI device announced today was developed with Nvidia, Microsoft said future updates could add products using Intel's Nervana chips.

Microsoft is also collaborating with AMD to incorporate the cloud delivery features of AMD's next-generation "Naples" processor into Project Olympus.

"Next quarter AMD will bring hardware innovation back into the datacenter and server markets with our high-performance 'Naples' x86 CPU, that was designed with the needs of cloud providers, enterprise OEMs and customers in mind," said Scott Aylor, corporate vice president of enterprise systems, AMD. "Today we are proud to continue our support for the Open Compute Project by announcing our collaboration on Microsoft's Project Olympus."

Designed to securely scale across the cloud datacenter and traditional on-premises server configurations, "Naples" delivers the "Zen" x86 processing engine in configurations of up to 32 cores. It offers access to vast amounts of memory and on-chip support for high-speed input/output channels in a single SoC. The first "Naples" processors are scheduled to be available in Q2.