New NVIDIA Tesla T4 GPU and New TensorRT Software Enable Intelligent Voice, Video, Image and Recommendation Services

At the GPU Technology Conference in Tokyo, NVIDIA founder and CEO Jensen Huang announced the new NVIDIA Tesla T4 GPU and TensorRT software to enable intelligent voice, video, image and recommendation services.

The NVIDIA TensorRT Hyperscale Inference Platform features NVIDIA Tesla T4 GPUs based on the company's NVIDIA Turing architecture and a set of new inference software.

The platform enables hyperscale data centers to offer new services, such as enhanced natural language interactions and direct answers to search queries rather than a list of possible results.

To optimize the data center for maximum throughput and server utilization, the NVIDIA TensorRT Hyperscale Platform includes both real-time inference software and Tesla T4 GPUs, which process queries up to 40x faster than CPUs alone.

NVIDIA estimates that the AI inference industry is poised to grow in the next five years into a $20 billion market.

The NVIDIA TensorRT Hyperscale Platform includes:

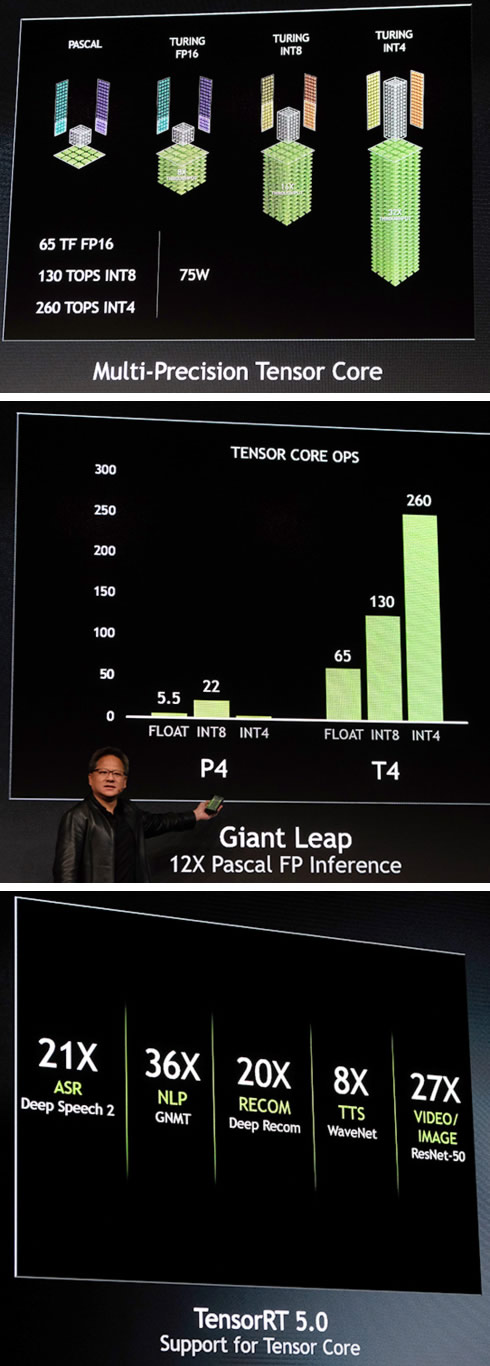

- NVIDIA Tesla T4 GPU - Featuring 320 Turing Tensor Cores and 2,560 CUDA cores, this new GPU provides high performance with flexible, multi-precision capabilities, from FP32 to FP16 to INT8, as well as INT4. Packaged in a 75-watt, small PCIe form factor that fits into most servers, it offers peak performance of 65 teraflops for FP16, 130 TOPS (trillion operations per second) for INT8 and 260 TOPS for INT4.

- NVIDIA TensorRT 5 - An inference optimizer and runtime engine, NVIDIA TensorRT 5 supports Turing Tensor Cores and expands the set of neural network optimizations for multi-precision workloads.

- NVIDIA TensorRT inference server - This containerized microservice software enables applications to use AI models in data center production. Freely available from the NVIDIA GPU Cloud container registry, it maximizes data center throughput and GPU utilization, supports all popular AI models and frameworks, and integrates with Kubernetes and Docker.
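To make the multi-precision idea concrete, here is a minimal sketch of symmetric INT8 quantization in plain Python: floating-point values are mapped to integers in [-127, 127] using one shared scale factor, then mapped back. This is a textbook illustration only, not TensorRT's calibration or optimization procedure; the function names `quantize_int8` and `dequantize` and the sample weights are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    # Avoid division by zero when every value is 0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the quantized integers."""
    return [q * scale for q in quantized]


# Hypothetical sample weights, chosen only for illustration.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

for original, approx in zip(weights, restored):
    print(f"{original:+.4f} -> {approx:+.4f}")
```

Each restored value differs from the original by at most about half of `scale`. That bounded error is the trade-off that lower-precision inference makes in exchange for higher throughput.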

NVIDIA Tesla T4 Specifications

- Turing Tensor Cores: 320

- NVIDIA CUDA cores: 2,560

- Single Precision Performance (FP32): 8.1 TFLOPS

- Mixed Precision (FP16/FP32): 65 FP16 TFLOPS

- INT8 Precision: 130 INT8 TOPS

- INT4 Precision: 260 INT4 TOPS

- Interconnect: Gen3 x16 PCIe

- Memory: 16 GB GDDR6, 320+ GB/s

- Power: 75 watts
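The figures in the specification list can be combined into a few derived ratios. The sketch below uses only the numbers listed above and treats the "320+ GB/s" memory bandwidth as exactly 320 GB/s, which is an assumption. The helper names are hypothetical, and the results are peak-rate arithmetic, not measured performance.

```python
# Peak figures copied from the specification list.
T4_PEAK = {
    "FP32": 8.1,    # TFLOPS
    "FP16": 65.0,   # TFLOPS (mixed precision FP16/FP32)
    "INT8": 130.0,  # TOPS
    "INT4": 260.0,  # TOPS
}
POWER_WATTS = 75.0
# Assumption: "320+ GB/s" is treated as exactly 320 GB/s.
MEMORY_BANDWIDTH_GB_S = 320.0


def throughput_per_watt(peak_tera_ops, watts=POWER_WATTS):
    """Peak tera-operations per second delivered per watt."""
    return peak_tera_ops / watts


def speedup_over_fp32(precision):
    """Ratio of a precision's peak rate to the FP32 peak rate."""
    return T4_PEAK[precision] / T4_PEAK["FP32"]


def ops_per_byte(peak_tera_ops, bandwidth_gb_s=MEMORY_BANDWIDTH_GB_S):
    """Operations needed per byte of memory traffic to reach the peak rate."""
    return (peak_tera_ops * 1e12) / (bandwidth_gb_s * 1e9)


for name, peak in T4_PEAK.items():
    print(
        f"{name}: {throughput_per_watt(peak):.2f} tera-ops/W, "
        f"{speedup_over_fp32(name):.1f}x FP32 peak, "
        f"{ops_per_byte(peak):.0f} ops/byte to reach peak"
    )
```

The peak rate doubles at each step from FP16 to INT8 to INT4. The ops-per-byte figure indicates how much arithmetic a workload must perform on each byte fetched from memory before compute, rather than memory bandwidth, becomes the limit.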

Autonomous machines

Huang also announced NVIDIA AGX, a series of embedded AI high-performance computers built around NVIDIA's new Xavier processors, the first processors built for autonomous machines.

Huang announced the availability of several developer kits that will let developers put Xavier to work: the Jetson AGX Xavier devkit for autonomous machines such as robots and drones, and the DRIVE AGX Xavier devkit for autonomous vehicles.

Yamaha Motor Co. has selected NVIDIA Jetson AGX Xavier as the development system for its upcoming line of autonomous machines.

Huang also announced commercial vehicle manufacturer Isuzu is collaborating with NVIDIA to build AI technologies into its autonomous trucks.

Huang gave a shout-out to Fujifilm Corp., the first company in Japan to adopt the NVIDIA DGX-2 AI supercomputer, which it's using to build a supercomputing cluster to accelerate the development of AI technology for fields such as healthcare and medical imaging systems.

Huang also announced telecom giant NTT Group will adopt NVIDIA's AI platform for its company-wide "corevo" AI initiative.

Huang unveiled the NVIDIA Clara AGX - a computing architecture based on the NVIDIA Xavier AI computing module and NVIDIA Turing GPUs - and the Clara software development kit for developers to create a wide range of AI-powered applications for processing data from medical devices.