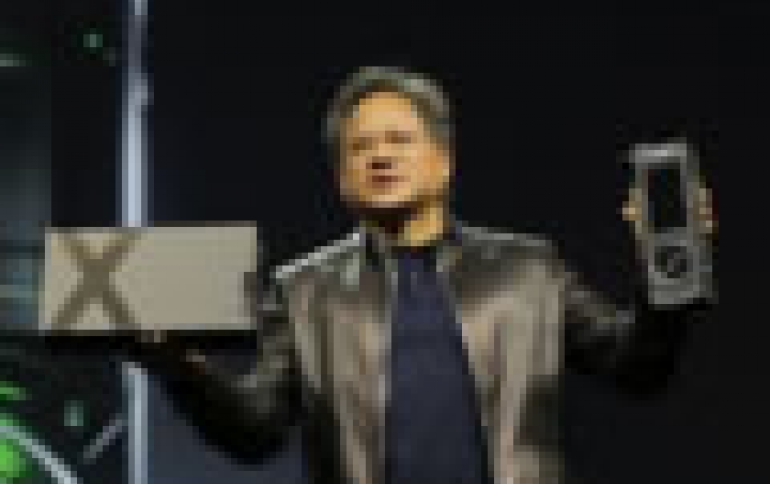

Nvidia Unveils The Titan X GPU, New DIGITS Training System and DevBox

Nvidia announced its next flagship graphics card, the $999 Titan X, which packs eight billion transistors. In addition, the company provided details about its next-generation Pascal GPU architecture, the DIGITS Deep Learning GPU Training System for data scientists and the DIGITS DevBox hardware appliance for deep learning. The world’s "most advanced" GPU will feature a massive increase in CUDA cores and 12GB of RAM, the company said Tuesday during the kick-off keynote for its GPU Technology Conference in San Jose.

Nvidia CEO Jen-Hsun Huang said the company’s newest card would pack 3,072 CUDA cores, which is 50% more than the 2,048 in its previous top-end gaming GPU, the GeForce GTX 980. Huang also pegged the single-precision floating point performance at 7 TFLOPS, which is a significant increase over the GeForce GTX Titan Black’s 5.1 TFLOPS.

Huang added that the card would hit roughly 200 GFLOPS in double-precision floating point performance. That puts it on par with the company’s discontinued GeForce GTX 780 Ti in double-precision performance, meaning it's more of a gaming card than a card designed for scientific calculations. The original GeForce Titan, for example, could hit 1,500 GFLOPS.

With that processing power and 336.5GB/s of memory bandwidth, the TITAN X can rip through the millions of pieces of data used to train deep neural networks. For example, Huang said TITAN X took less than three days to train AlexNet, an industry-standard model, on the 1.2-million-image ImageNet dataset, compared with over 40 days for a 16-core CPU. The original GeForce Titan would take six days and its replacement, last year’s GeForce Titan Black, would take five days.
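To put the quoted training times side by side, the short sketch below turns them into speedup factors relative to the CPU baseline. The day counts come from the keynote figures above; because "over 40 days" and "less than three days" are bounds rather than measurements, the ratios should be read as rough estimates, and the `speedup_over` helper is purely illustrative.

```python
# AlexNet-on-ImageNet training times in days, as quoted in the keynote.
# "Over 40" and "less than three" are bounds, so the ratios are approximate.
TRAINING_DAYS = {
    "16-core CPU": 40.0,
    "GTX Titan": 6.0,
    "GTX Titan Black": 5.0,
    "Titan X": 3.0,
}


def speedup_over(baseline, times):
    """Return how many times faster each system is than the baseline."""
    base = times[baseline]
    return {name: base / days for name, days in times.items()}


for name, factor in speedup_over("16-core CPU", TRAINING_DAYS).items():
    print(f"{name:16s} {factor:5.1f}x")
```

On these numbers the Titan X is at least 13 times faster than the CPU system and about twice as fast as the original Titan.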

Huang also showed a real-time animation called Red Kite by Epic Games running on the TITAN X. It captured 100 square miles of 3D graphics, depicting a yawning valley with 13 million plants. Any given frame was 20-30 million polygons and showed physically based rendering.

Huang added that the latest AAA titles are "breathtaking" on TITAN X in 4K. Middle-earth: Shadow of Mordor, for example, runs at 40 frames per second on high settings with FXAA enabled, compared with 30fps on the GeForce GTX 980, released in September.

Titan X uses a new Maxwell GPU, the GM200. Compared to the GM204, the new GPU has 50% more CUDA cores, 50% more memory bandwidth, 50% more ROPs, and a die that is almost 50% larger.

Feeding GM200 is a 384-bit memory bus driving 12GB of GDDR5 clocked at 7GHz. The 12GB of VRAM continues NVIDIA’s trend of equipping Titan cards with as much VRAM as they can handle, and should ensure that the GTX Titan X has VRAM to spare for years to come.
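The bandwidth figure follows from the bus width and the memory data rate. The sketch below is a back-of-the-envelope check, assuming the "7GHz" figure is the effective per-pin data rate in Gbit/s; the function name is made up for illustration.

```python
def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps):
    """Peak bandwidth in GB/s: bytes moved per transfer times transfers per ns."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gbps


# Bus widths and memory clocks as listed for the two Maxwell cards.
titan_x = memory_bandwidth_gbs(384, 7.0)
gtx_980 = memory_bandwidth_gbs(256, 7.0)

print(f"Titan X: {titan_x:.1f} GB/s")
print(f"GTX 980: {gtx_980:.1f} GB/s")
print(f"Ratio:   {titan_x / gtx_980:.2f}x")
```

This gives 336 GB/s for the Titan X, within rounding of the quoted 336.5GB/s (the exact memory clock is presumably slightly above the rounded 7GHz), and it reproduces the 50% bandwidth advantage over the GM204-based GTX 980.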

As for clockspeeds, the base clock rises to 1GHz while the boost clock is 1075MHz. This is roughly 100MHz (~10%) ahead of the GTX Titan Black and will further push the GTX Titan X ahead.

NVIDIA’s official TDP for GTX Titan X is 250W, the same as the previous single-GPU Titan cards.

You should expect an average performance increase over the GTX 980 of more than 30%. Compared to its immediate predecessors such as the GTX 780 Ti and the original GTX Titan, the GTX Titan X represents a significant 50%-60% increase in performance.

| Specification Comparison | GTX Titan X | GTX 980 | GTX Titan Black | GTX Titan |
| --- | --- | --- | --- | --- |
| CUDA Cores | 3072 | 2048 | 2880 | 2688 |
| Texture Units | 192 | 128 | 240 | 224 |
| ROPs | 96 | 64 | 48 | 48 |
| Core Clock | 1000MHz | 1126MHz | 889MHz | 837MHz |
| Boost Clock | 1075MHz | 1216MHz | 980MHz | 876MHz |
| Memory Clock | 7GHz GDDR5 | 7GHz GDDR5 | 7GHz GDDR5 | 6GHz GDDR5 |
| Memory Bus Width | 384-bit | 256-bit | 384-bit | 384-bit |
| VRAM | 12GB | 4GB | 6GB | 6GB |
| FP64 | 1/32 FP32 | 1/32 FP32 | 1/3 FP32 | 1/3 FP32 |
| TDP | 250W | 165W | 250W | 250W |
| GPU | GM200 | GM204 | GK110B | GK110 |
| Architecture | Maxwell 2 | Maxwell 2 | Kepler | Kepler |
| Transistor Count | 8B | 5.2B | 7.1B | 7.1B |
| Manufacturing Process | TSMC 28nm | TSMC 28nm | TSMC 28nm | TSMC 28nm |
| Price | $999 | $549 | $999 | $999 |
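The throughput figures quoted earlier can be roughly reproduced from the table. The sketch below assumes the usual peak-rate rule of thumb, in which each CUDA core completes one fused multiply-add (two floating point operations) per clock, and it uses the listed base clocks; the `Gpu` class is an illustrative helper, not anything from Nvidia.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    cuda_cores: int
    base_clock_mhz: int
    fp64_ratio: float  # FP64 rate as a fraction of the FP32 rate

    def fp32_gflops(self):
        # Assumption: one FMA (2 FLOPs) per CUDA core per clock.
        return 2 * self.cuda_cores * self.base_clock_mhz / 1000

    def fp64_gflops(self):
        return self.fp32_gflops() * self.fp64_ratio


# Core counts, base clocks and FP64 ratios taken from the comparison table.
CARDS = [
    Gpu("GTX Titan X", 3072, 1000, 1 / 32),
    Gpu("GTX 980", 2048, 1126, 1 / 32),
    Gpu("GTX Titan Black", 2880, 889, 1 / 3),
    Gpu("GTX Titan", 2688, 837, 1 / 3),
]

for card in CARDS:
    print(f"{card.name:16s} FP32 {card.fp32_gflops():6.0f} GFLOPS"
          f"   FP64 {card.fp64_gflops():6.0f} GFLOPS")
```

Under these assumptions the original Titan lands at about 1,500 GFLOPS in double precision and the Titan Black at about 5.1 TFLOPS in single precision, matching the figures above. The Titan X comes out at roughly 6.1 TFLOPS single precision and 192 GFLOPS double precision at its base clock, so the quoted 7 TFLOPS would imply a sustained clock somewhat above the listed boost clock.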

With today’s official reveal of the Titan X and its massive 12GB frame buffer, Nvidia has now introduced four graphics cards into the market since AMD’s Radeon 285 in August 2014.

AMD is currently offering the powerful Radeon 295X2, a liquid-cooled enthusiast part ideal for 4K gaming, and less expensive GPUs like the Radeon 285 offer great performance for the price. But Nvidia keeps hitting us over the head with Maxwell’s excellent power efficiency and evolving graphics technologies like GameWorks.

Huang, NVIDIA’s CEO and co-founder, also showcased two new technologies that will fuel deep learning:

- DIGITS Deep Learning GPU Training System – a software application that makes it far easier for data scientists and researchers to quickly create high-quality deep neural networks.

- DIGITS DevBox – the "world’s fastest" deskside deep learning appliance - purpose-built for the task, powered by four TITAN X GPUs and loaded with the intuitive-to-use DIGITS training system.

Using deep neural networks to train computers to teach themselves how to classify and recognize objects can be an onerous, time-consuming task. Huang said that the DIGITS Deep Learning GPU Training System software changes that by giving users what they need from start to finish to build the best possible deep neural nets.

Available for download at http://developer.nvidia.com/digits, it’s the first all-in-one graphical system for designing, training and validating deep neural networks for image classification.

DIGITS guides users through the process of setting up, configuring and training deep neural networks – handling the heavy lifting so that scientists can focus on the research and results.

Preparing and loading training data sets with DIGITS – whether on a local system or from the web – is simple, thanks to its user interface and workflow management capabilities. It’s the first system of its kind to provide real-time monitoring and visualization, so users can fine-tune their work. And it supports the GPU-accelerated version of Caffe, the framework used by many data scientists and researchers today to build neural nets.

Built by the NVIDIA deep learning engineering team for its own R&D work, the DIGITS DevBox is an all-in-one powerhouse of a platform for speeding up deep learning research.

Starting with its four TITAN X GPUs, every component of the DevBox – from memory to I/O to power – has been optimized to deliver efficient performance for deep learning research. It comes preinstalled with all the software data scientists and researchers require to develop their own deep neural networks. This includes the DIGITS software package, the most popular deep learning frameworks – Caffe, Theano and Torch – and cuDNN 2.0, NVIDIA’s GPU-accelerated deep learning library.

And it’s all wrapped up in a cool-running package that fits under a desk and plugs into an ordinary wall socket.

DIGITS DevBox includes:

- Up to 4 dual-slot GPUs

- Up to 64 GB DDR4

- ASUS X99 (8 PCIe slots) + Core i7

- 2 x 48 port gen3 PCIe (PEX8748) + CPU for PCIe

- Up to 3x3 TB RAID 5 + M.2 SATA + SSD

- Up to 1500W deskside

- Ubuntu 14.04

- NVIDIA-qualified driver

- NVIDIA CUDA Toolkit 7.0

- RAID setup with SSD/M.2 caching

- Caffe, Theano, Torch, cuda-convnet2

According to Nvidia, early results of multi-GPU training show the DIGITS DevBox delivers almost 4X higher performance than a single TITAN X on key deep learning benchmarks. Training AlexNet can be completed in only 13 hours with the DIGITS DevBox, compared to over 2 days with the best single GPU PC, or over a month with a CPU-only system.
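The "almost 4X" claim can be checked against the quoted training times. The sketch below treats "over 2 days" as exactly 48 hours, which is an assumption that makes the result a lower bound, and computes both the speedup and the per-GPU scaling efficiency; `scaling_efficiency` is an illustrative helper.

```python
def scaling_efficiency(single_gpu_hours, multi_gpu_hours, gpu_count):
    """Return (speedup, efficiency), where efficiency is speedup per GPU."""
    speedup = single_gpu_hours / multi_gpu_hours
    return speedup, speedup / gpu_count


# Assumption: "over 2 days" on one GPU is taken as 48 hours.
speedup, efficiency = scaling_efficiency(48.0, 13.0, 4)

print(f"Speedup over one GPU: {speedup:.2f}x")
print(f"Scaling efficiency:   {efficiency:.0%}")
```

A speedup of about 3.7 across four GPUs corresponds to better than 90% scaling efficiency, which is consistent with Nvidia's "almost 4X" description.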

DIGITS DevBox isn’t intended to be sold widely – it’s for researchers, not the mass market. Pricing starts at $15,000, with availability in May 2015.

Nvidia also announced pricing and availability for its NVIDIA DRIVE PX development platform. Introduced at the Consumer Electronics Show in January, NVIDIA DRIVE PX is a self-driving car computer designed to slip the power of deep neural networks into real-world cars.

Nvidia will make the DRIVE PX development platform available in May to automakers, Tier 1 automotive suppliers and research institutions, to get cars on the road toward driving themselves.

DRIVE PX’s twin NVIDIA Tegra X1 processors deliver 2.3 teraflops of performance. Yet each individual superchip is no bigger than a thumbnail. That’s enough to weave together data streaming in from 12 camera inputs and enable a wide range of advanced driver assistance features to run simultaneously – including surround view, collision avoidance, pedestrian detection, mirror-less operation, cross-traffic monitoring and driver-state monitoring.

Yet DRIVE PX is built to tap into a new technology called "deep learning" to give it capabilities far beyond what you can stuff into any of today’s passenger cars. That’s because today’s advanced driver assistance systems have evolved around the principle of classifying objects that the car’s sensors would detect.

The DRIVE PX development platform includes Nvidia's new DIGITS deep neural network software as well as video capture and video processing libraries.

Last but not least, Huang announced that Nvidia's new Pascal GPU architecture, set to debut next year, will accelerate deep learning applications 10X beyond the speed of its current-generation Maxwell processors.

"It will benefit from a billion dollars worth of refinement because of R&D done over the last three years," he told the audience.

Pascal GPUs will have three key design features that will result in faster, more accurate training of richer deep neural networks – the human cortex-like data structures that serve as the foundation of deep learning research.

Along with up to 32GB of memory – 2.7X as much as the newly launched NVIDIA flagship, the GeForce GTX TITAN X – Pascal will feature mixed-precision computing. It will have 3D memory, resulting in up to 5X improvement in deep learning applications. And it will feature NVLink – NVIDIA’s high-speed interconnect, which links together two or more GPUs – that will lead to a total 10X improvement in deep learning.

Mixed-precision computing enables Pascal architecture-based GPUs to compute at 16-bit floating point accuracy at twice the rate of 32-bit floating point accuracy.
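The trade-off behind mixed precision is that a 16-bit float takes half the storage of a 32-bit float but carries fewer significant digits. The sketch below only illustrates that storage and rounding difference on the CPU using Python's standard `struct` module; it says nothing about how Pascal implements its FP16 arithmetic, and `round_trip` is an illustrative helper.

```python
import struct


def round_trip(value, fmt):
    """Store a Python float in the given IEEE 754 format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


# struct format codes: "e" is IEEE 754 half precision, "f" is single precision.
FORMATS = {"FP16": "<e", "FP32": "<f"}
value = 0.1

for label, fmt in FORMATS.items():
    stored = round_trip(value, fmt)
    size = struct.calcsize(fmt)
    print(f"{label}: {size} bytes, stored {stored!r}, "
          f"error {abs(stored - value):.2e}")
```

Half precision moves half as many bytes per value, which is part of why it suits bandwidth-bound neural network training where the lower accuracy is tolerable.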

Memory bandwidth constraints limit the speed at which data can be delivered to the GPU. The introduction of 3D memory will provide 3X the bandwidth and nearly 3X the frame buffer capacity of Maxwell. This will let developers build even larger neural networks and accelerate the bandwidth-intensive portions of deep learning training.
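As a quick sanity check on those multipliers, the sketch below applies them to the Titan X figures quoted in this article. It assumes the Titan X is the Maxwell baseline Nvidia had in mind, which the announcement does not state explicitly.

```python
# Titan X (Maxwell) figures quoted in this article, used as the baseline.
MAXWELL_BANDWIDTH_GBS = 336.5
MAXWELL_MEMORY_GB = 12

# Pascal claims from the keynote.
PASCAL_BANDWIDTH_FACTOR = 3.0
PASCAL_MEMORY_GB = 32  # "up to 32GB"

projected_bandwidth = MAXWELL_BANDWIDTH_GBS * PASCAL_BANDWIDTH_FACTOR
capacity_ratio = PASCAL_MEMORY_GB / MAXWELL_MEMORY_GB

print(f"Projected bandwidth: {projected_bandwidth:.1f} GB/s")
print(f"Capacity ratio:      {capacity_ratio:.2f}x")
```

On that baseline, 3X the bandwidth works out to roughly 1 TB/s, and 32GB is about 2.7 times the Titan X's 12GB, in line with the "nearly 3X" capacity claim.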

Pascal will have its memory chips stacked on top of each other, and placed adjacent to the GPU, rather than further down the processor boards. This reduces from inches to millimeters the distance that bits need to travel as they traverse from memory to GPU and back. The result is dramatically accelerated communication and improved power efficiency.

The addition of NVLink to Pascal will let data move between GPUs and CPUs five to 12 times faster than it can over today’s standard, PCI-Express. This benefits applications, such as deep learning, that have high inter-GPU communication needs.
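To get a feel for what that range means in practice, the sketch below estimates how long it would take to ship one full set of model weights between devices. Several assumptions are baked in: the baseline is a PCIe 3.0 x16 link (8 GT/s per lane with 128b/130b encoding), the payload is an AlexNet-sized model of roughly 61 million 32-bit parameters, and protocol overhead and latency are ignored. The 5X and 12X multipliers are Nvidia's claims, not measurements.

```python
# PCIe 3.0 x16: 8 GT/s per lane, 128b/130b encoding, 16 lanes, 8 bits per byte.
PCIE3_X16_GBS = 8 * (128 / 130) * 16 / 8  # about 15.75 GB/s per direction

# Assumption: an AlexNet-sized model, ~61 million FP32 parameters.
PAYLOAD_BYTES = 61_000_000 * 4


def transfer_ms(payload_bytes, bandwidth_gbs):
    """Idealized transfer time in milliseconds, ignoring latency and overhead."""
    return payload_bytes / (bandwidth_gbs * 1e9) * 1e3


for label, factor in [("PCIe 3.0 x16", 1), ("NVLink (5X)", 5), ("NVLink (12X)", 12)]:
    ms = transfer_ms(PAYLOAD_BYTES, PCIE3_X16_GBS * factor)
    print(f"{label:13s} {ms:6.2f} ms per weight exchange")
```

Because multi-GPU training exchanges gradients or weights on every iteration, shaving this per-exchange cost is what lets more GPUs cooperate efficiently.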

NVLink allows for double the number of GPUs in a system to work together in deep learning computations. In addition, CPUs and GPUs can connect in new ways to enable more flexibility and energy efficiency in server design compared to PCI-E.

Also back on Nvidia's roadmap is its Volta chip. Volta was originally scheduled to be the follow-up to the Maxwell parts but was pulled from last year's GTC roadmap, with Pascal put in its place. The roadmap Huang presented shows Pascal arriving in 2016, with Volta slated for 2018.

No details of Volta were revealed, but that GPU was to use stacked RAM and unified memory.