Google's Live Relay Project Lets People Make Phone Calls Without Speaking or Hearing

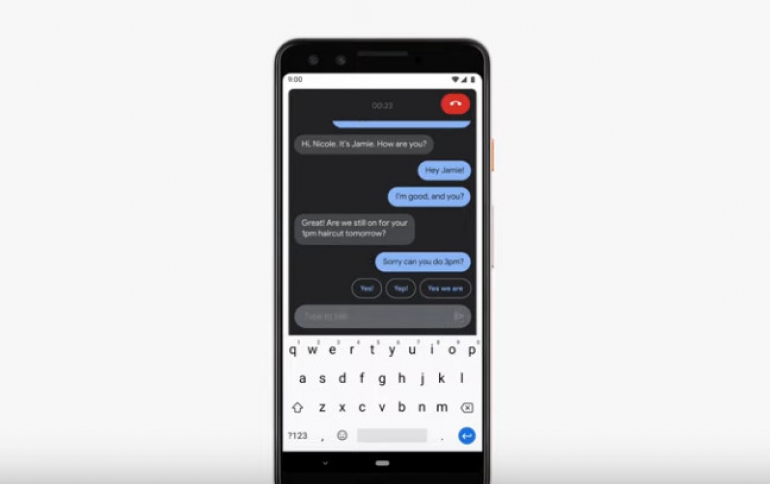

Through a research project dubbed Live Relay, Google is helping people make and receive phone calls without having to speak or hear.

Live Relay uses on-device speech recognition and text-to-speech conversion so that the phone can listen and speak on the user's behalf while they type. Smart Reply and Smart Compose offer instant responses and predictive writing suggestions, which help make typing fast enough to hold a synchronous phone call.

Live Relay runs entirely on the device, which keeps calls private. Because it interacts with the other party through a regular phone call (no data connection required), the other party can even be on a landline.

Live Relay could help anyone who is unable to speak or hear during a call, and it may be particularly helpful to deaf and hard-of-hearing users by complementing existing solutions.

With Live Relay, you would be able to take a call anywhere, anytime, with the option to type instead of talk. Google is also exploring the integration of real-time translation, so that you could potentially call anyone in the world and communicate regardless of language barriers. This is the power of designing for accessibility first.

Live Relay is still in the research phase.

Using AI to Improve Products for People With Impaired Speech

To help people living with speech impairments caused by neurologic conditions, Google's Project Euphonia team is using AI to improve computers' ability to understand diverse speech patterns.

Google has partnered with the non-profit organizations ALS Therapy Development Institute (ALS TDI) and ALS Residence Initiative (ALSRI) to record the voices of people who have ALS, a neurodegenerative condition that can leave people unable to speak and move. The company collaborated with these groups to learn about the communication needs of people with ALS, and worked toward optimizing AI-based algorithms so that mobile phones and computers can more reliably transcribe words spoken by people with these kinds of speech difficulties.

To do this, Google software turns the recorded voice samples into a spectrogram, or a visual representation of the sound. The computer then uses common transcribed spectrograms to "train" the system to better recognize this less common type of speech. Google's AI algorithms currently aim to accommodate individuals who speak English and have impairments typically associated with ALS, but the company believes that the research can be applied to larger groups of people and to different speech impairments.
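The article does not describe how Google builds its spectrograms. As a rough illustration of the general idea only, the sketch below computes a log-magnitude spectrogram with a short-time Fourier transform: the audio is cut into overlapping frames, each frame is tapered with a Hann window, and the magnitude of its discrete Fourier transform becomes one column of the "picture." The function names and defaults are hypothetical; the 400-sample frames and 160-sample hop (25 ms and 10 ms at an assumed 16 kHz sample rate) are common choices in speech processing, not values taken from Project Euphonia. A plain DFT is used so the example needs only the standard library; real systems would use an FFT.

```python
import cmath
import math


def hann_window(n: int) -> list[float]:
    # Periodic Hann window: tapers each frame's edges to reduce spectral leakage.
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def log_spectrogram(signal: list[float], frame_len: int = 400, hop: int = 160) -> list[list[float]]:
    """Hypothetical helper: one row per frame, frame_len // 2 + 1 frequency bins per row."""
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one frame")
    window = hann_window(frame_len)
    num_bins = frame_len // 2 + 1  # non-negative frequencies of a real signal
    # Precompute the DFT basis so each frame is just a set of dot products.
    basis = [
        [cmath.exp(-2j * math.pi * k * n / frame_len) for n in range(frame_len)]
        for k in range(num_bins)
    ]
    rows = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_len)]
        row = []
        for k in range(num_bins):
            coeff = sum(x * b for x, b in zip(frame, basis[k]))
            # Log compression mirrors how loudness is perceived; epsilon avoids log(0).
            row.append(math.log(abs(coeff) + 1e-10))
        rows.append(row)
    return rows
```

For a pure 440 Hz tone sampled at 8 kHz with 200-sample frames, each frequency bin is 40 Hz wide, so the energy concentrates in bin 11 (440 / 40). A recognizer is then trained on many such arrays paired with their transcripts.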

In addition to improving speech recognition, Google is also training personalized AI algorithms to detect sounds or gestures, and then take actions such as generating spoken commands to Google Home or sending text messages. This may be helpful to people who are severely disabled and cannot speak.