Adobe's AI Tool Detects Photoshopped Images

Adobe researchers, along with their UC Berkeley collaborators, have developed a method for detecting edits to images that were made using Photoshop’s Face Aware Liquify feature.

The tool, sponsored by the DARPA MediFor program, is still in its early stages.

When Adobe Photoshop was first released in 1990, it was a major step toward democratizing creativity and expression. Since then, Photoshop and Adobe’s other creative tools have made an impact on the world, but the ethical implications of graphics technology cannot be neglected. Trust in what we see is increasingly important in a world where image editing has become ubiquitous – fake content is a serious and increasingly pressing issue.

Using new technologies such as artificial intelligence (AI), Adobe is exploring ways to increase trust and authority in digital media.

Adobe researchers Richard Zhang and Oliver Wang, along with their UC Berkeley collaborators Sheng-Yu Wang, Dr. Andrew Owens, and Professor Alexei A. Efros, developed a method for detecting edits to images that were made using Photoshop’s Face Aware Liquify feature.

The research is framed with some basic questions:

- Can you create a tool that can identify manipulated faces more reliably than humans?

- Can that tool decode the specific changes made to the image?

- Can you then undo those changes to see the original?

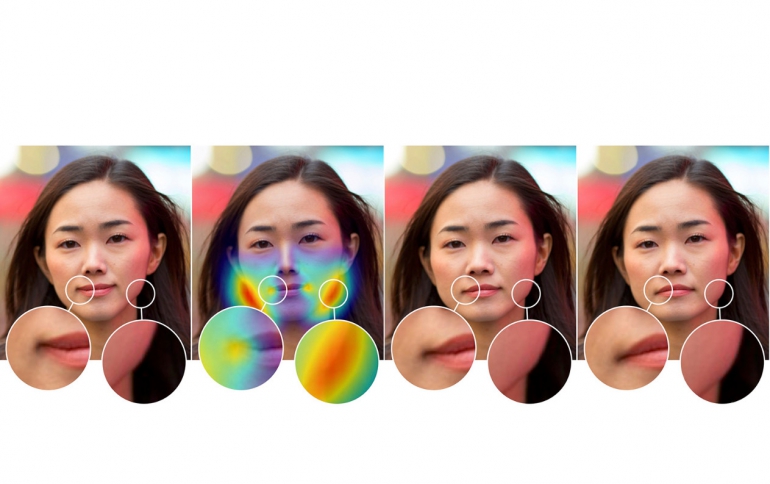

By training a Convolutional Neural Network (CNN), a form of deep learning, the research project is able to recognize altered images of faces. The researchers created an extensive training set of images by scripting Photoshop to use Face Aware Liquify on thousands of pictures scraped from the Internet. A subset of those photos was randomly chosen for training. In addition, an artist was hired to alter images that were mixed into the data set. This element of human creativity broadened the range of alterations and techniques used for the test set beyond those of the synthetically generated images.
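As a rough illustration of the data preparation described above, the sketch below draws a random subset of images and labels each one as original (0) or altered (1). It is not the researchers' pipeline: `build_training_set`, `fake_liquify`, the image IDs, and the choice to pair every original with one altered copy are all hypothetical stand-ins.

```python
import random

def build_training_set(image_ids, alter, subset_size, seed=0):
    """Pick a random subset and return shuffled (image, label) examples.

    Label 0 marks an untouched image, label 1 an altered one.
    Pairing each original with one altered copy is an assumption.
    """
    rng = random.Random(seed)
    chosen = rng.sample(image_ids, subset_size)
    examples = []
    for image_id in chosen:
        examples.append((image_id, 0))         # untouched original
        examples.append((alter(image_id), 1))  # scripted alteration
    rng.shuffle(examples)
    return examples

def fake_liquify(image_id):
    # Hypothetical placeholder for a scripted Photoshop edit.
    return image_id + "_warped"

image_ids = [f"face_{i:04d}" for i in range(1000)]
examples = build_training_set(image_ids, fake_liquify, subset_size=100)
print(len(examples))
```

A classifier such as a CNN would then be trained to predict the label from the image itself.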

“We started by showing image pairs (an original and an alteration) to people who knew that one of the faces was altered,” Oliver says. “For this approach to be useful, it should be able to perform significantly better than the human eye at identifying edited faces.”

Those human eyes correctly identified the altered face 53% of the time, only a little better than the 50% expected from chance. But in a series of experiments, the neural network tool achieved results as high as 99%.
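To make the "better than chance" comparison concrete, here is a minimal sketch of how a pairwise test of this kind could be scored. The function name, the toy data, and the tie-handling rule are assumptions for illustration, not the researchers' evaluation code.

```python
def forced_choice_accuracy(pairs, score):
    """Score a judge on (original, altered) pairs.

    `score` returns a higher value for images the judge believes
    are altered. A tie counts as half a correct answer, which is
    what a coin flip would earn on average.
    """
    total = 0.0
    for original, altered in pairs:
        s_orig, s_alt = score(original), score(altered)
        if s_alt > s_orig:
            total += 1.0
        elif s_alt == s_orig:
            total += 0.5
    return total / len(pairs)

# Toy data: each "image" is a number standing for its warp amount.
pairs = [(0.0, 0.8), (0.0, 0.3), (0.0, 0.5), (0.0, 0.1)]

informed = forced_choice_accuracy(pairs, lambda image: image)
clueless = forced_choice_accuracy(pairs, lambda image: 0.0)
print(informed, clueless)
```

A judge with no usable signal lands at 0.5, which is why a 53% human score is described as only slightly better than chance.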

The tool also identified specific areas and methods of facial warping. In the experiment, the tool reverted altered images to its calculation of their original state, with results that impressed even the researchers.
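One way to picture the "undo" step is as resampling the altered image through an estimated displacement field. The sketch below assumes such a field is already available and uses integer offsets on a tiny grid. The `unwarp` helper and the field layout are hypothetical, and the source does not describe the tool's actual mechanism at this level of detail.

```python
def unwarp(altered, flow):
    """Rebuild an image by sampling `altered` through `flow`.

    flow[y][x] = (dx, dy) means the restored pixel at (x, y) is
    read from (x + dx, y + dy) in the altered image. Coordinates
    are clamped to the image border.
    """
    height, width = len(altered), len(altered[0])
    restored = []
    for y in range(height):
        row = []
        for x in range(width):
            dx, dy = flow[y][x]
            sx = min(max(x + dx, 0), width - 1)
            sy = min(max(y + dy, 0), height - 1)
            row.append(altered[sy][sx])
        restored.append(row)
    return restored

original = [[1, 2, 3, 4]]
altered = [[1, 1, 2, 3]]   # original shifted right by one pixel
flow = [[(1, 0)] * 4]      # assumed estimate: read one pixel to the right
restored = unwarp(altered, flow)
print(restored)  # [[1, 2, 3, 3]]
```

The last pixel cannot be recovered because the shift pushed it out of the frame, a small reminder that any such reconstruction is an estimate of the original rather than an exact copy.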

“It might sound impossible because there are so many variations of facial geometry possible,” says Professor Alexei A. Efros, UC Berkeley. “But, in this case, because deep learning can look at a combination of low-level image data, such as warping artifacts, as well as higher level cues such as layout, it seems to work.”

“The idea of a magic universal ‘undo’ button to revert image edits is still far from reality,” Richard adds. “But we live in a world where it’s becoming harder to trust the digital information we consume, and I look forward to further exploring this area of research.”

“This is an important step in being able to detect certain types of image editing, and the undo capability works surprisingly well,” says head of Adobe Research, Gavin Miller. “Beyond technologies like this, the best defense will be a sophisticated public who know that content can be manipulated — often to delight them, but sometimes to mislead them.”