Amazon Announces New Cloud Services, Smaller Data Centers, and New Chips

Amazon.com Inc.’s cloud unit, Amazon Web Services (AWS), has unleashed a suite of offerings, from chips to new types of data centers, designed to push the company into more areas of computing and help it grab a bigger share of corporate technology budgets.

During AWS’s annual re:Invent conference on Tuesday, AWS Chief Executive Officer Andy Jassy framed cloud computing as a generational shift and outlined new offerings that will compete with longtime rivals Oracle, Microsoft, Google, and International Business Machines.

Jassy highlighted products aimed at one of the inherent weaknesses of the cloud: latency, the lag between a customer making a request of a cloud service and receiving a reply from a distant server farm. That delay, measured in milliseconds, can be unacceptable for tasks like manufacturing controls or video streaming.

On Tuesday, AWS formally released Outposts, a server rack that runs some AWS services on a customer’s premises, which Jassy previewed in Las Vegas a year ago. He also announced a new configuration of AWS server farms, called Local Zones, designed to cluster Outposts close to big customers, starting in the Los Angeles area. A separate product, called Wavelength and being piloted in the U.S. with Verizon Communications Inc., is designed to place AWS computing power inside 5G wireless networks.

AWS Wavelength

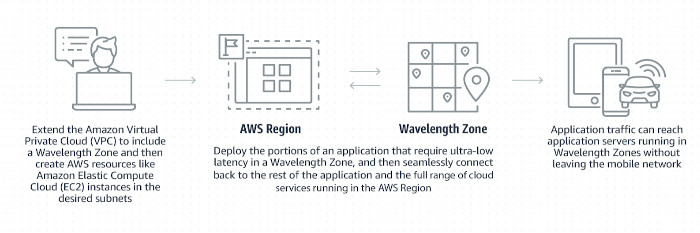

AWS Wavelength lets developers build applications that serve end users with single-digit-millisecond latency over 5G networks. Wavelength embeds AWS compute and storage services at the edge of telecommunications providers’ 5G networks, enabling use cases that require ultra-low latency, such as machine learning inference at the edge, autonomous industrial equipment, smart cars and cities, the Internet of Things (IoT), and augmented and virtual reality.

Developers can deploy the portions of an application that require ultra-low latency within the 5G network, and then connect back to the rest of the application and the full range of cloud services running in AWS.

With Wavelength, developers can deploy their applications to Wavelength Zones: AWS infrastructure deployments that embed AWS compute and storage services within network operators’ datacenters at the edge of the 5G network. Application traffic then only needs to travel from the device to a cell tower to a Wavelength Zone running in a metro aggregation site, avoiding much of the latency added by multiple hops between regional aggregation sites and across the Internet.
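A rough, back-of-envelope sketch of why a shorter path matters. It assumes light travels through optical fiber at about 200,000 km/s (roughly two-thirds of its speed in a vacuum), or about 200 km per millisecond, and it counts only propagation delay; real latency also includes queuing, per-hop processing, and radio access time. The path lengths are hypothetical, chosen only for illustration.

```python
# Back-of-envelope propagation delay, assuming ~200 km of fiber per millisecond
# (light in glass travels at roughly two-thirds of its vacuum speed).
FIBER_KM_PER_MS = 200.0


def round_trip_ms(one_way_km: float) -> float:
    """Round-trip propagation delay in milliseconds for a one-way fiber distance.

    Ignores queuing, per-hop processing, and radio access time, all of which add more.
    """
    return 2 * one_way_km / FIBER_KM_PER_MS


# Hypothetical one-way path lengths, for illustration only.
paths_km = {
    "device -> tower -> metro edge site": 50,
    "device -> tower -> distant cloud region": 1500,
}

for name, km in paths_km.items():
    print(f"{name}: {round_trip_ms(km):.1f} ms round trip (propagation only)")
```

Even before any processing, a path of about 1,500 km consumes the whole single-digit-millisecond budget, which is why placing compute at a metro site helps.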

AWS customers will be able to use the same AWS APIs, tools, and functionality they use today to deliver low-latency applications at the edge of 5G networks around the world.

AWS is partnering with Verizon to make AWS Wavelength available across the United States. Currently, AWS Wavelength is being piloted by select customers in Chicago on Verizon’s 5G Edge, Verizon’s mobile edge compute (MEC) solution. AWS is also collaborating with other telecommunications companies, including Vodafone, SK Telecom, and KDDI, to launch AWS Wavelength across Europe, South Korea, and Japan in 2020.

AWS Outposts

AWS Outposts are fully managed and configurable compute and storage racks built with AWS-designed hardware that allow AWS customers to run compute and storage on-premises, while connecting to AWS’s broad array of services in the cloud. AWS Outposts bring native AWS services, infrastructure, and operating models to virtually any datacenter, co-location space, or on-premises facility.

With AWS Outposts, AWS customers can use the same AWS APIs, control plane, tools, and hardware on-premises as in the AWS cloud to deliver a consistent hybrid experience.

Outposts racks contain AWS compute and storage hardware, the same hardware used in AWS public Region datacenters. With AWS Outposts, customers can choose from a range of compute, storage, and graphics-optimized Amazon Elastic Compute Cloud (EC2) instances, with or without local storage, and Amazon Elastic Block Store (EBS) volume options. Customers can then run a broad range of AWS services locally, including Amazon EC2, Amazon EBS, Amazon Elastic Container Service (ECS), Amazon Elastic Kubernetes Service (EKS), Amazon Relational Database Service (RDS), and Amazon Elastic MapReduce (EMR), and can connect directly to regional services such as Amazon Simple Storage Service (S3) buckets or Amazon DynamoDB tables through private connections.

In 2020, AWS plans to add more services that can run locally on Outposts, starting with Amazon S3. AWS delivers and installs the racks and handles all maintenance, including automatically updating and patching infrastructure and services through the rack’s connection to an AWS Region, so developers and IT professionals do not have to worry about procuring or maintaining Outposts hardware.

AWS Outposts come in two variants: an AWS native variant (available today), which lets customers use the exact same APIs and control plane on Outposts as in AWS public Regions; and VMware Cloud on AWS Outposts (planned for availability in 2020), which lets customers use the same VMware APIs and control plane on Outposts that they have used to run their on-premises infrastructure for years.

AWS Outposts racks fit into a variety of environments, with plug-and-play options for power and networking, and come in the industry-standard 42U form factor, 80 inches tall. AWS Outposts are currently available in the US East (N. Virginia), US West (Oregon), US East (Ohio), EU West (Ireland), Asia Pacific (Seoul), and Asia Pacific (Tokyo) regions, with more regions coming soon.

Graviton2 chip

Amazon, which has long filled its data centers with highly customized hardware, continued its push into designing the processors at the heart of the servers themselves, announcing a second-generation chip, called Graviton2, that will underpin some AWS services.

The new chip is aimed at general-purpose computing tasks.

That’s another shot across the bow of Intel, whose chips account for more than 90% of the server chip market and handle most tasks at the biggest cloud providers.

AWS also expanded its compute and networking offerings with new Arm-based instances (M6g, C6g, R6g) powered by its Graviton2 processors; machine learning inference instances (Inf1) powered by its Inferentia chips; a new Amazon EC2 feature, AWS Compute Optimizer, that uses machine learning to optimize EC2 usage for cost and performance; and networking enhancements.

Amazon says the new Arm-based versions of the Amazon EC2 M, R, and C instance families, powered by Graviton2, deliver up to 40% better price/performance than the current x86-based M5, R5, and C5 instances for a broad spectrum of workloads, including high performance computing, machine learning, application servers, video encoding, microservices, open source databases, and in-memory caches. The new instances are built on the AWS Nitro System, a collection of custom AWS hardware and software technologies that provide isolated multi-tenancy, private networking, and fast local storage, with the aim of reducing customer spend and effort on AWS.
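Price/performance is, roughly, work done per dollar, so a gain can come from higher throughput, a lower price, or both. The sketch below uses entirely hypothetical throughput and hourly prices (not AWS benchmarks or pricing) to show how an instance with only 5% more raw throughput but a 25% lower price reaches a 40% price/performance gain.

```python
def price_performance(throughput: float, price_per_hour: float) -> float:
    """Work done per dollar of instance time; higher is better."""
    return throughput / price_per_hour


def improvement(new: float, baseline: float) -> float:
    """Fractional gain of `new` over `baseline` (0.4 means 40% better)."""
    return new / baseline - 1


# Hypothetical figures for illustration only, not AWS benchmarks or prices.
x86 = price_performance(throughput=1000.0, price_per_hour=1.00)
arm = price_performance(throughput=1050.0, price_per_hour=0.75)

print(f"{improvement(arm, x86):.0%} better price/performance")
```

The "up to" in AWS's claim matters: the ratio depends on the workload, so the gain varies from one application to another.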

AWS Graviton2 processors introduce several performance optimizations over the first generation. They use 64-bit Arm Neoverse cores and custom silicon designed by AWS, built on a 7-nanometer manufacturing process. Optimized for cloud-native applications, Graviton2 processors provide 2x faster floating point performance per core for scientific and high performance computing workloads, optimized instructions for faster machine learning inference, and custom hardware acceleration for compression workloads. They also offer always-on, fully encrypted DDR4 memory and 50% faster per-core encryption performance to further enhance security. Graviton2-powered instances provide up to 64 vCPUs, 25 Gbps of enhanced networking, and 18 Gbps of EBS bandwidth.

AWS customers can also choose variants with local NVMe SSD instance storage (C6gd, M6gd, and R6gd) or bare metal options for all of the new instance types. The new instance types are supported by several operating systems (Amazon Linux 2, Ubuntu, Red Hat Enterprise Linux, SUSE Linux Enterprise Server, Fedora, Debian, and FreeBSD) and the Amazon Corretto distribution of OpenJDK; container services (Docker Desktop, Amazon ECS, Amazon EKS); agents (Amazon CloudWatch, AWS Systems Manager, Amazon Inspector); and developer tools (AWS Code Suite, Jenkins). AWS services such as Elastic Load Balancing, Amazon ElastiCache, and Amazon Elastic MapReduce have already tested Graviton2 instances, found that they deliver superior price/performance, and plan to move workloads onto them in production in 2020. M6g instances are available today in preview. C6g, C6gd, M6gd, R6g, and R6gd instances will be available in the coming months.

AWS says Amazon EC2 Inf1 instances offer high performance and the lowest cost for machine learning inference in the cloud. Inf1 instances feature AWS Inferentia, a high performance machine learning inference chip designed by AWS. Each Inferentia chip provides 128 tera operations per second (TOPS, or trillions of operations per second), and an Inf1 instance provides up to two thousand TOPS, with support for multiple frameworks (including TensorFlow, PyTorch, and Apache MXNet) and multiple data types (including INT8 and mixed-precision FP16 and bfloat16).
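The per-chip and per-instance figures above fit together if, as an assumption not stated in this article, the largest Inf1 size carries 16 Inferentia chips:

```latex
16 \text{ chips} \times 128 \,\frac{\text{TOPS}}{\text{chip}} = 2048 \text{ TOPS} \approx 2{,}000 \text{ TOPS}
```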

Amazon EC2 Inf1 instances deliver low inference latency, up to 3x higher inference throughput, and up to 40% lower cost-per-inference than the Amazon EC2 G4 instance family.
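Why doesn't 3x the throughput mean one-third the cost? Cost per inference is the hourly price divided by inferences served per hour, so the instance price matters too. In the sketch below, the prices and throughputs are hypothetical: if an Inf1 instance hypothetically cost 1.8x as much per hour as the G4 instance it is compared with while serving 3x the inferences, cost per inference would fall by 40%. The article gives no prices, and its two "up to" figures may come from different workloads.

```python
SECONDS_PER_HOUR = 3600


def cost_per_inference(price_per_hour: float, inferences_per_second: float) -> float:
    """Dollars per inference for an instance kept fully busy."""
    return price_per_hour / (inferences_per_second * SECONDS_PER_HOUR)


def cost_reduction(new: float, baseline: float) -> float:
    """Fractional saving of `new` relative to `baseline` (0.4 means 40% lower)."""
    return 1 - new / baseline


# Hypothetical numbers: 3x the throughput at 1.8x the hourly price.
baseline = cost_per_inference(price_per_hour=1.00, inferences_per_second=1000)
inf1 = cost_per_inference(price_per_hour=1.80, inferences_per_second=3000)

print(f"{cost_reduction(inf1, baseline):.0%} lower cost per inference")
```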

AWS Compute Optimizer delivers actionable AWS resource recommendations so AWS customers can identify optimal Amazon EC2 instance types for their workloads, including instances that are part of Auto Scaling groups, without requiring specialized knowledge. AWS Compute Optimizer analyzes the configuration and resource utilization of a workload to identify dozens of defining characteristics (e.g., whether a workload is CPU-intensive or exhibits a daily pattern). It then uses machine learning algorithms that AWS has built to analyze these characteristics and identify the hardware resource headroom the workload requires. Finally, AWS Compute Optimizer infers how the workload would perform on various Amazon EC2 instance types and recommends the optimal AWS compute resources for that specific workload.
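Compute Optimizer's machine learning models are not public. As a much simpler stand-in, and explicitly not AWS's method, the sketch below shows the basic idea of headroom-based rightsizing with a plain threshold rule: size a workload to its observed peak CPU demand plus a safety margin, then pick the cheapest instance type that covers it. The catalog, instance names, prices, and 20% headroom are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    price_per_hour: float


# Hypothetical catalog; names and prices are illustrative, not real EC2 offerings.
CATALOG = [
    InstanceType("small", 2, 0.10),
    InstanceType("medium", 4, 0.20),
    InstanceType("large", 8, 0.40),
    InstanceType("xlarge", 16, 0.80),
]


def recommend(current_vcpus: int, peak_cpu_util: float, headroom: float = 0.2) -> InstanceType:
    """Pick the cheapest type whose vCPUs cover observed peak demand plus headroom.

    peak_cpu_util is the peak fraction (0..1) of the current instance's vCPUs in use.
    This threshold rule is a toy stand-in, not Compute Optimizer's ML analysis.
    """
    needed = current_vcpus * peak_cpu_util * (1 + headroom)
    fits = [t for t in CATALOG if t.vcpus >= needed]
    if not fits:
        # Nothing in the catalog is big enough: fall back to the largest type.
        return max(CATALOG, key=lambda t: t.vcpus)
    return min(fits, key=lambda t: t.price_per_hour)


# A 16-vCPU instance peaking at 30% CPU needs about 5.8 vCPUs with headroom.
print(recommend(current_vcpus=16, peak_cpu_util=0.30).name)
```

A real optimizer weighs many more signals (memory, network, daily patterns), which is where AWS says its machine learning comes in.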

Amazon also showed a raft of new programming tools intended to make it easier to build machine learning models, including Amazon SageMaker Autopilot, which sets up 100 different versions of a model and lets customers weigh crucial tradeoffs, such as accuracy versus speed.
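The accuracy-versus-speed decision described above can be pictured as choosing from a Pareto front: the candidates that no other candidate beats on both measures at once. This is a generic sketch, not the tool's actual API or its internal selection logic; the candidate models and their scores are hypothetical.

```python
from typing import List, NamedTuple, Optional


class Candidate(NamedTuple):
    name: str
    accuracy: float    # higher is better
    latency_ms: float  # lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is at least as good as `b` on both measures and strictly better on one."""
    no_worse = a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
    better = a.accuracy > b.accuracy or a.latency_ms < b.latency_ms
    return no_worse and better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Candidates not dominated by any other candidate, in input order."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


def best_within_budget(candidates: List[Candidate], max_latency_ms: float) -> Optional[Candidate]:
    """Most accurate candidate that meets a latency budget, or None if none does."""
    ok = [c for c in candidates if c.latency_ms <= max_latency_ms]
    return max(ok, key=lambda c: c.accuracy) if ok else None


# Hypothetical candidate models, for illustration only.
CANDIDATES = [
    Candidate("A", 0.91, 40.0),
    Candidate("B", 0.89, 12.0),
    Candidate("C", 0.85, 15.0),  # slower and less accurate than B
    Candidate("D", 0.80, 5.0),
]

print([c.name for c in pareto_front(CANDIDATES)])
print(best_within_budget(CANDIDATES, max_latency_ms=20.0))
```

Here model C never makes sense to pick, while A, B, and D each represent a defensible tradeoff; a latency budget is one simple way for a customer to choose among them.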