Hackers Use Little Stickers To Trick Tesla Autopilot Into The Wrong Lane

Researchers at Keen Security Lab have found a way to trick a Tesla Model S into steering into the wrong lane by placing a few simple stickers on the road.

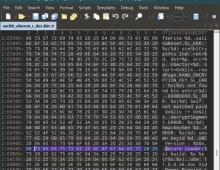

Tesla Autopilot recognizes lanes and assists with steering by identifying road markings. The researchers showed that when interference stickers are placed on the road, the Autopilot system picks up this information and makes an abnormal judgment, which causes the vehicle to enter the reverse (oncoming) lane.

In effect, the researchers created a "fake lane." They discovered that Tesla's Autopilot would detect a lane where there were just three tiny, inconspicuous squares strategically placed on the road. Their theory was that small stickers left at an intersection would trick the Tesla into treating the patches as the continuation of the right lane. On a test track, the theory proved correct: Autopilot steered the car into the real left lane.

"Our experiments proved that this architecture has security risks and reverse lane recognition is one of the necessary functions for autonomous driving in non-closed roads," Keen Lab wrote in a paper. "In the scene we build, if the vehicle knows that the fake lane is pointing to the reverse lane, it should ignore this fake lane and then it could avoid a traffic accident."
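The check the researchers describe, ignoring a detected lane that points into the reverse lane, can be illustrated with a minimal Python sketch. This is not Tesla's or Keen Lab's code. The `LaneCandidate` type, the function names, the coordinate convention, and the idea that the car knows the lateral position of the road divider (for example from map data) are all assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical convention: (distance ahead in metres, lateral offset in metres),
# with positive lateral offsets to the left of the car (right-hand traffic).
Point = Tuple[float, float]


@dataclass
class LaneCandidate:
    """A lane the vision system believes it has detected (illustrative only)."""
    name: str
    path: List[Point]


def crosses_into_oncoming(candidate: LaneCandidate, divider_offset_m: float) -> bool:
    """Return True if any point of the candidate lies left of the road divider."""
    return any(lateral > divider_offset_m for _, lateral in candidate.path)


def keep_safe_lanes(candidates: List[LaneCandidate], divider_offset_m: float) -> List[LaneCandidate]:
    """Drop every candidate lane that would lead the car into the reverse lane."""
    return [c for c in candidates if not crosses_into_oncoming(c, divider_offset_m)]


# Assumed example data: the painted lane runs straight ahead,
# while the sticker-induced lane drifts left across the divider.
real_lane = LaneCandidate("painted lane", [(0.0, 0.0), (10.0, 0.0), (20.0, 0.0)])
fake_lane = LaneCandidate("sticker lane", [(0.0, 0.0), (10.0, 1.5), (20.0, 3.5)])

safe = keep_safe_lanes([real_lane, fake_lane], divider_offset_m=1.8)
print([c.name for c in safe])  # ['painted lane']
```

In this sketch the sticker lane is rejected because it ends 3.5 m to the left, beyond the assumed divider at 1.8 m. A real system would need a trustworthy source for the divider position, which is exactly the "reverse lane recognition" capability the paper argues for.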

“In this demonstration the researchers adjusted the physical environment (e.g. placing tape on the road or altering lane lines) around the vehicle to make the car behave differently when Autopilot is in use. This is not a real-world concern given that a driver can easily override Autopilot at any time by using the steering wheel or brakes and should be prepared to do so at all times,” Tesla said.

The researchers also showed that they could control the steering system through the Autopilot system with a wireless gamepad, even when the driver had not activated Autopilot.

“The primary vulnerability addressed in this report was fixed by Tesla through a robust security update in 2017, followed by another comprehensive security update in 2018, both of which we released before this group reported this research to us. In the many years that we have had cars on the road, we have never seen a single customer ever affected by any of the research in this report,” Tesla said.

Tesla Autopilot can detect wet weather through image recognition and turn on the wipers if necessary. According to Keen Lab's research, the system can be made to return an “improper” result and turn on the wipers simply by displaying an image on a TV placed directly in front of the car's windshield.

“This is not a real-world situation that drivers would face, nor is it a safety or security issue. Additionally, as we state in our Owners’ Manual, the ‘Auto setting [for our windshield wipers] is currently in BETA.’ A customer can also elect to use the manual windshield wiper setting at any time,” Tesla said.

It's not the first time Keen Lab has exposed potential problems in the safety and security of Tesla's digital systems. Back in 2016, the hackers discovered a way to remotely take control of a Tesla's brakes.