IBM Launches New IC922 POWER9-Based Server For ML Inference

IBM is expanding its Power Systems portfolio into AI inference with the unveiling of its new IC922 Power Server.

IBM introduced a concept last year that it calls Data-Train-Inference (DTI).

The DTI model is not a linear workflow; rather, the three components constantly interact with each other in a continuous loop. Because the process is ongoing and continually refined, the insights it extracts remain valuable.

Data – the build-a-solid-foundation piece. Without a solid data foundation, you will head down the wrong path before you have even started.

Training – the piece where the magic of artificial intelligence occurs; where data becomes AI models.

Inference – the piece that is really the sum of all the parts. Without proper inference, all prior efforts are for naught.

Until now, the DTI model mapped to the IBM Power Systems hardware portfolio through the IBM Power System AC922, which packs up to six NVIDIA V100 Tensor Core GPUs and is engineered to be the most powerful training platform. But what about inference and data?

Meet the IBM Power System IC922 – a new inference server.

The new IBM Power System IC922 is a purpose-built inference server designed to put your AI models to work and help you unlock business insights.

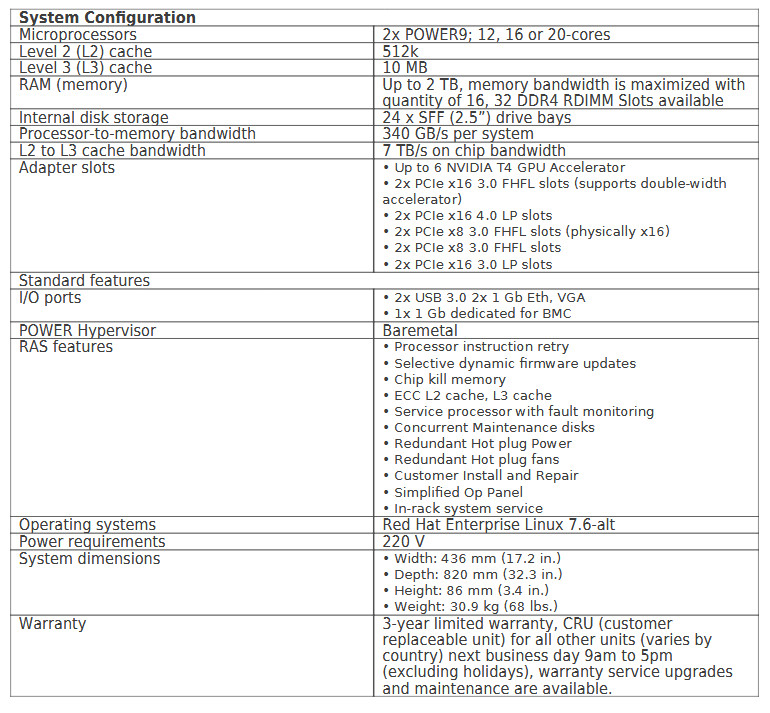

Built on POWER9, the Power IC922 provides advanced interconnects (PCIe Gen4 and OpenCAPI) to support faster data throughput and lower latency. For acceleration, it supports up to six NVIDIA T4 Tensor Core GPUs, and IBM intends to add support for up to eight accelerators as well as additional accelerator options.

The Power IC922 is also rich in memory and storage. It supports up to 2TB of system memory across 32 DDR4 RDIMM slots, and IBM says the server's 8 DDR4 ports at 2666MHz translate to 170 GB/s of peak memory bandwidth per CPU. It also accommodates up to 24 SAS/SATA drives in a 2U system, with NVMe support intended in the future.
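The 170 GB/s figure follows from standard DDR4 arithmetic. The short sketch below reproduces it, assuming 8 memory channels per CPU, each with a 64-bit (8-byte) data bus running at 2666 million transfers per second (the "2666MHz" rating of DDR4-2666 is a transfer rate). It also checks the 2TB maximum, assuming 64 GB RDIMMs in all 32 slots, which is the module size the stated maximum implies. These are illustrative assumptions, not IBM's published calculation.

```python
# Back-of-envelope check of the IC922 memory figures quoted above.
# Assumptions (not from IBM): 8 channels per CPU, 8 bytes per transfer,
# DDR4-2666 = 2666 million transfers/s, and 64 GB RDIMMs in every slot.

CHANNELS_PER_CPU = 8
TRANSFERS_PER_SEC = 2666e6   # DDR4-2666 data rate in transfers per second
BYTES_PER_TRANSFER = 8       # 64-bit data bus per channel (ECC bits excluded)

RDIMM_SLOTS = 32
DIMM_SIZE_GB = 64            # assumed module size implied by the 2TB maximum


def peak_bandwidth_gbs(channels, transfers_per_sec, bytes_per_transfer):
    """Return theoretical peak bandwidth in decimal gigabytes per second."""
    return channels * transfers_per_sec * bytes_per_transfer / 1e9


def total_memory_tb(slots, dimm_size_gb):
    """Return total installed memory in binary terabytes (1 TB = 1024 GB)."""
    return slots * dimm_size_gb / 1024


bw = peak_bandwidth_gbs(CHANNELS_PER_CPU, TRANSFERS_PER_SEC, BYTES_PER_TRANSFER)
mem = total_memory_tb(RDIMM_SLOTS, DIMM_SIZE_GB)

print(f"Peak memory bandwidth per CPU: {bw:.1f} GB/s")
print(f"Maximum system memory: {mem:.0f} TB")
```

Running it prints roughly 170.6 GB/s and 2 TB, in line with the figures IBM quotes. Real sustained bandwidth will be lower than this theoretical peak.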

To round it all out, the server ships with IBM's WMLA-inference, a complete AI software stack for inference.

Finally, IBM claims that the IC922 fits 33% more GPUs per 2U server than its Intel-based competitors, which should translate to lower operational costs.

To showcase how the IC922 fits into the AI puzzle, the Department of Defense High Performance Computing Modernization Program (HPCMP) recently demonstrated how the IC922 and AC922 could be combined into a modular computing platform, creating an IBM POWER9-based supercomputer in a shipping container. This modular computing capability, initially installed at the U.S. Army Combat Capabilities Development Command’s Army Research Laboratory DoD Supercomputing Resource Center, will enable the DoD to redefine the term “edge” to include deployment of an AI supercomputing capability anywhere in the world, including the battlefield.

The new system will be available on February 7.