IBM to Unveil Phase-Change Memory Technologies for AI Applications at VLSI

IBM researchers will present three papers that provide novel solutions to AI computing based on emerging nano-devices called Phase-Change Memory (PCM).

Artificial Intelligence (AI) applications such as those used by devices to understand our speech and provide meaningful and precise answers require low-power operation, while modern processors are typically power hungry. In addition, the need to classify images, sounds and speech in a fast and efficient way requires speed, further challenging existing CMOS hardware.

To overcome this, researchers from the IBM AI Hardware Center are investigating novel computing paradigms, which could potentially surpass all existent hardware approaches for AI.

At the 2019 Symposia on Very Large Scale Integration (VLSI) Technology and Circuits, which will be held June 9-14 in Kyoto, Japan, IBM researchers from three of our labs (Thomas J. Watson Research Center, IBM Research-Almaden and IBM Research-Zurich) will present three papers that provide solutions to AI computing based on emerging nano-devices called Phase-Change Memory (PCM).

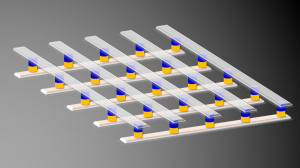

In-memory computing is an emerging non-von Neumann computing paradigm where the physical attributes of memory devices such as PCM are exploited to perform computational tasks in place without the need to shuttle around data between memory and processing units. For example, when PCM devices when organized in dense arrays, can easily implement the vector-matrix multiplication operation in place by leveraging physics laws. By tuning the PCM conductance, analog stable states are achieved, providing the ability to memorize neural network weights into the phase configuration of these devices. By applying a voltage on a single PCM, a current equal to the product of voltage and conductance flows. Applying voltages on all the rows of the array causes the parallel summation of all the single products. In other words, Ohm’s Law and Kirchhoff’s Law enable fully parallel propagation through fully connected networks, accelerating existent approaches based on CPUs and GPUs.

This technique is the core of the acceleration of Deep Neural Networks (DNNs). In servers, large DNNs are trained by tuning the weights. This procedure, called DNN training, requires the ability to analyze millions of images and correspondingly update the weights, and can take painfully long for actual DNNs. Instead, in embedded systems such as IoT sensors, cars or cameras, high classification speed is desired, while the weights are first calculated on software and then left unchanged in the chip. Inferencing DNNs at high speed shows major challenges in terms of low latency and low energy.

The paper, “Confined PCM-based Analog Synaptic Array for Deep Learning,” by Wanki Kim et al. reveals, for the first time, a PCM device that enables nearly-linear conductance tuning with 500 or even 1000 analog states. Such a high number of states with linear weight change is essential to provide high-quality device tuning during DNN training with simulated accuracies that can go beyond 97 percent under certain working conditions. In addition to this promising result, PCM conductance drift and noise are strongly reduced with improved endurance thanks to the carefully designed structure. This device could pave the way for accelerated training of DNNs with an extremely low area consumption, since such devices are extremely scalable and can be stacked in large arrays.

While DNN training requires devices which are extremely linear, DNN inference faces different challenges. Since PCMs in chips inferencing data should be carefully tuned only once or a few times, highly desirable properties are, in this case, the ability to precisely tune the device and the stability of the analog conductance states for increasingly long times.

In the paper “Inference of Long-Short Term Memory networks at software-equivalent accuracy using 2.5M analog Phase Change Memory devices,” by Hsinyu Tsai et al., the inferencing challenges are targeted using a relatively large number of devices to get close estimates of what will eventually happen in a real chip. PCMs are analog-tuned using a novel and parallel writing scheme that can be efficiently implemented in crossbar arrays. This method is then applied to perform inference of Long-Short Term Memory (LSTM), which is a recurrent network that enables speech recognition. Results show a substantial equivalence between software and hardware inference, with tolerance to PCM conductance instabilities and drift.

Finally, highlights of recent research performed in the field of in-memory computing for DNN inference and mixed-precision training are shown in the paper “Computational memory-based inference and training of deep neural networks.” by Abu Sebastian et al. The paper first presents a description of the key enabling features of PCM devices for DNN training and inference. Subsequently, the inference results on state-of-art Convolutional Neural Networks such as ResNet-20 are presented. It is shown that while direct mapping of trained weights in single precision reveals poor results, mapping of weights trained with custom techniques provides close to ideal accuracy, even after one day. The paper also presents a mixed-precision approach to DNN training where high-precision digital weight update calculation, together with analog forward and backpropagation of signals through PCM crossbar arrays reveals promising results for accelerated training of DNNs. After training, inference on this network is still being performed with marginal reduction in the test accuracy.

"We are enthusiastic about the promise of PCM-based in-memory computing as a novel computing paradigm with potential to further the advancement of AI," said Stefano Ambrogio Research Staff Member, IBM Research.