Intel Unveils High-density Reference Design, Facebook Collaboration on the Cooper Lake Processors

At the Open Compute Project (OCP) Global Summit, Intel today announced new open hardware advancements enabling greater computing capabilities and cost efficiencies for data center hardware developers.

The new advancements include a high-density, cloud-optimized reference design; collaboration with Facebook on the upcoming Intel Cooper Lake processor family; and optimization on Intel’s Rack Scale Design.

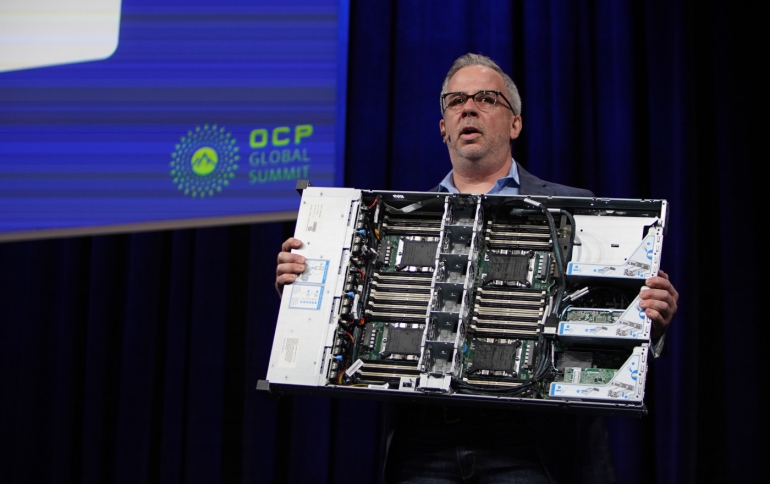

Jason Waxman, Intel corporate vice president in the Data Center Group and general manager of the Datacenter Solutions Group, delivered a keynote at the Open Compute Project Global Summit in San Jose, California.

“OCP is a vital organization that brings together a fast-growing community of innovators who are delivering greater choice, customization and flexibility to IT hardware. As a founding member of this open source community, Intel is committed to delivering innovative products that help deploy infrastructure underlying the services that support the digital economy,” Waxman said.

The rise of artificial intelligence (AI), the internet of things (IoT), the cloud and network transformation is creating massive amounts of largely untapped data. Since the inception of OCP, the workloads and deployment models driving data center infrastructure have changed dramatically. In the move to a more data-centric world, homogeneous infrastructure in data centers is being replaced by workload-optimized systems. Both OCP and Intel are designing hardware that is equipped to handle this scale efficiently.

Intel showcased a new cloud-optimized, 4-socket reference design for 2nd generation Intel Xeon Scalable processors with Intel Optane DC persistent memory. The design increases core count up to 112 in a single 2U platform, increases memory bandwidth and provides potential double-digit total cost of ownership savings.

Designed for cloud IaaS, bare metal and function-as-a-service solutions, this design is jointly contributed to OCP by Intel and Inspur as an Open Accepted Certified submittal. Dell, HP, Hyve Solutions, Lenovo, Quanta, Supermicro, Wiwynn and ZT Systems are expected to deliver solutions based on this reference design in 2019.

Intel also announced a collaboration with Facebook on the Cooper Lake design. Intel will offer a scalable (up to 8 sockets) platform for machine learning with the upcoming Cooper Lake 14nm Intel Xeon processor family. The new processor will feature bfloat16 computations, a 16-bit floating point representation that doubles the theoretical single-precision FLOPS for deep learning training and inference. Because bfloat16 keeps the same 8-bit exponent as the standard 32-bit floating point format, it offers the same dynamic range at half the width, accelerating AI deep learning training for workloads such as image classification, speech recognition, recommendation engines and machine translation.
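To make the dynamic-range point concrete, here is a minimal Python sketch of how a 32-bit float maps onto bfloat16. It assumes the simplest possible conversion, which keeps the top 16 bits (sign, 8 exponent bits, 7 mantissa bits) and truncates the rest; real hardware typically rounds instead, and nothing here describes how Cooper Lake itself performs the conversion. The function names are hypothetical and chosen only for illustration.

```python
import struct


def float32_to_bfloat16_bits(x: float) -> int:
    """Return a 16-bit bfloat16 pattern for x by truncating its float32 encoding.

    Assumption: plain truncation of the low 16 mantissa bits, not rounding.
    """
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    return bits32 >> 16  # keep sign (1 bit), exponent (8 bits), mantissa (7 bits)


def bfloat16_bits_to_float(bits16: int) -> float:
    """Expand a 16-bit bfloat16 pattern back to a Python float."""
    (value,) = struct.unpack(">f", struct.pack(">I", (bits16 & 0xFFFF) << 16))
    return value


def roundtrip(x: float) -> float:
    """Convert x to bfloat16 and back, to show what survives the conversion."""
    return bfloat16_bits_to_float(float32_to_bfloat16_bits(x))


if __name__ == "__main__":
    # Very large and very small magnitudes survive, because the exponent is unchanged.
    print(roundtrip(3.0e38), roundtrip(1.0e-30))
    # Fine detail near 1.0 is lost, because only 7 mantissa bits remain.
    print(roundtrip(1.001))
```

The trade-off this illustrates is range versus precision: values near the float32 limits stay representable, while small differences between nearby values are dropped.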

Additionally, for AI workloads, Intel will introduce two Nervana Neural Network processors this year, one specialized for inference and one for training. The company is working with the community on open standards in this space to support the next generation of high-power accelerator mezzanine cards and open compilers, for example Glow with Facebook.

Intel will also contribute its RSD 2.3 Rack Management Module code to the OCP-based OpenRMC (rack management controller) community. After more than a year of development, Intel is aligning efforts to drive simple, common standards for BIOS, baseboard management controller (BMC) and rack management software through its engagement in the OCP system, OpenBMC and OpenRMC firmware projects.

At the OCP summit, Intel will demonstrate how pools of NVMe over Fabrics storage can be assigned to different compute nodes, making open software-defined infrastructure a reality.

Intel will also release a complete family of OCPv3.0-compliant network interface controllers (NICs) ranging from 1GbE to 100GbE (1, 10, 25, 50, 100) starting mid-year. To support the growth of AI and machine learning, Intel will be shipping 400G pluggable optics and is working on co-packaging the optical interfaces with the switch ASIC for the future.

In the memory and storage space, as mentioned above, Intel will have OCP-ready platforms to support Intel Optane DC persistent memory, which can increase performance by up to 8X on I/O-intensive queries on Apache Spark compared to DRAM at 2.6TB data scale. Intel's dense (up to 1PB in 1U) ruler SSD design is openly available as an EDSFF specification.

For cold storage on Blu-ray optical media, Intel is contributing to OCP the design-based open source software that it used in AI development for autonomous driving.

Beyond resource and rack management, Intel is focused on helping drive open orchestration platforms with OpenStack and Kubernetes contributions. These include projects such as StarlingX for high-performance edge cloud applications, Cyborg acceleration resource management, custom resource definition (CRD) manager, standards for application programming interfaces (APIs), CPU manager for Kubernetes (CMK), Multus container network interface and more.

At the application layer, solutions require an optimized stack. For AI, Intel has full open stacks to accelerate development cycles. These include toolkits such as OpenVINO and the NAUTA deep learning development toolkit, compilers such as nGraph, and others developed with partners.

Intel is also working with Microsoft, Facebook, Google and other partners on platform root of trust and its extension to peripherals such as persistent memory, accelerators and others. The end goal is to propose a common register transfer language (RTL) and fully open source the code.