A Look at Nvidia's Turing Architecture and The New RTX 2080 Series

Nvidia unveiled its new Turing architecture and RTX 2080 graphics cards last month, promising to offer "six times more performance" than the previous generation, and the new ability to support real-time ray tracing.

First of all, the new Turing architecture brings support for real-time ray tracing in games. Ray tracing is a rendering technique used by movie studios to generate light reflections and cinematic effects. But bringing the technology to video games has been difficult.

Keep in mind that Nvidia's new RTX cards - the GeForce RTX 2080 and RTX 2080 Ti - do not replace rasterization with ray tracing. Instead, the company wants to continue using rasterization and add certain ray-traced effects where those techniques would produce better visual fidelity, an approach it refers to as hybrid rendering. Nvidia notes that the traditional rasterization pipeline and the new ray-tracing pipeline can operate "simultaneously and cooperatively" in its Turing architecture.

The software groundwork for this technique was laid earlier this year when Microsoft revealed the DirectX Raytracing API, or DXR, for DirectX 12. DXR provides access to some of the basic building blocks for ray-tracing alongside existing graphics-programming techniques, including a method of representing the 3D scene that can be traversed by the graphics card, a way to dispatch ray-tracing work to the graphics card, a series of shaders for handling the interactions of rays with the 3D scene, and a new pipeline state object for tracking what's going on across raytracing workloads.
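To make those building blocks a little more concrete, here is a toy CPU ray caster in Python. It is not DXR and calls no DirectX function: the names `dispatch_rays`, `closest_hit_shader` and `miss_shader` are hypothetical stand-ins chosen to echo the roles described above, a plain list of spheres stands in for the scene representation the GPU would traverse, and the orthographic camera is an assumption made to keep the sketch short.

```python
import math


def intersect_sphere(origin, direction, center, radius):
    """Nearest positive hit distance along a unit-length ray, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c  # quadratic with a == 1 for a unit direction
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / 2.0, (-b + root) / 2.0):
        if t > 1e-6:
            return t
    return None


def closest_hit_shader(sphere, distance):
    """Runs for the nearest object a ray hits (toy: return its colour)."""
    return sphere["color"]


def miss_shader():
    """Runs when a ray hits nothing."""
    return "sky"


def trace_ray(scene, origin, direction):
    """Walk the scene (a flat list here) and call the hit or miss shader."""
    best = None
    for sphere in scene:
        t = intersect_sphere(origin, direction, sphere["center"], sphere["radius"])
        if t is not None and (best is None or t < best[0]):
            best = (t, sphere)
    if best is None:
        return miss_shader()
    return closest_hit_shader(best[1], best[0])


def dispatch_rays(scene, width, height):
    """Launch one primary ray per pixel, looking down the -z axis."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            px = (x + 0.5) / width * 2.0 - 1.0   # pixel centre in [-1, 1]
            py = 1.0 - (y + 0.5) / height * 2.0
            row.append(trace_ray(scene, (px, py, 5.0), (0.0, 0.0, -1.0)))
        image.append(row)
    return image


if __name__ == "__main__":
    scene = [{"center": (0.0, 0.0, 0.0), "radius": 0.5, "color": "red"}]
    for row in dispatch_rays(scene, 4, 4):
        print(row)
```

A real implementation replaces the linear loop in `trace_ray` with a hardware-traversed acceleration structure, which is the part Turing's RT cores are meant to speed up.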

To make DXR code practical for use in real-time rendering, Nvidia is implementing an entire platform it calls RTX that will let DXR code run on its hardware. In turn, GeForce RTX cards are the first hardware designed to serve as the foundation for real-time ray-traced effects with DXR and RTX.

So Nvidia is starting to introduce ray tracing with the RTX 2080, and some of the early demonstrations, such as the Battlefield V demo, have been impressive. However, ray tracing will be limited to certain games.

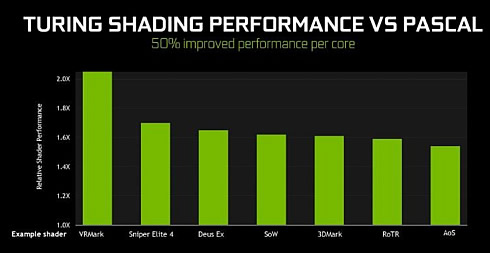

Turing brings two new elements to Nvidia's graphics cards: new Tensor cores and RT cores. The RT cores are dedicated to ray tracing, while the Tensor cores will be used for Nvidia's AI work in games. Turing also includes some big changes to the way GPU caches work. Nvidia has moved to a unified memory structure with larger unified L1 caches and double the amount of L2 cache. Nvidia claims that the result is a 50 percent performance improvement per core. Nvidia has also moved to GDDR6 with its RTX 2080, which means there's as much as 50 percent higher effective bandwidth for games.

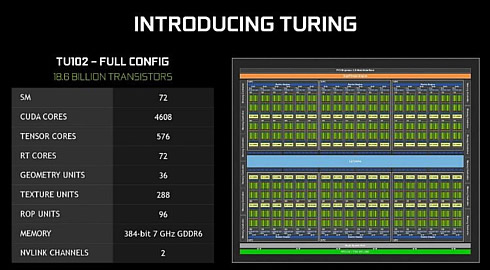

Here is what Nvidia says about the TU102 GPU, found inside the GeForce RTX 2080 Ti:

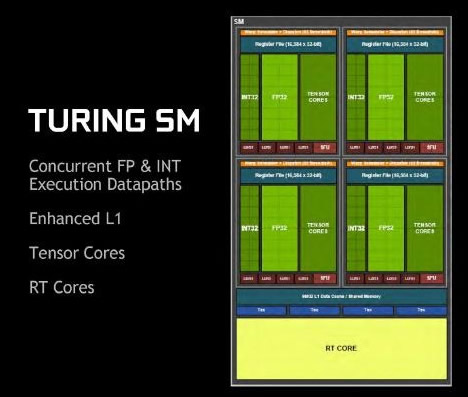

The TU102 GPU includes six Graphics Processing Clusters (GPCs), 36 Texture Processing Clusters (TPCs), and 72 Streaming Multiprocessors (SMs). Each GPC includes a dedicated raster engine and six TPCs, with each TPC including two SMs. Each SM contains 64 CUDA Cores, eight Tensor Cores, a 256 KB register file, four texture units, and 96 KB of L1/shared memory which can be configured for various capacities depending on the compute or graphics workloads. Tied to each memory controller are eight ROP units and 512 KB of L2 cache.

You'll also find a single RT processing core within each SM, so there are 72 in a full TU102 and 68 in the GeForce RTX 2080 Ti, which ships with 68 of those SMs enabled. Because the RT and Tensor cores are baked right into each streaming multiprocessor, the lower you go in the GeForce RTX 20-series lineup, the fewer you'll find of each. The RTX 2080 has 46 RT cores and 368 Tensor cores, for example, and the RTX 2070 will have 36 RT cores and 288 Tensor cores.
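Since every count here follows from the number of enabled SMs, the arithmetic is easy to check. The short Python sketch below uses the per-SM figures quoted above; the `Card` class and the function names are purely illustrative, and the enabled-SM counts (68, 46 and 36) are the ones Nvidia lists for the three cards.

```python
from dataclasses import dataclass

# Per-SM unit counts quoted in the article for Turing.
CUDA_CORES_PER_SM = 64
TENSOR_CORES_PER_SM = 8
RT_CORES_PER_SM = 1
TEXTURE_UNITS_PER_SM = 4


def full_die_sms(gpcs: int, tpcs_per_gpc: int, sms_per_tpc: int = 2) -> int:
    """SM count of a fully enabled die: GPCs x TPCs per GPC x SMs per TPC."""
    return gpcs * tpcs_per_gpc * sms_per_tpc


@dataclass(frozen=True)
class Card:
    name: str
    enabled_sms: int  # SMs left switched on in the shipping product

    def units(self) -> dict:
        """Scale each per-SM resource by the number of enabled SMs."""
        return {
            "cuda_cores": self.enabled_sms * CUDA_CORES_PER_SM,
            "tensor_cores": self.enabled_sms * TENSOR_CORES_PER_SM,
            "rt_cores": self.enabled_sms * RT_CORES_PER_SM,
            "texture_units": self.enabled_sms * TEXTURE_UNITS_PER_SM,
        }


if __name__ == "__main__":
    print("Full TU102 SMs:", full_die_sms(gpcs=6, tpcs_per_gpc=6))
    for card in (Card("RTX 2080 Ti", 68), Card("RTX 2080", 46), Card("RTX 2070", 36)):
        print(card.name, card.units())
```

For the RTX 2080 Ti this gives 4,352 CUDA cores, 544 Tensor cores, 68 RT cores and 272 texture units, matching Nvidia's published figures.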

Each SM has access to a combined 96KB of L1 and shared memory. The change has to do with how different workloads make the best use of memory. Nvidia expects graphics workloads to use 64KB for shader memory and 32KB for textures, with the opposite true for compute workloads.

Nvidia says that going down this unified route enables diverse workloads to enjoy fewer cache misses and lower latency. For example, if a program doesn't use shared-memory cache, then it's pointless devoting 96KB to it, and having a configurable cache makes more sense. Secondly, it makes programmers' lives easier, as applications have to be tuned specifically for shared memory whilst L1 cache is accessed directly.

This Turing cache arrangement is clearly designed to minimise the bottlenecks that have been identified within Pascal.

Adding to this configurability is the effective doubling of caching speeds for both L1 (through being part of the shared-mem block) and L2, while the latter is also doubled in size. This combination ought to result in less off-chip traffic, which is always a good thing.

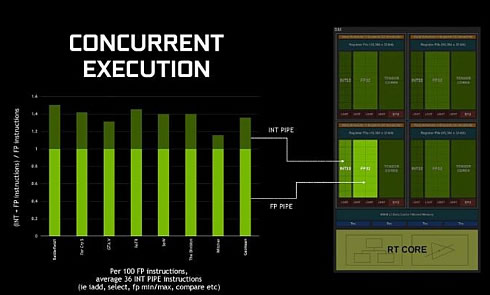

The overall benefit of having concurrent integer instruction execution, a smarter cache mechanism, and a number of minor tweaks that are outside the remit of this piece to cover, is approximately 50 per cent more shading performance per core.

The die measures in at a whopping 754mm², compared to the 471mm² Pascal GPU inside the GTX 1080 Ti.

Nvidia also introduced some new shading technologies that developers can take advantage of to improve performance and visuals.

Mesh shading helps take some of the burden off your CPU during very visually complex scenes, with tens or hundreds of thousands of objects. It consists of two new shader stages. Task Shaders perform object culling to determine which elements of a scene need to be rendered. Once that's decided, Mesh Shaders determine the level of detail at which the visible objects should be rendered. Ones that are farther away need a much lower level of detail, while closer objects need to look as sharp as possible.
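The two-stage idea can be sketched on the CPU in a few lines of Python. This is a conceptual simulation of the flow as described above, not shader code: the `SceneObject` fields, the draw-distance cutoff and the level-of-detail thresholds are all assumptions picked for illustration.

```python
from dataclasses import dataclass


@dataclass
class SceneObject:
    name: str
    distance: float  # distance from the camera, arbitrary units
    in_view: bool    # outcome of a visibility test done elsewhere


def task_stage(objects, max_distance):
    """First stage: cull objects that are out of view or too far away."""
    return [o for o in objects if o.in_view and o.distance <= max_distance]


def mesh_stage(visible, lod_thresholds=(10.0, 50.0)):
    """Second stage: pick a level of detail per surviving object.

    LOD 0 is full detail; each threshold the object lies beyond
    moves it one step coarser.
    """
    return {
        o.name: sum(1 for t in lod_thresholds if o.distance > t)
        for o in visible
    }


if __name__ == "__main__":
    scene = [
        SceneObject("rock", 5.0, True),
        SceneObject("tree", 30.0, True),
        SceneObject("mountain", 200.0, True),
        SceneObject("crate_behind_camera", 5.0, False),
        SceneObject("distant_city", 1000.0, True),
    ]
    print(mesh_stage(task_stage(scene, max_distance=500.0)))
```

The point of doing this on the GPU is that neither the culling nor the detail selection has to round-trip through the CPU for each object.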

Variable rate shading is sort of like a supercharged version of multi-resolution shading. Human eyes only see the focal points of what's in their vision at full detail; objects at the periphery or in motion aren't as sharp. Variable rate shading takes advantage of that to shade primary objects at full resolution, but secondary objects at a lower rate, which can improve performance.

One potential use case for this is Motion Adaptive Shading, where non-critical parts of a moving scene are rendered with less detail.

Content Adaptive Shading applies the same principles, but it dynamically identifies portions of the screen that have low detail or large swathes of similar colors, and shades those at lower detail, and more so when you're in motion.
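As a rough illustration of how the motion-adaptive and content-adaptive ideas could combine, the Python sketch below picks a shading rate for one screen tile. The normalised `motion` and `contrast` inputs, both thresholds and the 16x16-pixel tile are assumptions for the example; a game would feed in its own motion vectors and image statistics and use whatever rates its graphics API exposes.

```python
# Shading rates as (width, height) of the pixel block covered by one shade.
RATES = [(1, 1), (2, 2), (4, 4)]


def pick_shading_rate(motion, contrast, motion_threshold=0.5, contrast_threshold=0.1):
    """Choose a coarser rate for tiles that move fast or show little detail.

    Each condition that holds moves the tile one step coarser, so a tile
    that is both fast-moving and low-contrast gets the coarsest rate.
    """
    steps = 0
    if motion > motion_threshold:
        steps += 1  # motion-adaptive: fast movement hides detail
    if contrast < contrast_threshold:
        steps += 1  # content-adaptive: flat regions need fewer samples
    return RATES[steps]


def shades_per_tile(tile_size, rate):
    """Number of shading invocations needed for a square tile at a given rate."""
    width, height = rate
    return (tile_size // width) * (tile_size // height)


if __name__ == "__main__":
    for motion, contrast in [(0.0, 0.5), (0.9, 0.5), (0.0, 0.01), (0.9, 0.01)]:
        rate = pick_shading_rate(motion, contrast)
        print(motion, contrast, rate, shades_per_tile(16, rate))
```

Dropping a 16x16 tile from 1x1 to 2x2 cuts the shading work for that tile from 256 invocations to 64, which is where the performance gain comes from.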

Variable rate shading can also help in virtual reality workloads by tailoring the level of detail to where you're looking. Another new VR tech, Multi-View Rendering, expands upon the Simultaneous Multi-Projection technology introduced with the GTX 10-series to allow "developers to efficiently draw a scene from multiple viewpoints or even draw multiple instances of a character in varying poses, all in a single pass."

Finally, Nvidia also introduced Texture Space Shading, which shades an area around an object rather than a single scene to let developers reuse shading in multiple perspectives and frames.

All these changes make it more difficult to determine exactly how significant any performance gains will be for regular PC gamers. It's no longer just about how much faster a CPU or GPU is. It's the big architectural changes that will make a difference for both image quality and performance for years to come.

Nvidia is offloading some of the work that would normally be handled by the company's CUDA cores to these separate Tensor and RT cores. Nvidia is also introducing new rendering and texture shading techniques that will allow game developers to avoid having to continually shade every part of a scene when the player moves around.

Nvidia Deep Learning Super-Sampling (DLSS) could be the most important part of the company's performance improvements. DLSS is a method that uses Nvidia's supercomputers and a game-scanning neural network to work out the most efficient way to perform AI-powered antialiasing. The supercomputers will work this out using early access copies of the game, with these instructions then used by Nvidia's GPUs. The end result should be improved image quality and performance whether you're playing online or offline.

During 4K gaming, Nvidia's GeForce GTX 1080 Ti struggled to hit 60 fps. However, Nvidia promises some impressive performance gains at 4K with DLSS enabled on the RTX 2080.

The list of games that support ray tracing right now includes just 21 titles. Nvidia is also working with Microsoft to bring many of its RTX components to DirectX, but its main rival AMD will also need to help push this new era of ray tracing.

But don't expect any ray-traced games to be available when the GeForce RTX 2080 and 2080 Ti launch on September 20; they should arrive about one month later.

Microsoft has also teased the perfunctorily named Windows 10 October 2018 Update, the second big Windows update of the year, though it hasn't committed to an exact release date.

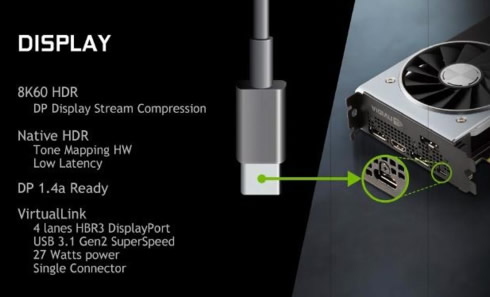

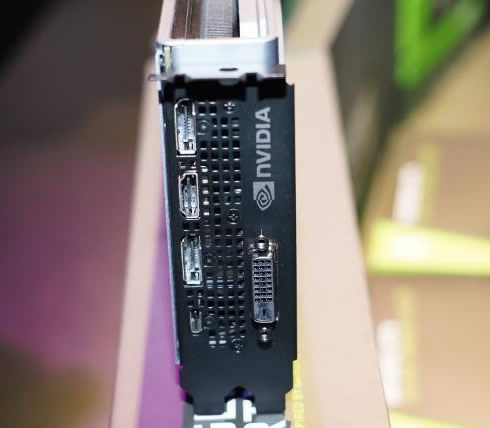

We should also have a look at the changes to Turing's display and video handling. The GeForce RTX 2070, 2080, and 2080 Ti all support VirtualLink, a new USB-C alternate-mode standard that contains all the video, audio, and data connections necessary to power a virtual reality headset. Turing also supports low-latency native HDR output with tone mapping, and 8K at up to 60Hz on dual displays. The DisplayPorts on GeForce RTX graphics cards are also DisplayPort 1.4a ready.

Turing also includes improvements in video encoding and decoding, which should be of particular interest to streamers and other video enthusiasts. Though Pascal already features space-saving H.265, Turing adds support for 8K30 HDR encode while the decoder has also been updated to support HEVC YUV444 10/12b HDR at 30 fps, H.264 8K, and VP9 10/12b HDR.

Nvidia has also changed the way in which multiple boards communicate with one another for multi-GPU SLI. Instead of relying on the old-school bridges for inter-GPU transfers, Turing uses either one (TU104) or two (TU102) dedicated NVLinks - a technology first seen in the Nvidia P100 chip. Each second-generation NVLink provides 25GB/s of bandwidth in each direction between two GPUs, so 50GB/s bidirectionally, and you can double that for dual NVLinks. Note that Nvidia will only support two-way SLI with this new GPU-to-GPU technology, and a compatible RTX NVLink Bridge will cost a whopping $79.
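The link arithmetic is simple enough to write down. The Python snippet below takes the per-link figure as 25 GB/s in each direction, the unit Nvidia's specification table uses for NVLink bandwidth; the function name is just illustrative.

```python
GB_PER_SEC_PER_DIRECTION = 25  # one second-generation NVLink, one direction


def nvlink_bandwidth(links):
    """Aggregate NVLink bandwidth in GB/s for a given number of links."""
    one_way = links * GB_PER_SEC_PER_DIRECTION
    return {"per_direction": one_way, "bidirectional": 2 * one_way}


if __name__ == "__main__":
    print("TU104 (1 link): ", nvlink_bandwidth(1))
    print("TU102 (2 links):", nvlink_bandwidth(2))
```

That works out to 50 GB/s bidirectional for a single-link TU104 card and 100 GB/s for a dual-link TU102 card.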

Nvidia is also teasing old-school graphics enthusiasts who want to push their hardware to the limit. An auto-overclocking tool dubbed "Nvidia Scanner" will hit the streets with the GeForce RTX 20-series GPUs.

But don't expect to have a downloadable version of the Nvidia Scanner. It's actually an API that developers can implement, similar to how current GeForce overclocking software relies on Nvidia's NVAPI.

All of the major overclocking programs will implement Scanner. Users will run the software bundled with their card, and the final result will be an overclocking profile tailored to that RTX card's capabilities. This kind of automatic overclocking does not extend to the card's memory, however.

| GPU Features | GeForce RTX 2080 Ti | GeForce RTX 2080 | GeForce RTX 2070 |
|---|---|---|---|
| GPU | TU102 | TU104 | TU106 |
| Architecture | Turing | Turing | Turing |
| GPCs | 6 | 6 | 3 |
| TPCs | 34 | 23 | 18 |
| SMs | 68 | 46 | 36 |
| CUDA Cores / SM | 64 | 64 | 64 |
| CUDA Cores / GPU | 4,352 | 2,944 | 2,304 |
| Tensor Cores / SM | 8 | 8 | 8 |
| Tensor Cores / GPU | 544 | 368 | 288 |
| RT Cores | 68 | 46 | 36 |
| GPU Base Clock MHz (Reference / Founders Edition) | 1,350 / 1,350 | 1,515 / 1,515 | 1,410 / 1,410 |
| GPU Boost Clock MHz (Reference / Founders Edition) | 1,545 / 1,635 | 1,710 / 1,800 | 1,620 / 1,710 |
| RTX-OPS (Tera-OPS) (Reference / Founders Edition) | 76 / 78 | 57 / 60 | 42 / 45 |
| Rays Cast (Giga Rays / sec) (Reference / Founders Edition) | 10 / 10 | 8 / 8 | 6 / 6 |
| Peak FP32 TFLOPS (Reference / Founders Edition) | 13.4 / 14.2 | 10 / 10.6 | 7.5 / 7.9 |
| Peak INT32 TIPS (Reference / Founders Edition) | 13.4 / 14.2 | 10 / 10.6 | 7.5 / 7.9 |
| Peak FP16 TFLOPS (Reference / Founders Edition) | 26.9 / 28.5 | 20.1 / 21.2 | 14.9 / 15.8 |
| Peak FP16 Tensor TFLOPS with FP16 Accumulate (Reference / Founders Edition) | 107.6 / 113.8 | 80.5 / 84.8 | 59.7 / 63 |
| Peak FP16 Tensor TFLOPS with FP32 Accumulate (Reference / Founders Edition) | 53.8 / 56.9 | 40.3 / 42.4 | 29.9 / 31.5 |
| Peak INT8 Tensor TOPS (Reference / Founders Edition) | 215.2 / 227.7 | 161.1 / 169.6 | 119.4 / 126 |
| Peak INT4 Tensor TOPS (Reference / Founders Edition) | 430.3 / 455.4 | 322.2 / 339.1 | 238.9 / 252.1 |
| Frame Buffer Memory Size and Type | GDDR6 11,264 MB | GDDR6 8,192 MB | GDDR6 8,192 MB |
| Memory Interface | 352-bit | 256-bit | 256-bit |
| Memory Clock (Data Rate) | 14 Gbps | 14 Gbps | 14 Gbps |
| Memory Bandwidth (GB / sec) | 616 | 448 | 448 |
| ROPs | 88 | 64 | 64 |
| Texture Units | 272 | 184 | 144 |
| Texel Fill-rate (Gigatexels / sec) (Reference / Founders Edition) | 420.2 / 444.7 | 314.6 / 331.2 | 233.3 / 246.2 |
| L2 Cache Size | 5,632 KB | 4,096 KB | 4,096 KB |
| Register File Size / SM | 256 KB | 256 KB | 256 KB |
| Register File Size / GPU | 17,408 KB | 11,776 KB | 9,216 KB |
| NVLink | 2x8 | 1x8 | NA |
| NVLink bandwidth (bi-directional) | 100 GB / sec | 50 GB / sec | NA |
| TDP (Reference / Founders Edition) | 250 / 260 W | 215 / 225 W | 175 / 185 W |
| Transistor Count | 18.6 Billion | 13.6 Billion | 10.8 Billion |
| Die Size | 754 mm² | 545 mm² | 445 mm² |
| Manufacturing Process | 12nm FFN | 12nm FFN | 12nm FFN |

The GeForce RTX 2080 Ti uses 68 of the maximum 72 SMs, meaning 4,352 shaders. Because the Tensor cores, RT cores, geometry units, texture units and ROPs all scale together in fixed ratios, the RTX 2080 Ti drops them to 544, 68, 34, 272, and 88, respectively. There's also the associated reduction in total cache, register files, and, crucially, memory-bus width, which drops from 384 bits to 352. At the same speed, RTX 2080 Ti is about 95 per cent of a full-on TU102.
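Several of Nvidia's headline throughput numbers can be reproduced from the unit counts and clocks with a few lines of Python. The formulas below are the standard back-of-the-envelope ones (two floating-point operations per CUDA core per clock for a fused multiply-add, one texel per texture unit per clock, bus width in bytes times the per-pin data rate); the function names are illustrative, and the inputs are Nvidia's published unit counts, boost clocks and memory specifications for these cards.

```python
def peak_fp32_tflops(cuda_cores, boost_mhz):
    """Peak FP32 rate, counting a fused multiply-add as two operations."""
    return cuda_cores * 2 * boost_mhz / 1e6


def texel_fill_rate_gtexels(texture_units, boost_mhz):
    """Peak texel rate, assuming one texel per texture unit per clock."""
    return texture_units * boost_mhz / 1e3


def memory_bandwidth_gb_per_s(bus_width_bits, data_rate_gbps):
    """Peak memory bandwidth: bytes per transfer times per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


if __name__ == "__main__":
    # GeForce RTX 2080 Ti, reference and Founders Edition boost clocks.
    for label, boost in (("reference", 1545), ("Founders Edition", 1635)):
        print(label,
              round(peak_fp32_tflops(4352, boost), 1), "TFLOPS,",
              round(texel_fill_rate_gtexels(272, boost), 1), "Gtexels/s")
    print("352-bit at 14 Gbps:", memory_bandwidth_gb_per_s(352, 14), "GB/s")
    print("256-bit at 14 Gbps:", memory_bandwidth_gb_per_s(256, 14), "GB/s")
    print("Share of full TU102:", round(68 / 72 * 100, 1), "%")
```

The results line up with Nvidia's figures for the RTX 2080 Ti (13.4 and 14.2 TFLOPS, 616 GB/s). For the reference RTX 2080 the FP32 formula gives roughly 10.07 TFLOPS, which Nvidia lists as 10.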

The RTX 2080, meanwhile, uses the TU104 die that we have also spoken about. In its complete form, shown above, it uses 13.6bn transistors, is built on the same 12nm process, and has 48 SMs that are identical to TU102. Of course, being a smaller die means there's less cache, only a 256-bit memory interface, and up to 8GB of GDDR6 operating at that same 14Gbps.

The RTX 2080 isn't the full implementation, either, as it carries 46 SMs instead of 48. The Tensor and RT core counts drop commensurately because they live inside the SMs, but Nvidia keeps the full 256-bit memory bus, full 64 ROPs and the same 4MB of L2 cache as the full TU104, depicted above.

Nvidia hasn't reduced the memory speed as it remains at 14Gbps and harnesses the same GDDR6 memory.

Nvidia has two sets of specifications for both new cards. There's the base spec that a number of add-in card partners will adhere to, then there are the Founders Edition cards that, for the first time, offer a higher peak boost clock - an extra 90MHz for both the RTX 2080 Ti and the RTX 2080.

Next week, two cards, the $700 RTX 2080 and $1,000 RTX 2080 Ti, will be vying for your cash, followed in October by the RTX 2070, which will be priced at $500.