NVIDIA Launches Volta GPU Platform, Fueling AI and High Performance Computing

NVIDIA today launched the Volta GPU computing architecture to drive artificial intelligence and high performance computing, along with the first Volta-based processor, the NVIDIA Tesla V100 data center GPU.

"Artificial intelligence is driving the greatest technology advances in human history," said Jensen Huang, founder and chief executive officer of NVIDIA, who unveiled Volta at his GTC keynote. "It will automate intelligence and spur a wave of social progress unmatched since the industrial revolution."

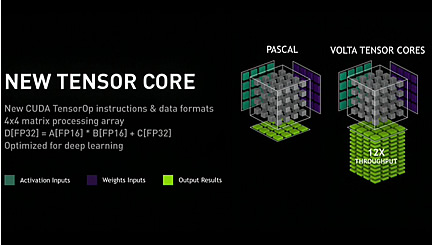

Volta, NVIDIA's seventh-generation GPU architecture, is built with 21 billion transistors and delivers the equivalent performance of 100 CPUs for deep learning. It is made on TSMC's 12nm FinFET process and packs 5,120 CUDA cores. The result is 120 teraflops of performance, delivered by a new type of processing unit called a Tensor Core. The V100 is equipped with 640 Tensor Cores.

The V100 has 16GB of HBM2 memory made by Samsung, with which NVIDIA has achieved 900 GB/sec of memory bandwidth. It also has a new NVLink interconnect that is 10X faster than the fastest PCI Express interconnect.

The new Tensor Core is a 4x4 matrix array that is fully optimized for deep learning. Volta arrives one year after Pascal but delivers 12x its tensor operation throughput and 6x its capability for inferencing. It provides a 5x improvement over Pascal, the current-generation NVIDIA GPU architecture, in peak teraflops, and 15x over the Maxwell architecture, launched two years ago.
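To make the "4x4 matrix array" concrete, here is a minimal NumPy sketch of the kind of operation such a unit performs: a fused multiply-add on 4x4 matrices, D = A x B + C. The function name `tensor_core_mma` is hypothetical, and the precision scheme (FP16 inputs, FP32 accumulation) is an assumption based on NVIDIA's public descriptions of Volta, not something stated in this article. It models the arithmetic only, not the hardware.

```python
import numpy as np

# Multiply-adds in one 4x4 by 4x4 product: 4 * 4 * 4 = 64.
FMAS_PER_OP = 4 * 4 * 4


def tensor_core_mma(a, b, c):
    """Illustrative 4x4 matrix multiply-accumulate: D = A @ B + C.

    Hypothetical helper: inputs A and B are rounded to FP16 and the
    result is accumulated in FP32 (assumed precision scheme).
    """
    a16 = np.asarray(a, dtype=np.float16)
    b16 = np.asarray(b, dtype=np.float16)
    c32 = np.asarray(c, dtype=np.float32)
    if a16.shape != (4, 4) or b16.shape != (4, 4) or c32.shape != (4, 4):
        raise ValueError("all operands must be 4x4 matrices")
    # Widen the FP16 inputs before multiplying so sums are kept in FP32.
    return a16.astype(np.float32) @ b16.astype(np.float32) + c32


a = np.eye(4)                     # identity, so A @ B == B
b = np.arange(16).reshape(4, 4)   # 0..15
c = np.ones((4, 4))
d = tensor_core_mma(a, b, c)      # equals b + 1, stored as float32
```

Larger matrix products, such as the layers of a neural network, can be tiled into many such 4x4 blocks, which is why a dense array of these units suits deep learning workloads.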

NVIDIA says that the R&D behind Volta cost about $3 billion.

Developers, data scientists and researchers increasingly rely on neural networks to power their next advances in fighting cancer, making transportation safer with self-driving vehicles, providing new intelligent customer experiences and more. Data centers need to deliver greater processing power as these networks become more complex. And they need to efficiently scale to support the rapid adoption of highly accurate AI-based services, such as natural language virtual assistants, and personalized search and recommendation systems.

NVIDIA hopes that Volta will become the new standard for high performance computing, being a platform for HPC systems to drive both computational science and data science for discovering insights. By pairing CUDA cores and the new Volta Tensor Core within a unified architecture, a single server with Tesla V100 GPUs can replace hundreds of commodity CPUs for traditional HPC.

NVIDIA also announced the NVIDIA DGX-1 with eight Tesla V100 GPUs. It is labeled on the slide as the "essential instrument of AI research" and has been designed to replace 400 servers. It offers 960 tensor TFLOPS and will ship in Q3 for $149,000. NVIDIA says that if you get one now powered by Pascal, you'll get a free upgrade to Volta.

There's also a smaller version of the DGX-1, the DGX Station. It is liquid cooled and quiet, uses four Tesla V100s and is priced at $69,000. It can be ordered now for delivery in Q3.
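The system-level throughput figures follow from simple arithmetic on the 120 teraflops per V100 quoted above. The short sketch below reproduces the 960 tensor TFLOPS stated for the DGX-1 and derives a comparable figure for the four-GPU DGX Station. It assumes peak throughput scales linearly with GPU count, which is an idealization, and the DGX Station number is a derived value, not one given in the article. The helper name `aggregate_tensor_tflops` is hypothetical.

```python
# Per-GPU peak tensor throughput quoted in the article (teraflops).
V100_TENSOR_TFLOPS = 120


def aggregate_tensor_tflops(gpu_count, per_gpu=V100_TENSOR_TFLOPS):
    """Peak tensor TFLOPS, assuming ideal linear scaling across GPUs."""
    return gpu_count * per_gpu


dgx1_tflops = aggregate_tensor_tflops(8)         # 960, matches the article
dgx_station_tflops = aggregate_tensor_tflops(4)  # 480, derived (assumption)

# List price per peak tensor TFLOPS, using the prices quoted above.
dgx1_usd_per_tflops = 149_000 / dgx1_tflops
dgx_station_usd_per_tflops = 69_000 / dgx_station_tflops

print(f"DGX-1: {dgx1_tflops} TFLOPS, ${dgx1_usd_per_tflops:.2f} per TFLOPS")
print(f"DGX Station: {dgx_station_tflops} TFLOPS, "
      f"${dgx_station_usd_per_tflops:.2f} per TFLOPS")
```

These are peak numbers only; sustained performance on real workloads depends on the model, the software stack and the interconnect.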

There are also more flavors of the Tesla V100. One comes with HGX-1, which is built specifically for GPU cloud computing. It is intended for the public cloud, whether for deep learning, graphics or CUDA computation.

Another flavor of the V100, for hyperscale scale-out, is small, about the size of a large CD case, and provides a 15-25X inference speedup against Intel Skylake, according to NVIDIA. It draws 150 watts.

NVIDIA GPU Cloud Platform

NVIDIA also today announced the NVIDIA GPU Cloud (NGC), a cloud-based platform that will give developers access -- via their PC, NVIDIA DGX system or the cloud -- to a software suite for harnessing the transformative powers of AI.

Harnessing deep learning presents two challenges for developers and data scientists. One is the need to gather into a single stack the requisite software components -- including deep learning frameworks, libraries, operating system and drivers. Another is getting access to the latest GPU computing resources to train a neural network.

NVIDIA solved the first challenge earlier this year by combining the key software elements within the NVIDIA DGX-1 AI supercomputer into a containerized package. As part of the NGC, this package, called the NGC Software Stack, will be more widely available and kept updated and optimized for maximum performance.

To address the hardware challenge, NGC will give developers the flexibility to run the NGC Software Stack on a PC (equipped with a TITAN X or GeForce GTX 1080 Ti), on a DGX system or from the cloud.

NGC will offer the following benefits to developers:

- Purpose Built: Designed for deep learning on GPUs.

- Optimized and Integrated: The NGC Software Stack will provide a wide range of software, including: Caffe, Caffe2, CNTK, MXNet, TensorFlow, Theano and Torch frameworks, as well as the NVIDIA DIGITS GPU training system, the NVIDIA Deep Learning SDK (for example, cuDNN and NCCL), NVIDIA-docker, GPU drivers and NVIDIA CUDA for designing deep neural networks.

- With one NVIDIA account, NGC users will have a simple application that guides people through deep learning workflow projects across all system types whether PC, DGX system or NGC.

- It's built to run anywhere. Users can start with a single GPU on a PC and add more compute resources on demand with a DGX system or through the cloud.

- They can import data, set up the job configuration, select a framework and hit run. The output could then be loaded into TensorRT for inferencing.

NGC is expected to enter public beta by the third quarter. NVIDIA did not provide pricing details.

AI Coming to Ray Tracing to Speed Graphics Workloads

NVIDIA CEO Jensen Huang showed at the GPU Technology Conference how NVIDIA is advancing the iterative design process to predict final renderings by applying artificial intelligence to ray tracing - a technique that uses complex math to realistically simulate how light interacts with surfaces in a specific space.

The ray tracing process generates highly realistic imagery but is computationally intensive, and can leave a certain amount of noise in an image. Removing this noise while preserving sharp edges and texture detail is known in the industry as denoising. Using NVIDIA Iray, Huang showed how NVIDIA is the first to make high-quality denoising operate in real time by combining deep learning prediction algorithms with Pascal architecture-based NVIDIA Quadro GPUs.

The approach could be used by graphics-intensive industries like entertainment, product design, manufacturing, architecture, engineering and many others.

NVIDIA is already integrating deep learning techniques into its own rendering products, starting with Iray.

Existing algorithms for high-quality denoising consume seconds to minutes per frame, which makes them impractical for interactive applications. By predicting final images from only partly finished results, NVIDIA says that Iray AI produces accurate, photorealistic models without having to wait for the final image to be rendered.

Designers can iterate on and complete final images 4x faster, for a far quicker understanding of a final scene or model.

To achieve this, NVIDIA researchers and engineers turned to a class of neural networks called autoencoders. Autoencoders are used for increasing image resolution, compressing video and many other image processing algorithms.

Using the NVIDIA DGX-1 AI supercomputer, the team trained a neural network to translate a noisy image into a clean reference image. In less than 24 hours, the neural network was trained using 15,000 image pairs with varying amounts of noise from 3,000 different scenes. Once trained, the network takes a fraction of a second to clean up noise in almost any image - even those not represented in the original training set.
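The training setup described above, pairs of noisy inputs and clean targets fed to an autoencoder, can be illustrated at toy scale. The sketch below is not NVIDIA's Iray network: it is a minimal single-hidden-layer autoencoder written in NumPy, trained with plain gradient descent on synthetic 16-sample sine signals that stand in for rendered images. All names, sizes and hyperparameters are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)


def make_pairs(n, dim=16, noise=0.3):
    """Synthetic (noisy, clean) pairs; sine waves stand in for images."""
    t = np.linspace(0.0, 1.0, dim)
    freq = rng.uniform(1.0, 3.0, size=(n, 1))
    phase = rng.uniform(0.0, 2.0 * np.pi, size=(n, 1))
    clean = np.sin(2.0 * np.pi * freq * t + phase)
    noisy = clean + noise * rng.standard_normal(clean.shape)
    return noisy, clean


def forward(params, x):
    """Encode to a small hidden layer (tanh), then decode linearly."""
    w1, b1, w2, b2 = params
    h = np.tanh(x @ w1 + b1)
    return h, h @ w2 + b2


def mse(a, b):
    return float(np.mean((a - b) ** 2))


def train(noisy, clean, hidden=8, lr=0.01, epochs=2000):
    """Fit the network to map noisy inputs to clean targets."""
    n, dim = noisy.shape
    w1 = rng.standard_normal((dim, hidden)) * 0.1
    b1 = np.zeros(hidden)
    w2 = rng.standard_normal((hidden, dim)) * 0.1
    b2 = np.zeros(dim)
    history = []
    for _ in range(epochs):
        h, out = forward((w1, b1, w2, b2), noisy)
        history.append(mse(out, clean))
        # Backpropagate the loss sum((out - clean)**2) / (2 * n).
        grad_out = (out - clean) / n
        grad_w2 = h.T @ grad_out
        grad_b2 = grad_out.sum(axis=0)
        grad_pre = (grad_out @ w2.T) * (1.0 - h ** 2)
        grad_w1 = noisy.T @ grad_pre
        grad_b1 = grad_pre.sum(axis=0)
        w1 -= lr * grad_w1
        b1 -= lr * grad_b1
        w2 -= lr * grad_w2
        b2 -= lr * grad_b2
    return (w1, b1, w2, b2), history


train_noisy, train_clean = make_pairs(512)
params, history = train(train_noisy, train_clean)

# Apply the trained network to signals it has not seen during training.
test_noisy, test_clean = make_pairs(128)
_, denoised = forward(params, test_noisy)
print("training loss, first vs last epoch:", history[0], history[-1])
print("held-out error after denoising:", mse(denoised, test_clean))
```

The key design point mirrors the article: the network never sees a description of the noise, only examples of noisy inputs alongside their clean references, and inference is a single forward pass. A production denoiser would use convolutional layers and real rendered image pairs rather than this toy setup.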

Iray deep learning functionality will be included with the Iray SDK NVIDIA supplies to software companies, and exposed in Iray plugin products that the company will produce later this year.

NVIDIA Project Holodeck Advances Photorealistic, Collaborative VR

NVIDIA also announced Project Holodeck, a photorealistic, collaborative virtual reality environment that incorporates the feeling of real-world presence through sight, sound and haptics.

The Holodeck environment allows creators to import high-fidelity, full-resolution models into VR to collaborate and share with colleagues or friends - and make design decisions easier and faster.

Holodeck is built on an enhanced version of Epic Games' Unreal Engine 4 and includes NVIDIA GameWorks, VRWorks and DesignWorks.

Jensen Huang demonstrated Holodeck's potential. The demo took the GTC audience inside the design review of a $1.9 million Koenigsegg Regera supercar. They watched engineers in the Holodeck environment explore the car at scale and in full visual fidelity, and consult each other on design changes in real time.

Thanks to Holodeck's high fidelity, designers can explore embedded equipment such as the instrument panel, air vents and engine components. And multiple lighting configurations can be simulated for scenarios such as day and night time driving, road conditions and weather to understand how light reflects off of windows or glass cockpits.

Holodeck makes it easy to import and beautifully render enormous models without geometric simplification. In the case of the Koenigsegg car, the model was a jaw-dropping 50 million polygons.