Verizon Labs Develops 5G Edge Technology to Drive VR, XR, and AR Experiences to Mobile Devices

Verizon’s 5G Lab, which comprises both network and XR technologists, is combining 5G network and XR expertise to produce new capabilities for the edge.

The Verizon team recently built and tested an independent GPU-based orchestration system and developed enterprise mobility capabilities designed to revolutionize mobility for virtual reality (VR), mixed reality (XR), augmented reality (AR), and cinematic reality (CR). Together, these capabilities could pave the way for a new class of mobile cloud services, provide a platform for developing ultra-low-latency cloud gaming, and enable the development of scalable GPU cloud-based services.

5G technology and Verizon’s Intelligent Edge Network are designed to provide real-time cloud services at the edge of the network, nearest to the customer. Many of the applications that stand to benefit involve heavy imaging and graphics workloads and run significantly better on a GPU. Artificial intelligence and machine learning (AI/ML), augmented, virtual and mixed reality (AR/VR/XR), AAA gaming, and real-time enterprise applications all depend heavily on GPUs for compute. The limited availability of efficient GPU resource management is a barrier to deploying such technologies at scale.

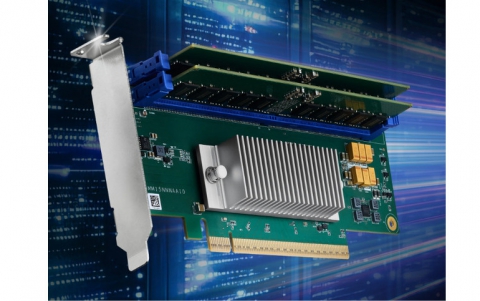

To meet this need, the Verizon team developed a prototype that uses GPU slicing and virtualization management to support any GPU-based service and to increase the number of user loads that can be served concurrently.

In proof-of-concept trials on a live network in Houston, TX, Verizon engineers successfully tested the newly developed GPU orchestration technology in combination with edge services. In one test of computer vision as a service, the new orchestration supported eight times the number of concurrent users; in a test with a graphics gaming service, it supported over 80 times the number of concurrent users.
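To illustrate the general idea behind GPU slicing, the following minimal Python sketch places user sessions onto fractional shares of a GPU. It is not Verizon's orchestration system: the `Gpu` class, the `place_sessions` function, the first-fit strategy, and the quarter-GPU requests are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """One physical GPU whose capacity is shared out in fractional slices."""
    name: str
    free: float = 1.0  # fraction of the GPU still unallocated
    sessions: list = field(default_factory=list)


def place_sessions(requests, gpu_count):
    """First-fit placement of (session_id, fraction) requests onto GPUs.

    Returns the GPU list and the ids of sessions that could not be placed.
    """
    gpus = [Gpu(f"gpu{i}") for i in range(gpu_count)]
    rejected = []
    for session_id, fraction in requests:
        for gpu in gpus:
            # Small tolerance guards against floating-point rounding.
            if gpu.free + 1e-9 >= fraction:
                gpu.free -= fraction
                gpu.sessions.append(session_id)
                break
        else:
            # No GPU had a large enough free slice.
            rejected.append(session_id)
    return gpus, rejected


# Hypothetical load: ten sessions that each need a quarter of a GPU.
requests = [(f"user{i}", 0.25) for i in range(10)]
gpus, rejected = place_sessions(requests, gpu_count=2)
for gpu in gpus:
    print(gpu.name, gpu.sessions)
print("rejected:", rejected)
```

With one session per GPU, two GPUs would serve two users. In this sketch each GPU holds four quarter-sized sessions, so the same hardware serves eight. Real gains depend on how much GPU each workload actually needs.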

“Creating a scalable, low cost, edge-based GPU compute [capability] is the gateway to the production of inexpensive, highly powerful mobile devices,” said Nicki Palmer, Chief Product Development Officer. “This new innovation will lead to more cost effective and user friendly mobile mixed reality devices and open up a new world of possibilities for developers, consumers and enterprises.”

To assist developers in creating these new applications and solutions, Verizon’s team developed a suite of edge capabilities. These capabilities, similar in nature to APIs (application programming interfaces), describe processes that developers can use to build an application without the need for additional code. Building on this technology, the team has created eight services for developers to use when creating applications and solutions for use on 5G Edge technology:

- 2D Computer vision – Users provide 2D images that a device can recognize and track. (Examples: A consumer may view a poster through glasses and 2D computer vision could be used to make it come alive. A consumer may view a movie poster and a trailer would automatically play. A consumer may view a product box and see an overlay of nutritional info, coupons, etc.)

- XR lighting – Currently when 3D objects are inserted into the real world they appear as 2D and can seem out of place. XR lighting can send back environment lighting and video info to reproduce a scene reflecting accurate lighting, shadows, roughness, reflections, and metallics on the 3D object so that it blends perfectly into the environment around it.

- Split rendering – Split rendering enables the delivery of PC/console-level graphics to any mobile device. It splits the graphics processing workload of games, 3D models, or other complex graphics and pushes the most difficult calculations onto the server, allowing the lighter calculations to remain on the device.

- Real time ray tracing – Traditional 3D scenes or objects are designed using complex custom algorithms to extrapolate or calculate how light will fall and how ending colors will look and feel. With real-time ray tracing capability, each pixel can receive accurate light coloring, advancing the realism of 3D.

- Spatial audio – In the ongoing evolution of sound (from mono to stereo to 7.1 surround sound), spatial audio is the next step. This type of audio processing is extremely processor heavy. In designing for spatialized audio, objects in a 3D scene must react with the sound so that users have a true sense of the space and relative location of an object in an augmented reality environment. As audio is emitted, it bounces off digital objects and, based on directionality and the position of the listener’s head, reproduces what the listener would hear in the real world.

- 3D Computer vision – 3D computer visioning leads to 3D object recognition by training the edge network to understand what a 3D object is from all angles. (Examples: A football helmet could provide highlights, stats, etc. for players seen from any angle. At a grocery store, 3D computer visioning would allow a consumer using AR to recognize and respond to objects with unusual 3D shapes such as fruits and vegetables.)

- Real time transcoding – Transcoding converts a file from one format to another. Footage is usually much larger in its raw format. Real time transcoding handles the conversion automatically, so a consumer doesn’t have to worry about what file format goes up and what comes down. Real time transcoding is a content creation tool that saves time and optimizes workflows.

- Asset caching – Asset caching provides for real time use of assets on the edge. It allows people to work collaboratively. (Example: Multiple people could work on a video file together in real time instead of handing it off to avoid overwriting each other’s work.) The fast file format for 5G and this caching optimization tool allow a limitless number of people to work on the same file in real time.
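The split rendering service in the list above divides work between a device and a server. A minimal Python sketch of that kind of partitioning follows. It is not Verizon's implementation: the `split_workload` function, the task names, the per-frame costs, and the device budget are all hypothetical.

```python
def split_workload(tasks, device_budget_ms):
    """Keep the cheapest tasks on the device and send the rest to the server.

    tasks: dict mapping task name -> estimated cost in milliseconds per frame.
    device_budget_ms: per-frame time the device can spend on rendering.
    """
    on_device, on_server = [], []
    remaining = device_budget_ms
    # Visit tasks from lightest to heaviest so the device keeps the light work.
    for name, cost in sorted(tasks.items(), key=lambda item: item[1]):
        if cost <= remaining:
            on_device.append(name)
            remaining -= cost
        else:
            on_server.append(name)
    return on_device, on_server


# Hypothetical per-frame cost estimates, in milliseconds.
frame_tasks = {
    "ui_overlay": 1.0,
    "pose_tracking": 2.0,
    "geometry": 6.0,
    "global_illumination": 20.0,
}
device, server = split_workload(frame_tasks, device_budget_ms=5.0)
print("device:", device)
print("server:", server)
```

In this sketch the two lightest tasks stay on the device and the two heaviest go to the server. A production system would also have to account for the network round trip when deciding what is worth offloading.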

At Mobile World Congress Americas in LA next week, Verizon will demonstrate some of these new capabilities at the Verizon 5G Built Right booth. Some of the demos include:

- Workspace with augmented reality: 1000 Augmented Realities + AR smart glasses map the workspace and render overlays and information displays.

- Qwake Tech: AR helmet attachment allows firefighters to see in low visibility environments.

- 3D handheld scanner: Volumetric scanner creates detailed renderings of objects and entire scenes.

- Verizon AR Shopping: Instantly and seamlessly overlays digital displays on top of physical products.

- Visceral Science: Educational VR experiences of essential science concepts (e.g., the lifecycle of a star) that align with middle and high school curricula.