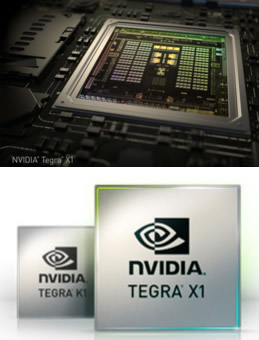

Nvidia Launches Tegra X1 Mobile Chip, Accelerates Move Into Smarter Automobiles

NVIDIA today unveiled Tegra X1, its next-generation mobile super chip with over one teraflops of processing power, delivering unprecedented graphics to mobile applications, car dashboards as well as auto-pilot systems. Tegra processors are built for embedded products, mobile devices, autonomous machines and automotive applications.

At a Sunday event in Las Vegas ahead of the Consumer Electronics Show, Nvidia Chief Executive Jen-Hsun Huang said the Tegra X1 chip would provide enough computing horsepower for automobiles with displays built into mirrors, dashboard, navigation systems and passenger seating.

"The future car is going to have an enormous amount of computational ability," Huang said. "We imagine the number of displays in your car will grow very rapidly."

Nvidia Tegra X1 has twice the performance of its predecessor, the Tegra K1, and will come out in early 2015. It has a 256-core Maxwell architecture GPU, 8 ARM CPU cores, and can deliver 60 frames per second of 4K video.

Tegra X1 supports all the major graphics APIs, including DirectX 12, OpenGL 4.5, CUDA, OpenGL ES 3.1 and the Android Extension Pack, as well as Epic's Unreal Engine 4, making it easier for developers to bring PC games to mobile.

Tegra X1's technical specifications include:

- 256-core Maxwell GPU (DX12, OpenGL 4.5, NVIDIA CUDA, OpenGL ES 3.1, and AEP)

- 8 CPU cores (4x ARM Cortex A57 2MB L2; 4x ARM Cortex A53 512KB L2)

- 60 fps 4K video (H.265, H.264, VP9)

- 1.3 gigapixels per second of camera throughput

- 20nm process

- 4K x 2K @60 Hz, 1080p @120 Hz (HDMI 2.0 60 fps, HDCP 2.2)

While Tegra K1 had a version with NVIDIA's custom Denver core for the CPU, NVIDIA has elected to use ARM's Cortex A57 and A53 in the Tegra X1. The A57 cluster has 2MB of L2 cache shared across its four cores, with 48KB instruction and 32KB data L1 caches per core. The A53 cluster has 512KB of L2 cache shared by all four cores, with 32KB instruction and 32KB data L1 caches per core.

Nvidia is not using the standard big.LITTLE configuration. Its design uses a custom interconnect rather than ARM's CCI-400, and it relies on cluster migration rather than global task scheduling (GTS). With GTS, all eight cores would be exposed to userspace applications; with cluster migration, only one four-core cluster is active at a time.
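
To make the distinction concrete, here is a minimal Python sketch of a cluster-migration policy. The load thresholds, the `ClusterMigrator` class and its `update` method are hypothetical illustrations, not NVIDIA's actual scheduler, whose policy has not been published. The point is only that the whole workload moves between the A53 and A57 clusters, so userspace never sees more than four cores at once.

```python
from dataclasses import dataclass

# Hypothetical thresholds; NVIDIA has not published its migration policy.
# Keeping UP_THRESHOLD well above DOWN_THRESHOLD adds hysteresis, so a load
# hovering near one value does not cause constant cluster switches.
UP_THRESHOLD = 0.80    # switch to the A57 (big) cluster above 80% load
DOWN_THRESHOLD = 0.30  # switch back to the A53 (LITTLE) cluster below 30% load


@dataclass
class ClusterMigrator:
    active: str = "A53"  # start on the power-efficient cluster

    def update(self, load: float) -> str:
        """Pick the active cluster for a load in [0.0, 1.0].

        Only one four-core cluster is powered at a time, so the OS
        schedules onto four cores rather than all eight.
        """
        if self.active == "A53" and load > UP_THRESHOLD:
            self.active = "A57"
        elif self.active == "A57" and load < DOWN_THRESHOLD:
            self.active = "A53"
        return self.active


migrator = ClusterMigrator()
print([migrator.update(load) for load in [0.2, 0.9, 0.5, 0.2, 0.5]])
# ['A53', 'A57', 'A57', 'A53', 'A53']
```

Under global task scheduling, by contrast, the scheduler would place individual threads on any of the eight cores instead of moving the whole workload between clusters.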

NVIDIA claims that on the CPU side, Tegra X1 significantly outperforms Samsung System LSI's Exynos 5433 in performance per watt, delivering 1.4x the performance at the same power, or the same performance at half the power. NVIDIA also uses System EDP (Electrical Design Point) management to control throttling and turbo, rather than ARM's IPA (Intelligent Power Allocation) drivers.

NVIDIA has also improved the rest of Tegra X1 relative to Tegra K1. The memory interface moves from 64-bit wide LPDDR3 to 64-bit wide LPDDR4, which raises peak memory bandwidth from 14.9 GB/s to 25.6 GB/s and improves power efficiency by around 40%. The maximum internal display resolution also rises from 3200x2000 at 60 Hz to 3840x2160 at 60 Hz, with support for VESA's Display Stream Compression. External display support improves significantly as well: with HDMI 2.0 and HDCP 2.2, Tegra X1 supports 4K60, whereas Tegra K1 supported only 4K30.
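
Peak bandwidth is simply bytes per transfer multiplied by transfers per second, so the quoted figures can be checked with a little arithmetic. This sketch assumes data rates of 1866 MT/s (LPDDR3-1866) for Tegra K1 and 3200 MT/s (LPDDR4-3200) for Tegra X1; those rates are inferred from the bandwidth numbers, not stated in the announcement, and the helper names are hypothetical.

```python
def peak_bandwidth_gbs(bus_width_bits: int, mega_transfers_per_s: float) -> float:
    """Peak DRAM bandwidth in GB/s: bytes moved per transfer x transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s / 1000  # MB/s -> GB/s


def display_pixel_rate(width: int, height: int, refresh_hz: int) -> int:
    """Pixels per second the display pipeline must deliver."""
    return width * height * refresh_hz


# Assumed data rates, inferred from the quoted bandwidth figures.
k1_bw = peak_bandwidth_gbs(64, 1866)  # LPDDR3-1866 (assumption)
x1_bw = peak_bandwidth_gbs(64, 3200)  # LPDDR4-3200 (assumption)
print(f"Tegra K1: {k1_bw:.1f} GB/s, Tegra X1: {x1_bw:.1f} GB/s")

# HDMI output: 4K30 on Tegra K1 vs 4K60 on Tegra X1.
ratio = display_pixel_rate(3840, 2160, 60) / display_pixel_rate(3840, 2160, 30)
print(f"4K60 needs {ratio:.0f}x the pixel rate of 4K30")
```

This prints `Tegra K1: 14.9 GB/s, Tegra X1: 25.6 GB/s` and `4K60 needs 2x the pixel rate of 4K30`. Since the bus width is unchanged at 64 bits, the entire bandwidth gain comes from the faster LPDDR4 transfer rate.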

The ISP is similar to the one we see in the Tegra K1 when it comes to feature set, but JPEG encode and decode is now five times as fast, going from 120 MP/s to 600 MP/s. For video encode and decode, there is support for 4K60 H.265 and VP9, with 10-bit color supported on H.265 decode. Beyond the new codecs, H.264 and VP8 now also support 4K60. Finally, the X1's storage controller now supports eMMC 5.1 for faster storage performance.
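
To put the JPEG rates in more tangible terms, the sketch below converts a pixel throughput into whole 3840x2160 frames per second. It assumes "MP/s" means 10^6 pixels per second and ignores any per-frame overhead; `jpeg_frames_per_s` is a hypothetical helper, not an NVIDIA API.

```python
def jpeg_frames_per_s(megapixels_per_s: float, width: int, height: int) -> float:
    """Whole frames per second a JPEG block can process at a given pixel rate."""
    return megapixels_per_s * 1_000_000 / (width * height)


for chip, rate in [("Tegra K1", 120), ("Tegra X1", 600)]:
    fps = jpeg_frames_per_s(rate, 3840, 2160)
    print(f"{chip}: about {fps:.1f} 4K frames/s")
```

Under these assumptions the output is about 14.5 frames/s for Tegra K1 and about 72.3 frames/s for Tegra X1, the same fivefold ratio as the raw pixel rates.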

So the Tegra X1 is a Tegra product with a Maxwell 2 GPU. Compared to Kepler before it, Maxwell 2 introduced several new features into NVIDIA's GPU architecture, including third-generation delta color compression, streamlined SMMs with greater efficiency per CUDA core, Voxel Global Illumination (VXGI) for real-time dynamic global illumination, and Multi-Frame Anti-Aliasing (MFAA) for lifelike graphics in the most demanding games and apps.

TDP is a limiting factor in all modern mobile devices, but Nvidia says that Maxwell's power optimizations, along with TSMC's 20nm SoC process, will further improve NVIDIA's SoC GPU performance within those power limits.

The X1 will also launch with a new GPU feature, which Nvidia calls "double speed FP16" support in its CUDA cores. The company is changing how it handles FP16 operations for X1. On the Tegra K1, FP16 operations were promoted to FP32 and run on the FP32 CUDA cores; on X1, FP16 operations can in certain cases be packed together as a single Vec2 and issued over a single FP32 CUDA core, so one core processes two FP16 values at once.
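
The packing idea can be emulated on the CPU with Python's standard `struct` module, whose `e` format code is IEEE 754 half precision. This is a hedged sketch of the data layout only: the helper names are hypothetical, and on the real chip the work happens in the GPU's CUDA cores (CUDA exposes packed FP16 through its `half2` type), not in software.

```python
import struct


def pack_half2(lo: float, hi: float) -> int:
    """Pack two FP16 values into one 32-bit word (lo in bits 0-15, hi in bits 16-31)."""
    (word,) = struct.unpack("<I", struct.pack("<ee", lo, hi))
    return word


def unpack_half2(word: int) -> tuple[float, float]:
    """Split a 32-bit word back into its two FP16 lanes."""
    return struct.unpack("<ee", struct.pack("<I", word))


def add_half2(x: int, y: int) -> int:
    """Add both FP16 lanes of two packed words, like one Vec2 instruction.

    Each lane sum is rounded back to FP16 when it is repacked.
    """
    (x_lo, x_hi), (y_lo, y_hi) = unpack_half2(x), unpack_half2(y)
    return pack_half2(x_lo + y_lo, x_hi + y_hi)


a = pack_half2(1.5, 2.0)
b = pack_half2(0.25, -1.0)
print(unpack_half2(add_half2(a, b)))  # (1.75, 1.0)
```

Two FP16 values occupy exactly the 32 bits of one FP32 register, which is why a single FP32 CUDA core can carry both lanes; the trade-off is FP16's reduced precision and range.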

Nvidia's rivals ARM and Imagination both have FP16 capabilities in their current-generation parts, and even AMD is going this route with GCN 1.2. So even if it works only for certain types of operations, this should help ensure NVIDIA doesn't pull ahead of the competition on FP32 only to fall behind on FP16.

FP16 operations are heavily used in Android's display compositor and are also used in mobile games at certain points. More critical to NVIDIA's goals, however, FP16 can also be leveraged for computer vision applications such as image recognition.

The Tegra X1 will be featured in the newly announced NVIDIA DRIVE car computers.

DRIVE PX is an auto-pilot computing platform. Featuring two Tegra X1 super chips, it has inputs for up to 12 high-resolution onboard cameras and can process up to 1.3 gigapixels per second, using that video to run features such as Surround-Vision, for a 360-degree view around the car, and Auto-Valet, for true self-parking.
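
As a rough illustration of what 1.3 gigapixels per second means across 12 cameras, the sketch below splits the budget evenly and converts it to a frame rate. The even split and the 1080p camera resolution are assumptions (the announcement only says "high-resolution"), processing overhead is ignored, and both helper functions are hypothetical.

```python
def per_camera_budget(total_pixels_per_s: float, cameras: int) -> float:
    """Pixels per second available to each camera if the load is split evenly."""
    return total_pixels_per_s / cameras


def sustainable_fps(pixels_per_s: float, width: int, height: int) -> float:
    """Frame rate a pixel budget supports at a given resolution."""
    return pixels_per_s / (width * height)


budget = per_camera_budget(1.3e9, 12)        # ~108 million pixels/s per camera
fps = sustainable_fps(budget, 1920, 1080)    # assumed 1080p cameras
print(f"{budget / 1e6:.0f} MP/s per camera, about {fps:.0f} fps at 1080p")
```

Under these assumptions each camera gets about 108 MP/s, or roughly 52 frames per second at 1080p.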

Its computer vision capabilities can enable Auto-Valet, allowing a car to find a parking space and park itself, without human intervention. While current systems offer assisted parallel parking in a specific spot, NVIDIA DRIVE PX can allow a car to discover open spaces in a crowded parking garage, park autonomously and then later return to pick up its driver when summoned from a smartphone.

The learning capabilities of DRIVE PX enable a car to learn to differentiate various types of vehicles -- for example, discerning an ambulance from a delivery van, a police car from a regular sedan, or a parked car from one about to pull into traffic. As a result, a self-driving car can detect subtle details and react to the nuances of each situation, like a human driver.

DRIVE CX is a complete cockpit platform designed to power the advanced graphics required across the increasing number of screens used for digital clusters, infotainment, head-up displays, virtual mirrors and rear-seat entertainment.

The computer is a complete hardware and software solution that enables advanced graphics and computer vision for navigation, infotainment, digital instrument clusters and driver monitoring. It also enables Surround-Vision, which provides a top-down, 360-degree view of the car in real time, solving the problem of blind spots, and it can completely replace a physical mirror with a digital smart mirror.

Available with either Tegra X1 or Tegra K1 processors, the DRIVE CX can power up to 16.8 million pixels on multiple displays -- more than 10 times that of current model cars.

Both NVIDIA DRIVE PX and DRIVE CX platforms include a range of software application modules from NVIDIA or third-party solutions providers. The DRIVE PX auto-pilot development platform and DRIVE CX cockpit computer will be available in the second quarter of 2015.

"We see a future of autonomous cars, robots and drones that see and learn, with seeming intelligence that is hard to imagine," said Jen-Hsun Huang, CEO and co-founder, NVIDIA. "They will make possible safer driving, more secure cities and great conveniences for all of us.

"To achieve this dream, enormous advances in visual and parallel computing are required. The Tegra X1 mobile super chip, with its one teraflops of processing power, is a giant step into this revolution."

Nvidia in recent years has been expanding beyond its core business of designing high-end graphics chips for personal computers.

After struggling to compete against larger chipmakers like Qualcomm in smartphones and tablets, Nvidia is now increasing its focus on using its Tegra mobile chips in cars and is already supplying companies including Audi, BMW and Tesla.

But even without wheels, Tegra X1 packs plenty of horsepower. One highlight from Nvidia's CES event was Epic Games' "Elemental" demo running on a Tegra X1 mobile processor.

Epic's Unreal Engine 4 demo has been a benchmark for what a high-end PC can do since it was first shown in 2012. A year later, it stunned developers when it debuted on Sony's PlayStation 4.

But while the demo is as impressive as ever, on Tegra X1 Elemental consumes just a fraction of the power it needs on those earlier platforms.

And because Tegra X1 supports a full suite of modern graphics standards, Nvidia says it took a skilled developer just a week to get "Elemental" running on the chip, with a little longer spent on optimization and polish. "The content just came up, and it ran," says Evan Hart, a member of Nvidia's Content & Technology team.

Unreal Engine 4, DirectX 12, OpenGL 4.5, CUDA, OpenGL ES 3.1 and the Android Extension Pack are all available to developers on Tegra X1.