Twitter Developing Video Sharing Tool

Twitter Inc is developing a Snapchat-style tool that makes it simpler for users to post videos on its app, Bloomberg reported on Thursday.

The company already has a working demo of the camera-centered product, Bloomberg reported, citing people who have seen it.

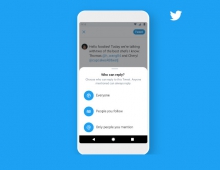

With the new product, posting video will be easier and more straightforward. Twitter users will no longer have to open the Twitter app, click the compose button, find the camera button, take the video or picture, and then click the tweet button.

The goal of the new feature is to entice people to share video clips of what's happening around them.

The design and the timing of the product's launch have not been settled, the report added.

Twitter declined to comment.

Facebook has copied features from Snap Inc.'s Snapchat, a mobile app focused on photos and videos.

Machine learning to crop photos

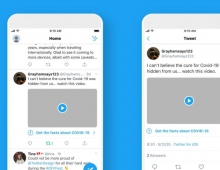

In related news, Twitter says that it is using machine learning to automatically crop picture previews to their most interesting part.

ML researcher Lucas Theis and ML lead Zehan Wang used a blog post to explain how they started just using facial recognition to crop images to faces, but found that this method didn't work with pictures of scenery, objects, and cats.

Their solution was "cropping using saliency". A region with high saliency is one that a person is likely to look at when freely viewing the image. Academics have studied and measured saliency using eye trackers, which record the pixels people fixate on. In general, people tend to pay more attention to faces, text, and animals, but also to other objects and regions of high contrast. This data can be used to train neural networks and other algorithms to predict what people might want to look at.

The basic idea is to use these predictions to center a crop around the most interesting region.
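The centering step described above can be sketched in a few lines. This is a minimal illustration only, not Twitter's implementation: the function name `crop_around_peak`, the list-of-rows saliency map, and the rule of centering on the single most salient pixel are all assumptions made for the example.

```python
def crop_around_peak(saliency, crop_w, crop_h):
    """Return (left, top, right, bottom) of a crop centered on the saliency peak.

    `saliency` is a hypothetical predicted map given as a list of rows of
    floats, one value per pixel. The right and bottom edges are exclusive.
    """
    height = len(saliency)
    width = len(saliency[0])
    if crop_w > width or crop_h > height:
        raise ValueError("crop is larger than the image")

    # Locate the most salient pixel (first one wins on ties).
    peak_y, peak_x = max(
        ((y, x) for y in range(height) for x in range(width)),
        key=lambda p: saliency[p[0]][p[1]],
    )

    # Center the crop on the peak, then clamp it so it stays inside the image.
    left = min(max(peak_x - crop_w // 2, 0), width - crop_w)
    top = min(max(peak_y - crop_h // 2, 0), height - crop_h)
    return left, top, left + crop_w, top + crop_h
```

The clamping matters when the salient region sits near an edge: the crop window slides back inside the image instead of running past its border.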

Thanks to recent advances in machine learning, saliency prediction has gotten a lot better. Unfortunately, the neural networks used to predict saliency are too slow to run in production, since Twitter needs to process every image uploaded to the service and crop it without affecting users' ability to share in real time. On the other hand, Twitter doesn't need fine-grained, pixel-level predictions, since it only needs to know roughly where the most salient regions are. In addition to optimizing the neural network's implementation, Twitter used two techniques to reduce its size and computational requirements.

First, the company used a technique called knowledge distillation to train a smaller network to imitate the slower but more powerful network. In this approach, an ensemble of large networks is used to generate predictions on a set of images. These predictions, together with some third-party saliency data, are then used to train a smaller, faster network.
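As a toy illustration of the distillation idea, the sketch below averages the outputs of several "teacher" functions and fits a much smaller "student" to those averaged predictions. Everything here is an assumption made for the example: the teachers are plain Python functions instead of neural networks, the student is a one-variable linear model, training uses plain gradient descent on squared error, and the third-party saliency data mentioned above is left out.

```python
def ensemble_predict(teachers, x):
    """Average the predictions of several teacher models for input x."""
    return sum(teacher(x) for teacher in teachers) / len(teachers)


def distill(teachers, inputs, steps=2000, lr=0.1):
    """Fit a tiny student s(x) = w * x + b to the ensemble's predictions.

    The student never sees ground-truth labels; its training targets are
    the teachers' averaged outputs, which is the core of distillation.
    """
    targets = [ensemble_predict(teachers, x) for x in inputs]
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(steps):
        grad_w = 0.0
        grad_b = 0.0
        for x, target in zip(inputs, targets):
            err = (w * x + b) - target
            # Gradients of the mean squared error with respect to w and b.
            grad_w += 2 * err * x / n
            grad_b += 2 * err / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b
```

For instance, with two hypothetical teachers `lambda x: 2 * x + 1` and `lambda x: 2 * x + 3`, the student trained on inputs between 0 and 1 should settle close to `w = 2`, `b = 2`, the ensemble average.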

Twitter says it drastically reduced the size of saliency prediction networks through a combination of knowledge distillation and pruning.

Second, the company developed a pruning technique that iteratively removes feature maps of the neural network which are costly to compute but contribute little to performance. To decide which feature maps to prune, Twitter computed the number of floating point operations required for each feature map and combined it with an estimate of the performance loss that removing it would cause.
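The selection logic can be sketched as a greedy loop. The report does not say how the two quantities are combined, so the scoring rule here (estimated loss increase divided by FLOPs saved) is an assumption, as are the function name `prune_feature_maps`, the FLOP budget as a stopping condition, and the fixed per-map estimates. A real system would likely re-estimate the loss after each removal.

```python
def prune_feature_maps(maps, flop_budget):
    """Greedily drop feature maps until total FLOPs fit within flop_budget.

    `maps` is a hypothetical dict: name -> (flops, estimated_loss_increase),
    where flops must be positive. Returns (kept_maps, removed_names).
    """
    kept = dict(maps)
    removed = []
    while kept and sum(flops for flops, _ in kept.values()) > flop_budget:
        # Assumed scoring rule: loss increase per FLOP saved.
        # The lowest score is the cheapest map to give up.
        victim = min(kept, key=lambda name: kept[name][1] / kept[name][0])
        removed.append(victim)
        del kept[victim]
    return kept, removed
```

Under this rule, an expensive feature map with a small estimated loss is removed before a cheap one that the network relies on heavily, which matches the stated goal of cutting maps that cost a lot but contribute little.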

Together, these two methods allowed Twitter to crop media 10x faster than a vanilla implementation of the model, and that is before any implementation optimizations.

These updates are currently being rolled out to everyone on twitter.com, iOS and Android.