Twitter to Start Warning Users That Post Offensive Replies

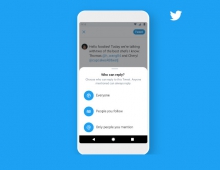

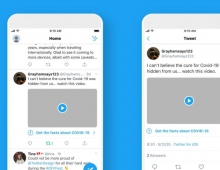

Twitter will test sending users a prompt when they reply to a tweet using “offensive or hurtful language,” in an effort to clean up conversations on the social media platform.

When users hit “send” on their reply, they will be told if the words in their tweet are similar to those in posts that have been reported, and asked whether they would like to revise it.

Twitter said the experiment will start on Tuesday and last at least a few weeks. It will run globally but only for English-language tweets.

The announcement was made in a tweet on Tuesday.

“We’re trying to encourage people to rethink their behavior and rethink their language before posting because they often are in the heat of the moment and they might say something they regret,” said Sunita Saligram, Twitter’s global head of site policy for trust and safety.

Twitter took action against almost 396,000 accounts under its abuse policies and more than 584,000 accounts under its hateful conduct policies between January and June of last year, according to its transparency report.