Intel Nervana NNP-L1000 Neural Network Processor Coming in 2019

Intel provided updates on its newest family of Intel Nervana Neural Network Processors (NNPs) at Intel AI DevCon, the company's inaugural AI developer conference.

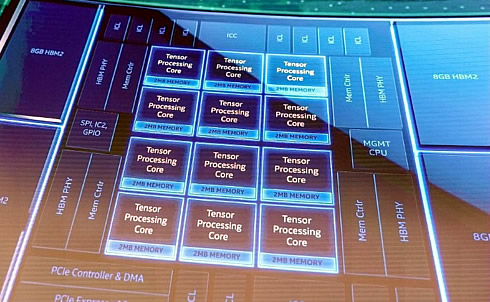

The Intel Nervana NNP is explicitly designed to achieve high compute utilization and to support true model parallelism through multichip interconnects.

Intel is building toward its first commercial NNP product offering, the Intel Nervana NNP-L1000 (Spring Crest), in 2019. The company expects the Intel Nervana NNP-L1000 to deliver 3-4 times the training performance of its first-generation Lake Crest product. Intel will also support bfloat16, a numerical format being adopted industrywide for neural networks, in the Intel Nervana NNP-L1000. Over time, Intel will extend bfloat16 support across its AI product lines, including Intel Xeon processors and Intel FPGAs.
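For readers unfamiliar with bfloat16, the following is a minimal Python sketch of the format itself: a 16-bit value that keeps the sign bit and all 8 exponent bits of an IEEE 754 float32 but only the top 7 mantissa bits. The function names are hypothetical, and the round-to-nearest-even conversion shown here is an assumption chosen for illustration; the announcement does not describe how Intel hardware performs the conversion.

```python
import struct


def float_to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x.

    Assumption: round-to-nearest-even on the discarded 16 bits.
    x must be representable as a float32 (struct raises OverflowError otherwise).
    """
    # Reinterpret the float32 encoding of x as an unsigned 32-bit integer.
    bits32 = struct.unpack("<I", struct.pack("<f", x))[0]

    # NaN: keep the upper half and force a mantissa bit so it stays a NaN.
    if (bits32 & 0x7F800000) == 0x7F800000 and (bits32 & 0x007FFFFF):
        return (bits32 >> 16) | 0x0040

    # Add 0x7FFF plus the lowest kept bit, then drop the lower 16 bits.
    # This rounds to nearest, with ties going to the even result.
    rounding_bias = 0x7FFF + ((bits32 >> 16) & 1)
    return ((bits32 + rounding_bias) >> 16) & 0xFFFF


def bfloat16_bits_to_float(bits16: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float via float32."""
    return struct.unpack("<f", struct.pack("<I", (bits16 & 0xFFFF) << 16))[0]


if __name__ == "__main__":
    for value in (1.0, 3.14159, -2.0):
        bits = float_to_bfloat16_bits(value)
        print(f"{value!r} -> 0x{bits:04X} -> {bfloat16_bits_to_float(bits)!r}")
```

Because the exponent field is the same width as in float32, bfloat16 covers the same dynamic range while giving up precision, which is why a value such as 3.14159 comes back as 3.140625 after a round trip.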

The company showed initial performance benchmarks for Intel's NNP family. These results come from the prototype of the Intel Nervana NNP (Lake Crest), on which the company is gathering feedback from its early partners:

- General matrix-to-matrix multiplication (GEMM) operations using A(1536, 2048) and B(2048, 1536) matrix sizes have achieved more than 96.4 percent compute utilization on a single chip. This represents around 38 TOP/s (tera-operations per second) of actual (not theoretical) performance on a single chip. Multichip distributed GEMM operations that support model-parallel training are realizing nearly linear scaling, with 96.2 percent scaling efficiency for A(6144, 2048) and B(2048, 1536) matrix sizes. This allows multiple NNPs to be connected together and avoids the memory constraints of other architectures.

- Intel is measuring 89.4 percent unidirectional chip-to-chip efficiency relative to theoretical bandwidth at less than 790 ns (nanoseconds) of latency, and is excited to apply this to the 2.4 Tb/s (terabits per second) high-bandwidth, low-latency interconnects.

All of this is happening within a single-chip total power envelope of under 210 watts.
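As a rough sanity check on the GEMM figures above, the arithmetic can be sketched in Python. This is a back-of-the-envelope illustration, not Intel's benchmark methodology: it assumes the conventional count of 2·M·K·N operations per GEMM (one multiply and one add per term), treats the quoted "around 38 TOP/s" and "more than 96.4 percent" as exact values, and uses a hypothetical chip count, since the announcement does not state how many chips the multichip test used.

```python
def gemm_ops(m: int, k: int, n: int) -> int:
    """Operations for A(m, k) x B(k, n), counting each multiply and add."""
    return 2 * m * k * n


# Figures quoted in the announcement, treated as exact for this estimate.
MEASURED_TOPS = 38.0        # "around 38 TOP/s" on a single chip
UTILIZATION = 0.964         # "more than 96.4 percent" compute utilization
SCALING_EFFICIENCY = 0.962  # multichip scaling efficiency

# Single-chip case: A(1536, 2048) x B(2048, 1536).
single_chip_ops = gemm_ops(1536, 2048, 1536)

# Peak throughput implied by measured throughput divided by utilization.
implied_peak_tops = MEASURED_TOPS / UTILIZATION

# Time for one such GEMM at the measured rate.
single_chip_seconds = single_chip_ops / (MEASURED_TOPS * 1e12)

# Multichip case: A(6144, 2048) x B(2048, 1536).
multi_chip_ops = gemm_ops(6144, 2048, 1536)


def effective_speedup(num_chips: int,
                      efficiency: float = SCALING_EFFICIENCY) -> float:
    """Speedup over one chip, assuming efficiency = speedup / num_chips.

    num_chips is a hypothetical input; the source does not give a chip count.
    """
    return num_chips * efficiency


if __name__ == "__main__":
    print(f"single-chip GEMM ops: {single_chip_ops:,}")
    print(f"implied peak:         {implied_peak_tops:.2f} TOP/s")
    print(f"time per GEMM:        {single_chip_seconds * 1e6:.1f} microseconds")
    print(f"multichip GEMM ops:   {multi_chip_ops:,}")
    print(f"speedup on 4 chips:   {effective_speedup(4):.3f}x (hypothetical)")
```

Under these assumptions the implied single-chip peak is a little over 39 TOP/s, and the multichip problem is exactly four times the size of the single-chip one.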