IBM to Present Latest Developments of Project Debater at Artificial Intelligence Conference

At the thirty-fourth AAAI conference on Artificial Intelligence (AAAI-20), IBM will present two papers on recent advancements in Project Debater.

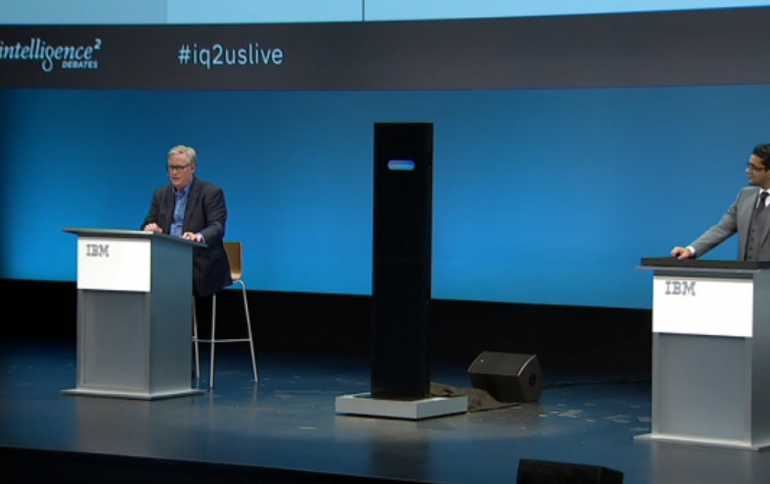

IBM has long been studying computational argumentation. IBM Project Debater is the first AI system that can debate humans on complex topics, and was presented live for the first time at IBM Think 2019. The system’s capabilities have also been extended with Project Debater Speech by Crowd, an AI cloud technology that collects free-text arguments from large audiences on debatable topics and generates meaningful narratives that concisely express the participants’ opinions. This past summer, IBM conducted a live experiment with Speech by Crowd at the IBM THINK conference in Tel Aviv, where the company collected arguments from more than 1,000 attendees on the legalization of marijuana. While on stage, Project Debater summarized the arguments and articulated narratives for the pro and con sides. The technology has since been demonstrated at events in the Swiss city of Lugano and at the University of Cambridge.

Last year, Project Debater adopted new multi-purpose pre-trained neural network models such as BERT (Bidirectional Encoder Representations from Transformers). An important feature of these pre-trained models is their ability to be fine-tuned for specific domains or tasks. This family of models has been shown to contribute to state-of-the-art results on a wide variety of NLP tasks.

At the heart of Project Debater is the ability to detect good arguments. In the context of argument mining, the goal is to pinpoint relevant, argumentative claims and evidence, which either support or contest a given topic, among billions of sentences. Moreover, when arguments are collected from thousands of sources, their persuasiveness, coherence and writing style vary greatly. Ranking arguments by quality allows selection of the “best” arguments, highlighting them to policy makers and facilitating a coherent narrative.

At the AAAI conference on Artificial Intelligence (AAAI-20), IBM will present two papers on recent advancements in Project Debater on these two core tasks, argument mining and argument quality ranking, both utilizing BERT. The first paper deals with the problem of mining specific types of argumentative texts from a massive corpus of journals and newspapers. The second paper presents a new dataset for argument quality and provides a BERT-based neural method for ranking arguments by quality.

In the first paper, Corpus wide argument mining – a working solution, by Ein-Dor et al., IBM presents the first end-to-end solution for corpus-wide argument mining: a pipeline that is given a controversial topic and automatically pinpoints supporting and contesting evidence for this topic within a massive corpus of journals and newspapers. The researchers demonstrate the efficiency and value of their solution on a corpus of 10 billion sentences, an order of magnitude larger than in previous work. To be of practical use, such a system needs to provide wide coverage of the relevant arguments, with high accuracy, over a wide array of potential topics. Such a system faces the challenging task of learning to recognize a specific flavor of argumentative texts (in this work, expert and study evidence) while overcoming the rarity of relevant texts within the corpus.

The first stage of IBM's solution is composed of dedicated sentence-level queries, designed to work as initial filters while at the same time focusing on retrieval of the desired types of arguments. The output of the queries is fed into a dedicated classifier, which recognizes the relevant argumentative texts by ranking them according to their confidence score. This sentence-level classifier is obtained by fine-tuning BERT over a large set of annotated sentences, created by applying an iterative scheme for data annotation to overcome the rarity of relevant texts in the corpus. The annotated dataset that was obtained by applying IBM's annotation scheme to Wikipedia is publicly available as part of this work. When tested on 100 unseen topics, IBM says that the system achieved an average accuracy of 95% for its top-ranked 40 sentences per topic, outperforming previously reported results by a highly significant margin. The high precision, together with the wide range of test topics, represents a significant step forward in the argument mining domain.
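The filter-then-rank flow described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the function names, the keyword-based `toy_query`, and the `toy_score` heuristic that stands in for the fine-tuned BERT classifier are hypothetical and are not IBM's implementation.

```python
from typing import Callable, List, Tuple


def mine_evidence(
    sentences: List[str],
    query_filter: Callable[[str], bool],
    score: Callable[[str], float],
    top_k: int = 40,
) -> List[Tuple[str, float]]:
    """Keep sentences that pass the query filter, then rank them by score."""
    candidates = [s for s in sentences if query_filter(s)]
    ranked = sorted(((s, score(s)) for s in candidates),
                    key=lambda pair: pair[1], reverse=True)
    return ranked[:top_k]


def precision_at_k(ranked_labels: List[bool], k: int) -> float:
    """Fraction of the top-k ranked sentences labeled as relevant."""
    top = ranked_labels[:k]
    return sum(top) / len(top) if top else 0.0


# Hypothetical stand-ins: a keyword query and a cue-counting "classifier".
def toy_query(sentence: str) -> bool:
    lowered = sentence.lower()
    return any(cue in lowered for cue in ("study", "according to", "researchers"))


def toy_score(sentence: str) -> float:
    cues = ("study", "found", "according to", "researchers", "percent")
    lowered = sentence.lower()
    return sum(cue in lowered for cue in cues) / len(cues)


corpus = [
    "A 2015 study found that the policy reduced accidents by 20 percent.",
    "According to researchers, the effect was small.",
    "I really like this policy.",
    "The study group met on Tuesday.",
]
for sentence, confidence in mine_evidence(corpus, toy_query, toy_score, top_k=2):
    print(f"{confidence:.2f}  {sentence}")
```

In a real system the score would come from a trained classifier, and precision would be measured against human labels for the top-ranked sentences of each topic.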

IBM's research on argument quality was first introduced at EMNLP 2019, in the context of the Speech by Crowd platform. In that work, IBM published a new dataset composed of actively collected arguments labeled for pair-wise and point-wise quality. In pair-wise annotation, two arguments are shown to the annotators, who are asked to select the higher-quality one. In point-wise annotation, a single argument is shown, and annotators are asked to grade its quality without any reference. To avoid the high subjectivity of rating a single argument on a scale, the point-wise annotations are binary (i.e., whether the argument has low or high quality), and IBM used a simple average of the positive annotations to derive a continuous score.
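As a small illustration of the simple-average scoring described above, the sketch below maps a set of binary judgments to a continuous score. The function name and the example labels are hypothetical.

```python
from typing import Sequence


def pointwise_score(binary_labels: Sequence[int]) -> float:
    """Fraction of annotators who judged the argument to be high quality."""
    if not binary_labels:
        raise ValueError("at least one annotation is required")
    if any(label not in (0, 1) for label in binary_labels):
        raise ValueError("labels must be 0 (low quality) or 1 (high quality)")
    return sum(binary_labels) / len(binary_labels)


# Hypothetical annotations: 1 = high quality, 0 = low quality.
print(pointwise_score([1, 1, 0, 1]))
```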

In the new AAAI-20 paper, A Large-scale Dataset for Argument Quality Ranking: Construction and Analysis, IBM's researchers extend this work, focusing on point-wise argument quality. They use crowd-sourcing to label around 30,000 arguments on 71 debatable topics, making the dataset five times larger than any previously published point-wise quality dataset. This dataset is made publicly available as part of this work. A main contribution in the creation of the dataset is the analysis of two additional scoring functions that map a set of binary annotations to a single continuous score. Both consider the individual annotators’ reliability. The first is based on inter-annotator agreement, as measured by Cohen’s kappa coefficient. The second is based on an estimate of each annotator’s competence, as evaluated by the MACE tool. Intuitively, in both cases, the idea is to give more weight to the judgments made by more reliable human annotators. The researchers compare the two functions in three evaluation tasks and find that, while the functions yield inherently different distributions of continuous labels, neither is consistently better than the other. Their analysis emphasizes the importance of selecting the scoring function, as the choice can affect the performance of learning algorithms. The researchers also experiment with fine-tuning BERT on these data, and their results suggest that it performs best when arguments are concatenated with their respective topic. This model achieves 0.53 Pearson and 0.52 Spearman correlations on IBM's test set, outperforming several simpler alternatives.
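The intuition behind reliability-weighted scoring can be sketched as follows. This is a minimal illustration and not the paper's exact formulation: it assumes an annotator's reliability is the mean Cohen's kappa with every other annotator over shared items, clips negative reliabilities to zero, and falls back to a plain average when all weights are zero. All names and data are hypothetical.

```python
from typing import Dict, List


def cohen_kappa(a: List[int], b: List[int]) -> float:
    """Cohen's kappa for two annotators' binary labels on the same items."""
    n = len(a)
    if n == 0 or n != len(b):
        raise ValueError("label lists must be non-empty and of equal length")
    observed = sum(x == y for x, y in zip(a, b)) / n
    pa, pb = sum(a) / n, sum(b) / n
    expected = pa * pb + (1 - pa) * (1 - pb)  # chance agreement
    if expected == 1.0:
        return 1.0 if observed == 1.0 else 0.0
    return (observed - expected) / (1 - expected)


def annotator_reliability(annotations: Dict[str, Dict[str, int]]) -> Dict[str, float]:
    """Mean pairwise kappa per annotator; annotations[annotator][item] = 0 or 1."""
    reliability = {}
    for a, a_labels in annotations.items():
        kappas = []
        for b, b_labels in annotations.items():
            shared = sorted(set(a_labels) & set(b_labels))
            if a != b and shared:
                kappas.append(cohen_kappa([a_labels[i] for i in shared],
                                          [b_labels[i] for i in shared]))
        reliability[a] = sum(kappas) / len(kappas) if kappas else 0.0
    return reliability


def weighted_score(labels: Dict[str, int], reliability: Dict[str, float]) -> float:
    """Average of binary labels, weighted by each annotator's reliability."""
    weights = {w: max(reliability.get(w, 0.0), 0.0) for w in labels}
    total = sum(weights.values())
    if total == 0:
        return sum(labels.values()) / len(labels)  # fall back to simple average
    return sum(weights[w] * labels[w] for w in labels) / total


# Hypothetical annotations for four arguments by three annotators.
annotations = {
    "ann1": {"x": 1, "y": 0, "z": 1, "w": 0},
    "ann2": {"x": 1, "y": 0, "z": 1, "w": 0},
    "ann3": {"x": 0, "y": 1, "z": 1, "w": 0},
}
reliability = annotator_reliability(annotations)
labels_for_x = {name: labels["x"] for name, labels in annotations.items()}
print(reliability, weighted_score(labels_for_x, reliability))
```

In this toy data the third annotator agrees with the others only at chance level, so that annotator's dissenting vote on argument `x` receives no weight, whereas a simple average would count it equally.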