Intel and Microsoft Enable AI Inference at the Edge with Intel Movidius Vision Processing Units on Windows ML

Today during Windows Developer Day, Microsoft announced Windows ML, which enables developers to perform machine learning tasks in the Windows OS.

Windows ML uses the best available hardware for any given artificial intelligence (AI) workload and intelligently distributes work across multiple hardware types, now including Intel Vision Processing Units (VPUs). The Intel VPU, a purpose-built chip for accelerating AI workloads at the edge, will allow developers to build and deploy deep neural network applications on Windows clients.

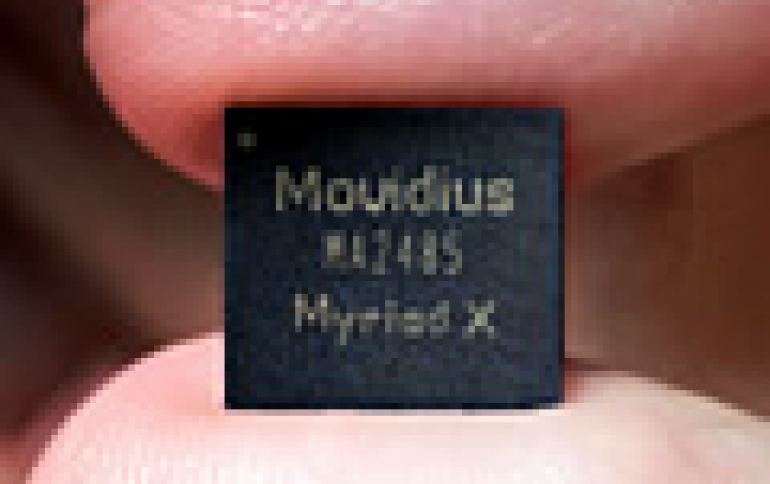

The Intel Movidius Myriad X VPU is the first system-on-chip shipping with a dedicated neural compute engine for hardware acceleration of deep learning inference at the edge. This third-generation VPU from Intel is specifically designed to run deep neural networks at high speed and low power, taking specific AI tasks off other hardware in the system.

By implementing support for Intel's VPU in Windows ML, Microsoft is giving independent software vendors (ISVs) the option of a dedicated deep learning inference solution, freeing up traditional hardware for other workloads or reducing overall system power consumption without requiring custom code. The Windows ML and Intel VPU combination has the potential to enable more intelligent client applications and core OS features, such as personal assistants, enhanced biometric security, and smart music and photo search and recognition.

For now, Intel's Movidius Myriad X is the first AI processor that Windows ML can take advantage of on mobile PCs. According to Intel, however, the Myriad X will soon find its way onto the motherboard.

Note, too, that the deal isn't exclusive to Intel/Movidius: plenty of desktop PCs already run machine-learning apps on Nvidia GPUs.

Windows ML also supports ONNX (Open Neural Network Exchange), an industry-standard format for ML models that is driven by Microsoft, Facebook, and Amazon Web Services and supported by Windows IHVs including NVIDIA, Intel, Qualcomm, and AMD.

Intel/Movidius's Myriad X VPU is housed in an 8 × 9 mm package and is capable of 4 trillion operations per second (TOPS) of total compute. Of that total, the dedicated neural compute engine delivers about one TOPS of deep-neural-network inference performance, while keeping power use within a watt.

In designing Myriad X, Movidius explained that it increased the number of its Streaming Hybrid Architecture Vector Engine (SHAVE) DSP cores from 12 (in Myriad 2) to 16, and added a neural compute engine with more than 20 enhanced hardware accelerators. These accelerators are designed to perform specific tasks without additional compute overhead.