Intel Combines Optane Options with New MCP Cascade Lake and Xeon E-2100 Processors

Intel's latest data center strategy includes two new members of its Xeon processor family. The Xeon E-2100 processor for entry-level servers is available immediately, while the Cascade Lake advanced performance processor will be released in the first half of next year.

Cascade Lake advanced performance represents a new class of Intel Xeon Scalable processors designed for the most demanding high-performance computing (HPC), artificial intelligence (AI) and infrastructure-as-a-service (IaaS) workloads. The processor uses a performance-optimized multi-chip package to deliver up to 48 cores per CPU and 12 DDR4 memory channels per socket. Intel shared initial details of the processor in advance of the Supercomputing 2018 conference.

Intel says the combination of more Intel Xeon Scalable processor cores and higher memory bandwidth per socket will yield significant performance gains, especially on HPC workloads. The company expects Linpack performance gains of up to 1.21X vs. the Intel Xeon Scalable 8180 processor and 3.4X vs. the best competing processor on the market, which it attributes to Cascade Lake advanced performance’s core performance improvements. It also expects Stream Triad performance gains of 1.83X vs. the Intel Xeon Scalable 8180 processor and 1.3X vs. the best competing processor, reflecting the increase in memory bandwidth over Intel’s current-generation platform. Intel expects the new processor to hold performance leadership across a range of data-centric workloads through 2019 vs. any other CPUs entering the market. Cascade Lake advanced performance will also use the Intel Deep Learning Boost capability featured on all Cascade Lake processors to improve AI/deep learning inference performance by up to 17X vs. Intel Xeon Platinum processor measurements at launch last July. Intel is working with its OEM customers to bring these systems to market, with introduction expected in the first half of 2019.
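The paired Linpack and Stream Triad figures quoted above also imply how the existing Xeon Scalable 8180 compares with the unnamed competitor. The short calculation below is a rough sketch, assuming both speedups in each pair were measured on the same benchmark configuration and can be divided directly; Intel's claims are vendor projections, so the derived ratios are only indicative. The function and dictionary names are illustrative.

```python
# Speedups for Cascade Lake advanced performance as quoted in the article
# (vendor-claimed figures, not independent measurements).
CLAIMED = {
    "Linpack": {"vs_xeon_8180": 1.21, "vs_best_competitor": 3.4},
    "Stream Triad": {"vs_xeon_8180": 1.83, "vs_best_competitor": 1.3},
}


def implied_8180_vs_competitor(vs_8180: float, vs_competitor: float) -> float:
    """Return the Xeon 8180's performance relative to the competitor.

    (new / competitor) divided by (new / 8180) leaves (8180 / competitor).
    Assumes both claims refer to comparable benchmark runs.
    """
    return vs_competitor / vs_8180


def implied_ratios(claims=CLAIMED) -> dict:
    """Compute the implied 8180-vs-competitor ratio for each benchmark."""
    return {
        name: round(
            implied_8180_vs_competitor(
                c["vs_xeon_8180"], c["vs_best_competitor"]
            ),
            2,
        )
        for name, c in claims.items()
    }


if __name__ == "__main__":
    for name, ratio in implied_ratios().items():
        print(f"{name}: Xeon 8180 is about {ratio}x the competitor")
```

Under those assumptions, the figures suggest the 8180 already leads on Linpack (about 2.81x) but trails on Stream Triad (about 0.71x), which is consistent with the article's emphasis on the move to 12 memory channels.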

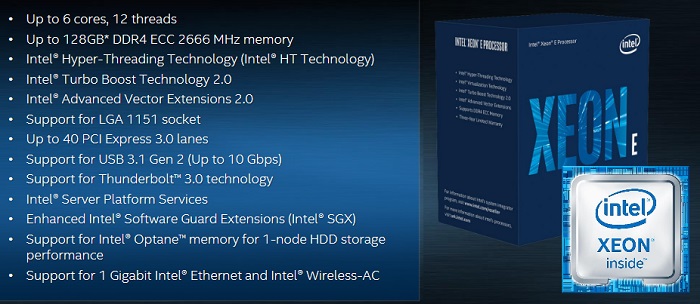

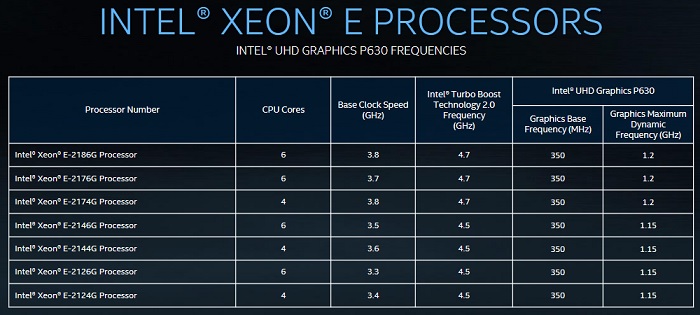

Intel is also bringing the Intel Xeon E processor to servers, delivering essential performance for 1S entry server solutions and offering a platform foundation for trusted enclave delivery via enhanced Intel Software Guard Extensions (Intel SGX). Trusted enclaves let businesses store their data and applications in a digital vault within a server, and industry partners have tapped them to deliver trusted key protection as well as trusted blockchain transaction management. Cloud service providers including Microsoft and IBM have also used the technology to offer hardware-enhanced confidential computing services to customers.

Intel Xeon E-2100 processors are available today through Intel and distributors.

Intel is also re-imagining the memory/storage hierarchy to handle high-performance computing (HPC), artificial intelligence (AI) and infrastructure-as-a-service (IaaS) workloads, and that’s where Optane comes into play. The company places its Optane DC persistent memory just below DRAM, the hottest tier. Below the persistent memory are Intel’s Optane SSDs, followed by its 3D NAND SSDs, with spinning disk sitting at the bottom.

As Intel outlined last month, the company sees the technology working in combination with Xeon Scalable processors through two special operating modes. App Direct mode lets applications already tuned for the technology take full advantage of the product’s native persistence and larger capacity.

In Memory mode, applications running in a supported operating system or virtual environment can use Optane as volatile memory, just like DRAM, and take advantage of the additional system capacity made possible by module sizes of up to 512 GB without needing to rewrite software. And like DRAM, the data is gone once the system is powered down.
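The practical difference between the two modes is that Memory mode needs no code changes, while App Direct mode requires software to address the persistent region explicitly. One common pattern for this is memory-mapping a file and flushing stores to it. The sketch below illustrates only that programming pattern: it uses an ordinary temporary file as a stand-in for a persistent-memory-backed file, and the function names and region size are hypothetical, not part of any Intel API.

```python
import mmap
import os
import tempfile

REGION_SIZE = 4096  # illustrative size; real persistent regions are far larger


def write_persistent(path: str, payload: bytes) -> None:
    """Store payload through a memory mapping and flush it to the backing file."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            region[: len(payload)] = payload  # plain byte-addressable store
            region.flush()  # make the store durable before unmapping


def read_persistent(path: str, length: int) -> bytes:
    """Re-map the file, as a restarted application would, and read the data back."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE, access=mmap.ACCESS_READ) as region:
            return bytes(region[:length])


def demo() -> bytes:
    """Write a record, drop the mapping, then recover the record from a new mapping."""
    payload = b"checkpoint-42"
    fd, path = tempfile.mkstemp()  # stand-in for a file on persistent memory
    try:
        with os.fdopen(fd, "wb") as f:
            f.truncate(REGION_SIZE)  # size the file so it can be mapped
        write_persistent(path, payload)
        return read_persistent(path, len(payload))
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

In Memory mode none of this is needed: the application allocates memory as usual and simply sees a larger, volatile pool.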

The Optane DIMMs have been shipping since August and are DDR4 compatible.