Mitsubishi Develops Voice-activated Drawing Function That Displays Spoken Words Where Finger is Traced on Screen

Mitsubishi Electric has developed a technology that combines voice recognition with a drawing function, letting users display their spoken words on a tablet or smartphone by dragging a finger across the screen. The technology is expected to be combined with other functions, such as picture drawing and multilingual translation, to help people overcome disability and language barriers for smoother global communication.

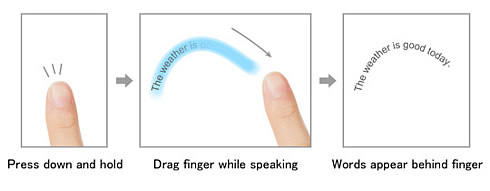

The technology, available for Android devices, lets users speak while pressing a finger on the screen; their spoken words are then displayed wherever the finger is dragged. Because there is no need to write words out by hand, it is easier than conversing in writing.
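Mitsubishi has not described how the recognized words are laid out along the finger's path. As a purely hypothetical illustration, one simple approach is to treat the traced path as a polyline of touch points and place each recognized character at equal arc-length intervals along it. The function name `place_text_along_path`, the `(x, y)` point format, and the fixed `spacing` parameter are assumptions made for this sketch, not part of Mitsubishi's system.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def place_text_along_path(text: str, path: List[Point], spacing: float) -> List[Tuple[str, Point]]:
    """Hypothetical layout step: return (character, position) pairs spaced
    `spacing` apart along the traced path. Characters that do not fit on
    the path are dropped."""
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    placements: List[Tuple[str, Point]] = []
    if not text or len(path) < 2:
        return placements

    char_index = 0
    next_dist = 0.0   # arc length at which the next character should be placed
    travelled = 0.0   # arc length at the start of the current segment

    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        seg_len = math.hypot(x1 - x0, y1 - y0)
        # Place every character whose target arc length falls on this segment.
        while char_index < len(text) and next_dist <= travelled + seg_len:
            t = (next_dist - travelled) / seg_len if seg_len > 0 else 0.0
            pos = (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
            placements.append((text[char_index], pos))
            char_index += 1
            next_dist += spacing
        travelled += seg_len

    return placements


# Usage: an L-shaped trace with characters 2 units apart.
print(place_text_along_path("abcd", [(0, 0), (3, 0), (3, 4)], 2.0))
```

A real app would also rotate each glyph to follow the path's direction and scale the spacing to the font size; equal arc-length spacing is used here only because it is the simplest way to keep characters evenly distributed regardless of how fast the finger moves.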

The addition of multilingual translation would enable displayed words to be translated for face-to-face communication with speakers of other languages.

People with hearing disabilities cannot watch a speaker's mouth and hands at the same time, which makes it difficult to follow a conversation that combines speech and gestures. Mitsubishi's technology lets a listener with a hearing disability keep looking at the screen while the speaker talks and gestures. In addition, stored images displayed on the screen can be overlaid with the text of the speaker's words to explain the content of the images more clearly.

When the screen is tapped twice, the system analyzes the handwritten input and reads the written words aloud or translates them into another language. This allows foreigners to communicate with people who are hard of hearing, and lets people in noisy environments communicate with others.

The technology is compatible with the Android OS.