NVIDIA CEO Lays Out Plan to Accelerate AI, Unveils New Orin Chip For Self-Driving Vehicles, Gaming Agreement With Tencent

NVIDIA founder and CEO Jensen Huang took the stage Wednesday to unveil the latest technology for speeding the mass adoption of AI.

His talk at this week’s GPU Technology Conference in Suzhou touched on advancements in AI deployment, as well as NVIDIA’s work in the automotive, gaming, and healthcare industries.

“We build computers for the Einsteins, Leonardo da Vincis, Michelangelos of our time,” Huang told the crowd, which overflowed into the aisles. “We build these computers for all of you.”

Huang explained that demand is surging for technology that can accelerate the delivery of AI services of all kinds. And NVIDIA’s deep learning platform — which the company updated Wednesday with new inferencing software — promises to be the fastest, most efficient way to deliver these services.

“It is accepted now that GPU accelerated computing is the path forward as Moore’s law has ended,” Huang said.

The latest challenge for accelerated computing: driving a new generation of powerful systems, known as recommender systems, able to connect individuals with what they’re looking for in a world where the options available to them are spiraling exponentially.

“The era of search has ended: if I put out a trillion, billion million things and they’re changing all the time, how can you find anything,” Huang asked. “The era of search is over. The era of recommendations has arrived.”

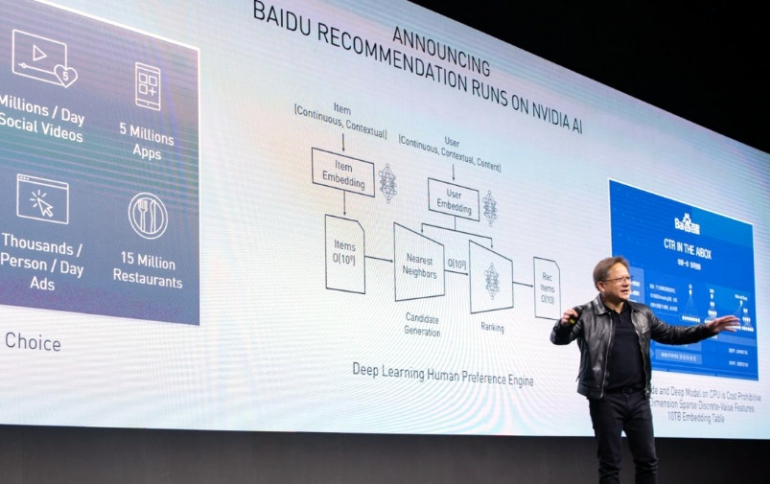

Baidu, one of the world’s largest search companies, is harnessing NVIDIA technology to power advanced recommendation engines.

“It solves this problem of taking this enormous amount of data, and filtering it through this recommendation system so you only see 10 things,” Huang said.

With GPUs, Baidu can now train the models that power its recommender systems 10x faster, reducing costs, and, over the long term, increasing the accuracy of its models, improving the quality of its recommendations.

Another example of such systems’ power: Alibaba, which relies on NVIDIA technology to help power the recommendation engines behind the success of Singles’ Day.
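The filtering step Huang described, narrowing an enormous catalog down to the 10 things a user actually sees, can be illustrated as a top-k selection over per-item scores. The sketch below is only an illustration of that final step: the item names and the scoring rule are hypothetical stand-ins, and a production recommender would get its scores from a trained model rather than a formula.

```python
import heapq

def top_k(scores, k=10):
    """Return the k item ids with the highest scores, best first."""
    best = heapq.nlargest(k, scores.items(), key=lambda kv: kv[1])
    return [item for item, _ in best]

# Hypothetical catalog: 1,000 items with made-up relevance scores.
# (i * 37) % 1000 simply spreads the scores out; it is not a real model.
catalog_scores = {f"item-{i}": (i * 37) % 1000 for i in range(1000)}

recommended = top_k(catalog_scores, k=10)
print(recommended)
```

Using `heapq.nlargest` avoids sorting the whole catalog when only a handful of results are needed, which matters more as the catalog grows.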

Huang also announced new inference software enabling smarter, real-time conversational AI.

NVIDIA TensorRT 7 — the seventh generation of the company’s inference software development kit — features a new deep learning compiler designed to automatically optimize and accelerate the increasingly complex recurrent and transformer-based neural networks needed for complex new applications, such as AI speech.

This speeds the components of conversational AI by 10x compared to CPUs, driving latency below the 300-millisecond threshold considered necessary for real-time interactions.

Among the companies already using NVIDIA’s conversational AI acceleration is Sogou, which provides search services to WeChat, the world’s most frequently used application on mobile phones.

TensorRT 7 speeds up a universe of AI models that are being used to make predictions on time-series, sequence-data scenarios that use recurrent loop structures, called RNNs. In addition to being used for conversational AI speech networks, RNNs help with arrival time planning for cars or satellites, prediction of events in electronic medical records, financial asset forecasting and fraud detection.

With TensorRT’s new deep learning compiler, developers everywhere now have the ability to automatically optimize these networks — such as bespoke automatic speech recognition networks, and WaveRNN and Tacotron 2 for text-to-speech.

The new compiler also optimizes transformer-based models like BERT for natural language processing.

NVIDIA’s inference platform — which includes TensorRT, as well as several NVIDIA CUDA-X AI libraries and NVIDIA GPUs — delivers low-latency, high-throughput inference for applications beyond conversational AI, including image classification, fraud detection, segmentation, object detection and recommendation engines. Its capabilities are used by some of the world’s leading enterprise and consumer technology companies, including Alibaba, American Express, Baidu, PayPal, Pinterest, Snap, Tencent and Twitter.

TensorRT 7 will be available in the coming days for development and deployment, without charge to members of the NVIDIA Developer program. The latest versions of plug-ins, parsers and samples are also available as open source from the TensorRT GitHub repository.

“To have the ability to understand your intention, make recommendations, do searches and queries for you, and summarize what they’ve learned to a text to speech system… that loop is now possible,” Huang said. “It is now possible to achieve very natural, very rich, conversational AI in real time.”

Automotive Innovations

Huang also announced NVIDIA will provide the transportation industry with source access to its NVIDIA DRIVE deep neural networks (DNNs) for autonomous vehicle development.

NVIDIA is providing source access to its pre-trained AI models and training code to AV developers. Using a suite of NVIDIA AI tools, the ecosystem can extend and customize the models to increase the capabilities of their self-driving systems.

In addition to providing source access to the DNNs, Huang announced the availability of a suite of advanced tools so developers can customize and enhance NVIDIA’s DNNs, using their own data sets and target feature sets. These tools allow the training of DNNs using active learning, federated learning and transfer learning, Huang said.

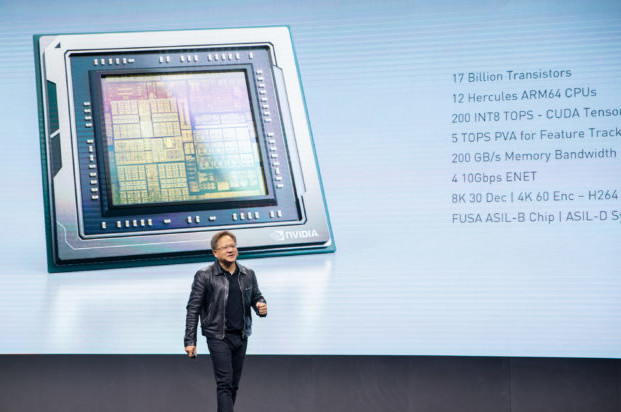

Huang also announced NVIDIA DRIVE AGX Orin, calling it "the world’s highest performance and most advanced system-on-a-chip." It delivers 7x the performance and 3x the efficiency per watt of Xavier, NVIDIA’s previous-generation automotive SoC. Orin, which will be available to be incorporated in customer production runs for 2022, has 17 billion transistors and 12 CPU cores, and is capable of over 200 trillion operations per second (TOPS).

According to NVIDIA, the Orin chip can handle over 200 GB/s of data. By way of comparison, the Tesla Autopilot V2.5 computer, which also used older generations of NVIDIA chips, was limited to about 2 GB/s. The Xavier SoC delivers its 30 TOPS at about 30W of power consumption, and the two chips used by Tesla consume about 72W. According to Danny Shapiro, NVIDIA’s senior director of automotive, the Orin SoC should have about three times the performance per watt of Xavier, or somewhere between 60 and 70W for 200 TOPS.
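As a rough check on the figures quoted above, the short calculation below works out TOPS per watt for both chips, using only the numbers in this article (30 TOPS at about 30W for Xavier, 200 TOPS at 60 to 70W for Orin). The function and variable names are illustrative, and the results are back-of-the-envelope estimates, not official specifications.

```python
def tops_per_watt(tops, watts):
    """Efficiency as trillions of operations per second per watt."""
    return tops / watts

# Figures as quoted in the article.
xavier_eff = tops_per_watt(30, 30)      # about 1.0 TOPS/W
orin_eff_low = tops_per_watt(200, 70)   # Orin at the high end of its power range
orin_eff_high = tops_per_watt(200, 60)  # Orin at the low end of its power range

performance_ratio = 200 / 30            # raw throughput, Orin vs. Xavier

print(f"Xavier efficiency: {xavier_eff:.2f} TOPS/W")
print(f"Orin efficiency:   {orin_eff_low:.2f} to {orin_eff_high:.2f} TOPS/W")
print(f"Efficiency gain:   {orin_eff_low / xavier_eff:.1f}x to {orin_eff_high / xavier_eff:.1f}x")
print(f"Performance gain:  {performance_ratio:.1f}x")
```

On these numbers the efficiency gain lands at roughly 3x and the raw performance gain at just under 7x, in line with the headline figures NVIDIA cited.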

The Orin SoC integrates NVIDIA’s next-generation GPU architecture and Arm Hercules CPU cores, as well as new deep learning and computer vision accelerators.

Orin will be woven into a stack of products, all running a single architecture and compatible with software developed on Xavier, able to scale from simple Level 2 autonomy all the way up to full Level 5 autonomy.

Since both Orin and Xavier are programmable through open CUDA and TensorRT APIs and libraries, developers can leverage their investments across multiple product generations.

The NVIDIA DRIVE AGX Orin family will include a range of configurations based on a single architecture, targeting automakers’ 2022 production timelines.

And Huang announced that DiDi, the world’s largest ride-hailing company, will adopt NVIDIA DRIVE to bring robotaxis and intelligent ride-hailing services to market.

DiDi will use NVIDIA GPUs in the data center for training machine learning algorithms and NVIDIA DRIVE for inference on its Level 4 autonomous driving vehicles. In August, DiDi upgraded its autonomous driving unit into an independent company and began a wide range of collaborations.

As part of the centralized AI processing of DiDi’s autonomous vehicles, NVIDIA DRIVE enables data to be fused from all types of sensors (cameras, lidar, radar, etc.) using numerous deep neural networks (DNNs) to understand the 360-degree environment surrounding the car and plan a safe path forward.

To train these DNNs, DiDi will use NVIDIA GPU data center servers. For cloud computing, DiDi will also build an AI infrastructure and launch virtual GPU (vGPU) cloud servers for computing, rendering and gaming.

Game On

Adding to NVIDIA’s footprint in cloud gaming, Huang announced a collaboration with Tencent Games.

“We are going to extend the wonderful experience of PC gaming to all the computers that are underpowered today, the opportunity is quite extraordinary,” Huang said. “We can extend PC gaming to the other 800 million gamers in the world.”

NVIDIA’s technology will power Tencent Games’ START cloud gaming service, which began testing earlier this year. START gives gamers access to AAA games on underpowered devices anytime, anywhere. Tencent Games intends to scale the platform to millions of gamers, with an experience that is consistent with playing locally on a gaming rig.

Earlier this year, NVIDIA announced collaborations with Japan’s SoftBank and Korea’s LG U+ for cloud gaming solutions. Additionally, NVIDIA operates GeForce NOW in select North American and European markets, delivering a dedicated PC gaming experience in the cloud to gamers around the world.

NVIDIA and Tencent Games also announced a joint innovation lab for gaming. They will work together to explore new applications for AI in games, game engine optimizations and new lighting techniques including ray tracing and light baking.

Huang also announced that six game developers will join the ranks of game developers around the world who have been using the real-time ray tracing capabilities of NVIDIA’s GeForce RTX to transform the image quality and lighting effects of their upcoming titles.

Ray tracing is a graphics rendering technique that brings real-time, cinematic-quality rendering to content creators and game developers. NVIDIA GeForce RTX GPUs contain specialized processor cores designed to accelerate ray tracing so the visual effects in games can be rendered in real time.

The upcoming games include a mix of blockbusters, new franchises, triple-A titles and indie fare — all using real-time ray tracing to bring ultra-realistic lighting models to their gameplay.

They include Boundary, from Surgical Scalpels Studios; Convallaria, from LoongForce; F.I.S.T., from Shanghai TiGames; an unnamed project from miHoYo; Ring of Elysium, from Tencent; and Xuan Yuan Sword VII, from Softstar.

Accelerating Medical Advances, 5G

This year, Huang said, NVIDIA has added two major new applications to CUDA: 5G vRAN and genomic processing. With each, NVIDIA is supported by world leaders in their respective industries: Ericsson in telecommunications and BGI in genomics.

“The ability to sequence the human genome in its totality is incredibly powerful,” Huang said.

Huang announced that NVIDIA is working with Beijing Genomics Institute.

BGI is using NVIDIA V100 GPUs and software from Parabricks, an Ann Arbor, Michigan-based startup acquired by NVIDIA earlier this month, to build the highest throughput genome sequencer yet, potentially driving down the cost of genomics-based personalized medicine.

“It took 15 years to sequence the human genome for the first time,” Huang said. “It is now possible to sequence 16 whole genomes per day.”

Huang also announced that the NVIDIA Parabricks Genomic Analysis Toolkit is now available, including on NGC, NVIDIA’s hub for GPU-optimized software for deep learning, machine learning, and high-performance computing.

Robotics with NVIDIA Isaac

As the talk wound to a close, Huang announced a new version of NVIDIA’s Isaac software development kit. The Isaac SDK achieves a milestone in establishing a unified robotic development platform — enabling AI, simulation and manipulation capabilities.

The showstopper: Leonardo, a robotic arm with exquisite articulation created by NVIDIA researchers in Seattle, that not only performed a sophisticated task — recognizing and rearranging four colored cubes — but responded almost tenderly to the actions of the people around it in real time. It purred out a deep squeak, seemingly out of a Steven Spielberg movie.

As the audience watched, the robotic arm was able to gently pluck a yellow colored block from Huang’s hand and set it down. It then went on to rearrange four colored blocks, gently stacking them with fine precision.

The feat was the result of sophisticated simulation and training that allows the robot to learn in virtual worlds before being put to work in the real world. “And this is how we’re going to create robots in the future,” Huang said.

The SDK includes the Isaac Robotics Engine (which provides the application framework), Isaac GEMs (pre-built deep neural network models, algorithms, libraries, drivers and APIs), reference apps for indoor logistics, as well as the first release of Isaac Sim (offering navigation capabilities).

As a result, the new Isaac SDK can greatly accelerate developing and testing robots for researchers, developers, startups and manufacturers.

To jumpstart AI robotic development, the new Isaac SDK includes a variety of camera-based perception deep neural networks. Among them:

- Object detection — recognizes objects for navigation, interaction or manipulation

- Free space segmentation — detects and segments the external world, such as determining where a sidewalk is and where a robot is allowed to travel

- 3D pose estimation — understands an object’s position and orientation, enabling such tasks as a robotic arm picking up an object

- 2D human pose estimation — applies pose estimation to humans, which is important for robots that interact with people, such as delivery bots, and for cobots, which are specifically designed to work together with humans

The SDK’s object detection has also been updated with the ResNet deep neural network, which can be trained using NVIDIA’s Transfer Learning Toolkit. This makes it easier to add new objects for detection and train new models that can get up and running with high accuracy levels.

The new release introduces an important capability — using Isaac Sim to train a robot and deploy the resulting software into a real robot that operates in the real world. This promises to greatly accelerate robotic development, enabling training with synthetic data.

Simulation enables testing in so-called corner cases — that is, under difficult, unusual circumstances — to further sharpen training. Feeding these results into the training pipeline enables neural networks to improve accuracy based on both real and simulated data.

The new SDK provides multi-robot simulation, as well. This allows developers to put multiple robots into a simulation environment for testing, so they learn to work in relation to each other. Individual robots can run independent versions of Isaac’s navigation software stack while moving within a shared virtual environment.

The new SDK also integrates support for NVIDIA DeepStream software, which is widely used for video analytics. Video streams can be processed using DeepStream and NVIDIA GPUs for AI at the edge, supporting robotic applications.

Developers can now build a wide variety of robots that require analysis of camera video feeds, provided for both onboard applications and remote locations.

Finally, for robot developers who have developed their own code, the new SDK is designed to integrate that work, with the addition of a new API based on the C programming language.

This enables developers to connect their own software stacks to the Isaac SDK and minimize programming language conversions, giving users access to Isaac’s features through the C API.

The C API also allows the Isaac SDK to be used from other programming languages.