NVIDIA Unveils Cloud GPU Technologies at GTC

NVIDIA today kicked off the GPU Technology Conference

(GTC) by unveiling technologies that accelerate cloud

computing using the computing capabilities of the GPU.

Nvidia Corp Chief Executive Jen-Hsun Huang wants his graphics chips to be adopted in data centers to help stream better graphics to smartphones and tablets, his company's newest bid to diversify beyond personal computers.

NVIDIA's cloud GPU technologies are based on the company's new Kepler GPU architecture, designed for use in large-scale data centers. Its virtualization capabilities allow GPUs to be simultaneously shared by multiple users. NVIDIA claims that its ultra-fast streaming display capability eliminates lag, making a remote data center feel like it's just next door. And its extreme energy efficiency and processing density lowers data center costs.

"Kepler cloud GPU technologies shifts cloud computing into a new gear," said Jen-Hsun Huang, NVIDIA president and chief executive officer. "The GPU has become indispensable. It is central to the experience of gamers. It is vital to digital artists realizing their imagination. It is essential for touch devices to deliver silky smooth and beautiful graphics. And now, the cloud GPU will deliver amazing experiences to those who work remotely and gamers looking to play untethered from a PC or console."

NVIDIA VGX Platform

The enterprise implementation of Kepler cloud technologies, the NVIDIA VGX platform, accelerates virtualized desktops. The platform enables IT departments to deliver a virtualized desktop with the graphics and GPU computing performance of a PC or workstation to employees using any connected device.

With the NVIDIA VGX platform in the data center, employees can now access a true cloud PC from any device -- thin client, laptop, tablet or smartphone -- regardless of its operating system. NVIDIA claims that VGX enables knowledge workers for the first time to access a GPU-accelerated desktop similar to a traditional local PC.

Integrating the VGX platform into the corporate network also enables enterprise IT departments to address the complex challenges of "BYOD" -- employees bringing their own computing device to work. It delivers a remote desktop to these devices, providing users the same access they have on their desktop terminal.

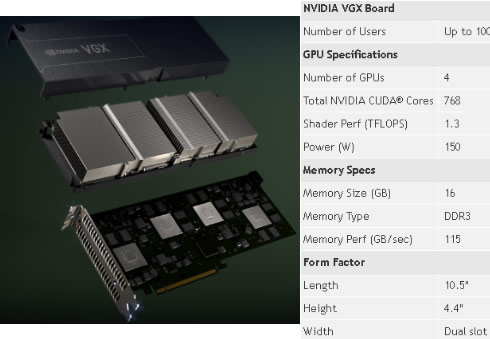

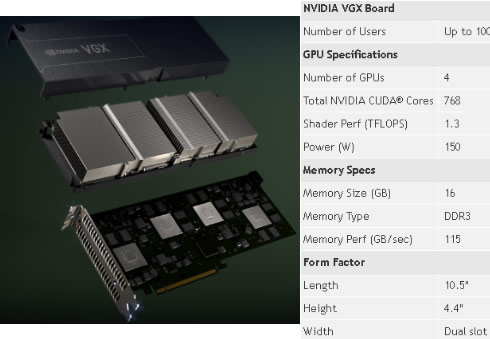

NVIDIA VGX is based on NVIDIA VGX Boards, NVIDIA VGX GPU Hypervisor and NVIDIA User Selectable Machines (USMs).

NVIDIA VGX Boards are designed for hosting large numbers of users in an energy-efficient way. The first NVIDA VGX board is configured with four GPUs and 16 GB of memory, and fits into the standard PCI Express interface in servers.

NVIDIA VGX GPU Hypervisor is a software layer that integrates into commercial hypervisors, such as the Citrix XenServer, enabling virtualization of the GPU.

NVIDIA User Selectable Machines (USMs) is a manageability option that allows enterprises to configure the graphics capabilities delivered to individual users in the network, based on their demands. Capabilities range from true PC experiences available with the NVIDIA standard USM to enhanced professional 3D design and engineering experiences with NVIDIA Quadro or NVIDIA NVS GPUs.

The NVIDIA VGX platform enables up to 100 users to be served from a single server powered by one VGX board, improving user density on a single server compared with traditional virtual desktop infrastructure (VDI) solutions.

NVIDIA GeForce GRID

NVIDIA GeForce GRID

The gaming implementation of Kepler cloud technologies, NVIDIA GeForce GRID, powers cloud gaming services. NVIDIA will offer the platform to gaming-as-a-service providers, who will be able to use it to remotely deliver gaming experiences, "with the potential to surpass those on a console," according to the company.

Gamers will be able to play the latest games on any connected device, including TVs, smartphones and tablets running iOS and Android.

"Gamers will now have access to seamlessly play the world's best titles anywhere, anytime, from phones, tablets, TVs or PCs," said Phil Eisler, general manager of cloud gaming at NVIDIA. "GeForce GRID represents a massive disruption in how games are delivered and played."

The key technologies powering the new platform are NVIDIA GeForce GRID GPUs with dedicated ultra-low-latency streaming technology and cloud graphics software. Gaming-as-a-service providers will be able to operate scalable data centers at costs that are in line with those of movie-streaming services.

Using the NVIDIA Kepler architecture, NVIDIA GeForce GRID GPUs minimize power consumption by simultaneously encoding up to eight game streams. This allows providers to scale their service offerings to support millions of concurrent gamers.

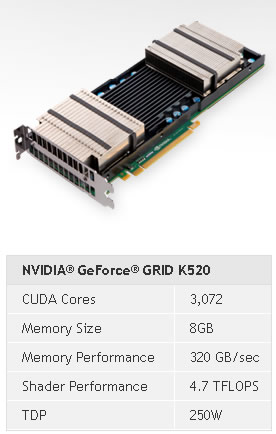

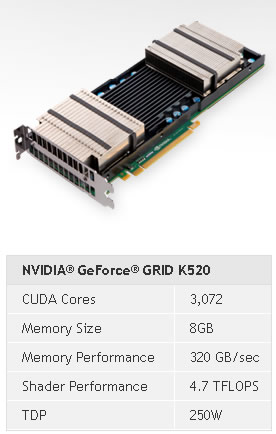

Featuring two Kepler architecture-based GPUs, each with its own encoder, the processors have 3,072 CUDA technology cores and 4.7 teraflops of 3D shader performance. This enables providers to render highly complex games in the cloud and encode them on the GPU, rather than the CPU, allowing their servers to simultaneously run more game streams.

Fast streaming technology reduces server latency to as little as 10 milliseconds by capturing and encoding a game frame in a single pass. The GeForce GRID platform uses fast-frame capture, concurrent rendering and single-pass encoding to achieve ultra-fast game streaming.

The latency-reducing technology in GeForce GRID GPUs compensates for the distance in the network, so "gamers will feel like they are playing on a gaming supercomputer located in the same room," NVIDIA claims.

Also at GTC, NVIDIA and Gaikai demonstrated a virtual game console, consisting of an LG Cinema 3D Smart TV running a Gaikai application connected to a GeForce GRID GPU in a server 10 miles away. Lag-free play was enabled on a highly complex PC game, with only an Ethernet cable and wireless USB game pad connected to the TV.

A number of gaming-as-a-service providers including Gaikai, Playcast Media System and Ubitus, announced their support of the GeForce GRID. EPIC Games, Capcom and THQ have also announced support for Nvidia's new technology.

New Tesla GPUs

New Tesla GPUs

NVIDIA also today unveiled a new family of Tesla GPUs based on the company's newest Kepler GPU computing architecture.

The new NVIDIA Tesla K10 and K20 GPUs are computing accelerators built to handle complex HPC problems. Nvidia says that Kepler is three times as efficient as its predecessor, the NVIDIA Fermi architecture.

NVIDIA developed a set of architectural technologies that make the Kepler GPUs high performing and highly energy efficient. Among the major technologies are:

- SMX Streaming Multiprocessor -- The basic building block of every GPU, the SMX streaming multiprocessor was redesigned from the ground up for high performance and energy efficiency. It delivers up to three times more performance per watt than the Fermi streaming multiprocessor, making it possible to build a supercomputer that delivers one petaflop of computing performance in just 10 server racks. SMX's energy efficiency was achieved by increasing its number of CUDA architecture cores by four times, while reducing the clock speed of each core, power-gating parts of the GPU when idle and maximizing the GPU area devoted to parallel-processing cores instead of control logic.

- Dynamic Parallelism -- This capability enables GPU threads to dynamically spawn new threads, allowing the GPU to adapt dynamically to the data. It simplifies parallel programming, enabling GPU acceleration of a broader set of popular algorithms, such as adaptive mesh refinement, fast multipole methods and multigrid methods.

- Hyper-Q -- This enables multiple CPU cores to simultaneously use the CUDA architecture cores on a single Kepler GPU. This increases GPU utilization, slashing CPU idle times and advancing programmability. Hyper-Q is ideal for cluster applications that use MPI.

Optimized for customers in oil and gas exploration and the defense industry, a single Tesla K10 accelerator board features two GK104 Kepler GPUs that deliver an aggregate performance of 4.58 teraflops of peak single-precision floating point and 320 GB per second memory bandwidth.

The NVIDIA Tesla K20 GPU is the new flagship of the Tesla GPU product family. The Tesla K20 is planned to be available in the fourth quarter of 2012.

The Tesla K20 is based on the GK110 Kepler GPU. This GPU delivers three times more double precision compared to Fermi architecture-based Tesla products and it supports the Hyper-Q and dynamic parallelism capabilities. The GK110 GPU is expected to be incorporated into the new Titan supercomputer at the Oak Ridge National Laboratory in Tennessee and the Blue Waters system at the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign.

In addition to the Kepler architecture, NVIDIA today released a preview of the CUDA 5 parallel programming platform. Available to the members of NVIDIA's GPU Computing Registered Developer program, the platform will enable developers to begin exploring ways to take advantage of the new Kepler GPUs, including dynamic parallelism.

The CUDA 5 parallel programming model is planned to be widely available in the third quarter of 2012.

NVIDIA's cloud GPU technologies are based on the company's new Kepler GPU architecture, designed for use in large-scale data centers. Its virtualization capabilities allow GPUs to be simultaneously shared by multiple users. NVIDIA claims that its ultra-fast streaming display capability eliminates lag, making a remote data center feel like it's just next door. And its extreme energy efficiency and processing density lowers data center costs.

"Kepler cloud GPU technologies shifts cloud computing into a new gear," said Jen-Hsun Huang, NVIDIA president and chief executive officer. "The GPU has become indispensable. It is central to the experience of gamers. It is vital to digital artists realizing their imagination. It is essential for touch devices to deliver silky smooth and beautiful graphics. And now, the cloud GPU will deliver amazing experiences to those who work remotely and gamers looking to play untethered from a PC or console."

NVIDIA VGX Platform

The enterprise implementation of Kepler cloud technologies, the NVIDIA VGX platform, accelerates virtualized desktops. The platform enables IT departments to deliver a virtualized desktop with the graphics and GPU computing performance of a PC or workstation to employees using any connected device.

With the NVIDIA VGX platform in the data center, employees can now access a true cloud PC from any device -- thin client, laptop, tablet or smartphone -- regardless of its operating system. NVIDIA claims that VGX enables knowledge workers for the first time to access a GPU-accelerated desktop similar to a traditional local PC.

Integrating the VGX platform into the corporate network also enables enterprise IT departments to address the complex challenges of "BYOD" -- employees bringing their own computing device to work. It delivers a remote desktop to these devices, providing users the same access they have on their desktop terminal.

NVIDIA VGX is based on NVIDIA VGX Boards, NVIDIA VGX GPU Hypervisor and NVIDIA User Selectable Machines (USMs).

NVIDIA VGX Boards are designed for hosting large numbers of users in an energy-efficient way. The first NVIDA VGX board is configured with four GPUs and 16 GB of memory, and fits into the standard PCI Express interface in servers.

NVIDIA VGX GPU Hypervisor is a software layer that integrates into commercial hypervisors, such as the Citrix XenServer, enabling virtualization of the GPU.

NVIDIA User Selectable Machines (USMs) is a manageability option that allows enterprises to configure the graphics capabilities delivered to individual users in the network, based on their demands. Capabilities range from true PC experiences available with the NVIDIA standard USM to enhanced professional 3D design and engineering experiences with NVIDIA Quadro or NVIDIA NVS GPUs.

The NVIDIA VGX platform enables up to 100 users to be served from a single server powered by one VGX board, improving user density on a single server compared with traditional virtual desktop infrastructure (VDI) solutions.

NVIDIA GeForce GRID

NVIDIA GeForce GRID

The gaming implementation of Kepler cloud technologies, NVIDIA GeForce GRID, powers cloud gaming services. NVIDIA will offer the platform to gaming-as-a-service providers, who will be able to use it to remotely deliver gaming experiences, "with the potential to surpass those on a console," according to the company.

Gamers will be able to play the latest games on any connected device, including TVs, smartphones and tablets running iOS and Android.

"Gamers will now have access to seamlessly play the world's best titles anywhere, anytime, from phones, tablets, TVs or PCs," said Phil Eisler, general manager of cloud gaming at NVIDIA. "GeForce GRID represents a massive disruption in how games are delivered and played."

The key technologies powering the new platform are NVIDIA GeForce GRID GPUs with dedicated ultra-low-latency streaming technology and cloud graphics software. Gaming-as-a-service providers will be able to operate scalable data centers at costs that are in line with those of movie-streaming services.

Using the NVIDIA Kepler architecture, NVIDIA GeForce GRID GPUs minimize power consumption by simultaneously encoding up to eight game streams. This allows providers to scale their service offerings to support millions of concurrent gamers.

Featuring two Kepler architecture-based GPUs, each with its own encoder, the processors have 3,072 CUDA technology cores and 4.7 teraflops of 3D shader performance. This enables providers to render highly complex games in the cloud and encode them on the GPU, rather than the CPU, allowing their servers to simultaneously run more game streams.

Fast streaming technology reduces server latency to as little as 10 milliseconds by capturing and encoding a game frame in a single pass. The GeForce GRID platform uses fast-frame capture, concurrent rendering and single-pass encoding to achieve ultra-fast game streaming.

The latency-reducing technology in GeForce GRID GPUs compensates for the distance in the network, so "gamers will feel like they are playing on a gaming supercomputer located in the same room," NVIDIA claims.

Also at GTC, NVIDIA and Gaikai demonstrated a virtual game console, consisting of an LG Cinema 3D Smart TV running a Gaikai application connected to a GeForce GRID GPU in a server 10 miles away. Lag-free play was enabled on a highly complex PC game, with only an Ethernet cable and wireless USB game pad connected to the TV.

A number of gaming-as-a-service providers including Gaikai, Playcast Media System and Ubitus, announced their support of the GeForce GRID. EPIC Games, Capcom and THQ have also announced support for Nvidia's new technology.

New Tesla GPUs

New Tesla GPUsNVIDIA also today unveiled a new family of Tesla GPUs based on the company's newest Kepler GPU computing architecture.

The new NVIDIA Tesla K10 and K20 GPUs are computing accelerators built to handle complex HPC problems. Nvidia says that Kepler is three times as efficient as its predecessor, the NVIDIA Fermi architecture.

NVIDIA developed a set of architectural technologies that make the Kepler GPUs high performing and highly energy efficient. Among the major technologies are:

- SMX Streaming Multiprocessor -- The basic building block of every GPU, the SMX streaming multiprocessor was redesigned from the ground up for high performance and energy efficiency. It delivers up to three times more performance per watt than the Fermi streaming multiprocessor, making it possible to build a supercomputer that delivers one petaflop of computing performance in just 10 server racks. SMX's energy efficiency was achieved by increasing its number of CUDA architecture cores by four times, while reducing the clock speed of each core, power-gating parts of the GPU when idle and maximizing the GPU area devoted to parallel-processing cores instead of control logic.

- Dynamic Parallelism -- This capability enables GPU threads to dynamically spawn new threads, allowing the GPU to adapt dynamically to the data. It simplifies parallel programming, enabling GPU acceleration of a broader set of popular algorithms, such as adaptive mesh refinement, fast multipole methods and multigrid methods.

- Hyper-Q -- This enables multiple CPU cores to simultaneously use the CUDA architecture cores on a single Kepler GPU. This increases GPU utilization, slashing CPU idle times and advancing programmability. Hyper-Q is ideal for cluster applications that use MPI.

Optimized for customers in oil and gas exploration and the defense industry, a single Tesla K10 accelerator board features two GK104 Kepler GPUs that deliver an aggregate performance of 4.58 teraflops of peak single-precision floating point and 320 GB per second memory bandwidth.

The NVIDIA Tesla K20 GPU is the new flagship of the Tesla GPU product family. The Tesla K20 is planned to be available in the fourth quarter of 2012.

The Tesla K20 is based on the GK110 Kepler GPU. This GPU delivers three times more double precision compared to Fermi architecture-based Tesla products and it supports the Hyper-Q and dynamic parallelism capabilities. The GK110 GPU is expected to be incorporated into the new Titan supercomputer at the Oak Ridge National Laboratory in Tennessee and the Blue Waters system at the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign.

In addition to the Kepler architecture, NVIDIA today released a preview of the CUDA 5 parallel programming platform. Available to the members of NVIDIA's GPU Computing Registered Developer program, the platform will enable developers to begin exploring ways to take advantage of the new Kepler GPUs, including dynamic parallelism.

The CUDA 5 parallel programming model is planned to be widely available in the third quarter of 2012.