Simultaneous Localization and Mapping Could Change The World Around Us

Despite all the recent exciting developments in Augmented Reality (AR), we are still only scratching the surface of its true potential: the technology could transform the daily lives of users and the operations of businesses.

One new and exciting part of AR technology is SLAM (Simultaneous Localization and Mapping). SLAM is a set of complex computations and algorithms that use sensor data to build a map of an unknown environment while localizing the device's position and orientation within it. Cameras and Inertial Measurement Units (IMUs) are available in 80 percent of smartphones globally, but it is mainly higher-end smartphones that can actually run SLAM today.

From a technical standpoint, SLAM is essentially a procedure that estimates the position and orientation of a device and builds a map of the environment using a camera feed and IMU (accelerometer and gyroscope) readings. Being able to accurately determine the location of a device in an unknown environment opens up many opportunities for developers to create new use cases and applications.

So how does SLAM determine location on today's smartphones? The smartphone builds up information about the environment by gathering data from its IMU sensors and processing the video feed from its monocular camera.

IMUs provide information about the actual movements of the smartphone, but unfortunately not accurately enough to rely on their readings alone. This limitation is overcome by combining them with the camera feed, which provides valuable information about the environment. The algorithm detects "2D feature points" within the current camera frame and then attempts to correlate them with the points found in the previous frame. The points are likely to be found in different positions from frame to frame, and this is the essence of the algorithm. Based on these relative positions and the IMU readings, the algorithm can work out the 3D position and orientation of the device to within the centimeter range.
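The frame-to-frame correlation step can be illustrated with a minimal sketch. It assumes each feature point carries a small descriptor vector and pairs points by nearest descriptor with a ratio test, which is one common approach and not necessarily what any particular SLAM system uses. The names `match_features` and `mean_displacement` and the data layout are hypothetical.

```python
from math import dist

def match_features(prev, curr, ratio=0.75):
    """Match feature points between two frames by descriptor similarity.

    prev, curr: lists of (xy, descriptor) tuples (hypothetical format),
    where xy is a 2D pixel position and descriptor is a tuple of floats.
    Returns a list of (prev_xy, curr_xy) pairs.
    """
    matches = []
    for p_xy, p_desc in prev:
        # Rank candidates in the current frame by descriptor distance.
        ranked = sorted(curr, key=lambda c: dist(p_desc, c[1]))
        if not ranked:
            continue
        best = ranked[0]
        # Ratio test: keep the match only if it is clearly better than
        # the runner-up, which filters out ambiguous correspondences.
        if len(ranked) > 1:
            if dist(p_desc, best[1]) >= ratio * dist(p_desc, ranked[1][1]):
                continue
        matches.append((p_xy, best[0]))
    return matches

def mean_displacement(matches):
    """Average pixel shift of the matched points between the two frames."""
    if not matches:
        return 0.0, 0.0
    n = len(matches)
    dx = sum(c[0] - p[0] for p, c in matches) / n
    dy = sum(c[1] - p[1] for p, c in matches) / n
    return dx, dy

# Two made-up frames: the same two points, shifted by the camera motion.
prev_frame = [((100.0, 50.0), (0.1, 0.9, 0.3)),
              ((200.0, 80.0), (0.8, 0.2, 0.5))]
curr_frame = [((196.0, 82.0), (0.79, 0.21, 0.5)),
              ((96.0, 52.0), (0.11, 0.88, 0.31))]

matches = match_features(prev_frame, curr_frame)
shift = mean_displacement(matches)
print(matches, shift)
```

The per-point shifts are the raw signal a SLAM pipeline works from. Turning them into a full 3D pose requires further geometry that this sketch leaves out.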

IMUs work at a higher frequency than the camera, which helps to compensate for sudden camera movements that would otherwise produce blurry images. The magic of SLAM is that by combining IMU readings with the camera feed, it achieves higher accuracy than either the camera or the IMUs could achieve separately.
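To make the benefit of combining the two sources concrete, here is a deliberately simplified sketch that tracks a single yaw angle. It uses a complementary filter as a stand-in for the fusion step; real SLAM systems typically use more sophisticated estimators. The function name `fuse_yaw`, the sample rates and the gyro bias value are all assumptions chosen for illustration.

```python
def fuse_yaw(gyro_rates, dt, camera_yaws, camera_every, alpha=0.9):
    """Track a yaw angle (radians) from gyro rates and sparse camera fixes.

    gyro_rates: angular velocity samples in rad/s, one per IMU tick.
    dt: time between IMU ticks in seconds.
    camera_yaws: yaw estimates from the vision side, one per camera frame.
    camera_every: number of IMU ticks per camera frame.
    alpha: weight kept on the gyro prediction when a camera fix arrives.
    """
    yaw = 0.0
    history = []
    for i, rate in enumerate(gyro_rates, start=1):
        # Predict: integrate the gyro at the high IMU rate.
        yaw += rate * dt
        # Correct: blend in the camera estimate whenever a frame arrives.
        if i % camera_every == 0:
            frame = i // camera_every - 1
            if frame < len(camera_yaws):
                yaw = alpha * yaw + (1.0 - alpha) * camera_yaws[frame]
        history.append(yaw)
    return history

# Simulated 10 seconds: the device turns at 0.1 rad/s, the gyro has a
# constant bias, and the camera delivers one yaw estimate per 10 IMU ticks.
dt = 0.01
true_rate, bias = 0.1, 0.02
ticks = 1000
gyro = [true_rate + bias] * ticks
camera = [true_rate * dt * 10 * k for k in range(1, 101)]

fused = fuse_yaw(gyro, dt, camera, camera_every=10)
gyro_only = sum(r * dt for r in gyro)
true_yaw = true_rate * dt * ticks
print(f"true={true_yaw:.3f} gyro_only={gyro_only:.3f} fused={fused[-1]:.3f}")
```

In this simulation the gyro-only estimate drifts steadily because of the bias, while the fused estimate stays close to the true angle and still updates at the IMU rate between camera frames.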

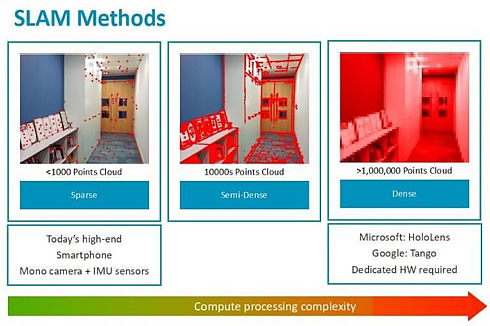

As a by-product, the SLAM algorithmic pipeline also provides the 3D positions of the 2D feature points that the system has been tracking. This set of 3D points is known as a "point cloud". Because it contains only the tracked feature points, it is a "sparse point cloud", which we can then use to extract planes from the environment. Obviously, the devil is in the detail and the reality is more complicated, but this is, in essence, how "Sparse SLAM" works.
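As an illustration of how a plane might be extracted from a sparse point cloud, the sketch below uses RANSAC: it repeatedly fits a plane through three random points and keeps the plane that most points agree with. RANSAC is a widely used technique for this kind of task, but the article does not say which method any given SLAM implementation uses, so treat this as one possible approach. The function names, the threshold and the sample data are hypothetical.

```python
import random

def plane_from_points(a, b, c):
    """Return (unit_normal, d) for the plane through three points, or None.

    The plane satisfies n.x*x + n.y*y + n.z*z + d = 0.
    """
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    # The cross product of two edge vectors gives the plane normal.
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = sum(x * x for x in n) ** 0.5
    if length < 1e-9:
        return None  # the three points are (nearly) collinear
    n = [x / length for x in n]
    d = -sum(n[i] * a[i] for i in range(3))
    return n, d

def fit_plane_ransac(points, threshold=0.02, iterations=200, seed=0):
    """Find the plane supported by the most points within `threshold`."""
    rng = random.Random(seed)  # fixed seed keeps the sketch repeatable
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [p for p in points
                   if abs(n[0] * p[0] + n[1] * p[1] + n[2] * p[2] + d) < threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers

# Made-up cloud: 25 points on a floor at z = 0 plus 5 points above it.
floor = [(x * 0.25, y * 0.25, 0.0) for x in range(5) for y in range(5)]
clutter = [(0.2, 0.3, 0.5), (0.7, 0.1, 0.9), (0.4, 0.8, 0.3),
           (0.9, 0.9, 0.7), (0.1, 0.6, 1.2)]
cloud = floor + clutter

(normal, offset), inliers = fit_plane_ransac(cloud)
print(normal, offset, len(inliers))
```

With this synthetic data the floor points dominate, so the recovered normal points along the z axis and the clutter points are left out as outliers.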

With an accurate position and orientation of the device provided by SLAM, and with known camera parameters, it is then possible to render 3D virtual objects on top of the camera feed as if they were there. This allows the real environment to be enhanced with virtual objects and features. This could provide a number of handy use cases for retail. For example, consumers could use SLAM to judge whether a wooden pot would be a good purchase by viewing a virtual simulation of it.
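The core of placing a virtual object over the camera feed is projecting its 3D position into pixel coordinates. The sketch below assumes a simple pinhole camera model without lens distortion, and assumes the pose is supplied as a world-to-camera rotation and translation (a device pose reported by a SLAM system may need inverting first). The function name and the intrinsic values `fx`, `fy`, `cx`, `cy` are illustrative and do not come from any specific device.

```python
def project_point(point_world, rotation, translation, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates with a pinhole model.

    rotation (3x3 nested lists) and translation map world coordinates
    into the camera frame: p_cam = R * p_world + t.
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    Returns (u, v), or None if the point is behind the camera.
    """
    p_cam = [
        sum(rotation[r][c] * point_world[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    x, y, z = p_cam
    if z <= 0:
        return None  # not visible: behind the image plane
    # Perspective divide, then shift to the image origin.
    return fx * x / z + cx, fy * y / z + cy

# Camera at the world origin looking down +z (identity pose).
identity = [[1.0, 0.0, 0.0],
            [0.0, 1.0, 0.0],
            [0.0, 0.0, 1.0]]
no_shift = [0.0, 0.0, 0.0]

# A virtual object anchored 2 m in front of the camera, slightly off-center.
pixel = project_point((0.5, -0.25, 2.0), identity, no_shift,
                      fx=800.0, fy=800.0, cx=320.0, cy=240.0)
print(pixel)  # (520.0, 140.0)
```

As the device moves, SLAM updates the rotation and translation, and re-running the projection each frame keeps the virtual object pinned to the same spot in the real scene.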

The features described above are all part of "Sparse SLAM", but looking to the future it is likely that mapping will evolve towards "Dense SLAM", with the simple planes giving way to more accurate geometrical representations of the real world.

Looking even further ahead, the ultimate goal for SLAM is to render a virtual object in the real environment so convincingly that no one can tell what is real and what is virtual. This won't happen overnight, but it is likely to be the long-term goal for those working in the AR and VR industries.

Both Sparse and Dense SLAM are technically possible today, but Sparse SLAM is far more common. Sparse SLAM currently runs on today's high-end smartphones, based on monocular RGB cameras and IMU sensors, whereas Dense SLAM is found in more dedicated AR devices such as HoloLens, which has four cameras for tracking user positions, a Time of Flight sensor and IMU sensors.

A big focus for SLAM will be mobile devices. However, the technology is not just for mobile: it will extend across a number of different devices such as cars, robots, smartphones, VR headsets and laptops. All these devices provide different combinations of the inputs needed for SLAM, so there is likely to be a significant number of different SLAM solutions in the future.

"Indeed, SLAM is likely to play a pivotal role in a number of exciting use cases for businesses and consumers, such as navigation, advertising and gaming," said ARM's Sylwester Bala, who will present more about SLAM in his Techtalk at Arm TechCon on Thursday 18th October.