Microsoft Research Showcases Natural User Interfaces

TechFest 2011, Microsoft Research's annual showcase of forward-looking computer-science technology, will feature several projects that show how the move toward NUIs is progressing.

Not all the TechFest projects are NUI-related. Microsoft Research investigates the possibilities in many computer-science areas. But quite a few of the demos to be shown do shine a light on natural user interfaces, and each points to a new way to see or interact with the world.

One demo shows how patients' medical images can be interpreted automatically, enhancing considerably the efficiency of a physician's work. One literally creates a new world - instantly converting real objects into digital 3-D objects that can be manipulated by a real human hand. A third acts as a virtual drawing coach to would-be artists. And yet another enables a simple digital stylus to understand whether a person wants to draw with it, paint with it, or, perhaps, even play it like a saxophone.

Semantic Understanding of Medical Images

Semantic Understanding of Medical Images

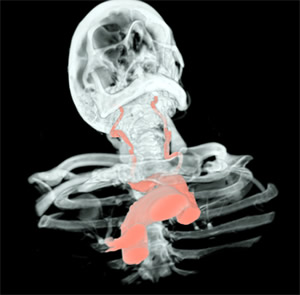

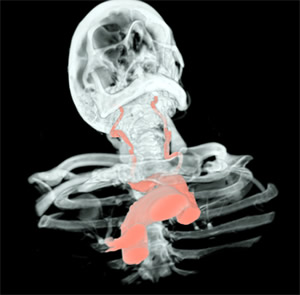

To help make medical images easier to read and analyze, a team from Microsoft Research Cambridge has created InnerEye, a research project that uses the latest machine-learning techniques to speed image interpretation and improve diagnostic accuracy. InnerEye also has implications for improved treatments, such as enabling radiation oncologists to target treatment to tumors more precisely in sensitive areas such as the brain.

In the case of radiation therapy, it can take hours for a radiation oncologist to outline the edge of tumors and healthy organs to be protected. InnerEye greatly reduces the time needed to delineate accurately the boundaries of anatomical structures of interest in 3-D.

To use InnerEye, a radiologist or clinician uses a computer pointer on a screen image of a medical scan to highlight a part of the body that requires treatment. InnerEye then employs algorithms to accurately define the 3-D surface of the selected organ. In the resulting image, the highlighted organ - a kidney, for instance, or even a complete aorta - seems to almost leap from the rest of the image. The organ delineation offers a quick way of assessing things such as organ volume, tissue density, and other information that aids diagnosis.

InnerEye also enables extremely fast visual navigation and inspection of 3-D images. A physician can navigate to an optimized view of the heart simply by clicking on the word "heart," because the system already knows where each organ is. This yields considerable time savings, with big economic implications.

The InnerEye project team also is investigating the use of Kinect in the operating theater. Surgeons often wish to view a patient's previously acquired CT or MR scans, but touching a mouse or keyboard could introduce germs. The InnerEye technology and Kinect help by automatically interpreting the surgeon's hand gestures. This enables the surgeon to navigate naturally through the patient's images.

Blurring the Line Between the Real and the Virtual

Breaking down the barrier between the real world and the virtual world is a staple of science fiction? Avatar and The Matrix are but two recent examples. But technology is coming closer to actually blurring the line.

Microsoft researchers have taken a step toward making the virtual real with a project called MirageBlocks. Its aim is to simplify the process of digitally capturing images of everyday objects and to convert them instantaneously to 3-D images. The goal is to create a virtual mirror of the physical world, one so readily understood that a MirageBlocks user could take an image of a brick and use it to create a virtual castle - brick by brick.

Capturing and visualizing objects in 3-D long has fascinated scientists, but new technology makes it more feasible. In particular, Kinect for Xbox 360 gave researchers an easy-to-use, $150 gadget that easily could capture the depth of an object with its multicamera design. Coupled with new-generation 3-D projectors and 3-D glasses, Kinect helps make MirageBlocks perhaps the most advanced tool ever for capturing and manipulating 3-D imagery.

The MirageBlocks environment consists of a Kinect device, an Acer H5360 3-D projector, and Nvidia 3D Vision glasses synchronized to the projector's frame rate. The Kinect captures the object image and tracks the user?s head position so that the virtual image is shown to the user with the correct perspective.

Users enter MirageBlocks' virtual world by placing an object on a table top, where it is captured by the Kinect's cameras. The object is instantly digitized and projected back into the workspace as a 3-D virtual image. The user then can move or rotate the virtual object using an actual hand or a numbered keypad. A user can take duplicate objects, or different objects, to construct a virtual 3-D model. To the user, the virtual objects have the same depth and size as their physical counterparts.

MirageBlocks has several real-world applications. It could apply an entirely new dimension to simulation games, enabling game players to create custom models or devices from a few digitized pieces or to digitize any object and place it in a virtual game. MirageBlocks? technology could change online shopping, enabling the projection of 3-D representations of an object. It could transform teleconferencing, enabling participants to examine and manipulate 3-D representations of products or prototypes. It might even be useful in health care?an emergency-room physician, for instance, could use a 3-D image of a limb with a broken bone to correctly align the break.

Giving the Artistically Challenged a Helping Hand

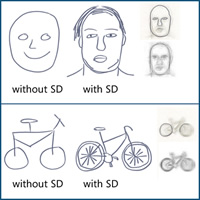

It?s fair to say that most people cannot draw well. But what if a computer could help by suggesting to the would-be artist certain lines to follow or shapes to create? That's the idea behind ShadowDraw.

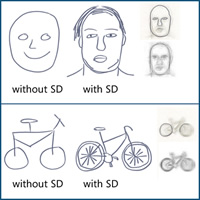

In concept, ShadowDraw seems disarmingly simple. A user begins drawing an object?a bicycle, for instance, or a face?using a stylus-based Cintiq 21UX tablet. As the drawing progresses, ShadowDraw surmises the subject of the emerging drawing and begins to suggest refinements by generating a "shadow" behind the would-be artist's lines that resembles the drawn object. By taking advantage of ShadowDraw's suggestions, the user can create a more refined drawing than otherwise possible, while retaining the individuality of their pencil strokes and overall technique.

The seeming simplicity of ShadowDraw, though, belies the substantial computing power being harnessed behind the screen. ShadowDraw is, at its heart, a database of 30,000 images culled from the Internet and other public sources. Edges are extracted from these original photographic images to provide stroke suggestions to the user.

The main component created by the Microsoft Research team is an interactive drawing system that reacts to the user's pencil work in real time. ShadowDraw uses a novel, partial-matching approach that finds possible matches between different sub-sections of the user's drawing and the database of edge images. Think of ShadowDraw's behind-the-screen interface as a checkerboard - each square where a user draws a line will generate its own set of possible matches that cumulatively vote on suggestions to help refine a user's work. The researchers also created a novel method for spatially blending the various stroke suggestions for the drawing.

To test ShadowDraw, researchers enlisted eight men and eight women. Each was asked to draw five subjects - a shoe, a bicycle, a butterfly, a face, and a rabbit -with and without ShadowDraw. The rabbit image was a control - there were no rabbits in the database. When using ShadowDraw, the subjects were told they could use the suggested renderings or ignore them. And each subject was given 30 minutes to complete 10 drawings.

To test ShadowDraw, researchers enlisted eight men and eight women. Each was asked to draw five subjects - a shoe, a bicycle, a butterfly, a face, and a rabbit -with and without ShadowDraw. The rabbit image was a control - there were no rabbits in the database. When using ShadowDraw, the subjects were told they could use the suggested renderings or ignore them. And each subject was given 30 minutes to complete 10 drawings.

A panel of eight additional subjects judged the drawings on a scale of one to five, with one representing "poor" and five "good." The panelists found that ShadowDraw was of significant help to people with average drawing skills?their drawings were significantly improved by ShadowDraw. Interestingly, the subjects rated as having poor or good drawing skills, pre-ShadowDraw, saw little improvement. Researchers said the poor artists were so bad that ShadowDraw couldn?t even guess what they were attempting to draw. The good artists already had sufficient skills to draw the test objects accurately.

Enabling One Pen to Simulate Many

Human beings have developed dozens of ways to render images on a piece of paper, a canvas, or another drawing surface. Pens, pencils, paintbrushes, crayons, and more?all can be used to create images or the written word.

Each, however, is held in a slightly different way. That can seem natural when using the device itself - people learn to manage a paintbrush in a way different from how they use a pen or a pencil. But those differences can present a challenge when attempting to work with a computer. A single digital stylus or pen can serve many functions, but to do so typically requires the user to hold the stylus in the same manner, regardless of the tool the stylus is mimicking.

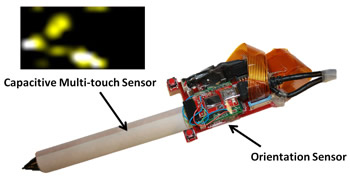

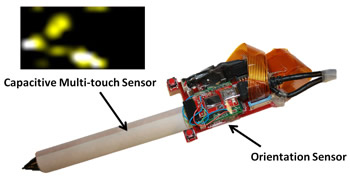

A Microsoft Research team aimed to find a better way to design a computer stylus. The researchers asked the question: How can a digital pen or stylus be as natural to use as the varied physical tools people employ? The solution, to be shown as part of a demo called Recognizing Pen Grips for Natural UI: A digital pen enhanced with a capacitive, multitouch sensor that knows where the user's hand touches the pen and an orientation sensor that knows at what angle the pen is held.

With that information, the digital pen can recognize different grips and automatically behave like the desired tool. If a user holds the digital pen like a paintbrush, the pen automatically behaves like a paintbrush. Hold it like a pen, it behaves like a pen?with no need to manually turn a switch on the device or choose a different stylus mode.

The implications of the technology are considerable. Musical instruments such as flutes or saxophones and many other objects all build on similar shapes. A digital stylus with grip and orientation sensors conceivably could duplicate all, while enabling the user to hold the stylus in the manner that is most natural. Even game controllers could be adapted to modify their behavior depending on how they are held, whether as a driving device for auto-based games or as a weapon in games such as Halo.

Avatars Get Real

Avatars can serve as a visual representation of someone's presence in a gaming scenario. They can play a similar role on social networks. They even can act as virtual representations of ourselves in telephony applications.

What they can?t do is look like us. But a project from Microsoft Research Asia aims to change all that.

The 3-D, Photo-Real Talking Head is an ingenious attempt to bring an air of realism to our avatar representations. Lijuan Wang, a postdoctoral researcher at that facility, explains.

"The 3-D, Photo-Real Talking Head project is about creating a 3-D, photorealistic avatar that looks just like you," Wang says. "We only need a short, 2-D video clip of you, talking to a webcam or a Kinect sensor. In this 2-D video, we need to capture all your mouth movements, all your facial animations, eye blinking, eyebrow movement?all those raw materials should be in the training data. Once we get the training data, we'll use that to build a 3-D, photorealistic avatar for you."

"The 3-D, Photo-Real Talking Head project is about creating a 3-D, photorealistic avatar that looks just like you," Wang says. "We only need a short, 2-D video clip of you, talking to a webcam or a Kinect sensor. In this 2-D video, we need to capture all your mouth movements, all your facial animations, eye blinking, eyebrow movement?all those raw materials should be in the training data. Once we get the training data, we'll use that to build a 3-D, photorealistic avatar for you."

The technology invoked combines 3-D modeling and the 2-D video, and, in so doing, creates a realistic avatar with natural head motion and facial expressions. It?s not a simple matter.

"A cartoon avatar is relatively easy," Wang says, "but a more humanlike and realistic avatar is very challenging. People may see some realistic avatars in games or movies, but it is very expensive to do that. They may need to use an expensive, motion-capture system to capture a real person?s motion, or they may need to have an artist to work frame by frame. We are looking for an easier way for everyone to automatically create an avatar for themselves."

The project uses a simple 3-D head model for rotation and the translation, with all the facial animation rendered by a 2-D imaging sequence, which is synthesized atop the 3-D model.

"It?s just like our experience when we watch a movie," Wang says. "A 2-D imaging sequence is projected to a 2-D flat screen. Here, we project a 2-D face image to a 3-D head model. When your mouth opens and closes in the 2-D video, its projection on the 3-D model also opens and closes, and since it?s 3-D, it can be viewed from different directions. All the 2-D images are photorealistic, and the output 3-D is a photorealistic talking head?or avatar. It?s very natural and acceptable to human eyes. It looks natural, and it moves in natural ways."

For 3-D, photorealistic avatars, the most difficult part is soft tissues on the face: the eyes, lips, tongue, teeth, wrinkles on the face, all of which require highly accurate geometry modeling. Building such models--and running them on a PC--are complex chores, but that work represents much of what Wang and her colleagues have achieved.

There are, Wang notes, many potential applications for this technology.

"It can be used in telepresence," she says. "You can create an immersive virtual experience that has multiple people appearing together in the same space when they physically cannot.

"Another potential use is in gaming. It also can be used in social networking, and there are a lot of consumer-based applications, like online shopping. You could use your model to try out makeup and try on clothes."

One demo shows how patients' medical images can be interpreted automatically, enhancing considerably the efficiency of a physician's work. One literally creates a new world - instantly converting real objects into digital 3-D objects that can be manipulated by a real human hand. A third acts as a virtual drawing coach to would-be artists. And yet another enables a simple digital stylus to understand whether a person wants to draw with it, paint with it, or, perhaps, even play it like a saxophone.

Semantic Understanding of Medical Images

Semantic Understanding of Medical ImagesTo help make medical images easier to read and analyze, a team from Microsoft Research Cambridge has created InnerEye, a research project that uses the latest machine-learning techniques to speed image interpretation and improve diagnostic accuracy. InnerEye also has implications for improved treatments, such as enabling radiation oncologists to target treatment to tumors more precisely in sensitive areas such as the brain.

In the case of radiation therapy, it can take hours for a radiation oncologist to outline the edge of tumors and healthy organs to be protected. InnerEye greatly reduces the time needed to delineate accurately the boundaries of anatomical structures of interest in 3-D.

To use InnerEye, a radiologist or clinician uses a computer pointer on a screen image of a medical scan to highlight a part of the body that requires treatment. InnerEye then employs algorithms to accurately define the 3-D surface of the selected organ. In the resulting image, the highlighted organ - a kidney, for instance, or even a complete aorta - seems to almost leap from the rest of the image. The organ delineation offers a quick way of assessing things such as organ volume, tissue density, and other information that aids diagnosis.

InnerEye also enables extremely fast visual navigation and inspection of 3-D images. A physician can navigate to an optimized view of the heart simply by clicking on the word "heart," because the system already knows where each organ is. This yields considerable time savings, with big economic implications.

The InnerEye project team also is investigating the use of Kinect in the operating theater. Surgeons often wish to view a patient's previously acquired CT or MR scans, but touching a mouse or keyboard could introduce germs. The InnerEye technology and Kinect help by automatically interpreting the surgeon's hand gestures. This enables the surgeon to navigate naturally through the patient's images.

Blurring the Line Between the Real and the Virtual

Breaking down the barrier between the real world and the virtual world is a staple of science fiction? Avatar and The Matrix are but two recent examples. But technology is coming closer to actually blurring the line.

Microsoft researchers have taken a step toward making the virtual real with a project called MirageBlocks. Its aim is to simplify the process of digitally capturing images of everyday objects and to convert them instantaneously to 3-D images. The goal is to create a virtual mirror of the physical world, one so readily understood that a MirageBlocks user could take an image of a brick and use it to create a virtual castle - brick by brick.

Capturing and visualizing objects in 3-D long has fascinated scientists, but new technology makes it more feasible. In particular, Kinect for Xbox 360 gave researchers an easy-to-use, $150 gadget that easily could capture the depth of an object with its multicamera design. Coupled with new-generation 3-D projectors and 3-D glasses, Kinect helps make MirageBlocks perhaps the most advanced tool ever for capturing and manipulating 3-D imagery.

The MirageBlocks environment consists of a Kinect device, an Acer H5360 3-D projector, and Nvidia 3D Vision glasses synchronized to the projector's frame rate. The Kinect captures the object image and tracks the user?s head position so that the virtual image is shown to the user with the correct perspective.

Users enter MirageBlocks' virtual world by placing an object on a table top, where it is captured by the Kinect's cameras. The object is instantly digitized and projected back into the workspace as a 3-D virtual image. The user then can move or rotate the virtual object using an actual hand or a numbered keypad. A user can take duplicate objects, or different objects, to construct a virtual 3-D model. To the user, the virtual objects have the same depth and size as their physical counterparts.

MirageBlocks has several real-world applications. It could apply an entirely new dimension to simulation games, enabling game players to create custom models or devices from a few digitized pieces or to digitize any object and place it in a virtual game. MirageBlocks? technology could change online shopping, enabling the projection of 3-D representations of an object. It could transform teleconferencing, enabling participants to examine and manipulate 3-D representations of products or prototypes. It might even be useful in health care?an emergency-room physician, for instance, could use a 3-D image of a limb with a broken bone to correctly align the break.

Giving the Artistically Challenged a Helping Hand

It?s fair to say that most people cannot draw well. But what if a computer could help by suggesting to the would-be artist certain lines to follow or shapes to create? That's the idea behind ShadowDraw.

In concept, ShadowDraw seems disarmingly simple. A user begins drawing an object?a bicycle, for instance, or a face?using a stylus-based Cintiq 21UX tablet. As the drawing progresses, ShadowDraw surmises the subject of the emerging drawing and begins to suggest refinements by generating a "shadow" behind the would-be artist's lines that resembles the drawn object. By taking advantage of ShadowDraw's suggestions, the user can create a more refined drawing than otherwise possible, while retaining the individuality of their pencil strokes and overall technique.

The seeming simplicity of ShadowDraw, though, belies the substantial computing power being harnessed behind the screen. ShadowDraw is, at its heart, a database of 30,000 images culled from the Internet and other public sources. Edges are extracted from these original photographic images to provide stroke suggestions to the user.

The main component created by the Microsoft Research team is an interactive drawing system that reacts to the user's pencil work in real time. ShadowDraw uses a novel, partial-matching approach that finds possible matches between different sub-sections of the user's drawing and the database of edge images. Think of ShadowDraw's behind-the-screen interface as a checkerboard - each square where a user draws a line will generate its own set of possible matches that cumulatively vote on suggestions to help refine a user's work. The researchers also created a novel method for spatially blending the various stroke suggestions for the drawing.

To test ShadowDraw, researchers enlisted eight men and eight women. Each was asked to draw five subjects - a shoe, a bicycle, a butterfly, a face, and a rabbit -with and without ShadowDraw. The rabbit image was a control - there were no rabbits in the database. When using ShadowDraw, the subjects were told they could use the suggested renderings or ignore them. And each subject was given 30 minutes to complete 10 drawings.

To test ShadowDraw, researchers enlisted eight men and eight women. Each was asked to draw five subjects - a shoe, a bicycle, a butterfly, a face, and a rabbit -with and without ShadowDraw. The rabbit image was a control - there were no rabbits in the database. When using ShadowDraw, the subjects were told they could use the suggested renderings or ignore them. And each subject was given 30 minutes to complete 10 drawings.

A panel of eight additional subjects judged the drawings on a scale of one to five, with one representing "poor" and five "good." The panelists found that ShadowDraw was of significant help to people with average drawing skills?their drawings were significantly improved by ShadowDraw. Interestingly, the subjects rated as having poor or good drawing skills, pre-ShadowDraw, saw little improvement. Researchers said the poor artists were so bad that ShadowDraw couldn?t even guess what they were attempting to draw. The good artists already had sufficient skills to draw the test objects accurately.

Enabling One Pen to Simulate Many

Human beings have developed dozens of ways to render images on a piece of paper, a canvas, or another drawing surface. Pens, pencils, paintbrushes, crayons, and more?all can be used to create images or the written word.

Each, however, is held in a slightly different way. That can seem natural when using the device itself - people learn to manage a paintbrush in a way different from how they use a pen or a pencil. But those differences can present a challenge when attempting to work with a computer. A single digital stylus or pen can serve many functions, but to do so typically requires the user to hold the stylus in the same manner, regardless of the tool the stylus is mimicking.

A Microsoft Research team aimed to find a better way to design a computer stylus. The researchers asked the question: How can a digital pen or stylus be as natural to use as the varied physical tools people employ? The solution, to be shown as part of a demo called Recognizing Pen Grips for Natural UI: A digital pen enhanced with a capacitive, multitouch sensor that knows where the user's hand touches the pen and an orientation sensor that knows at what angle the pen is held.

With that information, the digital pen can recognize different grips and automatically behave like the desired tool. If a user holds the digital pen like a paintbrush, the pen automatically behaves like a paintbrush. Hold it like a pen, it behaves like a pen?with no need to manually turn a switch on the device or choose a different stylus mode.

The implications of the technology are considerable. Musical instruments such as flutes or saxophones and many other objects all build on similar shapes. A digital stylus with grip and orientation sensors conceivably could duplicate all, while enabling the user to hold the stylus in the manner that is most natural. Even game controllers could be adapted to modify their behavior depending on how they are held, whether as a driving device for auto-based games or as a weapon in games such as Halo.

Avatars Get Real

Avatars can serve as a visual representation of someone's presence in a gaming scenario. They can play a similar role on social networks. They even can act as virtual representations of ourselves in telephony applications.

What they can?t do is look like us. But a project from Microsoft Research Asia aims to change all that.

The 3-D, Photo-Real Talking Head is an ingenious attempt to bring an air of realism to our avatar representations. Lijuan Wang, a postdoctoral researcher at that facility, explains.

"The 3-D, Photo-Real Talking Head project is about creating a 3-D, photorealistic avatar that looks just like you," Wang says. "We only need a short, 2-D video clip of you, talking to a webcam or a Kinect sensor. In this 2-D video, we need to capture all your mouth movements, all your facial animations, eye blinking, eyebrow movement?all those raw materials should be in the training data. Once we get the training data, we'll use that to build a 3-D, photorealistic avatar for you."

"The 3-D, Photo-Real Talking Head project is about creating a 3-D, photorealistic avatar that looks just like you," Wang says. "We only need a short, 2-D video clip of you, talking to a webcam or a Kinect sensor. In this 2-D video, we need to capture all your mouth movements, all your facial animations, eye blinking, eyebrow movement?all those raw materials should be in the training data. Once we get the training data, we'll use that to build a 3-D, photorealistic avatar for you."

The technology invoked combines 3-D modeling and the 2-D video, and, in so doing, creates a realistic avatar with natural head motion and facial expressions. It?s not a simple matter.

"A cartoon avatar is relatively easy," Wang says, "but a more humanlike and realistic avatar is very challenging. People may see some realistic avatars in games or movies, but it is very expensive to do that. They may need to use an expensive, motion-capture system to capture a real person?s motion, or they may need to have an artist to work frame by frame. We are looking for an easier way for everyone to automatically create an avatar for themselves."

The project uses a simple 3-D head model for rotation and the translation, with all the facial animation rendered by a 2-D imaging sequence, which is synthesized atop the 3-D model.

"It?s just like our experience when we watch a movie," Wang says. "A 2-D imaging sequence is projected to a 2-D flat screen. Here, we project a 2-D face image to a 3-D head model. When your mouth opens and closes in the 2-D video, its projection on the 3-D model also opens and closes, and since it?s 3-D, it can be viewed from different directions. All the 2-D images are photorealistic, and the output 3-D is a photorealistic talking head?or avatar. It?s very natural and acceptable to human eyes. It looks natural, and it moves in natural ways."

For 3-D, photorealistic avatars, the most difficult part is soft tissues on the face: the eyes, lips, tongue, teeth, wrinkles on the face, all of which require highly accurate geometry modeling. Building such models--and running them on a PC--are complex chores, but that work represents much of what Wang and her colleagues have achieved.

There are, Wang notes, many potential applications for this technology.

"It can be used in telepresence," she says. "You can create an immersive virtual experience that has multiple people appearing together in the same space when they physically cannot.

"Another potential use is in gaming. It also can be used in social networking, and there are a lot of consumer-based applications, like online shopping. You could use your model to try out makeup and try on clothes."