Nvidia 2016 GPU Technology Conference Is About Artificial Intelligence

As artificial intelligence sweeps across the technology landscape, NVIDIA unveiled today at its annual GPU Technology Conference a series of new products and technologies focused on deep learning, virtual reality and self-driving cars. NVIDIA CEO and co-founder Jen-Hsun Huang introduced a deep-learning supercomputer in a box: a single integrated system with the computing throughput of 250 servers. The NVIDIA DGX-1, with 170 teraflops of half-precision performance, can speed up training by over 12x.

Huang described the current state of AI, pointing to a wide range of ways it’s being deployed. He noted more than 20 cloud-services giants — from Alibaba to Yelp, and Amazon to Twitter — that generate vast amounts of data in their hyperscale data centers and use NVIDIA GPUs for tasks such as photo processing, speech recognition and photo classification.

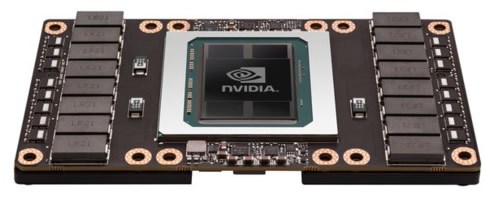

Underpinning DGX-1 is a new processor, the NVIDIA Tesla P100 GPU, Nvidia's most advanced accelerator and the first to be based on the company’s 11th generation Pascal architecture.

Based on five technologies, which Jen-Hsun smilingly called "miracles," the Tesla P100 enables a new class of servers that can deliver the performance of hundreds of CPU server nodes.

These five breakthroughs are the following:

- NVIDIA Pascal architecture for exponential performance leap -- A Pascal-based Tesla P100 solution delivers over a 12x increase in neural network training performance compared with a previous-generation NVIDIA Maxwell-based solution.

- NVIDIA NVLink for maximum application scalability -- The NVIDIA NVLink high-speed GPU interconnect scales applications across multiple GPUs, delivering a 5x acceleration in bandwidth compared to today's best-in-class solution. Up to eight Tesla P100 GPUs can be interconnected with NVLink to maximize application performance in a single node, and IBM has implemented NVLink on its POWER8 CPUs for fast CPU-to-GPU communication.

- 16nm FinFET for unprecedented energy efficiency -- With 15.3 billion transistors built on 16 nanometer FinFET fabrication technology, the Pascal GPU is the world's largest FinFET chip ever built.

- CoWoS with HBM2 for big data workloads -- The Pascal architecture unifies processor and data into a single package to deliver compute efficiency. A new approach to memory design, Chip on Wafer on Substrate (CoWoS) with HBM2, provides a 3x boost in memory bandwidth performance, or 720GB/sec, compared to the Maxwell architecture.

- New AI algorithms for peak performance -- New half-precision instructions deliver more than 21 teraflops of peak performance for deep learning.
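To make the half-precision trade-off concrete, here is a minimal sketch using only the Python standard library. It does not touch a GPU or any NVIDIA API; it only illustrates the IEEE 754 16-bit storage format that half-precision instructions operate on. The helper names `to_half` and `to_single` are hypothetical, chosen for illustration.

```python
import struct


def to_half(x: float) -> float:
    """Round-trip a Python float through 16-bit (half-precision) storage."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_single(x: float) -> float:
    """Round-trip a Python float through 32-bit (single-precision) storage."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# Storage cost per value in each format.
HALF_BYTES = struct.calcsize("<e")
SINGLE_BYTES = struct.calcsize("<f")

# Rounding error introduced by storing the same value in each format.
value = 0.1
half_error = abs(to_half(value) - value)
single_error = abs(to_single(value) - value)

print(f"bytes per value: half={HALF_BYTES}, single={SINGLE_BYTES}")
print(f"rounding error for {value}: half={half_error:.2e}, single={single_error:.2e}")
```

A half-precision value takes half the storage of a single-precision one but carries a larger rounding error. Trading precision for throughput in this way is what lets the half-precision peak figure exceed the single-precision one.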

The Tesla P100 GPU accelerator delivers a new level of performance for a range of HPC and deep learning applications, including the AMBER molecular dynamics code, which runs faster on a single server node with Tesla P100 GPUs than on 48 dual-socket CPU server nodes.

Tesla P100 Specifications

- 5.3 teraflops double-precision performance, 10.6 teraflops single-precision performance and 21.2 teraflops half-precision performance with NVIDIA GPU BOOST technology

- 160GB/sec bi-directional interconnect bandwidth with NVIDIA NVLink

- 16GB of CoWoS HBM2 stacked memory

- 720GB/sec memory bandwidth with CoWoS HBM2 stacked memory

- Enhanced programmability with page migration engine and unified memory

- ECC protection for increased reliability

- Server-optimized for highest data center throughput and reliability
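The peak figures above can be cross-checked with a little arithmetic. The sketch below uses only numbers quoted in this article, plus one assumption: that a DGX-1 holds eight Tesla P100 GPUs. The article does not state that count; it says only that up to eight P100s can be linked with NVLink in a single node.

```python
# Peak figures quoted in the specification list, in teraflops.
P100_FP64_TFLOPS = 5.3
P100_FP32_TFLOPS = 10.6
P100_FP16_TFLOPS = 21.2

# Assumption (not stated in the article): eight P100 GPUs per DGX-1.
GPUS_PER_DGX1 = 8

# Each halving of precision doubles the quoted peak throughput.
fp32_over_fp64 = P100_FP32_TFLOPS / P100_FP64_TFLOPS
fp16_over_fp32 = P100_FP16_TFLOPS / P100_FP32_TFLOPS

# Aggregate half-precision peak for the assumed eight-GPU system.
dgx1_fp16_tflops = GPUS_PER_DGX1 * P100_FP16_TFLOPS

print(f"FP32 / FP64 peak ratio: {fp32_over_fp64:.1f}")
print(f"FP16 / FP32 peak ratio: {fp16_over_fp32:.1f}")
print(f"Eight-GPU FP16 peak: {dgx1_fp16_tflops:.1f} teraflops")
```

Under that assumption, the aggregate comes to about 169.6 teraflops, which is consistent with the 170 teraflops of half-precision performance quoted for DGX-1.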

General availability for the Pascal-based NVIDIA Tesla P100 GPU accelerator in the new NVIDIA DGX-1 deep learning system is in June. It is also expected to be available beginning in early 2017 from server manufacturers.

A key early customer for DGX-1 is Massachusetts General Hospital. The hospital has set up a clinical data center, with NVIDIA as a founding partner, that will use AI to help diagnose disease, starting in the fields of radiology and pathology.

While NVIDIA hardware has long made headlines, software is key to advancing GPU-accelerated computing. Nvidia announced the NVIDIA SDK, and Jen-Hsun described a series of major updates it is receiving.

The keynote’s visual highlight was a view of a VR experience built on NASA’s research to send visitors to Mars. The Mars 2030 VR experience, developed with FUSION Media with advice from NASA, was demoed by personal computing pioneer Steve Wozniak.

Jen-Hsun showed how Nvidia's Iray technology can create interactive, virtual 3D worlds with great fidelity. These Iray VR capabilities allow Nvidia to create environments that users can explore with a headset, prowling around photorealistic virtual spaces such as a building not yet constructed.

"With Iray VR, you’ll be able to look around the inside of a virtual car, a modern loft, or the interior of our still unfinished Silicon Valley campus with uncanny accuracy," Nvidia said.

Expect more details on the availability of Iray VR capabilities later this spring.

To show what VR can do, Google sent along 5,000 Google Cardboard VR viewers. Nvidia passed them out after the keynote so GTC attendees could experience NVIDIA Iray VR technology on their phones.

Continuing efforts to help build autonomous vehicles with super-human levels of perception, Nvidia also introduced an end-to-end mapping platform for self-driving cars.

It’s designed to help automakers, map companies and startups create HD maps and keep them updated, using the compute power of NVIDIA DRIVE PX 2 in the car and NVIDIA Tesla GPUs in the data center.

Maps are a key component for self-driving cars. Automakers will need to equip vehicles with powerful on-board supercomputers capable of processing inputs from multiple sensors to precisely understand their environments.

Nvidia is also putting its DRIVE PX 2 AI supercomputer into the cars that will compete in the Roborace Championship, the first global autonomous motor sports competition.

Part of the new Formula E ePrix electric racing series, Roborace combines the intrigue of robot competition with earth-friendly alternative energy racing.

Every Roborace will pit 10 teams, each with two driverless cars equipped with NVIDIA DRIVE PX 2, against each other in one-hour races. The teams will have identical cars. Their sole competitive advantage: software.

DRIVE PX 2 provides supercomputer-class performance, up to 24 trillion operations a second for AI applications, in a case the size of a lunchbox. And such a small box is exactly what these racecars need.