Chinese Companies Adopt Nvidia's HGX-2 Server and Turing T4 Cloud GPU

Adoption of the NVIDIA T4 Cloud GPU as well as the NVIDIA HGX-2 server platform is accelerating, with more tech giants unveiling products and services based on Nvidia's platforms, the company announced at GTC China.

NVIDIA Turing T4 Cloud GPU

Baidu, Tencent, JD.com and iFLYTEK have begun using T4 to expand and accelerate their hyperscale datacenters. In addition, China’s computer makers — including Inspur, Lenovo, Huawei, Sugon, IPS and H3C — have announced a wide range of new T4 servers.

NVIDIA T4 is being used to accelerate AI inference and training in fields including healthcare, finance and retail.

Last week, Nvidia announced at the SC18 supercomputing show that the T4 was featured in 57 separate server designs, and Google Cloud announced T4 availability to its Google Cloud Platform customers.

Among previously announced server companies featuring the NVIDIA T4 are Dell EMC, Hewlett Packard Enterprise, IBM, Lenovo and Supermicro.

Based on the new NVIDIA Turing architecture, the T4 GPU features multi-precision Turing Tensor Cores and new RT Cores, combined with accelerated containerized software stacks to deliver high performance at scale.

Among China server companies featuring T4 GPUs are Inspur, Huawei, Lenovo, Sugon, Inspur Power System and H3C. Their new systems include:

- Inspur NF5280M4 /NF5280M5/NF5288M5/NF5468M5

- Huawei G2500/2288 HV5/ 5288V5/G530 V5/G560 V5

- Lenovo ThinkSystem SR630/SR650

- Sugon X580-G30/X745-G30/X780-G30/X780-G35/X785-G30 / X740-H30

- Inspur Power System: FP5295G2

- H3C Uniserver G4900G3

Systems are expected to begin shipping before the end of the year.

Roughly the size of a candy bar, the low-profile, 70-watt T4 GPU has the flexibility to fit into a standard server or any Open Compute Project hyperscale server design. Server designs can range from a single T4 GPU all the way up to 20 GPUs in a single node.

The T4 GPU’s multi-precision capabilities power AI performance for a wide range of AI workloads at four different levels of precision, offering 8.1 TFLOPS at FP32, 65 TFLOPS at FP16 as well as 130 TOPS of INT8 and 260 TOPS of INT4. For AI inference workloads, a server with two T4 GPUs can replace up to 54 CPU-only servers. For AI training, a server with two T4 GPUs can replace nine dual-socket, CPU-only servers.

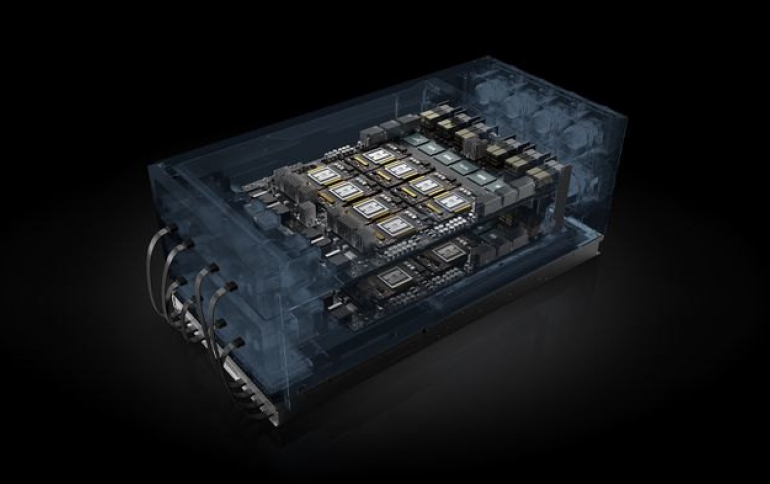

NVIDIA HGX-2 GPU-Accelerated Platform

NVIDIA also announced adoption of the NVIDIA HGX-2 accelerated server platform for AI deep learning, machine learning and high performance computing.

The platform is delivering two petaflops of compute performance in a single node. It can run AI machine learning workloads nearly 550x faster, AI deep learning workloads nearly 300x faster and HPC workloads nearly 160x faster, all compared to a CPU-only server, according to Nvidia.

The newest adoptions of the HGX-2 announced today at GTC China include:

Baidu and Tencent are using HGX-2 for a wide range of even AI services, both for their internal use and for their cloud customers.

Inspur is China’s first to build an HGX-2 server. Its Inspur AI Super-Server AGX-5 is designed to solve the performance expansion problem of AI, deep learning and HPC.

Huawei, Lenovo and Sugon announced that they have become NVIDIA HGX-2 cloud server platform partners.

Previously announced HGX-2 support and adoption comes from global server makers, including Foxconn, Inventec, QCT, Quanta, Supermicro, Wistron and Wiwynn. Additionally, Oracle announced last month its plans to bring the NVIDIA HGX-2 platform to Oracle Cloud Infrastructure in both bare-metal and virtual machine instances.

The HGX-2 cloud server platform features multi-precision computing capabilities, providing flexibility to support the future of computing. It fuses high-precision FP64 and FP32 for accurate HPC, while also enabling faster, reduced-precision FP16 and INT8 for deep learning and machine learning.

The HGX-2 incorporates features such as NVIDIA NVSwitch interconnect fabric, which links 16 NVIDIA Tesla V100 Tensor Core GPUs to work as a single, giant GPU delivering two petaflops of AI performance. It also provides 0.5TB of memory and 16TB/s of aggregate memory bandwidth.