MLPerf Releases Results for Machine Learning Inference Benchmark

After introducing the first inference benchmarks in June of 2019, today the MLPerf consortium released 595 inference benchmark results from 14 organizations.

The MLPerf Inference v0.5 machine learning inference benchmark has been designed to measure how well and how quickly various accelerators and systems execute trained neural networks.

The initial version of the benchmark, v0.5, currently covers only five networks/benchmarks, and it does not yet include any power metrics, which would be necessary to measure overall energy efficiency.

In any case, the benchmark has attracted the attention of the major hardware vendors, all of whom are keen to show off what their hardware can do on a standardized test, and to demonstrate to clients why their solution is superior.

Of the 595 benchmark results released today, 166 are in the Closed Division, which is intended for direct comparison of systems. The results span 30 different systems, ranging from embedded devices and smartphones to large-scale data center systems, and show a four-order-of-magnitude difference in performance and a three-order-of-magnitude range in estimated power consumption. The remaining 429 results are in the Open Division and show a more diverse range of models, including low-precision implementations and alternative models.
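The division counts can be checked with simple arithmetic. The following Python sketch uses only the figures stated in the release; the variable names are illustrative, and the order-of-magnitude spans are rough multipliers rather than measured values.

```python
# Result counts as reported in the MLPerf Inference v0.5 release.
total_results = 595
closed_results = 166
open_results = 429

# The two divisions should account for every released result.
assert closed_results + open_results == total_results

closed_share = closed_results / total_results
print(f"Closed Division share: {closed_share:.1%}")
print(f"Open Division share: {1 - closed_share:.1%}")

# "Four orders of magnitude" means the fastest system is roughly
# 10**4 times faster than the slowest; three orders is roughly 10**3.
performance_span = 10 ** 4
power_span = 10 ** 3
print(f"Performance span: ~{performance_span:,}x, power span: ~{power_span:,}x")
```

In other words, a little over a quarter of the submitted results are in the Closed Division.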

Companies in China, Israel, Korea, the United Kingdom, and the United States submitted benchmark results. These companies include: Alibaba, Centaur Technology, Dell EMC, dividiti, FuriosaAI, Google, Habana Labs, Hailo, Inspur, Intel, NVIDIA, Polytechnic University of Milan, Qualcomm, and Tencent.

According to David Kanter, Inference and Power Measurement Co-chair, “We are very excited about our roadmap, future versions of MLPerf will include additional benchmarks such as speech-to-text and recommendation, and additional metrics such as power consumption.”

“MLPerf is also developing a smartphone app that runs inference benchmarks for use with future versions. We are actively soliciting help from all our members and the broader community to make MLPerf better,” stated Vijay Janapa Reddi, Associate Professor, Harvard University, and MLPerf Inference Co-chair.

Commenting on the results, Nvidia touted a win, saying that it posted the fastest results.

MLPerf's five inference benchmarks, applied across four inferencing scenarios, covered AI applications such as image classification, object detection and translation. Nvidia topped all five benchmarks for both data center-focused scenarios (server and offline), with its Turing GPUs.

Nvidia's Xavier SoC turned in the highest performance among the commercially available edge and mobile SoCs submitted to MLPerf under the edge-focused single-stream and multi-stream scenarios.

Nvidia credits the programmability of its platform across a range of AI workloads for its strong performance.

"AI is not just about accelerating a neural network phase of the AI application, it is about accelerating the whole end to end pipeline," said Kharya. "At Nvidia, we are focused on providing a single architecture and single programming platform and optimization for all of the range of products that we offer."

Intel's first MLPerf inference results

Intel’s forthcoming AI ASIC for inference, the Intel Nervana Neural Network Processor (NNP-I), already shows strong results, both in raw performance and in performance per watt. Running two pre-production Intel Nervana NNP-I processors on pre-alpha software, Intel achieved 10,567 images/sec in the Offline scenario and 10,263 images/sec in the Server scenario for ImageNet image classification on ResNet-50 v1.5 when using ONNX. Intel expects continued improvements as the company's team further matures the software stack and tests production parts for the next MLPerf inference disclosure.

Intel Xeon Scalable processors power most of the world’s inference in data centers today. Intel released built-in AI acceleration with Intel AVX-512 instructions in the first generation of Intel Xeon Scalable processors in 2017, enabling a large number of operations to be done in parallel with a larger number of cores. Intel built upon this foundation with Intel DL Boost technology integrated into the 2nd Generation Intel Xeon Scalable processors that were released earlier this year. Intel DL Boost’s Vector Neural Network Instructions (VNNI) maximize compute resources by combining three instruction sets into one while enabling INT8 deep learning inference.

With these integrated acceleration technologies, Intel Xeon Scalable processors delivered the following MLPerf inference benchmark results:

- 9,468 images/sec in Offline scenario and 5,262 images/sec in Server scenario for COCO object detection on SSD-MobileNet v1 when using the OpenVINO toolkit, the lowest latency in single stream measurement among all submissions; and

- 29,203 images/sec in Offline scenario and 27,245 images/sec in Server scenario for ImageNet image classification on MobileNet v1; 5,966 images/sec in Offline scenario and 4,851 images/sec in Server scenario for ImageNet image classification on ResNet-50 v1.5, both when using PyTorch.
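One way to read the paired Offline and Server figures is to ask how much of the Offline throughput each system retains in the Server scenario, where queries must also meet a latency bound. The Python sketch below computes that ratio from the numbers quoted in this article (including the NNP-I figures); the dictionary layout and the helper `server_to_offline_ratio` are illustrative, not part of any MLPerf tooling.

```python
# Throughput figures (images/sec) quoted in this article.
# Keys are (system, model); values are (offline, server).
results = {
    ("2x Nervana NNP-I", "ResNet-50 v1.5"): (10_567, 10_263),
    ("Xeon Scalable", "SSD-MobileNet v1"): (9_468, 5_262),
    ("Xeon Scalable", "MobileNet v1"): (29_203, 27_245),
    ("Xeon Scalable", "ResNet-50 v1.5"): (5_966, 4_851),
}


def server_to_offline_ratio(offline: float, server: float) -> float:
    """Fraction of Offline throughput retained in the Server scenario."""
    return server / offline


for (system, model), (offline, server) in results.items():
    ratio = server_to_offline_ratio(offline, server)
    print(f"{system:18s} {model:18s} {ratio:.1%}")
```

A ratio close to 100% suggests the latency constraint costs little throughput for that system and model; these ratios compare scenarios within one submission and say nothing about how different vendors' systems compare with each other.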

The latest MLPerf benchmark results for the Intel Core i3-1005G1 are also proof of strong AI opportunities in client-side applications: 218 images/sec for COCO object detection on SSD-MobileNet v1 with excellent latency, 508 images/sec for ImageNet image classification on MobileNet v1, and 101 images/sec for ImageNet image classification on ResNet-50 v1.5, all when using the OpenVINO toolkit.

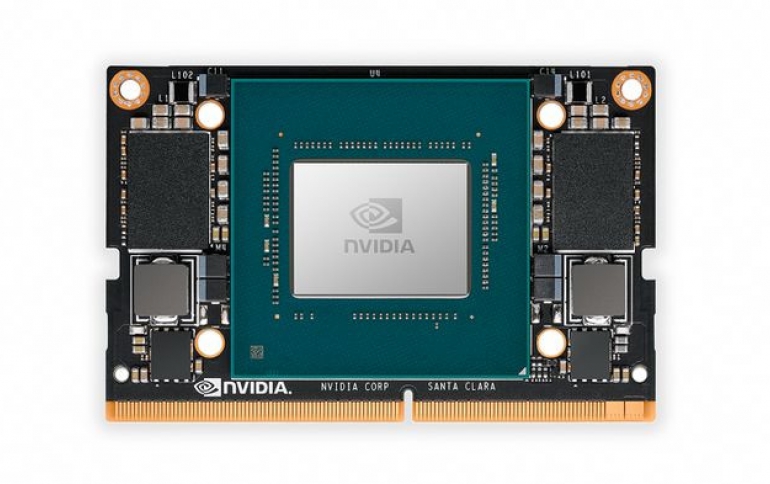

Nvidia Xavier Performance comes to nano form factor for $399

Nvidia also today introduced Jetson Xavier NX, a powerful AI supercomputer for robotic and embedded computing devices at the edge.

With a compact form factor smaller than a credit card, the Jetson Xavier NX module delivers performance up to 21 TOPS for running modern AI workloads, and consumes as little as 10 watts of power.

Jetson Xavier NX can be used in embedded edge computing devices that demand increased performance but are constrained by size, weight, power budgets or cost. These include small commercial robots, drones, intelligent high-resolution sensors for factory logistics and production lines, optical inspection, network video recorders, portable medical devices and other industrial IoT systems.

Jetson Xavier NX delivers up to 14 TOPS (at 10W) or 21 TOPS (at 15W), running multiple neural networks in parallel and processing data from multiple high-resolution sensors simultaneously in a Nano form factor (70x45mm). For companies already building embedded machines, Jetson Xavier NX runs on the same CUDA-X AI software architecture as all Jetson offerings.
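The two quoted operating points can be compared on an efficiency basis. This short Python sketch divides peak TOPS by module power for each mode; the helper `tops_per_watt` is an illustrative name, and the result is only as meaningful as the vendor's peak figures.

```python
# Jetson Xavier NX operating points quoted by Nvidia: (watts, peak TOPS).
power_modes = [(10, 14), (15, 21)]


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak tera-operations per second delivered per watt of module power."""
    return tops / watts


for watts, tops in power_modes:
    print(f"{watts} W mode: {tops_per_watt(tops, watts):.1f} TOPS/W")
```

Both modes work out to the same peak efficiency, so on these figures the 15W mode trades power for throughput proportionally.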

As part of NVIDIA’s one-software-architecture approach, Jetson Xavier NX is supported by the NVIDIA JetPack software development kit, a complete AI software stack that can run modern and complex AI networks and includes accelerated libraries for deep learning as well as computer vision, computer graphics, multimedia and more.

Jetson Xavier NX module specifications:

- GPU: NVIDIA Volta with 384 NVIDIA CUDA cores and 48 Tensor Cores, plus 2x NVDLA

- CPU: 6-core Carmel Arm 64-bit CPU, 6MB L2 + 4MB L3

- Video: 2x 4K30 Encode and 2x 4K60 Decode

- Camera: Up to six CSI cameras (36 via virtual channels); 12 lanes (3x4 or 6x2) MIPI CSI-2

- Memory: 8GB 128-bit LPDDR4x; 51.2GB/second

- Connectivity: Gigabit Ethernet

- OS Support: Ubuntu-based Linux

- Module Size: 70x45mm
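The memory bandwidth figure in the list follows from the bus width and the memory transfer rate. The Python sketch below reproduces it under one loudly stated assumption: a transfer rate of 3200 MT/s, which the article does not give. It also checks that both quoted CSI lane arrangements add up to 12 lanes.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, transfers_per_second: float) -> float:
    """Theoretical peak bandwidth: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * transfers_per_second / 1e9


BUS_WIDTH_BITS = 128           # from the specification list
ASSUMED_TRANSFER_RATE = 3.2e9  # ASSUMPTION: 3200 MT/s, not stated in the article

bandwidth = peak_bandwidth_gb_per_s(BUS_WIDTH_BITS, ASSUMED_TRANSFER_RATE)
print(f"Peak memory bandwidth: {bandwidth:.1f} GB/s")

# Both quoted camera configurations use the same 12 MIPI CSI-2 lanes.
lane_configs = [(3, 4), (6, 2)]  # (number of cameras, lanes per camera)
assert all(cameras * lanes == 12 for cameras, lanes in lane_configs)
```

Under that assumed transfer rate the calculation matches the 51.2GB/second in the specification list.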

Jetson Xavier NX is the latest addition to the Jetson family, which includes Jetson Nano, the Jetson AGX Xavier series and the Jetson TX2 series. Jetson Xavier NX offers a rich set of IOs, from high-speed CSI and PCIe to low-speed I2Cs and GPIOs.

Jetson Xavier NX is also pin-compatible with Jetson Nano, which allows hardware designs to be shared and lets those with Jetson Nano carrier boards and systems upgrade to Jetson Xavier NX. It also supports all major AI frameworks, including TensorFlow, PyTorch, MXNet, Caffe and others.

Priced at $399, the Jetson Xavier NX module will be available in March. Developers can begin application development today using the Jetson AGX Xavier Developer Kit with a software patch to emulate Jetson Xavier NX.