Google's Pichai Calls For AI Regulation

Sundar Pichai, the CEO of Google-owner Alphabet, urged regulators on Monday to take a “proportionate approach” when drafting rules for artificial intelligence (AI).

Regulators are grappling with ways to govern AI in order to curb potential misuse, as companies and law enforcement agencies increasingly adopt the technology.

Pichai said there was no question AI needs to be regulated, but that rulemakers should tread carefully.

“Sensible regulation must also take a proportionate approach, balancing potential harms with social opportunities. This is especially true in areas that are high risk and high value,” he said during a conference in Brussels organized by think tank Bruegel.

Regulators should tailor rules according to different sectors, Pichai said, citing medical devices and self-driving cars as examples that require different rules.

He also called on governments to align their rules and agree on core values.

One area of concern is so-called “deep fakes” - video and audio clips that have been manipulated using AI. Pichai said Google had released open datasets to help the research community build better tools to detect such fakes.

Another concern is facial recognition technology, which Pichai said could be used for “nefarious reasons”.

Google Cloud is not offering general-purpose facial recognition application programming interfaces (APIs) while it establishes policy and technical safeguards, he said.

The Google chief said existing rules, such as Europe’s GDPR privacy legislation and regulations for medical devices such as AI-assisted heart monitors, would serve as strong foundations for governing AI in some areas, but that governments would need to establish regulations for self-driving cars.

The European Commission is taking a tougher line on AI than the United States, aiming to strengthen existing regulations that protect Europeans’ privacy and data rights.

Earlier this month, the U.S. government published regulatory guidelines on AI aimed at limiting authorities’ overreach and urged Europe to avoid an aggressive approach.

Google has also come under intense criticism over how it handles users’ privacy in some of its AI projects. Google faces a U.S. federal inquiry after the Wall Street Journal reported in November that it collects health-care data from millions of Americans to design new AI software. It’s also facing scrutiny over the methods it uses for training algorithms that run Google Assistant.

Supporting AI skills training

MolenGeek started in 2015 in Molenbeek, Belgium, as a coding school for anyone to learn digital skills. But unlike many other schools, MolenGeek is driven by a social mission of fostering inclusion, integration and community development in this culturally diverse suburb of Brussels.

In five years, it’s become a co-working space for young people from all backgrounds, enabling them to network and share their experiences. Out of MolenGeek's community of 800 active members, 195 people from predominantly underprivileged backgrounds have gone through entrepreneurship skills training, and 35 new startups have been built and grown out of its incubator program.

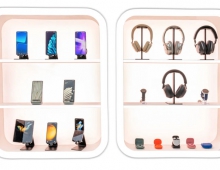

Sundar Pichai announced at MolenGeek an additional Google.org grant of $250,000 (over EUR 200,000) to expand its coding school and increase the community’s access to new kinds of digital skills training. MolenGeek will use the funds to develop a new six-month content module focused on artificial intelligence and data analytics. This is part of MolenGeek’s longer-term plan to open a second hub in Brussels later this year, which will include an incubator dedicated to the needs of AI-focused startups, as well as a six-month AI training program. In addition to the funding, Google AI experts will provide MolenGeek with ongoing mentorship, and Googlers will have opportunities to volunteer.