IBM Launches Research Collaboration Center to Move Computing Beyond CPUs and GPUs

IBM is investing $2 billion in a new artificial intelligence research hub in New York focused on computer-chip research, development, prototyping, testing and simulation.

In the pursuit of more advanced hardware for the AI era, IBM is working across its Systems, Research and Watson divisions to take a fresh approach to AI, which requires significant changes in the fundamentals of systems and computing design.

IBM, with support from New York State (NYS), SUNY Polytechnic Institute, and the founding partnership members, announced an ambitious plan to create a global research hub to develop next-generation AI hardware and expand their joint research efforts in nanotechnology. The IBM Research AI Hardware Center will be the nucleus of a new ecosystem of research and commercial partners collaborating with IBM researchers to further accelerate the development of AI-optimized hardware innovations.

Samsung is a strategic IBM partner in both manufacturing and research. Mellanox Technologies is a leading supplier of high-performance, end-to-end smart interconnect solutions which accelerate many of the world’s leading AI and machine learning (ML) platforms. Synopsys is the leader in software platforms, emulation and prototyping solutions, and IP for developing the high-performance silicon chips and secure software applications that are driving advancements in AI.

IBM says that partnerships with leading semiconductor equipment companies Applied Materials and Tokyo Electron Limited (TEL) are crucial to the successful introduction of disruptive materials and devices to fuel its AI hardware roadmap.

The company is advancing plans with its SUNY Polytechnic Institute host in Albany, New York, to provide expanded infrastructure support and academic collaborations, and with neighboring Rensselaer Polytechnic Institute (RPI) Center for Computational Innovations (CCI) for academic collaborations in AI and computation. Working through the Center, IBM and its partners will advance a range of technologies from chip-level devices, materials, and architecture to the software supporting AI workloads.

Today’s systems have achieved improved AI performance by infusing machine-learning capabilities with high-bandwidth CPUs and GPUs, specialized AI accelerators and high-performance networking equipment. To maintain this trajectory, IBM believes that new thinking is needed to accelerate AI performance scaling to match ever-expanding AI workload complexity.

The IBM Research AI Hardware Center will enable IBM and its partner ecosystem to overcome current machine-learning limitations through approaches that include approximate computing through its Digital AI Cores and in-memory computing through its Analog AI Cores. IBM says that these technologies will help pave a path to 1,000x AI performance efficiency improvement over the next decade.
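To put the 1,000x figure in perspective, the short Python sketch below works out the yearly gain it would imply if the improvement compounded at a steady rate across ten years. The steady-rate assumption and the helper name `annual_factor` are illustrative only; IBM's announcement does not say how the improvement would be distributed over the decade.

```python
def annual_factor(total_gain: float, years: int) -> float:
    """Constant yearly multiplier that compounds to total_gain over `years`.

    Assumption (not from IBM): the gain accrues at the same rate every year.
    """
    return total_gain ** (1.0 / years)


# 1,000x over a decade, the target quoted in the announcement.
factor = annual_factor(1000.0, 10)
print(f"Implied yearly improvement: {factor:.3f}x")
```

Under that assumption the target works out to roughly a doubling of AI performance efficiency every year.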

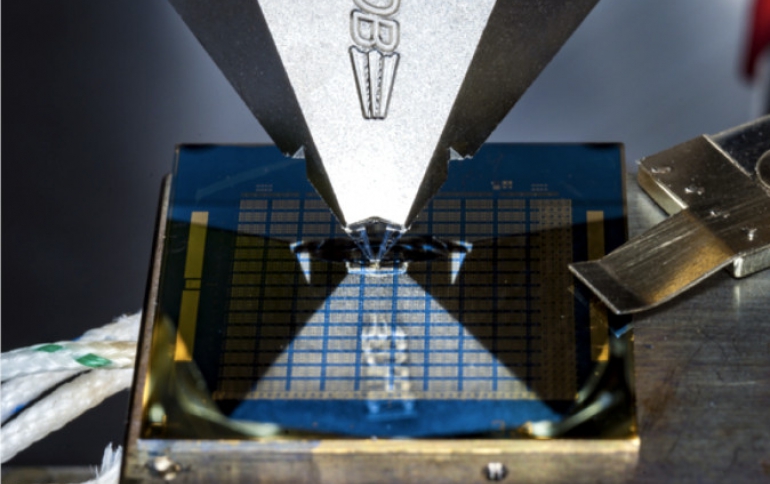

The Center will host research and development, emulation, prototyping, testing, and simulation activities for new AI cores specially designed for training and deploying advanced AI models, including a test bed in which members can demonstrate Center innovations in real-world applications. Specialized wafer processing for the IBM Research AI Hardware Center will be done in Albany, with some support at IBM’s Thomas J. Watson Research Center in Yorktown Heights, N.Y.

A key area of research and development will be systems that meet the demands of deep learning inference and training processes. Deep learning offers significant accuracy improvements over more general machine learning for unstructured data. Its intense processing demands will grow exponentially as algorithms become more complex in order to deliver AI systems with increased cognitive abilities. Other research efforts will focus on creating a multi-year roadmap for the development and delivery of specialized accelerator cores and chip architectures that can further improve AI performance.