Intel and Mobileye Begin Testing Their Autonomous Fleet in Jerusalem

The first phase of the Intel and Mobileye 100-car autonomous vehicle (AV) fleet has begun operating in the challenging traffic conditions of Jerusalem.

In the coming months, Intel plans to expand the fleet to the U.S. and other regions.

Mobileye, based in Israel and working with Intel, has equipped its autonomous cars with cameras and an AI system governed by a formal safety envelope that the company calls Responsibility-Sensitive Safety (RSS). RSS is a model that formalizes the common-sense principles of safe driving into a set of mathematical formulas that a machine can understand (safe following and merging distances, right of way, and caution around occluded objects, for example). If the AI-based software proposes an action that would violate one of these common-sense principles, the RSS layer rejects the decision. Put simply, RSS is what prevents the AV from causing dangerous situations along the way.
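To make the "safe following distance" idea concrete, here is a minimal Python sketch of the longitudinal safe-distance rule as it appears in the published RSS paper (it is not spelled out in this article). The function names, the response time, and the acceleration and braking limits are illustrative assumptions, not Mobileye's production values.

```python
def rss_min_following_distance(v_rear, v_front, response_time,
                               a_max_accel, a_min_brake, a_max_brake):
    """Minimum safe gap in meters between a rear car and the car ahead.

    Worst case assumed by the rule: the front car brakes as hard as it can
    (a_max_brake), while the rear car keeps accelerating (a_max_accel) for
    its whole response time and only then brakes gently (a_min_brake).
    Speeds are in m/s, accelerations in m/s^2, response_time in seconds.
    """
    v_after_response = v_rear + response_time * a_max_accel
    distance = (
        v_rear * response_time
        + 0.5 * a_max_accel * response_time ** 2
        + v_after_response ** 2 / (2 * a_min_brake)
        - v_front ** 2 / (2 * a_max_brake)
    )
    # A negative result means any gap is safe, so clamp at zero.
    return max(distance, 0.0)


def rss_allows(gap, **params):
    """Hypothetical veto check: accept a state only if the gap is safe."""
    return gap >= rss_min_following_distance(**params)


# Illustrative (assumed) parameters: both cars at 20 m/s (72 km/h).
params = dict(v_rear=20.0, v_front=20.0, response_time=1.0,
              a_max_accel=2.0, a_min_brake=4.0, a_max_brake=8.0)

required_gap = rss_min_following_distance(**params)  # 56.5 m
print(required_gap, rss_allows(60.0, **params), rss_allows(40.0, **params))
```

With these assumed numbers, a planner proposal that would shrink the gap to 40 m would be rejected, while one that keeps 60 m would pass.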

During this initial phase, the fleet is powered only by cameras. In a 360-degree configuration, each vehicle uses 12 cameras, with eight cameras providing long-range surround view and four cameras utilized for parking.

"The goal in this phase is to prove that we can create a comprehensive end-to-end solution from processing only the camera data," says Amnon Shashua, senior vice president at Intel and the CEO of Mobileye.

The camera-only phase is part of Mobileye's strategy for achieving what the company refers to as "true redundancy" of sensing. True redundancy refers to a sensing architecture consisting of multiple independently engineered sensing systems, each of which can support fully autonomous driving on its own. This is in contrast to fusing raw sensor data from multiple sources together early in the process, which in practice results in a single sensing system. According to Mobileye, true redundancy provides two advantages. First, the amount of data required to validate the perception system is massively lower (the square root of 1 billion hours, or roughly 30,000 hours, vs. 1 billion hours). Second, if one of the independent systems fails, the vehicle can continue operating safely, in contrast to a vehicle with a low-level fused system, which needs to cease driving immediately.
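The square-root figure can be reproduced with a short calculation. The sketch below assumes, as a simplification not stated in the article, that the two sensing systems fail independently, so the chance of both failing in the same hour is the product of their individual failure rates. The variable and function names are hypothetical.

```python
# Target from the article: validate to about one failure per 1 billion hours.
TARGET_HOURS = 1_000_000_000


def per_subsystem_hours(target_hours, n_subsystems=2):
    """Hours between failures each subsystem must demonstrate on its own,
    assuming the subsystems fail independently of one another."""
    return target_hours ** (1.0 / n_subsystems)


hours_each = per_subsystem_hours(TARGET_HOURS)  # about 31,623 hours
p_each = 1.0 / hours_each                       # per-hour failure rate of one system
p_joint = p_each ** 2                           # both systems fail in the same hour

print(f"validate each subsystem over ~{hours_each:,.0f} hours")
print(f"joint failure rate: 1 in {1.0 / p_joint:,.0f} hours")
```

The result depends entirely on the independence assumption: any correlated failure mode shared by the camera and radar/lidar systems would raise the joint failure rate above this product.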

The radar/lidar layer will be added in the coming weeks as a second phase of Mobileye's development. Synergies among the sensing modalities can then be used to increase the "comfort" of driving.

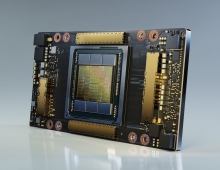

The end-to-end compute system in the AV fleet is powered by four Mobileye EyeQ4 SoCs. An EyeQ4 SoC delivers 2.5 tera operations per second (TOP/s) (for deep networks with an 8-bit representation) while running at 6 watts of power. Produced in 2018, the EyeQ4 is Mobileye's latest SoC; this year will see four production launches, with an additional 12 production launches slated for 2019. The SoC targeting fully autonomous driving is the Mobileye EyeQ5, whose engineering samples are due later this year. An EyeQ5 delivers 24 TOP/s and is roughly 10 times more powerful than an EyeQ4. In production, Mobileye is planning for three EyeQ5s to power a full L4/L5 AV. Therefore, the system on the road today includes approximately one-tenth of the computing power that Mobileye will have available in the next-gen EyeQ5-based compute system beginning in early 2019.
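The compute figures quoted above can be tallied in a few lines of Python. This is a back-of-the-envelope sketch that assumes throughput simply adds up across chips, ignoring interconnect and software overhead; the constant names are hypothetical, and the values are the ones given in the article.

```python
# Figures quoted in the article.
EYEQ4_TOPS = 2.5    # TOP/s per EyeQ4 (8-bit deep networks)
EYEQ4_WATTS = 6.0   # power draw per EyeQ4
EYEQ4_COUNT = 4     # chips in the current test fleet
EYEQ5_TOPS = 24.0   # TOP/s per EyeQ5
EYEQ5_COUNT = 3     # chips planned for a production L4/L5 system

current_total = EYEQ4_TOPS * EYEQ4_COUNT   # 10.0 TOP/s
planned_total = EYEQ5_TOPS * EYEQ5_COUNT   # 72.0 TOP/s
per_chip_ratio = EYEQ5_TOPS / EYEQ4_TOPS   # 9.6x per chip
system_ratio = current_total / planned_total
current_power = EYEQ4_WATTS * EYEQ4_COUNT  # 24.0 W
tops_per_watt = EYEQ4_TOPS / EYEQ4_WATTS

print(f"current system: {current_total} TOP/s at {current_power} W")
print(f"planned system: {planned_total} TOP/s")
print(f"EyeQ5 vs. EyeQ4, per chip: {per_chip_ratio:.1f}x")
print(f"current / planned system: {system_ratio:.2f}")
```

On these raw totals, the per-chip ratio of 9.6 matches the "roughly 10 times" figure, while the system-level ratio of 10 to 72 TOP/s comes out nearer one-seventh; the "one-tenth" figure may reflect rounding or a different way of counting.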