Ultra-sensitive Camera Sensor May Eliminate Flash

Kodak said on Thursday it has developed digital camera technology that nearly eliminates the need for flash photography.

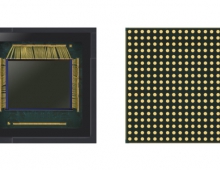

The world's biggest maker of photographic film said its proprietary sensor technology significantly increases sensitivity to light. Image sensors act as a digital camera's eyes, converting light into an electric charge to begin the capture process.

Kodak said the new technology advances an existing Kodak standard in digital imaging. Today, the design of almost all color image sensors is based on the "Bayer Pattern," an arrangement of red, green, and blue pixels first developed by Kodak scientist Bryce Bayer in 1976.

In this design, half of the pixels on the sensor are used to collect green light, with the remaining pixels split evenly between sensitivity to red and blue light.
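The proportions described above can be checked with a small sketch. It assumes the common "RGGB" tile ordering (red and green on even rows, green and blue on odd rows). The article does not specify an ordering, and the function names `bayer_color` and `color_fractions` are purely illustrative.

```python
def bayer_color(row, col):
    """Return the filter color at (row, col), assuming an RGGB 2x2 tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def color_fractions(height, width):
    """Count how much of a height x width sensor each color filter covers."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[bayer_color(r, c)] += 1
    total = height * width
    return {color: n / total for color, n in counts.items()}


# A 4x4 patch: half the pixels are green, a quarter each are red and blue.
fractions = color_fractions(4, 4)
print(fractions)
```

Any sensor with even dimensions gives the same split, since the 2x2 tile simply repeats.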

After exposure, software reconstructs a full color signal for each pixel in the final image. Kodak's new proprietary technology adds "clear" pixels to the red, green, and blue elements that form the image sensor array, collecting a higher proportion of the light striking the sensor.
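To illustrate the reconstruction step for a conventional Bayer sensor, here is a deliberately simple sketch that fills in each pixel's missing colors by averaging same-colored samples in its 3x3 neighborhood. This is not Kodak's algorithm, and it does not model the new "clear" pixels, whose layout the article does not describe. The RGGB ordering and the names `bayer_color` and `demosaic` are assumptions made for the example.

```python
def bayer_color(row, col):
    """Return the filter color at (row, col), assuming an RGGB 2x2 tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(raw):
    """Rebuild an (R, G, B) tuple per pixel from a single-channel mosaic.

    raw is a list of rows of numbers, at least 2x2 in size. Each output
    channel is the mean of the samples of that color inside the 3x3
    window around the pixel (clipped at the image border).
    """
    h, w = len(raw), len(raw[0])
    out = []
    for r in range(h):
        out_row = []
        for c in range(w):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:
                        color = bayer_color(rr, cc)
                        sums[color] += raw[rr][cc]
                        counts[color] += 1
            out_row.append(tuple(sums[k] / counts[k] for k in "RGB"))
        out.append(out_row)
    return out


# A uniformly lit 4x4 mosaic should reconstruct to a uniform gray image.
flat = [[100] * 4 for _ in range(4)]
rgb = demosaic(flat)
print(rgb[0][0])
```

Production cameras use more sophisticated interpolation to avoid color fringing at edges; the averaging here only shows the basic idea of borrowing color information from neighboring pixels.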

Manufacturing customers interested in the design will likely get a chance to sample it in early 2008, although Kodak is not sure when devices using the technology will reach stores. The technology could be used first in devices such as cell phones and eventually in products made for industrial and scientific imaging.
