AMD To Replace GPU Computing With Heterogeneous Computing

AMD will support the specifications describing a uniform memory access technology in its upcoming Kaveri processor, which is set to ship before the end of the year.

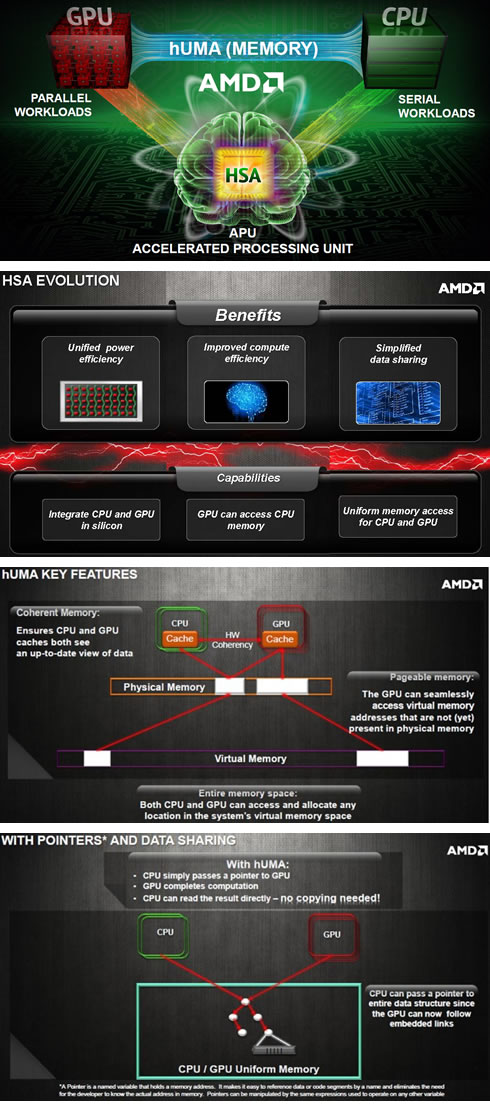

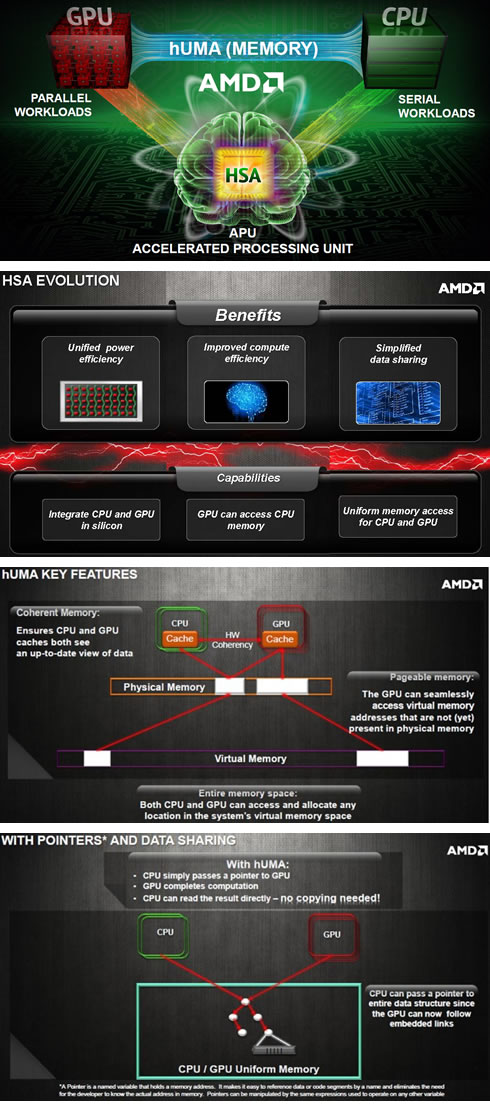

The Heterogeneous System Architecture (HSA) group is defining specifications for SoCs that mix CPUs, GPUs and potentially other cores. One of its main goals is defining a shared memory space for chip designers and software developers.

To that end, AMD held a press conference last week to discuss what it calls "heterogeneous Uniform Memory Access" (hUMA).

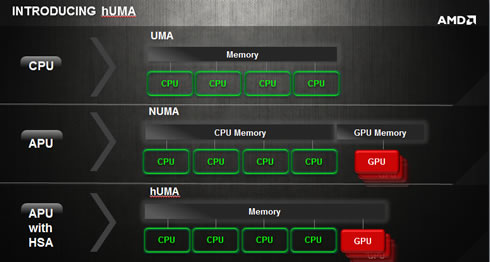

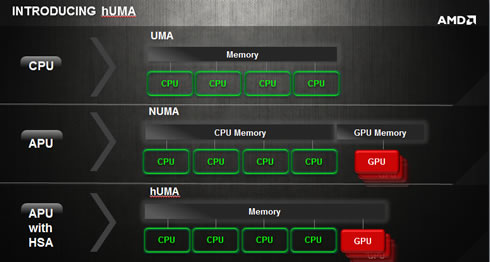

Breaking down bottlenecks in how the GPU accesses memory is important to the future of programming because it allows applications to move each task efficiently to the best-suited processing element. Heterogeneous Uniform Memory Access, or hUMA, is a shared memory architecture used in APUs (Accelerated Processing Units). In a hUMA architecture, the CPU and GPU inside the APU have full access to the entire system memory. hUMA's main features include:

- Access to entire system memory space: CPU and GPU processes can dynamically allocate memory from the entire memory space

- Pageable Memory: The GPU can take page faults and is no longer restricted to page-locked memory

- Bi-directional coherent memory: Any updates made by one processing element will be seen by all other processing elements - GPU or CPU.

Typically in GPGPU chips, whenever a CPU program wants to do some computation on the GPU, it has to copy all the data from the CPU's memory into the GPU's memory. When the GPU computation is finished, all the data has to be copied back. This wastes time and makes it difficult to mix and match code that runs on the CPU and code that runs on the GPU.
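The copy-in, compute, copy-out pattern described above can be sketched in plain Python. This is a toy, CPU-only simulation and not real GPU code: the `DiscreteGpuModel` class, its methods and the `double_on_gpu` helper are hypothetical names invented for illustration. The sketch only counts how many bytes cross the boundary between "host" and "device" memory.

```python
from array import array


class DiscreteGpuModel:
    """Hypothetical stand-in for a GPU that owns a private memory pool."""

    def __init__(self):
        self.device_memory = {}   # buffers that live "on the GPU"
        self.bytes_copied = 0     # traffic between host and device

    def upload(self, name, host_data):
        # Host -> device: the GPU cannot see host memory, so duplicate it.
        device_copy = array(host_data.typecode, host_data)
        self.device_memory[name] = device_copy
        self.bytes_copied += len(device_copy) * device_copy.itemsize

    def run_kernel(self, name, fn):
        # The "kernel" only touches the device-side copy.
        buf = self.device_memory[name]
        for i in range(len(buf)):
            buf[i] = fn(buf[i])

    def download(self, name, host_data):
        # Device -> host: copy the results back before the CPU can use them.
        buf = self.device_memory[name]
        host_data[:] = buf
        self.bytes_copied += len(buf) * buf.itemsize


def double_on_gpu(host_data):
    """Double every element 'on the GPU' and return the bytes copied."""
    gpu = DiscreteGpuModel()
    gpu.upload("data", host_data)
    gpu.run_kernel("data", lambda x: x * 2)
    gpu.download("data", host_data)
    return gpu.bytes_copied


host = array("f", [1.0, 2.0, 3.0, 4.0])
copied = double_on_gpu(host)
print(list(host), copied)
```

In this model every element crosses the boundary twice, so the copy traffic grows with the size of the data even when the computation itself is trivial.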

HSA's uniform memory access solves the problem, as it lets graphics cores share the same address space as CPU cores with bi-directional coherent access. It gives GPUs full access to both physical and virtual memory. The GPU can directly access CPU memory addresses, allowing it to both read and write data that the CPU is also reading and writing.
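As a rough illustration of the shared address space idea, the following toy Python sketch lets a "CPU" stage and a "GPU" stage work on the same buffer through two views, with no copies in between. It is a CPU-only analogy under stated assumptions, not HSA or hUMA code: `cpu_stage` and `gpu_stage` are hypothetical function names, and a `memoryview` merely stands in for two processing elements that address the same memory.

```python
from array import array


def cpu_stage(shared):
    # The "CPU" writes input values directly into the shared buffer.
    for i in range(len(shared)):
        shared[i] = float(i + 1)


def gpu_stage(shared):
    # The "GPU" updates the very same memory in place; nothing is copied.
    for i in range(len(shared)):
        shared[i] = shared[i] * 2.0


# One allocation, visible to both sides through separate views.
buffer = array("f", [0.0] * 4)
view_for_cpu = memoryview(buffer)
view_for_gpu = memoryview(buffer)

cpu_stage(view_for_cpu)
gpu_stage(view_for_gpu)
print(list(buffer))

# A later write through one view is immediately visible through the other,
# which loosely mirrors the bi-directional coherence described above.
view_for_cpu[0] = 10.0
print(view_for_gpu[0])
```

Because both views refer to one allocation, the data stays where it is and only the work moves between the two stages.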

AMD says the approach will simplify work for application programmers, allowing them to offer new experiences such as visually rich, intuitive, human-like interactivity. The company also suggests the techniques could boost performance and power efficiency.

Through HSA, AMD wants to enable mainstream languages and programming models and bring heterogeneous compute to the broad development community. The HSA group will provide support for its approach in C++, Java and Python.

The first processor to come to market with HSA hUMA is codenamed Kaveri. It will combine 2-3 compute units using AMD's Steamroller cores with a GPU. The GPU will have full access to system memory.

The upcoming PS4 is also expected to be powered by what is essentially an HSA system, as its CPU and GPU will have full access to all of the system's memory.
