New User Interface Technology Converts Entire Rooms into Digital Spaces

Japanese researchers will commence field trials of user interface (UI) technology developed by Fujitsu Laboratories that converts an entire room into a digital space, and of a co-creation support application developed by Fujitsu Social Science Laboratory. The trials will begin in August at the HAB-YU platform, a venue developed by Fujitsu Design to facilitate new value-creation initiatives.

The new space-digitalizing UI technology liberates people from the small screens of smart devices, tablets, and PCs by projecting a virtual window system over large shared surfaces, such as walls and tables, letting people display and share information with simple operations. For example, participants in a workshop can connect their smart devices to the large-format displays in the meeting space and display the contents of their smart-device screens on walls and tables. Operations that participants perform on the window system are transmitted to the appropriate devices, so participants can share files between smart devices with intuitive operations on walls and tables.

With the co-creation support application, which implements this new UI technology, materials that people bring into a space can be displayed and shared in a large format, so that information can be easily organized when people are brainstorming or collaborating.

Fujitsu Laboratories, Fujitsu Design, and Fujitsu Social Science Laboratory are deploying the technology and application in workshops held at the HAB-YU platform in field trials to validate their effectiveness in supporting the creation of new ideas. The three companies seek to transform workstyles using ICT for the creation of knowledge.

How it works

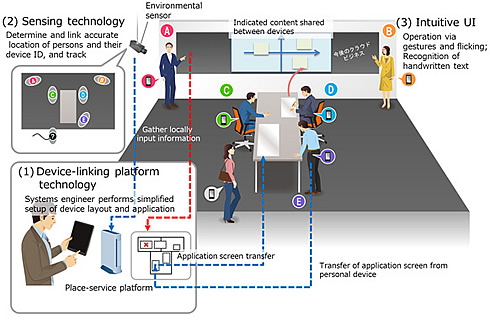

The space-digitalizing technology automatically connects hand-held smart devices and the display hardware installed in the space, and expands the UI throughout the entire space. Multiple devices, each equipped with a projector and camera, are installed in the space, and collectively they cover the walls and tabletops as a single window system. As a result, smartphone screen content from multiple people can be displayed within the same space.

Merely by registering simplified layout data on the server of the place-service platform, multiple display devices in the space can be connected and used as a single large-format display. The technology not only enables the use of contiguous spaces, it also displays the screen contents of smart devices brought into the space and supports operations on them. Technology was also developed that converts information that participants point at on walls and tabletops into input data for their smart devices.
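To make the idea of layout data concrete, the following is a minimal sketch of how several display units might be treated as one large surface. The article does not describe the actual format of the layout data, so the `Display` record, the `LAYOUT` list, and the `split_window` function are all hypothetical names and assumptions chosen for illustration. Each unit is assumed to cover an axis-aligned rectangle in a shared pixel coordinate system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Display:
    """One projector-camera unit and the rectangle it covers (assumed format)."""
    name: str
    x: int       # left edge in shared-space pixels
    y: int       # top edge in shared-space pixels
    width: int
    height: int


# Hypothetical layout data: two wall units mounted side by side.
LAYOUT = [
    Display("wall-left", 0, 0, 1920, 1080),
    Display("wall-right", 1920, 0, 1920, 1080),
]


def split_window(layout, x, y, width, height):
    """Map a window in shared coordinates to the part each display draws.

    Returns {display name: (local_x, local_y, width, height)} for every
    display that the window overlaps.
    """
    parts = {}
    for d in layout:
        # Intersect the window rectangle with this display's rectangle.
        left = max(x, d.x)
        top = max(y, d.y)
        right = min(x + width, d.x + d.width)
        bottom = min(y + height, d.y + d.height)
        if left < right and top < bottom:
            # Convert the overlap to the display's own local coordinates.
            parts[d.name] = (left - d.x, top - d.y, right - left, bottom - top)
    return parts


# A window that straddles the seam between the two units.
parts = split_window(LAYOUT, 1500, 100, 800, 600)
```

In this sketch, a window placed across the seam is split into one piece per unit, which is one simple way a set of separate projectors could behave as a single window system.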

Fujitsu Laboratories developed sensing technology that links environmental sensors in the space, including cameras, with the sensors built into smart devices to simultaneously track the location of people and the IDs of the smart devices they are carrying. Environmental sensors can accurately track location but not device IDs, while inertial sensors in smart devices can determine device IDs but not location. Combining the two makes it possible to use people's movements, including walking and stopping, to sense both location and ID. In turn, as each smart device and its owner are identified, it becomes possible to share the screen of a specific user's smart device on the big display.
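The matching idea described above can be illustrated with a small sketch. This is not Fujitsu's algorithm, which the article does not detail. It assumes that both the camera tracks and the device inertial sensors have already been reduced to time-aligned sequences of motion states (`"W"` for walking, `"S"` for stopped), and it simply pairs tracks with devices so that the states agree as often as possible. The function names and the sample data are hypothetical.

```python
from itertools import permutations


def agreement(track_states, device_states):
    """Fraction of time steps where both sources report the same motion state."""
    pairs = list(zip(track_states, device_states))
    if not pairs:
        return 0.0
    return sum(a == b for a, b in pairs) / len(pairs)


def match_devices(tracks, devices):
    """Assign a device ID to each camera track by maximizing total agreement.

    Brute force over permutations, so only suitable for a handful of people.
    Assumes there are at least as many devices as tracks.
    """
    track_ids = list(tracks)
    best, best_score = {}, -1.0
    for perm in permutations(devices, len(track_ids)):
        score = sum(
            agreement(tracks[t], devices[d]) for t, d in zip(track_ids, perm)
        )
        if score > best_score:
            best, best_score = dict(zip(track_ids, perm)), score
    return best


# Camera tracks know where each person is, but not which device they carry.
tracks = {"person-1": "WWSSW", "person-2": "SSWWS"}
# Devices know their own ID and motion state, but not their location.
devices = {"phone-A": "SSWWS", "phone-B": "WWSSW"}

assignment = match_devices(tracks, devices)
```

Here the person who walks, stops, and walks again is paired with the phone whose inertial data shows the same pattern, which ties a known location to a known device ID.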

Fujitsu Laboratories also developed a UI that links to people's movements. Using simple, pre-programmed gestures, a participant can transfer the screen contents of their smart device to the wall in front or launch the application. The UI also uses handwritten text to promote the generation of ideas on large-screen displays, with operations for handwriting notes on content projected on the wall, selecting handwritten notes and having them recognized as text, and displaying the recognized text at the size of a sticky note.