Intel Advances Artificial Intelligence With Nervana Neural Network Processor

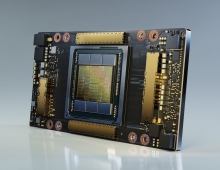

Intel CEO Brian M. Krzanich today spoke at the WSJDLive global technology conference about cognitive and artificial intelligence (AI) technology, and announced Intel's silicon for neural network processing, the Intel Nervana Neural Network Processor (NNP).

Coming before the end of this year, the Intel Nervana NNP (formerly known as "Lake Crest") promises to revolutionize AI computing across myriad industries. Intel says that by using Intel Nervana technology, companies will be able to develop new classes of AI applications that maximize the amount of data processed and enable their customers to find greater insights. Examples include earlier diagnosis in healthcare, more efficient targeted advertising for social media, accelerated learning in autonomous vehicles, and better weather predictions.

Matrix multiplication and convolution are two of the most important primitives at the heart of Deep Learning. These computations differ from general-purpose workloads in that the operations and data movements are largely known a priori. For this reason, the Intel Nervana NNP does not have a standard cache hierarchy, and on-chip memory is managed directly by software. Better memory management enables the chip to achieve high utilization of the massive amount of compute on each die, which translates to faster training times for Deep Learning models.
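To illustrate why a known-in-advance access pattern makes software-managed memory practical, here is a minimal sketch of a tiled matrix multiply in plain Python. It is not Intel's implementation: the function name `tiled_matmul`, the tile size, and the idea of counting explicit tile copies are assumptions made for illustration. The point is that the sequence of tile loads depends only on the matrix shapes, so it can be planned before any data arrives.

```python
def tiled_matmul(a, b, tile=2):
    """Multiply a (n x k) by b (k x m) tile by tile.

    Each tile is copied explicitly into a small local buffer, standing in
    for a software-managed scratchpad (hypothetical model, not real hardware).
    Returns the product and the number of tile copies performed.
    """
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    loads = 0
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # Explicit copies: the schedule is fixed by the shapes alone.
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                loads += 2
                # Accumulate the partial product of the two tiles into c.
                for i, a_row in enumerate(a_tile):
                    for j in range(len(b_tile[0])):
                        c[i0 + i][j0 + j] += sum(
                            a_row[kk] * b_tile[kk][j]
                            for kk in range(len(b_tile))
                        )
    return c, loads


product, tile_loads = tiled_matmul(
    [[1, 2, 3], [4, 5, 6]],
    [[7, 8], [9, 10], [11, 12]],
)
```

Because nothing in the loop nest depends on the data values, a compiler or runtime could in principle decide every transfer ahead of time instead of relying on a cache to guess.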

Designed with high-speed on- and off-chip interconnects, the Intel Nervana NNP enables massive bi-directional data transfer. A stated design goal was to achieve true model parallelism, where neural network parameters are distributed across multiple chips. This makes multiple chips act as one large virtual chip that can accommodate larger models.
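The idea of model parallelism can be sketched in a few lines of Python. This is a simplified illustration, not a description of how the NNP partitions work: the helper names (`shard_columns`, `model_parallel_matvec`) and the column-wise split are assumptions. Each simulated "chip" holds only a slice of a layer's weight matrix, computes its slice of the output, and the slices are concatenated.

```python
import math


def matvec(x, w_cols):
    """Multiply row vector x by a matrix stored as a list of columns."""
    return [sum(xi * wi for xi, wi in zip(x, col)) for col in w_cols]


def shard_columns(w_cols, num_chips):
    """Split the columns into at most num_chips contiguous shards."""
    per_chip = math.ceil(len(w_cols) / num_chips)
    return [w_cols[i:i + per_chip] for i in range(0, len(w_cols), per_chip)]


def model_parallel_matvec(x, w_cols, num_chips):
    """Compute x @ W with W's columns spread across simulated chips."""
    shards = shard_columns(w_cols, num_chips)
    # Each shard is processed independently, as if on its own chip.
    partials = [matvec(x, shard) for shard in shards]
    # Gathering the partial outputs stands in for the interconnect transfer.
    return [y for part in partials for y in part]


x = [1.0, 2.0]
w_cols = [[1.0, 0.0], [0.0, 1.0], [2.0, 3.0], [4.0, 5.0]]
single_chip = matvec(x, w_cols)
two_chips = model_parallel_matvec(x, w_cols, num_chips=2)
```

The sharded result matches the single-chip result, while no single chip has to store the whole weight matrix. In a real system, the gather step is where interconnect bandwidth matters.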

Neural network computations on a single chip are largely constrained by power and memory bandwidth. To achieve higher degrees of throughput for neural network workloads, Intel has invented a new numeric format called Flexpoint. Flexpoint allows scalar computations to be implemented as fixed-point multiplications and additions while allowing for large dynamic range using a shared exponent. Since each circuit is smaller, this results in a vast increase in parallelism on a die while simultaneously decreasing power per computation.
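The shared-exponent idea can be illustrated with a short Python sketch. This is not the Flexpoint specification: the 16-bit mantissa width, the rule for choosing the exponent from the largest magnitude, and the function names are all assumptions made for illustration. A block of values is stored as integers plus one exponent, so a dot product needs only integer multiplies and adds, with a single scaling at the end.

```python
import math

MANTISSA_BITS = 16  # assumed width, chosen only for this illustration


def encode(values, mantissa_bits=MANTISSA_BITS):
    """Quantize floats to signed integers that share one exponent."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0] * len(values), 0
    limit = 2 ** (mantissa_bits - 1) - 1  # largest signed mantissa
    # Smallest exponent for which the largest value still fits.
    exponent = math.ceil(math.log2(max_abs / limit))
    scale = 2.0 ** exponent
    mantissas = [
        max(-limit, min(limit, round(v / scale))) for v in values
    ]
    return mantissas, exponent


def decode(mantissas, exponent):
    """Recover approximate floats from mantissas and the shared exponent."""
    return [m * 2.0 ** exponent for m in mantissas]


def dot(a_mant, a_exp, b_mant, b_exp):
    """Integer multiply-accumulate; the exponents are applied once at the end."""
    acc = sum(x * y for x, y in zip(a_mant, b_mant))
    return acc * 2.0 ** (a_exp + b_exp)


a_mant, a_exp = encode([0.5, -1.25, 3.0])
b_mant, b_exp = encode([2.0, 1.0, 0.5])
result = dot(a_mant, a_exp, b_mant, b_exp)
```

The trade-off in this sketch is that values much smaller than the largest one in the block lose precision, since they all share the same scale.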

Intel says it has multiple generations of Intel Nervana NNP products in the pipeline that will deliver higher performance and enable new levels of scalability for AI models. The company's goal, set last year, is to achieve 100 times greater AI performance by 2020.

Intel is also investing in frontier technologies that will be needed for other large-scale computing applications of the future. Among these technologies, the company is achieving research breakthroughs in neuromorphic and quantum computing.

Neuromorphic chips are inspired by the human brain and are intended to help computers make decisions based on patterns and associations. Intel recently announced a self-learning neuromorphic test chip, which uses data to learn and make inferences, gets smarter over time, and does not need to be trained in the traditional way.

Quantum computers have the potential to harness the unique capabilities of a large number of qubits (quantum bits), as opposed to binary bits, to perform exponentially more calculations in parallel. This will enable quantum computers to tackle problems conventional computers can't handle, such as simulating nature to advance research in chemistry, materials science and molecular modeling, for example creating a room-temperature superconductor or discovering new drugs.

Last week, Intel announced a 17-qubit superconducting test chip delivered to QuTech, its quantum research partner in the Netherlands. The delivery of this chip demonstrates the fast progress Intel and QuTech are making in researching and developing a working quantum computing system. In fact, Intel expects to deliver a 49-qubit chip by the end of this year.
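To give a rough sense of scale for the qubit counts mentioned above, the following Python sketch estimates the memory a conventional computer would need to hold the full state vector of an n-qubit system. It assumes one complex amplitude per basis state stored as two 64-bit floats (16 bytes), which is a common simulation choice, not anything specified by Intel or QuTech.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: complex number as two 64-bit floats


def state_vector_bytes(num_qubits):
    """Bytes needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


small = state_vector_bytes(17)  # 2 MiB
large = state_vector_bytes(49)  # 2**53 bytes, i.e. 8 PiB
```

Under this assumption, a 17-qubit state fits comfortably in a laptop's memory, while a 49-qubit state would need about 8 pebibytes, which is why adding qubits quickly moves a system beyond straightforward classical simulation.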