Intel Launches Artificial Intelligence Chip Springhill

Intel Corp on Tuesday launched its latest processor, its first built for artificial intelligence (AI) workloads and designed for large computing centers.

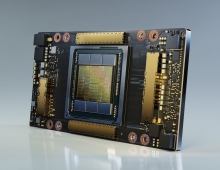

The chip, developed at Intel's facility in Haifa, Israel, is known as Nervana NNP-I or Springhill. It is based on a 10 nanometer Ice Lake processor, which Intel said will allow it to handle heavy workloads using minimal amounts of energy.

Intel said its first AI product comes after it had invested more than $120 million in three AI startups in Israel.

Intel engineers also presented technical details on hybrid chip packaging technology, Intel Optane DC persistent memory and chiplet technology for optical I/O.

Turning data into information and then into knowledge requires hardware architectures and complementary packaging, memory, storage and interconnect technologies that can evolve and support emerging and increasingly complex use cases and AI techniques. Dedicated accelerators like the Intel Nervana NNPs are built from the ground up, with a focus on AI to provide the right intelligence at the right time.

What Intel Presented at Hot Chips 2019:

- Intel Nervana NNP-T: Built from the ground up to train deep learning models at scale: Intel Nervana NNP-T (Neural Network Processor) is built to prioritize two key real-world considerations: training a network as fast as possible and doing it within a given power budget. Intel says this deep learning training processor strikes a balance among computing, communication and memory. While Intel Xeon Scalable processors bring AI-specific instructions and provide a foundation for AI, the NNP-T is architected from scratch, building in the features needed to handle large models without the overhead of supporting legacy technology. To account for future deep learning needs, the Intel Nervana NNP-T is built with flexibility and programmability so it can be tailored to accelerate a wide variety of workloads, both those that exist today and those that will emerge.

Spring Crest (NNP-T) SoC

- PCIe Gen 4 x16 EP

- 4x HBM2

- 64 lanes SerDes

- 24 Tensor Processors

- Up to 119 TOPS

- 60 MB on-chip distributed memory

- Management CPU and Interfaces

- 2.5D packaging

Implementation

- TSMC CLN16FF+

- 680 mm² die, 1200 mm² interposer

- 27 billion transistors

- 60 mm x 60 mm, 6-2-6, 3325-pin BGA package

- 4x8GB HBM2-2400 memory

- Up to 1.1 GHz core frequency

- 64 lanes SerDes HSIO, up to 3.58 Tbps aggregate bandwidth

- PCIe Gen 4 x16, SPI, I2C, GPIOs

- PCIe & OAM form factors

- Air-cooled, 150-250 W typical workload power
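A few derived figures follow from the Spring Crest list above by simple arithmetic. The sketch below is illustrative and is not Intel's own accounting: the constant and function names are made up for this example, the 1024-bit per-stack bus width is the standard HBM2 interface width rather than a figure from the list, and 680 mm² is taken to be the die area.

```python
# Back-of-the-envelope figures derived from the Spring Crest (NNP-T) spec list.
# All names here are hypothetical; values come from the list unless noted.

HBM_STACKS = 4
HBM_STACK_GB = 8
HBM_PIN_RATE_GBPS = 2.4     # HBM2-2400: 2.4 Gb/s per pin
HBM_BUS_WIDTH_BITS = 1024   # assumption: standard HBM2 interface width per stack

SERDES_LANES = 64
SERDES_AGGREGATE_TBPS = 3.58

TENSOR_PROCESSORS = 24
PEAK_TOPS = 119

TRANSISTORS = 27e9
DIE_AREA_MM2 = 680          # assumption: 680 mm^2 refers to the die, not the interposer


def hbm_capacity_gb() -> int:
    """Total HBM2 capacity across all stacks, in GB."""
    return HBM_STACKS * HBM_STACK_GB


def hbm_bandwidth_gbs() -> float:
    """Peak HBM2 bandwidth in GB/s: stacks * pin rate * bus width / 8 bits per byte."""
    return HBM_STACKS * HBM_PIN_RATE_GBPS * HBM_BUS_WIDTH_BITS / 8


def serdes_per_lane_gbps() -> float:
    """Aggregate SerDes bandwidth divided evenly across the lanes, in Gb/s."""
    return SERDES_AGGREGATE_TBPS * 1000 / SERDES_LANES


def tops_per_tensor_processor() -> float:
    """Peak TOPS divided evenly across the tensor processors."""
    return PEAK_TOPS / TENSOR_PROCESSORS


def transistor_density_m_per_mm2() -> float:
    """Transistor density in millions of transistors per mm^2."""
    return TRANSISTORS / DIE_AREA_MM2 / 1e6


if __name__ == "__main__":
    print(f"HBM2 capacity:        {hbm_capacity_gb()} GB")
    print(f"HBM2 peak bandwidth:  {hbm_bandwidth_gbs():.1f} GB/s")
    print(f"SerDes per lane:      {serdes_per_lane_gbps():.1f} Gb/s")
    print(f"TOPS per processor:   {tops_per_tensor_processor():.2f}")
    print(f"Transistor density:   {transistor_density_m_per_mm2():.1f} M/mm^2")
```

Under these assumptions the four stacks give 32 GB of capacity and roughly 1.2 TB/s of peak memory bandwidth, and the aggregate SerDes figure works out to about 56 Gbps per lane.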

- Intel Nervana NNP-I: High-performing deep learning inference for major data center workloads: Intel Nervana NNP-I is purpose-built for inference and is designed to accelerate deep learning deployment at scale, leveraging Intel’s 10nm process technology with Ice Lake cores to offer high performance per watt across all major data center workloads. Additionally, the Intel Nervana NNP-I offers a high degree of programmability. As AI becomes pervasive across every workload, a dedicated inference accelerator that is easy to program, offers low latency and fast code porting, and supports all major deep learning frameworks allows companies to turn their data into actionable insights.

Features

- TOPS (INT8): 48-92 (SoC)

- TDP: 10-50 W

- TOPS/W: 2.0-4.8

- Inference Engines (ICE): 10-12 ICEs

- Total SRAM: 75MB

- DRAM BW: 68 GB/s
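The efficiency range in the Features list can be sanity-checked against the throughput and TDP ranges. The sketch below assumes, without confirmation from Intel, that the lowest throughput pairs with the lowest TDP and the highest with the highest; the function name and the pairing are illustrative only.

```python
# Rough check of the NNP-I efficiency range against its throughput and TDP ranges.
# The (TOPS, watts) pairings below are an assumption, not figures Intel published.

def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency as throughput (TOPS) divided by power (W)."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return tops / watts


# Assumed pairing: smallest configuration at the low end, largest at the high end.
low_power = tops_per_watt(48, 10)
high_power = tops_per_watt(92, 50)

if __name__ == "__main__":
    print(f"48 TOPS at 10 W: {low_power:.2f} TOPS/W")
    print(f"92 TOPS at 50 W: {high_power:.2f} TOPS/W")
```

With this pairing the low-power end reproduces the quoted 4.8 figure, while the high-power end comes out slightly below the quoted 2.0, which suggests the published endpoints may not correspond to exactly these combinations.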

- Lakefield: Hybrid cores in a three-dimensional package: Lakefield introduces a product with 3D stacking and IA hybrid computing architecture for a new class of mobile devices. Leveraging Intel’s latest 10nm process and Foveros packaging technology, Lakefield achieves a significant reduction in standby power, core area and package height over previous generations of technology. With high computing performance and ultra-low thermal design power, new thin form-factor devices, 2-in-1s and dual-display devices can operate always-on and always-connected at very low standby power.

- TeraPHY: An in-package optical I/O chiplet for high-bandwidth, low-power communication: Intel and Ayar Labs demonstrated the first integration of monolithic in-package optics (MIPO) with a high-performance system-on-chip (SoC). The Ayar Labs TeraPHY optical I/O chiplet is co-packaged with the Intel Stratix 10 FPGA using Intel Embedded Multi-die Interconnect Bridge (EMIB) technology, offering high-bandwidth, low-power data communication from the chip package with deterministic latency for distances up to 2 km. This collaboration will enable new approaches to architecting computing systems for the next phase of Moore’s Law by removing the traditional performance, power and cost bottlenecks in moving data.

- Intel Optane DC persistent memory: Architecture and performance: Intel Optane DC persistent memory, now shipping in volume, is the first product in an entirely new tier of the memory/storage hierarchy called persistent memory. Based on Intel 3D XPoint technology and packaged in a memory module form factor, it can deliver large capacity at near-memory speeds, with latency in nanoseconds, while also natively delivering the persistence of storage. Details of the two operational modes (memory mode and app direct mode), as well as performance examples, show how this new tier can support a complete re-architecting of the data supply subsystem to enable faster and new workloads.