NVIDIA at GTC 2019

NVIDIA founder and CEO Jensen Huang delivered the opening keynote address on Monday at the 10th annual GPU Technology Conference (GTC) in Silicon Valley.

Huang, speaking at the San Jose State University Events Center, talked about real-time ray tracing in games (including on older graphics cards), AI, the GeForce NOW cloud gaming service, new RTX servers, powerful workstations for data scientists and more.

Real-Time Ray Tracing Integrated into Unreal Engine, Unity and 1st-Party AAA Game Engines

NVIDIA announced several developments that reinforce NVIDIA GeForce GPUs as the core platform that allows game developers to add real-time ray tracing effects to games.

The announcements, which build on the central role Microsoft DirectX Ray Tracing (DXR) plays in the PC gaming ecosystem, include:

- Integration of real-time ray tracing into Unreal Engine and Unity game engines.

- Ray tracing support for GeForce GTX GPUs (GeForce GTX 1060 6GB or higher).

- The introduction of NVIDIA GameWorks RTX, a set of tools and rendering techniques that help game developers add ray tracing to games.

- New games and experiences that showcase real-time ray tracing such as Dragonhound, Quake II RTX and others.

Unreal Engine 4.22 is available in preview now, with final release details expected in Epic’s GDC keynote on Wednesday. Starting April 4, Unity will offer optimized, production-focused real-time ray tracing support through a custom experimental build available on GitHub, with full preview access for all users in the Unity 2019.03 release.

Other first-party AAA game engines adding real-time ray tracing support include DICE/EA’s Frostbite Engine, Remedy Entertainment’s Northlight Engine and engines from Crystal Dynamics, Kingsoft, Netease and others.

NVIDIA GeForce GTX GPUs powered by Pascal and Turing architectures (GeForce GTX 1060 6GB GPUs or higher) will be able to take advantage of ray tracing-supported games via a driver expected in April. The new driver will enable tens of millions of GPUs for games that support real-time ray tracing, accelerating the growth of the technology and giving game developers a massive installed base.

With this driver, GeForce GTX GPUs will execute ray-traced effects on their shader cores. Game performance will vary with the ray-traced effects used and the number of rays cast in the game, as well as with the GPU model and game resolution. Games built on the Microsoft DXR and Vulkan APIs are supported.
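Because these effects run on general-purpose shader cores, the ray workload scales directly with resolution, frame rate and rays per pixel. A back-of-the-envelope sketch, assuming a uniform rays-per-pixel budget (real games vary this per effect, and the values below are illustrative, not NVIDIA figures):

```python
def rays_per_second(width: int, height: int, rays_per_pixel: float, fps: int) -> float:
    """Total rays traced per second, assuming every pixel casts the same number of rays."""
    return width * height * rays_per_pixel * fps


# Compare 1080p and 4K at 60 fps with one ray per pixel (illustrative settings only).
for name, (w, h) in {"1080p": (1920, 1080), "4K": (3840, 2160)}.items():
    total = rays_per_second(w, h, rays_per_pixel=1, fps=60)
    print(f"{name}: {total / 1e6:.1f} million rays/s")
```

Under this simple model, moving from 1080p to 4K quadruples the ray count at the same settings, which is one reason resolution weighs so heavily on shader-core ray tracing performance.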

However, GeForce RTX GPUs, which have dedicated ray tracing cores built directly into the GPU, deliver the ultimate ray tracing experience. NVIDIA says that they provide up to 2-3x faster ray tracing performance with a more visually immersive gaming environment than GPUs without dedicated ray tracing cores.

NVIDIA GameWorks RTX is a set of tools that help developers implement real-time ray-traced effects in games. GameWorks RTX is available to the developer community in open source form under the GameWorks license and includes plugins for Unreal Engine 4.22 and Unity’s 2019.03 preview release.

Included in GameWorks RTX:

- RTX Denoiser SDK – a library that enables real-time ray tracing by providing denoising techniques to lower the required ray count and samples per pixel. It includes algorithms for ray traced area light shadows, glossy reflections, ambient occlusion and diffuse global illumination.

- Nsight for RT – a standalone developer tool that saves developers time by helping to debug and profile graphics applications built with DXR and other supported APIs.
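Denoising helps because ray-traced lighting is a Monte Carlo estimate: each pixel's error shrinks only slowly as samples per pixel grow. The toy sketch below illustrates the idea under loudly stated assumptions: uniform random noise stands in for real lighting variance, and a plain box filter stands in for the RTX Denoiser's far more sophisticated techniques, which are not shown here. All function names are hypothetical.

```python
import random


def render_row(true_row, spp, rng):
    """Toy Monte Carlo 'render': each pixel averages `spp` noisy samples of its true value."""
    out = []
    for value in true_row:
        samples = [value + rng.uniform(-0.5, 0.5) for _ in range(spp)]
        out.append(sum(samples) / spp)
    return out


def box_denoise(row, radius=2):
    """Toy spatial denoiser: replace each pixel with the mean of its neighbourhood."""
    n = len(row)
    result = []
    for i in range(n):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = row[lo:hi]
        result.append(sum(window) / len(window))
    return result


def mse(a, b):
    """Mean squared error between two equally long rows."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


rng = random.Random(0)
truth = [i / 255 for i in range(256)]  # a smooth gradient standing in for one image row
noisy = render_row(truth, spp=1, rng=rng)
print("1 spp raw      :", round(mse(noisy, truth), 4))
print("1 spp denoised :", round(mse(box_denoise(noisy), truth), 4))
print("16 spp raw     :", round(mse(render_row(truth, spp=16, rng=rng), truth), 4))
```

Averaging neighbouring pixels trades a little spatial blur for much lower variance, so a denoised low-sample image can rival a noticeably higher sample count in this toy setting.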

GDC also marks the debut of a variety of ray-traced experiences and games, including:

- Control — a new demo video from Remedy Entertainment featuring ray-traced global illumination, reflections and shadows.

- Dragonhound — Nexon’s upcoming online action RPG monster battle game that will feature real-time ray-traced reflections and shadows.

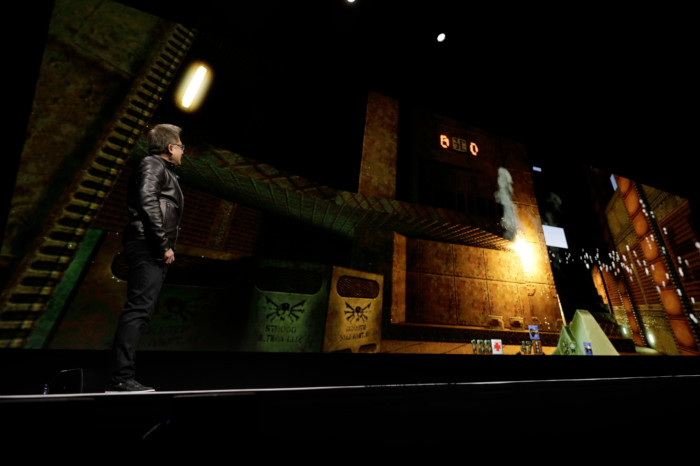

- Quake II RTX — uses path tracing, a unified lighting algorithm, to ray trace all of the lighting in the game. The classic Quake II game was modified by the open source community to support ray tracing, and NVIDIA’s engineering team further enhanced it with improved graphics and physics. Quake II RTX is the first ray-traced game using NVIDIA VKRay, a Vulkan extension that allows any developer using Vulkan to add ray-traced effects to their games.
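Path tracing is "unified" because direct and bounced light are gathered by the same random walk: every bounce along a path adds whatever light arrives there. The sketch below shows that core estimator in a toy "furnace" scene where every surface emits the same light and reflects a fixed fraction of it; it is an assumption-laden illustration, not Quake II RTX's renderer or the VKRay API, and the function names are hypothetical.

```python
import random


def trace_path(emission: float, albedo: float, rng: random.Random) -> float:
    """Follow one path through a closed furnace where every surface emits `emission`
    and reflects a fraction `albedo` of incoming light. Russian roulette with survival
    probability `albedo` keeps the estimate unbiased without per-bounce weighting."""
    radiance = 0.0
    while True:
        radiance += emission          # light gathered at this bounce (direct or indirect alike)
        if rng.random() >= albedo:    # path terminates with probability 1 - albedo
            return radiance


def estimate(emission: float, albedo: float, paths: int, seed: int = 0) -> float:
    """Average many independent paths to estimate the radiance seen by one pixel."""
    rng = random.Random(seed)
    return sum(trace_path(emission, albedo, rng) for _ in range(paths)) / paths


# Exact answer for this scene: emission * (1 + a + a^2 + ...) = emission / (1 - albedo)
print(estimate(1.0, 0.5, 100_000), "vs exact", 1.0 / (1 - 0.5))
```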

GeForce NOW Cloud Gaming Service for PC Gamers

NVIDIA also talked about the GeForce NOW cloud gaming service, which offers a click-to-play interface and an open ecosystem, allowing PC games to be played anywhere, anytime on any Mac or PC. It is a gaming PC in the cloud for the 1 billion computers that aren’t game ready.

The service works by upgrading your local, underpowered or incompatible hardware — whether it’s a PC with integrated graphics or a Mac that doesn’t have access to all the latest games — into a powerful GeForce gaming PC in the cloud.

GeForce NOW is an open platform, allowing users to “bring their own games” from the top stores. NVIDIA says there are already more than 500 games supported on GeForce NOW, with more added every week.

In addition to the 15 existing GeForce NOW data centers in North America and western Europe, Softbank and LG Uplus will be among the first to deploy RTX cloud gaming servers in Japan and Korea, respectively, later this year.

NVIDIA announced new RTX blade servers that are optimized to stream a high-performance PC gaming experience from cloud data centers, including GeForce NOW. NVIDIA RTX Servers also include fully optimized software stacks available for OptiX RTX rendering, VR and AR, and professional visualization applications.

Huang also unveiled the latest RTX Server configuration: 1,280 Turing GPUs on 32 RTX blade servers, offering a significant leap in cloud-rendered density, efficiency and scalability.

Each RTX blade server packs 40 GPUs into an 8U space and can be shared by multiple users with NVIDIA GRID vGaming or container software. Mellanox technology is used as the backbone storage and networking interconnect to deliver the apps and updates instantly to thousands of concurrent users.
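The configuration figures can be cross-checked with simple arithmetic, assuming the 1,280-GPU configuration consists solely of the 8U, 40-GPU blades described here (networking and storage chassis are not counted):

```latex
\begin{aligned}
\text{GPUs} &= 32\ \text{blades} \times 40\ \tfrac{\text{GPUs}}{\text{blade}} = 1{,}280 \\
\text{rack space} &= 32\ \text{blades} \times 8\,\text{U} = 256\,\text{U} \\
\text{density} &= \frac{40\ \text{GPUs}}{8\,\text{U}} = 5\ \text{GPUs per U}
\end{aligned}
```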

NVIDIA has optimized RTX servers for use by cloud gaming operators, enabling them to render and stream games at the performance levels of GeForce RTX 2080 GPUs to any client device.

With low-latency access to RTX Servers at the network edge, cloud-rendered AR and VR applications become a reality. At GTC, NVIDIA is showcasing AR/VR demos running on cloud-based hardware, including an RTX Server-powered demo from Envrmnt, the XR arm of Verizon.

The company is also collaborating with AT&T and Ericsson to bring these experiences to life on mobile networks. At AT&T Foundry, NVIDIA used its CloudVR software to play an interactive VR game streamed from an RTX Server over a 5G radio link.

With RTX Servers on 5G Edge networks, users will have access to cloud gaming services like GeForce NOW and AR and VR applications on just about any device.

The NVIDIA RTX platform comes in 2U, 4U and 8U form factors and supports multiple NVIDIA GPU options from Quadro RTX GPUs and Quadro vDWS software for professional apps to NVIDIA GPUs with GRID vGaming software for cloud gaming and consumer AR/VR.

2U and 4U RTX servers are available from NVIDIA's OEM partners today. The new 8U RTX blade server will initially be available from NVIDIA in Q3.

CUDA-X AI SDK for GPU-Accelerated Data Science

Also introduced today at NVIDIA’s GPU Technology Conference, CUDA-X AI is an end-to-end platform for the acceleration of data science.

CUDA-X AI arrives as businesses turn to AI (deep learning, machine learning and data analytics) to make data more useful. All of these share a typical workflow: data processing, feature determination, training, verification and deployment.
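To make the five stages concrete, here is a CPU-only, standard-library sketch on toy data. Every function is a hypothetical placeholder; none of this is CUDA-X AI, cuDF or cuML code. In a GPU-accelerated stack, libraries such as those named in this article would take over stages like data processing and training.

```python
from statistics import mean


def process(raw):
    """Data processing: drop malformed records (any None field)."""
    return [(x, y) for x, y in raw if x is not None and y is not None]


def features(rows):
    """Feature determination: here, just the single numeric input x and target y."""
    return [x for x, _ in rows], [y for _, y in rows]


def train(xs, ys):
    """Training: ordinary least-squares fit of y = w * x + b."""
    mx, my = mean(xs), mean(ys)
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return w, my - w * mx


def verify(model, xs, ys):
    """Verification: mean absolute error on held-out data."""
    w, b = model
    return mean(abs(w * x + b - y) for x, y in zip(xs, ys))


def deploy(model):
    """Deployment: freeze the fitted parameters into a prediction function."""
    w, b = model
    return lambda x: w * x + b


raw = [(x, 2 * x + 1) for x in range(20)] + [(None, 3.0)]  # one malformed record
xs, ys = features(process(raw))
model = train(xs[:15], ys[:15])
print("holdout MAE:", verify(model, xs[15:], ys[15:]))
predict = deploy(model)
print("prediction for x=100:", predict(100))
```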

NVIDIA says that CUDA-X AI unlocks the flexibility of its Tensor Core GPUs to address this end-to-end AI pipeline. Made up of more than a dozen specialized acceleration libraries, it can speed up machine learning and data science workloads by as much as 50x.

CUDA-X AI is already accelerating data analysis with cuDF, deep learning primitives with cuDNN, machine learning algorithms with cuML and data processing with DALI, among others. It is integrated into major deep learning frameworks such as TensorFlow, PyTorch and MXNet.

CUDA-X AI acceleration libraries are freely available as individual downloads or as containerized software stacks from the NVIDIA NGC software hub.

They can be deployed in desktops, workstations, servers and on cloud computing platforms, including the new NVIDIA T4 servers.

Microsoft Azure Machine Learning (AML) service is the first major cloud platform to integrate RAPIDS, a key component of NVIDIA CUDA-X AI. With access to the RAPIDS open source suite of libraries, data scientists can do predictive analytics with unprecedented speed using NVIDIA GPUs on AML service.

RAPIDS on AML service comes in the form of a Jupyter Notebook that uses the AML service SDK to create a resource group, workspace, cluster and environment with the right configurations and libraries for running RAPIDS code. Template scripts are provided so users can experiment with different data sizes and numbers of GPUs, as well as set up a CPU baseline.

High-Performance Workstations for Data Scientists

NVIDIA has teamed with OEMs and system builders to deliver powerful new workstations designed to help data scientists, analysts and engineers make better business predictions faster.

NVIDIA-powered workstations for data science are based on a reference architecture made up of dual, high-end NVIDIA Quadro RTX GPUs and NVIDIA CUDA-X AI accelerated data science software, such as RAPIDS, TensorFlow, PyTorch and Caffe. CUDA-X AI is a collection of libraries that enable modern computing applications to benefit from NVIDIA’s GPU-accelerated computing platform.

Powered by the latest NVIDIA Turing GPU architecture and designed for enterprise deployment, dual Quadro RTX 8000 and 6000 GPUs deliver up to 260 teraflops of compute performance and 96GB of memory using NVIDIA NVLink interconnect technology.

CUDA-X AI includes cuDNN for accelerating deep learning primitives, cuML for accelerating machine learning algorithms, TensorRT for optimizing trained models for inference and over 15 other libraries. Together they work with NVIDIA Tensor Core GPUs to accelerate the end-to-end workflows for developing and deploying AI-based applications. CUDA-X AI can be integrated into deep learning frameworks, including TensorFlow, PyTorch and MXNet, and leading cloud platforms, including AWS, Microsoft Azure and Google Cloud.

NVIDIA-powered systems are available immediately from global workstation providers such as Dell, HP and Lenovo and regional system builders, including AMAX, APY, Azken Muga, BOXX, CADNetwork, Carri, Colfax, Delta, EXXACT, Microway, Scan, Sysgen and Thinkmate.

NVIDIA-Powered Enterprise Servers Optimized for Data Science

Mainstream servers optimized to run NVIDIA’s data science acceleration software are now available from seven systems manufacturers, including Cisco, Dell EMC, Fujitsu, Hewlett Packard Enterprise (HPE), Inspur, Lenovo and Sugon.

Featuring NVIDIA T4 GPUs and fine-tuned to run NVIDIA CUDA-X AI acceleration libraries, the servers provide businesses with a standard platform for data analytics and a wide range of other enterprise workloads.

Drawing only 70 watts of power and designed to fit into existing data center infrastructures, the T4 GPU can accelerate AI training and inference, machine learning, data analytics and virtual desktops.

Systems announced today by Cisco, Dell EMC, Fujitsu, HPE, Inspur, Lenovo and Sugon are NVIDIA NGC-Ready validated, a designation reserved for servers with demonstrated ability to excel in a full range of accelerated workloads.

All software tested as part of the NGC-Ready validation process is available from NVIDIA NGC, a repository of GPU-accelerated software, pre-trained AI models, model training for data analytics, machine learning, deep learning and high performance computing accelerated by CUDA-X AI.

NGC-Ready validated T4 servers announced today are:

- Cisco UCS C240 M5

- Dell EMC PowerEdge R740/R740xd

- Fujitsu PRIMERGY RX2540 M5

- HPE ProLiant DL380 Gen10

- Inspur NF5280M5

- Lenovo ThinkSystem SR670

- Sugon W760-G30

NVIDIA also today launched an enterprise support service exclusively for customers with NGC-Ready systems, including all NGC-Ready T4 systems as well as previously validated NVLink and Tesla V100-based servers and NVIDIA-powered workstations.

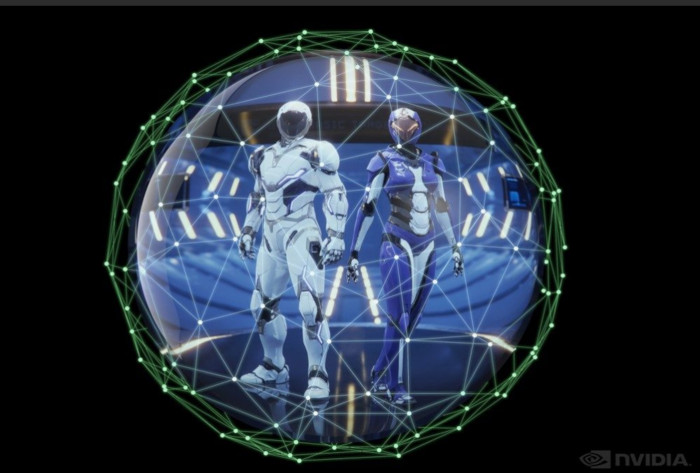

Omniverse - Open, Interactive 3D Design Collaboration Platform for Multi-Tool Workflows

NVIDIA also introduced Omniverse, an open collaboration platform to simplify studio workflows for real-time graphics.

Omniverse includes portals — two-way tunnels — that maintain live connections between applications such as Autodesk Maya, Adobe Photoshop and Epic Games’ Unreal Engine.

This new open collaboration platform streamlines 2D and 3D product pipelines across industries. It supports Pixar’s Universal Scene Description technology for exchanging information about modeling, shading, animation, lighting, visual effects and rendering across multiple applications. It also supports NVIDIA’s Material Definition Language, which allows artists to exchange information about surface materials across multiple tools.

With Omniverse, artists can see live updates made by other artists working in different applications, and see changes reflected in multiple tools at the same time. For example, an artist using Maya with a portal to Omniverse can collaborate with another artist using UE4, and both will see live updates of each other’s changes in their own application.
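The live-sync behaviour described above resembles a publish/subscribe pattern: each tool applies its own edits locally, then broadcasts them so every other connected tool updates its copy. The article does not describe Omniverse's actual protocol, so the sketch below is a generic, hypothetical illustration; `LiveHub`, `ToolPortal` and the path-like keys are invented names, not Omniverse or USD APIs.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class LiveHub:
    """Hypothetical central hub: tools subscribe to a shared stage and receive every change."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[str, dict], None]]] = defaultdict(list)

    def subscribe(self, stage: str, callback: Callable[[str, dict], None]) -> None:
        self._subscribers[stage].append(callback)

    def publish(self, stage: str, sender: str, change: dict) -> None:
        for callback in self._subscribers[stage]:
            callback(sender, change)


class ToolPortal:
    """Hypothetical two-way connection for one application, holding its local scene copy."""

    def __init__(self, name: str, hub: LiveHub, stage: str) -> None:
        self.name, self.hub, self.stage = name, hub, stage
        self.scene: dict = {}
        hub.subscribe(stage, self._on_change)

    def edit(self, change: dict) -> None:
        self.scene.update(change)                        # apply locally first
        self.hub.publish(self.stage, self.name, change)  # then share with every other tool

    def _on_change(self, sender: str, change: dict) -> None:
        if sender != self.name:                          # ignore the echo of our own edit
            self.scene.update(change)


hub = LiveHub()
maya = ToolPortal("Maya", hub, "shot_010")
ue4 = ToolPortal("UE4", hub, "shot_010")
maya.edit({"/World/Chair.translate": (0.0, 1.0, 0.0)})
ue4.edit({"/World/Lamp.intensity": 800})
print(maya.scene == ue4.scene)  # both tools now hold the same scene
```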

Artists will also be able to view updates made in real time through NVIDIA’s Omniverse Viewer, which gives users a live look at work being done in a wide variety of tools.

The Omniverse Viewer delivers the highest quality photorealistic images in real time by taking advantage of rasterization as well as support for NVIDIA RTX RT Cores, CUDA cores and Tensor Core-enabled AI.

NVIDIA is now accepting applications for its Omniverse lighthouse program.

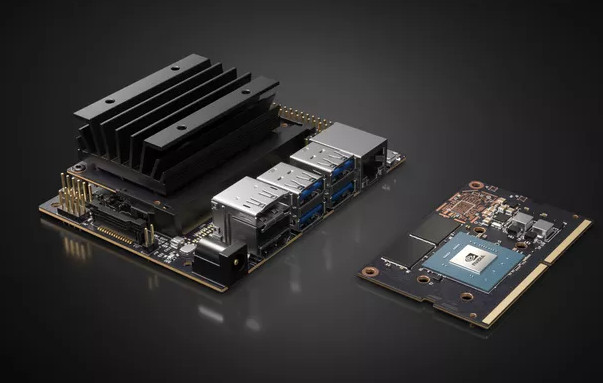

$99 AI Computer for Developers and Researchers

NVIDIA also announced the Jetson Nano, a $99 AI computer that can handle tasks like object recognition and autonomous navigation without relying on cloud processing power.

Previous Jetson boards have powered a range of devices, from shelf-scanning robots made for Lowe’s to Skydio’s autonomous drones. The Nano developer kit, however, targets “embedded designers, researchers, and DIY makers” at $99, while production-ready modules are available to commercial companies for $129 (with a minimum purchase of 1,000 modules).

The company also unveiled JetBot, an open-source $250 autonomous robotics kit. It includes a Jetson Nano along with a robot chassis, battery pack and motors, allowing users to build their own self-driving robot.

The $99 developer kit offers 472 gigaflops of compute, powered by a quad-core ARM A57 processor, a 128-core NVIDIA Maxwell GPU and 4GB of LPDDR RAM. The Nano also supports a range of popular AI frameworks, including TensorFlow, PyTorch, Caffe, Keras and MXNet. Available ports and interfaces include USB-A and B, gigabit Ethernet and support for microSD storage.

NVIDIA says it hopes the Nano’s price point will open up AI hardware development to new users.

NVIDIA and Toyota Research Institute Partner on Autonomous Transportation

Toyota Research Institute-Advanced Development (TRI-AD) and NVIDIA announced a new collaboration to develop, train and validate self-driving vehicles.

The partnership builds on an ongoing relationship with Toyota to utilize the NVIDIA DRIVE AGX Xavier AV computer and is based on close collaboration between teams from NVIDIA, TRI-AD in Japan and Toyota Research Institute (TRI) in the United States. The partnership includes advancements in:

- AI computing infrastructure using NVIDIA GPUs

- Simulation using the NVIDIA DRIVE Constellation platform

- In-car AV computers based on DRIVE AGX Xavier or DRIVE AGX Pegasus

The agreement includes the development of an architecture that can be scaled across many vehicle models and types, accelerating the development and production timeline, and simulating the equivalent of billions of miles of driving in challenging scenarios.

Simulation has proven to be a valuable tool for testing and validating AV hardware and software before it is put on the road. As part of the collaboration, TRI-AD and TRI are utilizing the NVIDIA DRIVE Constellation platform for components of their simulation workflow.

DRIVE Constellation is a data center solution, comprising two side-by-side servers. The first server — Constellation Simulator — uses NVIDIA GPUs running DRIVE Sim software to generate the sensor output from a virtual car driving in a realistic virtual world. The second server — Constellation Vehicle — contains the DRIVE AGX car computer, which processes the simulated sensor data. The driving decisions from Constellation Vehicle are fed back into Constellation Simulator, aiming to realize bit-accurate, timing-accurate hardware-in-the-loop testing.
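The closed loop described above (simulator renders sensor data, car computer decides, decision feeds back into the simulation) can be sketched in a few lines. This is a hypothetical single-process toy: one range reading stands in for camera, radar and lidar output, a fixed-deceleration braking rule stands in for the DRIVE AGX software, and the real system's separate hardware and timing accuracy are not modelled. All names and constants are assumptions.

```python
from dataclasses import dataclass


@dataclass
class WorldState:
    position_m: float   # car position along a straight road
    speed_mps: float    # car speed
    obstacle_m: float   # position of a stopped obstacle ahead


def simulate_sensors(state: WorldState) -> float:
    """Simulator side: produce the sensor output, here a single range reading to the obstacle."""
    return state.obstacle_m - state.position_m


def vehicle_computer(range_m: float, speed_mps: float) -> float:
    """Vehicle side: choose an acceleration (m/s^2) using only the sensor reading and speed."""
    braking_distance = speed_mps ** 2 / (2 * 6.0)  # assumes a 6 m/s^2 braking capability
    return -6.0 if range_m <= braking_distance + 5.0 else 0.0  # keep a 5 m margin


def run_closed_loop(state: WorldState, dt: float = 0.05, steps: int = 400) -> WorldState:
    """Feed each driving decision back into the simulated world, step by step."""
    for _ in range(steps):
        reading = simulate_sensors(state)                         # simulator -> vehicle
        accel = vehicle_computer(reading, state.speed_mps)        # vehicle decides
        state.speed_mps = max(0.0, state.speed_mps + accel * dt)  # decision -> simulator
        state.position_m += state.speed_mps * dt
    return state


final = run_closed_loop(WorldState(position_m=0.0, speed_mps=20.0, obstacle_m=100.0))
print(f"stopped at {final.position_m:.1f} m, speed {final.speed_mps:.1f} m/s")
```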

DRIVE AP2X Level 2+ Autonomous Vehicle Platform

NVIDIA announced NVIDIA DRIVE AP2X — a complete Level 2+ automated driving solution encompassing DRIVE AutoPilot software, DRIVE AGX and DRIVE validation tools.

DRIVE AP2X incorporates DRIVE AV autonomous driving software and DRIVE IX intelligent cockpit experience. Each runs on the NVIDIA Xavier system-on-a-chip (SoC) utilizing DriveWorks acceleration libraries and DRIVE OS, a real-time operating system.

DRIVE AP2X Software 9.0, which will be released next quarter, adds a wide range of new autonomous driving capabilities. It includes more deep neural networks, facial recognition capabilities and additional sensor integration options.

To enhance mapping and localization, DRIVE AP2X software will include MapNet, a DNN that identifies lanes and landmarks. For comfortable lane-keeping, a suite of three distinct path planning DNNs will provide greater accuracy and safety.

ClearSightNet allows the vehicle to detect camera blindness, as when the sun shines directly into the sensor, or when mud or snow limits its vision. This allows the car to take action to make up for any sensor obstructions.
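ClearSightNet itself is a deep neural network, and the article gives no details of how it works. As a much simpler, hand-tuned stand-in, the sketch below flags a grayscale frame as likely blinded when most pixels are clipped (glare or a covered lens) or the image has almost no texture (mud, snow, fog). The function name and every threshold are hypothetical assumptions.

```python
from statistics import pstdev


def looks_blinded(frame, saturated=250, dark=5, max_flat_std=3.0, max_bad_fraction=0.6):
    """Heuristic blindness check for a grayscale frame given as rows of 0-255 ints."""
    pixels = [p for row in frame for p in row]
    clipped = sum(1 for p in pixels if p >= saturated or p <= dark) / len(pixels)
    return clipped > max_bad_fraction or pstdev(pixels) < max_flat_std


glare = [[255] * 8 for _ in range(8)]                                        # sun in the lens
mud = [[90] * 8 for _ in range(8)]                                           # uniform smear
scene = [[(x * 37 + y * 11) % 200 + 20 for x in range(8)] for y in range(8)]  # textured view
print(looks_blinded(glare), looks_blinded(mud), looks_blinded(scene))
```

A learned model can recognise partial and subtle obstructions that fixed thresholds like these would miss; the heuristic only shows what kind of signal such a check looks for.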

For driver monitoring, a new DNN enables facial recognition. Ecosystem partners and manufacturers can enable face identification to open or start the car as well as make seating and other cabin adjustments.

DRIVE AP2X software will also feature new visualization capabilities. To build trust in the autonomous driving capabilities, a confidence view provides vehicle occupants a visualization of the car’s surround camera perception, current speed, speed limit and driver monitoring, all on one screen.

NVIDIA also detailed the DRIVE Planning and Control software layer as part of its DRIVE AV software suite.

This layer consists of a route planner, a lane planner and a behavior planner that work together to enable a comfortable driving experience. A primary component of the DRIVE Planning and Control software is NVIDIA Safety Force Field (SFF).

This driving policy analyzes and predicts the dynamics of the surrounding environment. It takes in sensor data and determines a set of actions to protect the vehicle and other road users.
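The article does not give Safety Force Field's formulation. To illustrate the general "predict, then pick a protective action" idea, here is a deliberately simpler stand-in: a constant-velocity time-to-collision check over surrounding actors. The names, thresholds and action labels are hypothetical, and this is not NVIDIA's SFF.

```python
from dataclasses import dataclass


@dataclass
class Actor:
    gap_m: float              # distance ahead of our vehicle along the lane
    closing_speed_mps: float  # positive when the gap is shrinking


def time_to_collision(actor: Actor) -> float:
    """Constant-velocity prediction of when the gap reaches zero (inf if not closing)."""
    if actor.closing_speed_mps <= 0:
        return float("inf")
    return actor.gap_m / actor.closing_speed_mps


def choose_action(actors, brake_below_s=2.0, caution_below_s=4.0) -> str:
    """Pick the most conservative action demanded by any surrounding actor."""
    worst = min((time_to_collision(a) for a in actors), default=float("inf"))
    if worst < brake_below_s:
        return "brake"
    if worst < caution_below_s:
        return "ease_off"
    return "proceed"


print(choose_action([Actor(50.0, 5.0), Actor(12.0, 8.0)]))  # TTCs of 10 s and 1.5 s -> brake
```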