New NVIDIA EGX Edge Supercomputing Platform Accelerates AI, IoT, 5G at the Edge

NVIDIA on Monday announced the NVIDIA EGX Edge Supercomputing Platform – a high-performance, cloud-native platform optimized to take advantage of AI, IoT and 5G and offer companies the ability to build next-generation services.

Early adopters of the platform – which combines NVIDIA CUDA-X software with NVIDIA-certified GPU servers and devices – include Walmart, BMW, Procter & Gamble, Samsung Electronics and NTT East, as well as the cities of San Francisco and Las Vegas.

“We’ve entered a new era, where billions of always-on IoT sensors will be connected by 5G and processed by AI,” said Jensen Huang, NVIDIA founder and CEO, at a keynote at the start of MWC Los Angeles. “Its foundation requires a new class of highly secure, networked computers operated with ease from far away.

“We’ve created the NVIDIA EGX Edge Supercomputing Platform for this world, where computing moves beyond personal and beyond the cloud to operate at planetary scale,” he said.

Enterprises deploying AI workloads at scale are using a combination of on-premises data centers and the cloud, bringing the AI models to where the data is being collected. Deploying these workloads at the edge, say in a retail store or parking garage, can be very challenging without the on-site IT expertise typically available in data centers.

Kubernetes eliminates many of the manual processes involved in deploying, managing and scaling applications. It provides a consistent, cloud-native deployment approach across on-prem, the edge and the cloud.

However, setting up Kubernetes clusters to manage hundreds or even thousands of applications across remote locations can be cumbersome, especially when human expertise isn’t readily available at every edge locale. NVIDIA is addressing these challenges through the NVIDIA EGX Edge Supercomputing Platform.

NVIDIA EGX is a cloud-native, software-defined platform designed to make large-scale hybrid-cloud and edge operations possible and efficient.

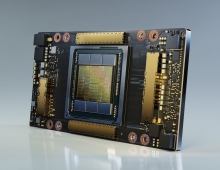

Within the platform is the EGX stack, which includes an NVIDIA driver, Kubernetes plug-in, NVIDIA container runtime and GPU monitoring tools, delivered through the NVIDIA GPU Operator. Operators codify operational knowledge and workflows to automate lifecycle management of containerized applications with Kubernetes.

The GPU Operator is a cloud-native method, deployed through a Helm chart, to standardize and automate the deployment of all necessary components for provisioning GPU-enabled Kubernetes systems. NVIDIA, Red Hat and others in the cloud-native community have collaborated on creating the GPU Operator.

The GPU Operator also allows IT teams to manage remote GPU-powered servers the same way they manage CPU-based systems. This makes it easy to bring up a fleet of remote systems with a single image and run edge AI applications without additional technical expertise on the ground.
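As a rough illustration of what running an edge AI application on a GPU-enabled Kubernetes cluster can look like, the sketch below builds a Pod manifest that requests one GPU. It assumes the cluster advertises GPUs under the `nvidia.com/gpu` extended resource name, which is how the NVIDIA Kubernetes device plug-in exposes them. The pod name and container image are hypothetical placeholders, not part of the announcement.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Build a Kubernetes Pod manifest that requests NVIDIA GPUs.

    Assumes the cluster exposes GPUs as the `nvidia.com/gpu` resource.
    """
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "OnFailure",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested under limits, like other extended resources.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical pod name and image, used only for illustration.
manifest = gpu_pod_manifest("edge-inference", "example.com/edge-inference:latest")
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`, since Kubernetes accepts JSON manifests as well as YAML. The manifest looks the same as one for a CPU-only workload apart from the GPU resource limit.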

The EGX stack architecture is supported by NVIDIA's hybrid-cloud management partners, such as Canonical, Cisco, Microsoft, Nutanix, Red Hat and VMware, to further simplify deployments and provide a consistent experience from cloud and data center to the edge.

The EGX platform features software to support a wide range of applications, including NVIDIA Metropolis, which can be used to build smart cities and intelligent video analytics applications, as well as the new NVIDIA Aerial software developer kit. Aerial allows telcos to build completely virtualized 5G radio access networks that are programmable, scalable and energy efficient, and can enable them to offer new services such as smart factories, AR/VR and cloud gaming.

Aerial provides two SDKs — CUDA Virtual Network Function (cuVNF) and CUDA Baseband (cuBB) — to simplify building scalable and programmable, software-defined 5G RAN networks using off-the-shelf servers with NVIDIA GPUs.

- The NVIDIA cuVNF SDK provides optimized input/output and packet processing, sending 5G packets directly to GPU memory from GPUDirect-capable network interface cards.

- The NVIDIA cuBB SDK provides a GPU-accelerated 5G signal processing pipeline, including cuPHY for L1 5G Phy, delivering high throughput and efficiency by keeping all physical layer processing within the GPU’s high-performance memory.

The NVIDIA Aerial SDK runs on the NVIDIA EGX stack, bringing GPU acceleration to carrier-grade Kubernetes infrastructure.

The NVIDIA EGX stack includes an NVIDIA driver, NVIDIA Kubernetes plug-in, NVIDIA Container runtime plug-in and NVIDIA GPU monitoring software.

To simplify the management of GPU-enabled servers, telcos can install all required NVIDIA software as containers that run on Kubernetes — open-source software widely used to speed the deployment and management of software of all kinds.

As also announced today, early ecosystem partners collaborating with NVIDIA include Microsoft, Ericsson and Red Hat.

Walmart is deploying EGX in its Levittown, New York, Intelligent Retail Lab. Using EGX’s advanced AI and edge capabilities, Walmart is able to process in real time more than 1.6 terabytes of data generated each second and can use AI to automatically alert associates to restock shelves, open up new checkout lanes, retrieve shopping carts and ensure product freshness in meat and produce departments.

Samsung Electronics, in another early EGX deployment, is using AI at the edge for highly complex semiconductor design and manufacturing processes.

Other early EGX deployments include BMW, NTT East and Procter & Gamble.

To accelerate the move to edge computing, NVIDIA has expanded its server-certification program to include a new designation, NGC-Ready for Edge, identifying systems powered by NVIDIA T4, Quadro RTX 8000 and V100 Tensor Core GPUs capable of running the most demanding AI workloads at the edge. Dell Technologies, Hewlett Packard Enterprise, Lenovo, QCT and Supermicro are among the first to work with NVIDIA to certify their systems, now totaling more than 20 validated servers from more than a dozen manufacturers worldwide.

The EGX software stack architecture is supported by Canonical, Cisco, Nutanix, Red Hat and VMware.