SK hynix Showcases Strength of Its Next-Gen Solutions at COMPUTEX Taipei 2024

SK hynix presented its leading AI memory solutions at COMPUTEX Taipei 2024 from June 4–7. As one of Asia’s premier IT shows, COMPUTEX Taipei 2024 welcomed around 1,500 global participants including tech companies, venture capitalists, and accelerators under the theme “Connecting AI”. Making its debut at the event, SK hynix underlined its position as a first mover and leading AI memory provider through its lineup of next-generation products.

“Connecting AI” With the Industry’s Finest AI Memory Solutions

Themed “Memory, The Power of AI,” SK hynix’s booth featured its advanced AI server solutions, groundbreaking technologies for on-device AI PCs, and outstanding consumer SSD products.

HBM3E, the fifth generation of HBM¹, was among the AI server solutions on display. Offering industry-leading data processing speeds of 1.18 terabytes (TB) per second, vast capacity, and advanced heat dissipation capability, HBM3E is optimized to meet the requirements of AI servers and other applications.

¹ High Bandwidth Memory (HBM): A high-value, high-performance product that possesses much higher data processing speeds compared to existing DRAMs by vertically connecting multiple DRAMs with through-silicon via (TSV).

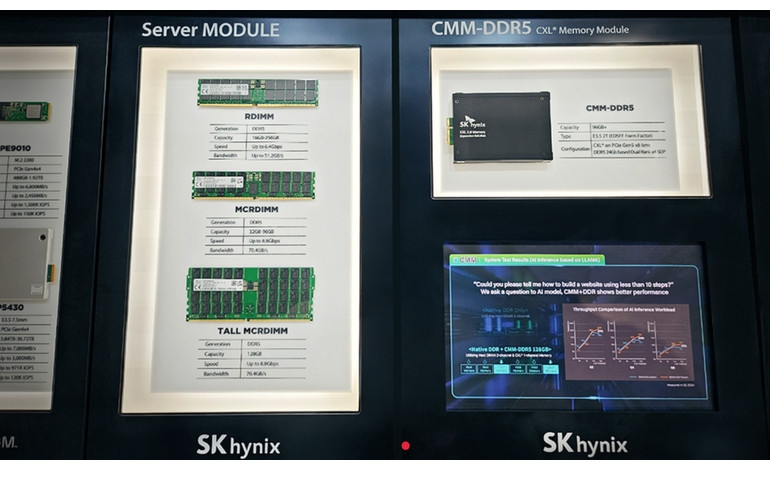

Another technology that has become crucial for AI servers is CXL®², as it can increase system bandwidth and processing capacity. SK hynix highlighted the strength of its CXL portfolio by presenting its CXL Memory Module-DDR5³ (CMM-DDR5), which significantly expands system bandwidth and capacity compared to systems only equipped with DDR5. Other AI server solutions on display included the server DRAM products DDR5 RDIMM⁴ and MCR DIMM⁵. In particular, SK hynix showcased its tall 128-gigabyte (GB) MCR DIMM for the first time at an exhibition.

² Compute Express Link® (CXL®): A PCIe-based next-generation interconnect protocol on which high-performance computing systems are based.

³ Double Data Rate 5 (DDR5): A server DRAM that effectively handles the increasing demands of larger and more complex data workloads by offering enhanced bandwidth and power efficiency compared to the previous generation, DDR4.

⁴ Registered Dual In-line Memory Module (RDIMM): A high-density memory module used in servers and other applications to vertically connect DRAM dies.

⁵ Multiplexer Combined Ranks Dual In-line Memory Module (MCR DIMM): A module product with multiple DRAMs bonded to a motherboard in which two ranks (basic information processing units) operate simultaneously, resulting in improved speed.

Visitors to the booth could also see some of the company’s enterprise SSDs (eSSD), including the PS1010 and PE9010. In particular, the PCIe⁶ Gen5-based PS1010 is ideal for AI, big data, and machine learning applications due to its rapid sequential read speed. Additionally, SK hynix’s U.S. subsidiary Solidigm strengthened the eSSD lineup by presenting its flagship products D5-P5430 and D5-P5336 that have ultrahigh capacities of up to 30.72 TB and 61.44 TB, respectively.

⁶ Peripheral Component Interconnect Express (PCIe): A high-speed input/output interface with a serialization format used on the motherboard of digital devices.

In line with the growing on-device AI⁷ trend, SK hynix showcased its groundbreaking memory solutions for on-device AI PCs. These included PCB01, a PCIe Gen5 client SSD for on-device AI which offers the industry’s highest sequential read speed of 14 gigabytes per second (GB/s) and a sequential write speed of 12 GB/s. Alongside PCB01, SK hynix displayed other products optimized for on-device AI PCs, including the next-generation graphic DRAM GDDR7 and LPCAMM2⁸, a module solution that has the performance effect of replacing two existing DDR5 SODIMMs⁹.

⁷ On-device AI: The performance of AI computation and inference directly within devices such as smartphones or PCs, unlike cloud-based AI services that require a remote cloud server.

⁸ Low Power Compression Attached Memory Module 2 (LPCAMM2): An LPDDR5X-based module solution that offers power efficiency and high performance as well as space savings.

⁹ Small Outline Dual In-line Memory Module (SODIMM): A memory module smaller than the regular DIMM used in desktop PCs, typically found in laptops and other compact systems.

Attendees could also get a glimpse of SK hynix’s consumer SSD products. Platinum P51 and Platinum P41, highly reliable consumer SSD solutions which offer excellent speed to boost PC performance, were displayed at the show. Set to be mass-produced later in 2024, Platinum P51 utilizes SK hynix’s “Aries,” the industry’s first high-performance in-house controller. Platinum P51 offers sequential read speeds of up to 14 GB/s and sequential write speeds of up to 12 GB/s, nearly doubling the speed specifications of the previous generation Platinum P41. This enables the loading of LLMs¹⁰ required for AI training and inference in less than one second.

¹⁰ Large language model (LLM): As language models trained on vast amounts of data, LLMs are essential for performing generative AI tasks as they create, summarize, and translate texts.
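
As a rough, back-of-the-envelope illustration of the load-time claim, the sketch below divides a model's size by a sustained sequential read speed. The function name `load_time_seconds` and the example model sizes are hypothetical and are not SK hynix figures. Real load times also depend on the file system, the host interface, and software overhead.

```python
def load_time_seconds(model_size_gb: float, read_speed_gb_per_s: float) -> float:
    """Idealized time to read a model from storage at a sustained sequential rate."""
    if read_speed_gb_per_s <= 0:
        raise ValueError("read speed must be positive")
    return model_size_gb / read_speed_gb_per_s


# Peak sequential read speed quoted in the article for Platinum P51.
P51_READ_GB_PER_S = 14.0

# Hypothetical model sizes, chosen only for illustration.
for size_gb in (4.0, 7.0, 13.0):
    seconds = load_time_seconds(size_gb, P51_READ_GB_PER_S)
    print(f"{size_gb:5.1f} GB model: {seconds:.2f} s")
```

Under these idealized assumptions, a model of up to roughly 14 GB could be read in under one second at the quoted peak speed.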

An updated version of the portable SSD Beetle X31 was also unveiled at the event. This compact and stylish SSD uses a USB 3.2 Gen 2 interface to reach a rapid operation speed of 10 gigabits per second (Gbps). In addition to the existing 512 GB and 1 TB versions, SK hynix plans to launch the higher-capacity 2 TB version in the third quarter of 2024.
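
Because the interface speed here is quoted in gigabits per second while the SSD speeds elsewhere in this article are in gigabytes per second, a small conversion sketch may help compare them. The helper names below are hypothetical, and the calculation ignores encoding and protocol overhead, so real-world transfers would be somewhat slower.

```python
BITS_PER_BYTE = 8


def gbps_to_gb_per_s(link_gbps: float) -> float:
    """Convert a link rate in gigabits per second to gigabytes per second."""
    return link_gbps / BITS_PER_BYTE


def transfer_time_seconds(capacity_gb: float, link_gbps: float) -> float:
    """Idealized time to move capacity_gb of data over a link, ignoring overhead."""
    return capacity_gb / gbps_to_gb_per_s(link_gbps)


# Interface rate quoted in the article for Beetle X31 (USB 3.2 Gen 2).
USB_LINK_GBPS = 10.0

print(f"{USB_LINK_GBPS} Gbps is at most {gbps_to_gb_per_s(USB_LINK_GBPS)} GB/s")
print(f"Filling 1000 GB would take at least {transfer_time_seconds(1000, USB_LINK_GBPS)} s")
```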

Earlier in May, the outstanding design of SK hynix’s SSD lineup was highlighted when its stick-type SSD Tube T31 and heat sink for Platinum P41, Haechi H02, both won a prestigious 2024 Red Dot Design Award.

Lastly, SK hynix’s ESG strategy was highlighted at the show, including its efforts to enhance the energy efficiency of its products. The company’s range of energy-efficient solutions is particularly suited to improving the sustainability of AI applications, which require significant amounts of power.

Continuing to Push the Boundaries of AI Memory

In line with the theme of COMPUTEX Taipei 2024, SK hynix continues to advance its technologies to help realize a future where “Connecting AI” becomes an everyday reality. The company will continue to participate in global conferences that present the industry’s latest trends to push its AI memory capabilities to greater heights.