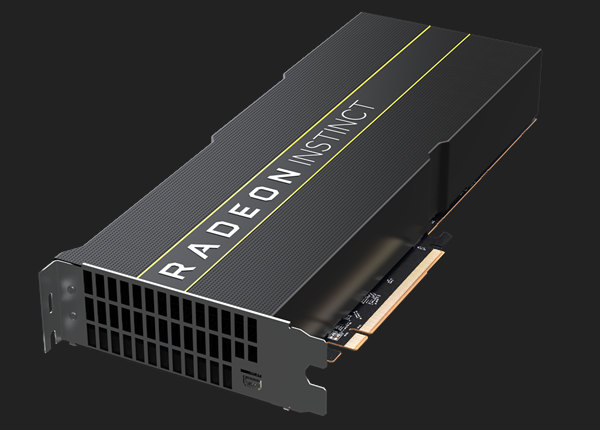

AMD Unveils First 7nm Radeon Instinct MI60 and MI50 Accelerators For Artificial Intelligence, Data centers

AMD today announced the AMD Radeon Instinct MI60 and MI50 accelerators, the first 7nm datacenter GPUs, designed to deliver the compute performance required for deep learning, HPC, cloud computing and rendering applications.

Researchers, scientists and developers will use AMD Radeon Instinct accelerators to solve challenges, including large-scale simulations, climate change, computational biology, disease prevention and more.

"Legacy GPU architectures limit IT managers from effectively addressing the constantly evolving demands of processing and analyzing huge datasets for modern cloud datacenter workloads," said David Wang, senior vice president of engineering, Radeon Technologies Group at AMD. "Combining world-class performance and a flexible architecture with a robust software platform and the industry’s leading-edge ROCm open software ecosystem, the new AMD Radeon Instinct™ accelerators provide the critical components needed to solve the most difficult cloud computing challenges today and into the future."

The AMD Radeon Instinct MI60 and MI50 accelerators feature flexible mixed-precision capabilities, powered by high-performance compute units that expand the types of workloads these accelerators can address, including a range of HPC and deep learning applications. The accelerators were designed to process workloads such as rapidly training complex neural networks, delivering higher levels of floating-point performance, greater efficiencies and new features for datacenter and departmental deployments.

The accelerators provide flexible mixed-precision FP16, FP32 and INT4/INT8 capabilities. The AMD Radeon Instinct MI60 is the world’s fastest double-precision PCIe 4.0-capable accelerator, delivering up to 7.4 TFLOPS of peak FP64 performance. The Radeon Instinct MI50 delivers up to 6.7 TFLOPS of peak FP64 performance.

Two Infinity Fabric Links per GPU deliver up to 200 GB/s of peer-to-peer bandwidth – up to 6X faster than PCIe 3.0 alone – and enable the connection of up to 4 GPUs in a hive ring configuration (2 hives in 8 GPU servers).
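The 200 GB/s and 6X figures can be sanity-checked with quick arithmetic. The sketch below is one plausible reading of the comparison, not AMD's published derivation: it takes the 100 GB/s peak per link from the specifications table, and it assumes the PCIe 3.0 baseline is an x16 slot at 8 GT/s per lane with 128b/130b encoding, counted in both directions. Those PCIe parameters and all function names are assumptions introduced here for illustration.

```python
# Rough check of the Infinity Fabric vs. PCIe 3.0 comparison.
# Assumption: the PCIe values are standard PCIe 3.0 x16 parameters,
# not numbers quoted in the announcement.

LINKS_PER_GPU = 2
PEAK_LINK_BANDWIDTH_GBPS = 100.0  # GB/s per Infinity Fabric Link (spec table)

PCIE3_GT_PER_LANE = 8.0     # gigatransfers per second per lane
PCIE3_ENCODING = 128 / 130  # 128b/130b line-code efficiency
PCIE3_LANES = 16


def infinity_fabric_bandwidth() -> float:
    """Aggregate peer-to-peer bandwidth per GPU in GB/s."""
    return LINKS_PER_GPU * PEAK_LINK_BANDWIDTH_GBPS


def pcie3_x16_bidirectional() -> float:
    """Peak PCIe 3.0 x16 bandwidth in GB/s, summed over both directions."""
    per_direction = PCIE3_GT_PER_LANE * PCIE3_ENCODING * PCIE3_LANES / 8  # bits -> bytes
    return 2 * per_direction


if __name__ == "__main__":
    fabric = infinity_fabric_bandwidth()
    pcie = pcie3_x16_bidirectional()
    print(f"Infinity Fabric: {fabric:.0f} GB/s")
    print(f"PCIe 3.0 x16:    {pcie:.1f} GB/s")
    print(f"Ratio:           {fabric / pcie:.1f}x")
```

Under these assumptions the ratio comes out slightly above six, which is in line with the "up to 6X" claim.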

The AMD Radeon Instinct MI60 provides 32GB of HBM2 error-correcting code (ECC) memory, and the Radeon Instinct MI50 provides 16GB of HBM2 ECC memory. Both GPUs provide full-chip ECC and Reliability, Availability and Serviceability (RAS) technologies.

Before optimizations, the chip generally delivers within 7% of the performance of NVIDIA's Volta with less than half the die area (331 mm² compared to 800+ mm²). Specifically, AMD said Vega will deliver 29.5 TFLOPS of FP16 performance for AI training. In inference jobs, it can hit 59 TOPS for 8-bit integer tasks and 118 TOPS for 4-bit integer tasks.

In addition, AMD added hardware virtualization to the chip. One 7nm Vega GPU can support up to 16 virtual machines, or a single virtual machine can split its work across more than eight GPUs.

AMD MxGPU Technology is a hardware-based GPU virtualization solution built on SR-IOV (Single Root I/O Virtualization). It makes hardware-level attacks more difficult, helping to secure virtualized cloud deployments.

The AMD Radeon Instinct MI60 accelerator is expected to ship to datacenter customers by the end of 2018. The AMD Radeon Instinct MI50 accelerator is expected to begin shipping to datacenter customers by the end of Q1 2019.

AMD also announced a new version of the ROCm open software platform for accelerated computing that supports the architectural features of the new accelerators, including optimized deep learning operations (DLOPS) and the AMD Infinity Fabric Link GPU interconnect technology. Designed for scale, ROCm allows AMD's customers to deploy high-performance, energy-efficient heterogeneous computing systems in an open environment.

In addition to support for the new Radeon Instinct accelerators, ROCm software version 2.0 provides updated math libraries for the new DLOPS; support for 64-bit Linux operating systems including CentOS, RHEL and Ubuntu; optimizations of existing components; and support for the latest versions of the most popular deep learning frameworks, including TensorFlow 1.11, PyTorch (Caffe2) and others.

The ROCm 2.0 open software platform is expected to be available by the end of 2018.

EPYC Processors Now Available on Amazon Web Services

Also today, at its Next Horizon event, AMD and Amazon Web Services announced the immediate availability of the first AMD EPYC processor-based instances on Amazon Elastic Compute Cloud (EC2).

The new AMD EPYC processor-powered offerings belong to the most popular AWS instance families and feature high core density and memory bandwidth. The companies claim that the core density of AMD EPYC processors drives cost savings, offering M5 and T3 instance customers a balance of compute, memory, and networking resources. Target workloads include web and application servers, backend servers for enterprise applications, and test/development environments, with seamless application migration.

The new instances are available as variants of Amazon EC2’s memory-optimized and general-purpose instance families. AMD-based R5 and M5 instances can be launched via the AWS Management Console or the AWS Command Line Interface. They are available today in the US East (Ohio, N. Virginia), US West (Oregon), Europe (Ireland) and Asia Pacific regions, with availability in additional regions planned soon. AMD-based M5 and R5 instances come in six sizes with up to 96 vCPUs and up to 768 GB of memory. AMD-based T3 instances will be available in the coming weeks, in seven sizes with up to 8 vCPUs and 32 GB of memory.
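Besides the console and the CLI, the same launch can be scripted. The following is a minimal sketch using the boto3 SDK, which the announcement does not mention. It assumes the AMD-based M5 and R5 variants use the `m5a` and `r5a` family prefixes, and the AMI ID shown in the usage note is a hypothetical placeholder. The helper names `build_launch_request` and `launch` are illustrative and not part of any AWS API.

```python
# Sketch: launching an AMD-based EC2 instance with boto3.
# Assumptions: "m5a"/"r5a" family prefixes; helper names are hypothetical.

AMD_FAMILIES = {"m5a", "r5a"}


def build_launch_request(instance_type: str, image_id: str, count: int = 1) -> dict:
    """Build keyword arguments for the EC2 RunInstances call."""
    family = instance_type.split(".", 1)[0]
    if family not in AMD_FAMILIES:
        raise ValueError(f"{instance_type!r} is not an AMD-based M5/R5 type")
    return {
        "ImageId": image_id,
        "InstanceType": instance_type,
        "MinCount": count,
        "MaxCount": count,
    }


def launch(request: dict, region: str = "us-east-2"):
    """Send the request to EC2. Requires boto3 and configured AWS credentials."""
    import boto3  # imported lazily so the request builder works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.run_instances(**request)
```

A call such as `launch(build_launch_request("m5a.large", "ami-xxxxxxxx"))` would start one On-Demand instance in US East (Ohio). Replace the placeholder AMI ID with a real image for the target region.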

The new instances can be purchased as On-Demand, Reserved, or Spot instances.

Specifications

| | Instinct MI50 | Instinct MI60 |
|---|---|---|
| GPU Architecture | Vega20 | Vega20 |
| Lithography | TSMC 7nm FinFET | TSMC 7nm FinFET |
| Stream Processors | 3840 | 4096 |
| Compute Units | 60 | 64 |
| Peak Engine Clock | 1746 MHz | 1800 MHz |
| Peak Half Precision (FP16) Performance | 26.8 TFLOPS | 29.5 TFLOPS |
| Peak Single Precision (FP32) Performance | 13.4 TFLOPS | 14.7 TFLOPS |
| Peak Double Precision (FP64) Performance | 6.7 TFLOPS | 7.4 TFLOPS |
| Peak INT8 Performance | 53.6 TOPS | 58.9 TOPS |
| OS Support | Linux x86_64 | Linux x86_64 |
| Memory Size | 16 GB | 32 GB |
| Memory Type (GPU) | HBM2 | HBM2 |
| Memory Interface | 4096-bit | 4096-bit |
| Memory Clock | 1 GHz | 1 GHz |
| Memory Bandwidth | 1024 GB/s | 1024 GB/s |
| Memory ECC Support | Yes (Full-Chip) | Yes (Full-Chip) |
| Form Factor | PCIe Add-in Card | PCIe Add-in Card |
| Bus Type | PCIe 4.0 x16, PCIe 3.0 x16 | PCIe 4.0 x16, PCIe 3.0 x16 |
| Infinity Fabric Links | 2 | 2 |
| Peak Infinity Fabric Link Bandwidth | 100 GB/s | 100 GB/s |
| TDP | 300W | 300W |
| Cooling | Passive | Passive |
| Board Width | Double Slot | Double Slot |
| Board Length | 10.5" (267 mm) | 10.5" (267 mm) |
| Board Height | Full Height | Full Height |
| External Power Connectors | 1x PCIe 6-pin, 1x PCIe 8-pin | 1x PCIe 6-pin, 1x PCIe 8-pin |
| RAS Support | Yes | Yes |
| Software API Support | OpenGL 4.6, OpenCL 2.0, Vulkan 1.0, ROCm (Radeon Open eCosystem) | OpenGL 4.6, OpenCL 2.0, Vulkan 1.0, ROCm (Radeon Open eCosystem) |
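The peak figures in the table follow from the stream processor counts, clocks and memory interface. As a rough sketch, the code below reproduces them under three assumptions that the announcement does not spell out: each stream processor retires one fused multiply-add (two operations) per clock at FP32; FP64 runs at half that rate while FP16, INT8 and INT4 run at 2x, 4x and 8x; and HBM2 transfers data twice per memory clock. The class and function names are illustrative.

```python
# Sketch: deriving the table's peak rates from its raw specs.
# Assumptions: 2 ops/clock per stream processor at FP32, fixed rate ratios
# for the other precisions, and double-data-rate HBM2.
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    stream_processors: int
    peak_clock_mhz: float

    def fp32_tflops(self) -> float:
        # stream processors x 2 ops (one FMA) x clock in Hz, scaled to tera
        return self.stream_processors * 2 * self.peak_clock_mhz * 1e6 / 1e12

    def peak_rates(self) -> dict:
        fp32 = self.fp32_tflops()
        return {
            "FP64": fp32 / 2,
            "FP32": fp32,
            "FP16": fp32 * 2,
            "INT8": fp32 * 4,
            "INT4": fp32 * 8,
        }


def hbm2_bandwidth_gbps(bus_width_bits: int, clock_ghz: float,
                        transfers_per_clock: int = 2) -> float:
    """Peak memory bandwidth in GB/s: bus width x transfer rate / 8 bits per byte."""
    return bus_width_bits * clock_ghz * transfers_per_clock / 8


MI50 = Accelerator("Instinct MI50", 3840, 1746)
MI60 = Accelerator("Instinct MI60", 4096, 1800)

if __name__ == "__main__":
    for gpu in (MI50, MI60):
        rates = ", ".join(f"{k} {v:.1f}" for k, v in gpu.peak_rates().items())
        print(f"{gpu.name}: {rates}")
    print(f"Memory bandwidth: {hbm2_bandwidth_gbps(4096, 1.0):.0f} GB/s")
```

The computed values agree with the table to within rounding, which suggests the published numbers are theoretical peaks rather than measured throughput.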